Setting Up Hadoop HA

2017-12-29 10:51


Building a Hadoop HA (High Availability) Cluster (2.7.2)

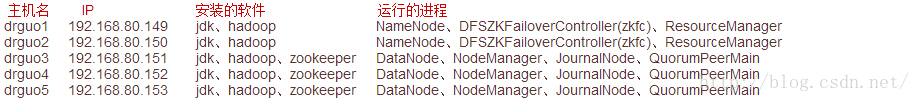

1.集群规划:主机名 IP 安装的软件

运行的进程

drguo1 192.168.80.149 jdk、hadoop

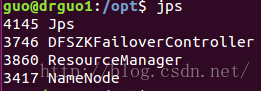

NameNode、DFSZKFailoverController(zkfc)、ResourceManager

drguo2 192.168.80.150 jdk、hadoop

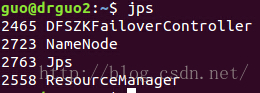

NameNode、DFSZKFailoverController(zkfc)、ResourceManager

drguo3 192.168.80.151 jdk、hadoop、zookeeper

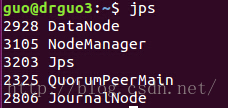

DataNode、NodeManager、JournalNode、QuorumPeerMain

drguo4 192.168.80.152 jdk、hadoop、zookeeper

DataNode、NodeManager、JournalNode、QuorumPeerMain

drguo5 192.168.80.153 jdk、hadoop、zookeeper

DataNode、NodeManager、JournalNode、QuorumPeerMain


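2. Preparation:

Prepare five machines: on each one configure a static IP, the hostname and the hostname-to-IP mappings, disable the firewall, install the JDK and set its environment variables (if you don't know how, see http://blog.csdn.net/dr_guo/article/details/50886667), create the user and user group, and set up passwordless SSH login (if you get errors, see http://blog.csdn.net/dr_guo/article/details/50967442).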
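The hostname-to-IP mappings can also be written from a script instead of by hand. Below is a minimal sketch that appends the five mappings from the cluster plan to a hosts file. The function name `append_cluster_hosts` is hypothetical, not something from the original setup. It checks for the exact IP/hostname pair, so Ubuntu's default `127.0.1.1 drguo1` line does not stop the real `192.168.80.149 drguo1` entry from being added.

```shell
# Hostname-to-IP mappings from the cluster plan in step 1.
CLUSTER_HOSTS="192.168.80.149 drguo1
192.168.80.150 drguo2
192.168.80.151 drguo3
192.168.80.152 drguo4
192.168.80.153 drguo5"

# append_cluster_hosts FILE  (hypothetical helper)
# Appends "IP<TAB>hostname" for each node unless that exact IP/hostname pair
# is already in FILE, so running it twice does not create duplicates.
append_cluster_hosts() {
  hosts_file=$1
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    if ! awk -v ip="$ip" -v n="$name" \
        '$1 == ip && $2 == n { found = 1 } END { exit !found }' \
        "$hosts_file" 2>/dev/null; then
      printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

On each machine, run it from a root shell as `append_cluster_hosts /etc/hosts`.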

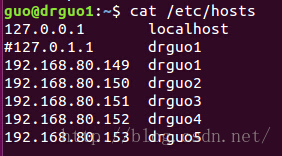

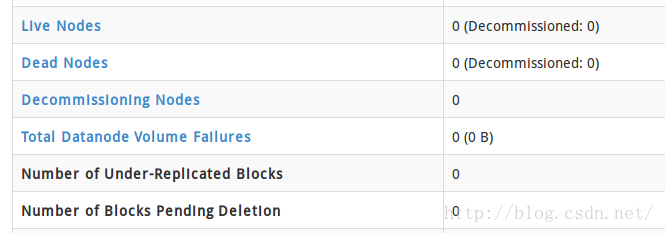

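Note: comment out the 127.0.1.1 line; otherwise jps shows a DataNode running, but the web UI reports 0 live nodes.

After commenting it out everything worked. Apparently it works for some people without commenting it out, and I don't know why 0.0 (A likely factor: Ubuntu maps the machine's own hostname to 127.0.1.1, so a daemon that resolves its own hostname may bind to or advertise that loopback address, which the other nodes cannot reach.)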
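Commenting out that line can be done with sed. A minimal sketch, assuming the stock Ubuntu layout where the line starts with `127.0.1.1` followed by whitespace; the function name `comment_loopback_alias` is hypothetical:

```shell
# comment_loopback_alias FILE  (hypothetical helper)
# Prefixes the "127.0.1.1 ..." line with '#'; the original file is kept as FILE.bak.
# A line that is already commented no longer starts with 127, so reruns are harmless.
comment_loopback_alias() {
  sed -i.bak 's/^127\.0\.1\.1[[:space:]]/#&/' "$1"
}
```

For `/etc/hosts` itself, run it as root (for example `sudo sed -i.bak 's/^127\.0\.1\.1[[:space:]]/#&/' /etc/hosts`).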

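3. Set up the ZooKeeper cluster (drguo3/drguo4/drguo5)

See my post "Setting Up a Fully Distributed ZooKeeper Cluster".

4. Build the Hadoop HA cluster

Download the latest Hadoop from the official site (http://apache.opencas.org/hadoop/common/stable/); the latest version at the moment is 2.7.2. After the download finishes, move it to /opt/Hadoop (~/下载 below is the Downloads folder of a Chinese-locale Ubuntu):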

```
guo@guo:~/下载$ mv ./hadoop-2.7.2.tar.gz /opt/Hadoop/
mv: cannot create regular file '/opt/Hadoop/hadoop-2.7.2.tar.gz': Permission denied
guo@guo:~/下载$ su root
Password:
root@guo:/home/guo/下载# mv ./hadoop-2.7.2.tar.gz /opt/Hadoop/
```

Extract it:

```
guo@guo:/opt/Hadoop$ sudo tar -zxf hadoop-2.7.2.tar.gz
[sudo] password for guo:
```

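When I extracted the JDK I used tar -zxvf; the v just prints the extraction progress, so leave it out if you don't want to see it.

Change the owner (user:group) of the opt directory. I simply changed the owner and group of the whole /opt directory to guo; the details are in my post "Setting Up a Fully Distributed ZooKeeper Cluster".

```
root@guo:/opt/Hadoop# chown -R guo:guo /opt
```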

Set the environment variables:

```
guo@guo:/opt/Hadoop$ sudo gedit /etc/profile
```

Add the following at the end (with this, you can run the scripts in bin/ and sbin/ without cd-ing into those directories):

```
#hadoop
export HADOOP_HOME=/opt/Hadoop/hadoop-2.7.2
export PATH=$PATH:$HADOOP_HOME/sbin
export PATH=$PATH:$HADOOP_HOME/bin
```

Then reload the configuration:

```
guo@guo:/opt/Hadoop$ source /etc/profile
```
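If you would rather not open /etc/profile in gedit on every machine, the same lines can be appended from a script. A sketch, assuming the install path used in this post; `add_hadoop_env` is a hypothetical helper, and it leaves the file alone if an `export HADOOP_HOME=` line already exists:

```shell
# add_hadoop_env PROFILE [HADOOP_HOME]  (hypothetical helper)
# Appends the Hadoop exports to PROFILE unless an "export HADOOP_HOME=" line is already there.
add_hadoop_env() {
  profile=$1
  home=${2:-/opt/Hadoop/hadoop-2.7.2}
  if grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  # \$ keeps $PATH and $HADOOP_HOME literal in the file; $home is expanded now.
  cat >> "$profile" <<EOF
#hadoop
export HADOOP_HOME=$home
export PATH=\$PATH:\$HADOOP_HOME/sbin
export PATH=\$PATH:\$HADOOP_HOME/bin
EOF
}
```

Run it as root against `/etc/profile`, then `source /etc/profile` as before.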

Edit hadoop-env.sh in /opt/Hadoop/hadoop-2.7.2/etc/hadoop:

```
guo@guo:/opt/Hadoop$ cd hadoop-2.7.2
guo@guo:/opt/Hadoop/hadoop-2.7.2$ cd etc/hadoop/
guo@guo:/opt/Hadoop/hadoop-2.7.2/etc/hadoop$ sudo gedit ./hadoop-env.sh
```

In the file, change the JAVA_HOME line to the JDK path:

```
# Original line:
# export JAVA_HOME=${JAVA_HOME}
# Changed to the JDK path:
export JAVA_HOME=/opt/Java/jdk1.8.0_73
```

Then reload the file:

```
guo@guo:/opt/Hadoop/hadoop-2.7.2/etc/hadoop$ source ./hadoop-env.sh
```
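The same edit can be made non-interactively with sed. A likely reason the literal path is needed: start-dfs.sh launches daemons on the other nodes over ssh in a non-interactive shell, where JAVA_HOME from /etc/profile may not be set, so `${JAVA_HOME}` can expand to nothing. A sketch; `set_java_home` is a hypothetical helper:

```shell
# set_java_home FILE JDK_DIR  (hypothetical helper)
# Replaces the existing "export JAVA_HOME=..." line in FILE (hadoop-env.sh)
# with a literal path; the original is kept as FILE.bak.
set_java_home() {
  sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=$2|" "$1"
}
```

Example: `set_java_home /opt/Hadoop/hadoop-2.7.2/etc/hadoop/hadoop-env.sh /opt/Java/jdk1.8.0_73`.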

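The configuration so far is the same as for standalone mode, so I simply copied it.

Note: the comments are only there to explain things to you; delete them all when you copy and paste!!!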
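Deleting the comments by hand in several files on five machines is tedious. A sed-based sketch, assuming every comment fits on a single line, as it does in the configs in this post; `strip_xml_comments` is a hypothetical helper:

```shell
# strip_xml_comments FILE...  (hypothetical helper)
# Deletes lines that contain nothing but a single-line <!-- ... --> comment.
# Multi-line comments are NOT handled. Each original is kept as FILE.bak.
strip_xml_comments() {
  for f in "$@"; do
    sed -i.bak '/^[[:space:]]*<!--.*-->[[:space:]]*$/d' "$f"
  done
}
```

Example: `strip_xml_comments /opt/Hadoop/hadoop-2.7.2/etc/hadoop/*-site.xml`.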

Edit core-site.xml:

```xml
<configuration>
    <!-- Set the HDFS nameservice to ns1 -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://ns1/</value>
    </property>
    <!-- Hadoop temporary directory -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/Hadoop/hadoop-2.7.2/tmp</value>
    </property>
    <!-- ZooKeeper quorum addresses -->
    <property>
        <name>ha.zookeeper.quorum</name>
        <value>drguo3:2181,drguo4:2181,drguo5:2181</value>
    </property>
</configuration>
```
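To double-check a value after editing, a small awk helper can pull it out of a config file. This sketch assumes each `<name>` and `<value>` sits on its own line, as in this post; `get_prop` is hypothetical. Once Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` is the more reliable way to see what Hadoop actually reads.

```shell
# get_prop FILE NAME  (hypothetical helper)
# Prints the <value> of the <property> whose <name> is NAME.
# Assumes <name> and <value> are each on a single line; multi-line values
# (such as dfs.ha.fencing.methods) are not supported.
get_prop() {
  awk -v want="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n)
      sub(/<\/name>.*/, "", n)
      found = (n == want)
    }
    found && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v)
      sub(/<\/value>.*/, "", v)
      print v
      exit
    }
    /<\/property>/ { found = 0 }
  ' "$1"
}
```

Example: `get_prop $HADOOP_HOME/etc/hadoop/core-site.xml ha.zookeeper.quorum`.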

Edit hdfs-site.xml:

```xml
<configuration>
    <!-- Set the HDFS nameservice to ns1; must match core-site.xml -->
    <property>
        <name>dfs.nameservices</name>
        <value>ns1</value>
    </property>
    <!-- ns1 has two NameNodes, nn1 and nn2 -->
    <property>
        <name>dfs.ha.namenodes.ns1</name>
        <value>nn1,nn2</value>
    </property>
    <!-- RPC address of nn1 -->
    <property>
        <name>dfs.namenode.rpc-address.ns1.nn1</name>
        <value>drguo1:9000</value>
    </property>
    <!-- HTTP address of nn1 -->
    <property>
        <name>dfs.namenode.http-address.ns1.nn1</name>
        <value>drguo1:50070</value>
    </property>
    <!-- RPC address of nn2 -->
    <property>
        <name>dfs.namenode.rpc-address.ns1.nn2</name>
        <value>drguo2:9000</value>
    </property>
    <!-- HTTP address of nn2 -->
    <property>
        <name>dfs.namenode.http-address.ns1.nn2</name>
        <value>drguo2:50070</value>
    </property>
    <!-- Where the NameNode metadata (edit log) is stored on the JournalNodes -->
    <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://drguo3:8485;drguo4:8485;drguo5:8485/ns1</value>
    </property>
    <!-- Local directory where each JournalNode stores its data -->
    <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/opt/Hadoop/hadoop-2.7.2/journaldata</value>
    </property>
    <!-- Enable automatic NameNode failover -->
    <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
    </property>
    <!-- Failover proxy provider that clients use to find the active NameNode -->
    <property>
        <name>dfs.client.failover.proxy.provider.ns1</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
    </property>
    <!-- Fencing methods; separate multiple methods with newlines, one method per line -->
    <property>
        <name>dfs.ha.fencing.methods</name>
        <value>
            sshfence
            shell(/bin/true)
        </value>
    </property>
    <!-- sshfence requires passwordless SSH -->
    <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/home/guo/.ssh/id_rsa</value>
    </property>
    <!-- sshfence connection timeout (ms) -->
    <property>
        <name>dfs.ha.fencing.ssh.connect-timeout</name>
        <value>30000</value>
    </property>
</configuration>
```
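The `dfs.namenode.shared.edits.dir` value follows the pattern `qjournal://host1:port;host2:port;.../journalId`, with the JournalNode hosts separated by semicolons. A sketch that builds it from a host list, handy if JournalNodes are added or renamed; `qjournal_uri` is a hypothetical helper:

```shell
# qjournal_uri JOURNAL_ID PORT HOST...  (hypothetical helper)
# Builds a QJM shared-edits URI such as qjournal://h1:8485;h2:8485/ns1.
qjournal_uri() {
  jid=$1
  port=$2
  shift 2
  uri=""
  for h in "$@"; do
    # ${uri:+$uri;} adds the ";" separator only after the first host.
    uri="${uri:+$uri;}$h:$port"
  done
  printf 'qjournal://%s/%s\n' "$uri" "$jid"
}
```

For this cluster, `qjournal_uri ns1 8485 drguo3 drguo4 drguo5` reproduces the value used above.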

First rename mapred-site.xml.template to mapred-site.xml, then edit mapred-site.xml:

```xml
<configuration>
    <!-- Run MapReduce on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```

Edit yarn-site.xml:

```xml
<configuration>
    <!-- Enable ResourceManager high availability -->
    <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
    </property>
    <!-- Cluster id of the RMs -->
    <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>yrc</value>
    </property>
    <!-- Logical names of the RMs -->
    <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm1,rm2</value>
    </property>
    <!-- Host of each RM -->
    <property>
        <name>yarn.resourcemanager.hostname.rm1</name>
        <value>drguo1</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname.rm2</name>
        <value>drguo2</value>
    </property>
    <!-- ZooKeeper quorum addresses -->
    <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>drguo3:2181,drguo4:2181,drguo5:2181</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
```

Edit slaves:

```
drguo3
drguo4
drguo5
```

Copy the whole Hadoop directory to drguo2/3/4/5. Delete share/doc before copying (the documentation doesn't need to be copied); this makes the copy faster.
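The copy can be scripted from drguo1. A sketch under these assumptions: passwordless SSH from drguo1 works, /opt/Hadoop already exists on every target and is writable by guo, and scp is used as elsewhere in this post. `distribute_hadoop` and the `DRY_RUN` switch are hypothetical.

```shell
# Paths and targets from this post; both can be overridden from the environment.
HADOOP_HOME=${HADOOP_HOME:-/opt/Hadoop/hadoop-2.7.2}
TARGETS=${TARGETS:-"drguo2 drguo3 drguo4 drguo5"}

# With DRY_RUN=1 the commands are printed instead of executed.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# distribute_hadoop  (hypothetical helper)
distribute_hadoop() {
  # The bundled docs are not needed on the other nodes; dropping them speeds up the copy.
  run_or_echo rm -rf "$HADOOP_HOME/share/doc"
  for host in $TARGETS; do
    run_or_echo scp -r "$HADOOP_HOME" "$host:$(dirname "$HADOOP_HOME")/"
  done
}
```

Run `DRY_RUN=1 distribute_hadoop` first to review the commands, then `distribute_hadoop` to execute them.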

5. Start the ZooKeeper cluster (start ZooKeeper on each of drguo3, drguo4 and drguo5)

```
guo@drguo3:~$ zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper-3.4.8/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
guo@drguo3:~$ jps
2005 Jps
1994 QuorumPeerMain
guo@drguo3:~$ ssh drguo4
Welcome to Ubuntu 15.10 (GNU/Linux 4.2.0-16-generic x86_64)
* Documentation: https://help.ubuntu.com/
Last login: Fri Mar 25 14:04:43 2016 from 192.168.80.151
guo@drguo4:~$ zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper-3.4.8/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
guo@drguo4:~$ jps
1977 Jps
1966 QuorumPeerMain
guo@drguo4:~$ exit
logout
Connection to drguo4 closed.
guo@drguo3:~$ ssh drguo5
Welcome to Ubuntu 15.10 (GNU/Linux 4.2.0-16-generic x86_64)
* Documentation: https://help.ubuntu.com/
Last login: Fri Mar 25 14:04:56 2016 from 192.168.80.151
guo@drguo5:~$ zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper-3.4.8/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
guo@drguo5:~$ jps
2041 Jps
2030 QuorumPeerMain
guo@drguo5:~$ exit
logout
Connection to drguo5 closed.
guo@drguo3:~$ zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper-3.4.8/bin/../conf/zoo.cfg
Mode: leader
```
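Instead of logging into each machine, the same start (and later status) calls can be issued from drguo3 in a loop. A sketch, assuming passwordless SSH and that zkServer.sh is on the PATH set in /etc/profile, as the session above suggests; `zk_all` and `DRY_RUN` are hypothetical.

```shell
ZK_HOSTS=${ZK_HOSTS:-"drguo3 drguo4 drguo5"}

# With DRY_RUN=1 the commands are printed instead of executed.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# zk_all ACTION  (hypothetical helper; ACTION is start, status or stop)
zk_all() {
  action=$1
  for host in $ZK_HOSTS; do
    # "bash -lc" reads the login profile (/etc/profile), so zkServer.sh is
    # found even though the ssh session is non-interactive.
    run_or_echo ssh "$host" "bash -lc 'zkServer.sh $action'"
  done
}
```

Run `zk_all start`, then `zk_all status`: one node should report `Mode: leader` and the other two `Mode: follower`.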

6.启动journalnode(分别在drguo3、drguo4、drguo5上启动journalnode)注意只有第一次需要这么启动,之后启动hdfs会包含journalnode。

[plain] view

plain copy

guo@drguo3:~$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /opt/Hadoop/hadoop-2.7.2/logs/hadoop-guo-journalnode-drguo3.out

guo@drguo3:~$ jps

2052 Jps

2020 JournalNode

1963 QuorumPeerMain

guo@drguo3:~$ ssh drguo4

Welcome to Ubuntu 15.10 (GNU/Linux 4.2.0-16-generic x86_64)

* Documentation: https://help.ubuntu.com/

Last login: Fri Mar 25 00:09:08 2016 from 192.168.80.149

guo@drguo4:~$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /opt/Hadoop/hadoop-2.7.2/logs/hadoop-guo-journalnode-drguo4.out

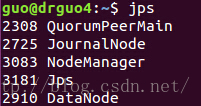

guo@drguo4:~$ jps

2103 Jps

2071 JournalNode

1928 QuorumPeerMain

guo@drguo4:~$ exit

注销

Connection to drguo4 closed.

guo@drguo3:~$ ssh drguo5

Welcome to Ubuntu 15.10 (GNU/Linux 4.2.0-16-generic x86_64)

* Documentation: https://help.ubuntu.com/

Last login: Thu Mar 24 23:52:17 2016 from 192.168.80.152

guo@drguo5:~$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /opt/Hadoop/hadoop-2.7.2/logs/hadoop-guo-journalnode-drguo5.out

guo@drguo5:~$ jps

2276 JournalNode

2308 Jps

1959 QuorumPeerMain

guo@drguo5:~$ exit

注销

Connection to drguo5 closed.
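Checking jps on each node can also be scripted. A sketch that reads jps output and fails if an expected process is missing; `has_daemons` is a hypothetical helper:

```shell
# has_daemons NAME...  (hypothetical helper)
# Reads `jps` output ("PID Name" per line) on stdin and succeeds only if
# every NAME appears as a process name. Reports the first missing one on stderr.
has_daemons() {
  procs=$(awk '{ print $2 }')
  for name in "$@"; do
    if ! printf '%s\n' "$procs" | grep -qx "$name"; then
      echo "missing: $name" >&2
      return 1
    fi
  done
}
```

Example: `ssh drguo4 'bash -lc jps' | has_daemons JournalNode QuorumPeerMain`.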

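When starting them on drguo4/5 I hit a problem: no JournalNode showed up. The logs showed it was caused by the Chinese comments; after deleting all of them on drguo4/5 the problem was solved. The Pinyin input method doesn't work on drguo4/5 either, which really annoys me.. They were all cloned from the same image, so how did they end up different?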
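To find such lines before starting any daemon, grep in the C locale can flag every byte outside printable ASCII and whitespace. A sketch; `find_non_ascii` is a hypothetical helper. Besides Chinese characters it also flags a UTF-8 byte-order mark, which some editors may add to a file.

```shell
# find_non_ascii FILE...  (hypothetical helper)
# Prints file:line:text for every line containing a byte that is neither
# printable ASCII nor whitespace. Exit status 0 means something was found.
find_non_ascii() {
  LC_ALL=C grep -Hn '[^[:print:][:space:]]' "$@"
}
```

Example: `find_non_ascii /opt/Hadoop/hadoop-2.7.2/etc/hadoop/*.xml`.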

7. Format HDFS (run on drguo1)

```
guo@drguo1:/opt$ hdfs namenode -format
```

This time there was another problem, again caused by the Chinese comments, so I deleted them on drguo1/2/3 as well, and that solved it.

Note: after formatting, copy the tmp directory to drguo2 (otherwise the NameNode on drguo2 won't start):

```
guo@drguo1:/opt/Hadoop/hadoop-2.7.2$ scp -r tmp/ drguo2:/opt/Hadoop/hadoop-2.7.2/
```
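With hadoop.tmp.dir set as above, the NameNode metadata lives under tmp/dfs/name, so copying tmp gives drguo2 the same namespace. Both NameNodes should then record the same clusterID in tmp/dfs/name/current/VERSION. A quick check; `cluster_id` is a hypothetical helper. (The Hadoop HA documentation also describes an alternative to copying: run `hdfs namenode -bootstrapStandby` on drguo2 while the freshly formatted NameNode on drguo1 is running.)

```shell
# cluster_id VERSION_FILE  (hypothetical helper)
# Prints the clusterID stored in a NameNode VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Usage on drguo1 (not run here; needs the cluster and passwordless SSH):
#   V=/opt/Hadoop/hadoop-2.7.2/tmp/dfs/name/current/VERSION
#   [ "$(cluster_id "$V")" = "$(ssh drguo2 cat "$V" | sed -n 's/^clusterID=//p')" ] && echo same
```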

8. Format ZKFC (run on drguo1)

```
guo@drguo1:/opt$ hdfs zkfc -formatZK
```

9. Start HDFS (run on drguo1)

```
guo@drguo1:/opt$ start-dfs.sh
```

10. Start YARN (run on drguo1)

```
guo@drguo1:/opt$ start-yarn.sh
```

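PS:

1. The ResourceManager on drguo2 has to be started separately, by hand (start-yarn.sh only starts a ResourceManager on the machine it is run on):

```
yarn-daemon.sh start resourcemanager
```

2. The NameNode and DataNode can also be started individually:

```
hadoop-daemon.sh start namenode
hadoop-daemon.sh start datanode
```

3. To switch a NameNode from standby to active by hand: because automatic failover is enabled, hdfs haadmin refuses manual transitions unless you add --forcemanual, and forcing it bypasses the ZKFCs, so use it with care:

```
hdfs haadmin -transitionToActive nn1 --forcemanual
```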
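Before forcing a transition, it helps to check which NameNode (or ResourceManager) is currently active. Both `hdfs haadmin` and `yarn rmadmin` accept `-getServiceState <id>` and print `active` or `standby`. A sketch that loops over the IDs; `find_active` is a hypothetical helper:

```shell
# find_active ADMIN_CMD ID...  (hypothetical helper)
# Prints the first ID whose -getServiceState answer is "active"; returns 1 if none is.
# ADMIN_CMD is e.g. "hdfs haadmin" (IDs nn1 nn2) or "yarn rmadmin" (IDs rm1 rm2);
# it is intentionally left unquoted so it splits into command + subcommand.
find_active() {
  admin=$1
  shift
  for id in "$@"; do
    state=$($admin -getServiceState "$id" 2>/dev/null)
    if [ "$state" = active ]; then
      echo "$id"
      return 0
    fi
  done
  return 1
}
```

Examples: `find_active "hdfs haadmin" nn1 nn2` and `find_active "yarn rmadmin" rm1 rm2`.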

Done!!!

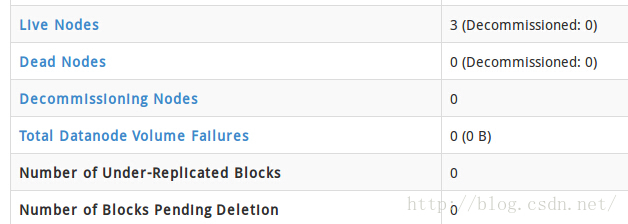

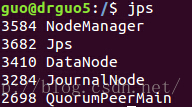

Doesn't it match the plan from the beginning? 0.0
