OpenStack deployed by SaltStack

2017-11-06 00:22


See GitHub for the complete SaltStack implementation.

相关安装包百度盘链接

实验主机环境

实验软件版本:

本地解析:

安装配置:

项目结构:

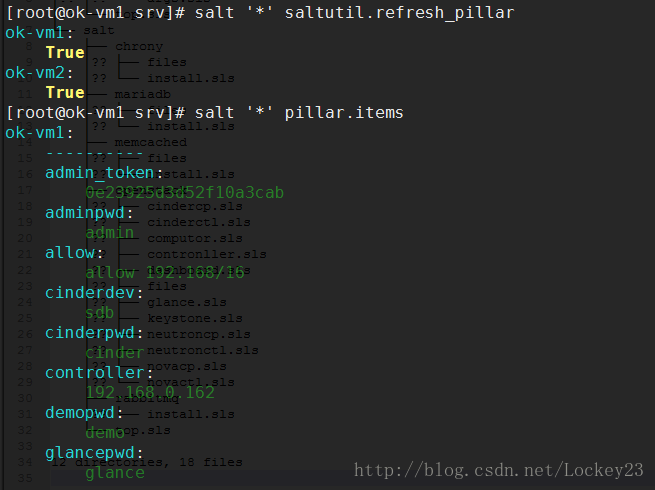

Pillar配置参数

2.2.1 salt——top.sls

2.2.2 salt——chrony

2.2.3 salt——rabbitmq

2.2.4 salt——mariadb

2.2.5 salt——memcached

2.2.6 salt——openstack

chrony

rabbitmq-server

memcached

mariadb

openstack.keystone

openstack.glance

openstack.nova

openstack.neutron

openstack.dashboard

openstack.cinder

计算节点:

chrony

openstack.nova

openstack.neutron

openstack.cinder

推送之前进行单节点测试:

一般情况下对于服务的启动项报错可以忽略,但是其他的错误一定要解决!!!

整体推送测试,测试除服务启动项报错外没有其它错误方可进行实际推送,请将saltstack超时设置久一点!!!

作为 admin 用户,请求认证令牌:

端点查看:

镜像服务检查

在控制节点查看计算节点是否加入

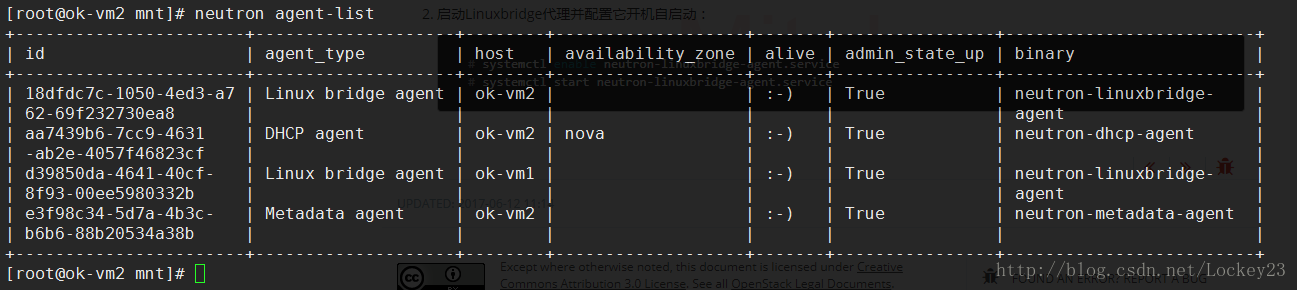

网络检验:

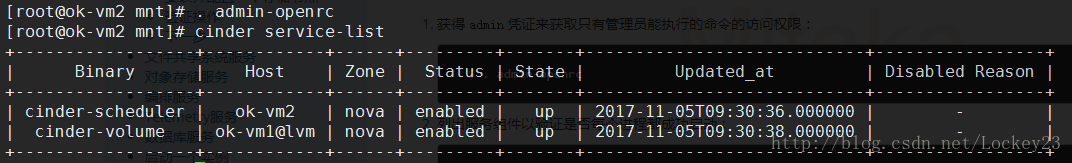

块存储检验:

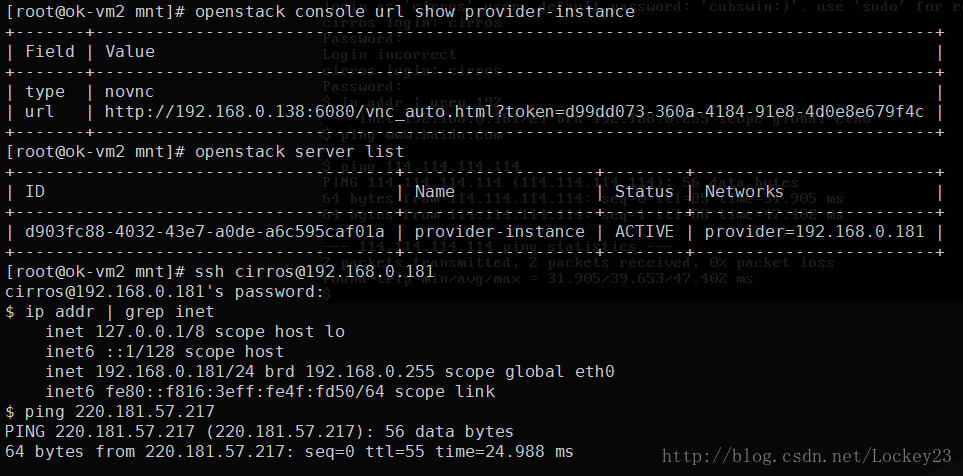

启动一个实例

创建提供者网络

在网络上创建一个子网

创建m1.nano规格的主机

生成一个键值对

验证公钥的添加:

增加安全组规则

在公有网络上创建实例

检查实例的状态:

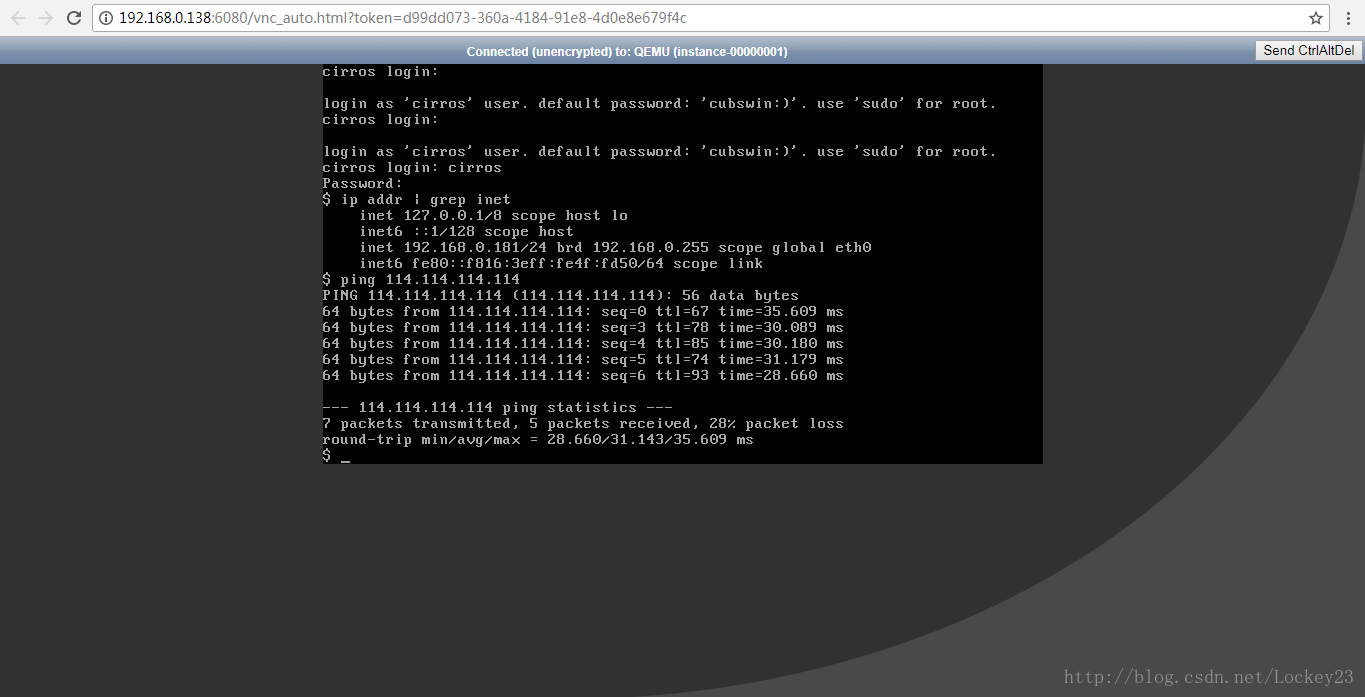

使用虚拟控制台访问实例

获取你实例的 Virtual Network Computing (VNC) 会话URL并从web浏览器访问它:

浏览器访问:

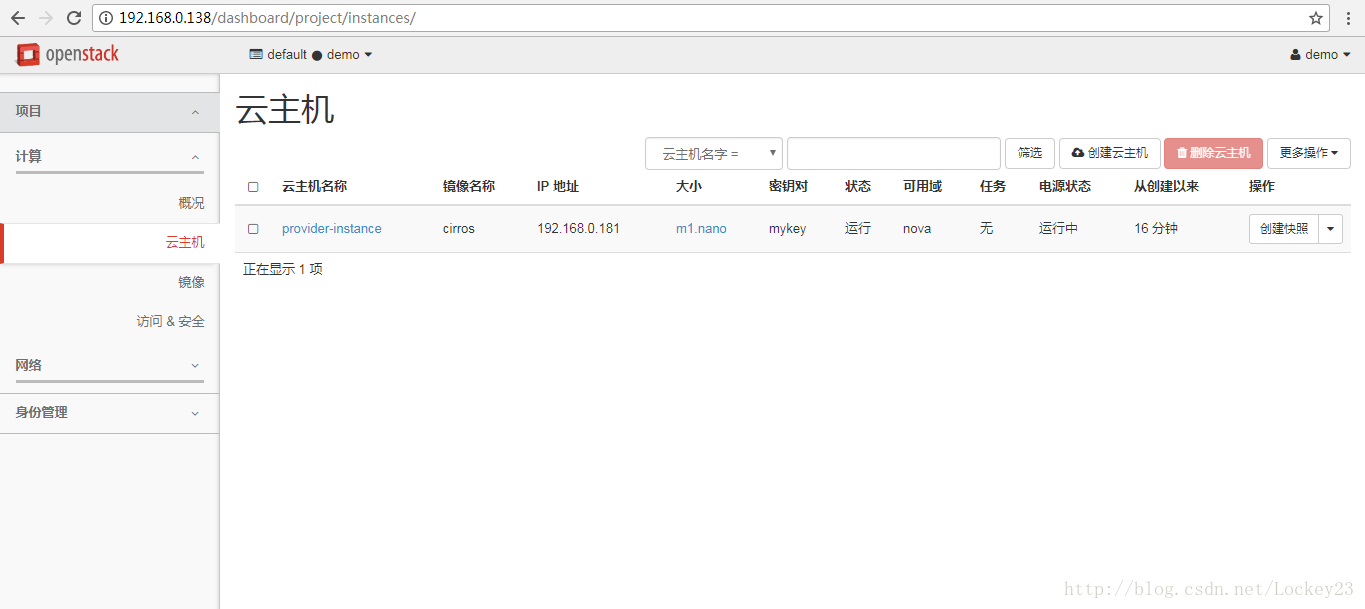

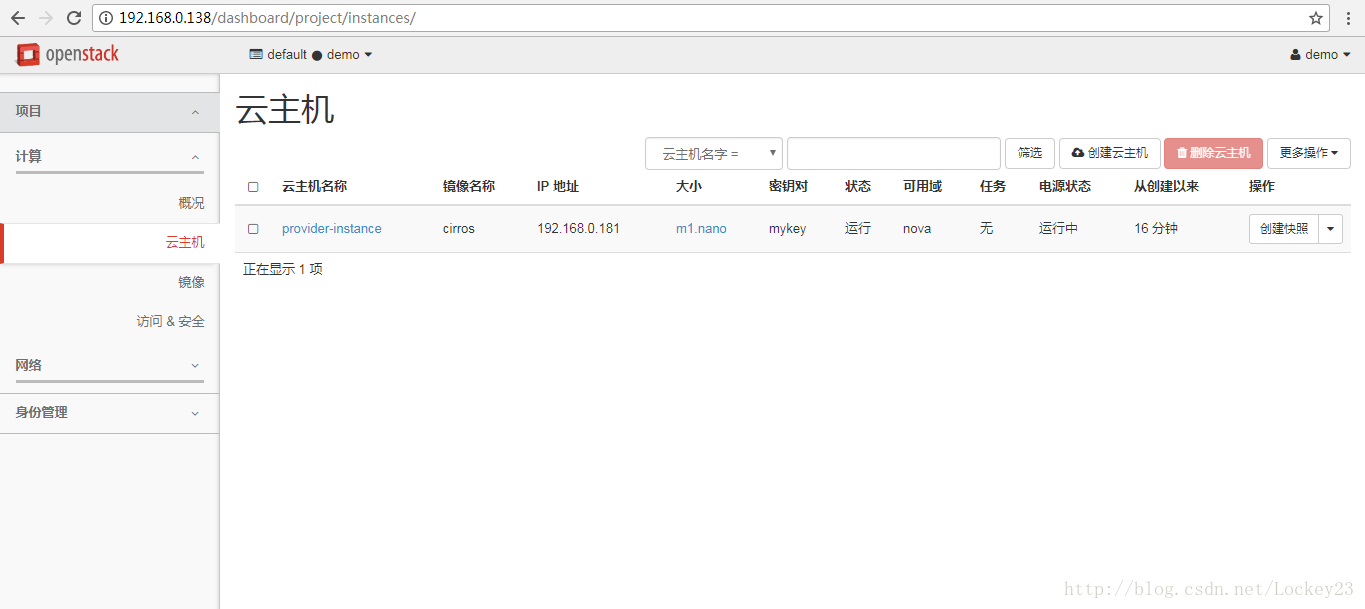

Dashboard访问测试:

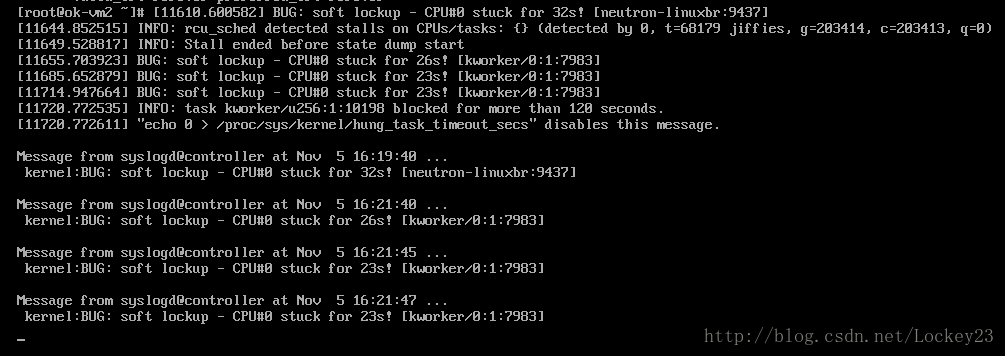

最后来两张搞笑的:

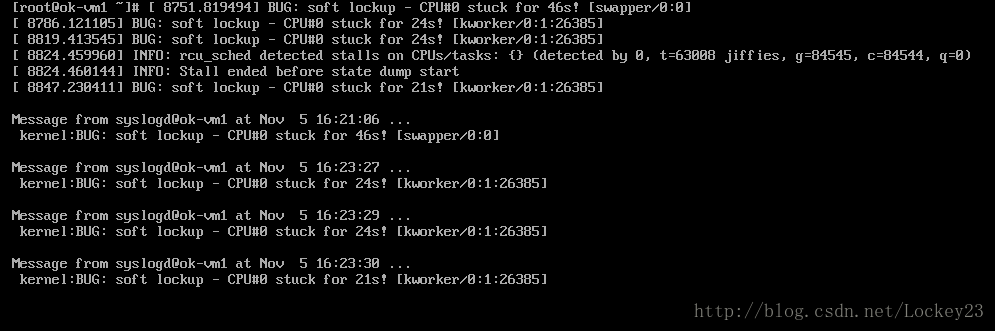

内存不充足就要做好觉悟

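Baidu Pan link for the related installation packages

Lab host environment

VMware, RHEL 7.2, x86_64

Software versions:

salt 2017.7.2 (Nitrogen)

OpenStack Mitaka (for Red Hat Enterprise Linux 7 and CentOS 7)

Local name resolution:

    192.168.0.182 ok-vm1
    192.168.0.162 controller ok-vm2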
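Both nodes need these entries in their hosts file. As a minimal sketch (the `HOSTS_FILE` variable and the `add_host_entry` helper are illustrative names, not part of the original post), the lines could be appended idempotently as follows. By default the snippet writes to a scratch file so it can be tried safely.

```shell
# Sketch only: HOSTS_FILE and add_host_entry are hypothetical names.
# Defaults to a scratch file; set HOSTS_FILE=/etc/hosts (as root) on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    # $1 = complete hosts line; append it only if that exact line is absent
    grep -qxF -- "$1" "$HOSTS_FILE" || printf '%s\n' "$1" >> "$HOSTS_FILE"
}

add_host_entry "192.168.0.182 ok-vm1"
add_host_entry "192.168.0.162 controller ok-vm2"
add_host_entry "192.168.0.162 controller ok-vm2"   # repeated call changes nothing

cat "$HOSTS_FILE"
```

Because each line is only added when it is missing, the snippet can be re-run on a node without producing duplicate entries.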

Installation layout:

| ok-vm1 | ok-vm2 |
|---|---|
| salt-master, salt-minion | salt-minion |
| chrony | chrony, mariadb, rabbitmq, memcached, httpd |
| openstackclient, nova, neutron, cinder | openstackclient, keystone, glance, nova, neutron, dashboard, cinder |

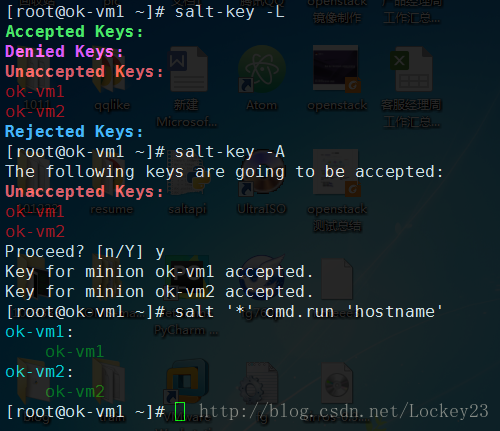

1. SaltStack preparation

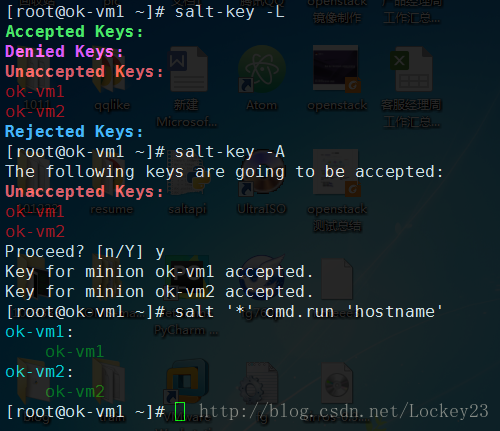

SaltStack node authentication (the master accepts the minion keys)
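A minimal sketch of key acceptance on the master, assuming the minion IDs match the hostnames ok-vm1 and ok-vm2. `salt-key -a`, `-y`, `-L` and `test.ping` are standard Salt commands; the `accept_minions` helper is an illustrative name and not something the original post defines.

```shell
# Sketch: accept pending minion keys by name, then confirm the minions answer.
# accept_minions is a hypothetical helper; run it on the salt-master (ok-vm1).
accept_minions() {
    for minion in "$@"; do
        # -a accepts the named key, -y skips the interactive confirmation
        salt-key -y -a "$minion" || return 1
    done
    salt-key -L                 # list accepted, pending and rejected keys
    salt '*' test.ping          # every accepted minion should return True
}

# Usage on the master:
#   accept_minions ok-vm1 ok-vm2
```

Accepting keys by name rather than with a blanket accept-all keeps an unexpected minion from being trusted by accident.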

Project structure:

    [root@ok-vm1 srv]# tree -L 3
    .
    ├── pillar
    │   ├── openstack
    │   │   └── args.sls
    │   └── top.sls
    └── salt
        ├── chrony
        │   ├── files
        │   └── install.sls
        ├── mariadb
        │   ├── files
        │   └── install.sls
        ├── memcached
        │   ├── files
        │   └── install.sls
        ├── openstack
        │   ├── cindercp.sls
        │   ├── cinderctl.sls
        │   ├── computor.sls
        │   ├── controller.sls
        │   ├── dashboard.sls
        │   ├── files
        │   ├── glance.sls
        │   ├── keystone.sls
        │   ├── neutroncp.sls
        │   ├── neutronctl.sls
        │   ├── novacp.sls
        │   └── novactl.sls
        ├── rabbitmq
        │   └── install.sls
        └── top.sls

    12 directories, 18 files

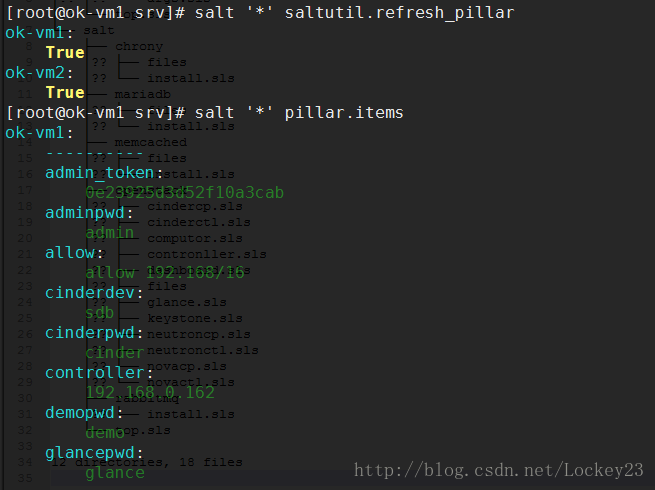

Pillar configuration parameters

2. Project directory breakdown

2.1 pillar

    [root@ok-vm1 pillar]# tree
    .
    ├── openstack
    │   └── args.sls    # variable parameters
    └── top.sls         # pillar top file

2.2 salt

    [root@ok-vm1 salt]# tree -L 1
    .
    ├── chrony       # time synchronization service
    ├── mariadb      # database installation
    ├── memcached
    ├── openstack    # OpenStack component states
    ├── rabbitmq     # message queue service
    └── top.sls      # salt top file

    5 directories, 1 file

2.2.1 salt: top.sls

    [root@ok-vm1 salt]# cat top.sls
    base:
      'ok-vm2':
        - openstack.controller    # packages for the controller node
      'ok-vm1':
        - openstack.computor      # packages for the compute, network and storage node

2.2.2 salt: chrony

    [root@ok-vm1 chrony]# tree
    .
    ├── files
    │   └── chrony.conf    # time synchronization service configuration file
    └── install.sls        # time synchronization service installation

    1 directory, 2 files

2.2.3 salt: rabbitmq

    [root@ok-vm1 rabbitmq]# tree
    .
    └── install.sls

    0 directories, 1 file

2.2.4 salt: mariadb

    [root@ok-vm1 mariadb]# tree
    .
    ├── files
    │   └── openstack.cnf
    └── install.sls

    1 directory, 2 files

2.2.5 salt: memcached

    [root@ok-vm1 memcached]# tree
    .
    ├── files
    │   └── memcached    # configuration file; mainly makes memcached listen on all interfaces
    └── install.sls

    1 directory, 2 files

2.2.6 salt: openstack

    [root@ok-vm1 openstack]# tree
    .
    ├── cindercp.sls      # cinder installation on the compute node
    ├── cinderctl.sls     # cinder installation on the controller node
    ├── computor.sls      # master state that pulls in everything for the compute node
    ├── controller.sls    # master state that pulls in everything for the controller node
    ├── dashboard.sls     # dashboard (web UI) installation on the controller node
    ├── files             # configuration files for all OpenStack services
    │   ├── admin-openrc
    │   ├── cindercp.conf
    │   ├── cinderctl.conf
    │   ├── demo-openrc
    │   ├── dhcp_agent.ini
    │   ├── glance-api.conf
    │   ├── glance-registry.conf
    │   ├── httpd.conf
    │   ├── keystone.conf
    │   ├── keystone.sh
    │   ├── linuxbridge_agent.ini
    │   ├── local_settings
    │   ├── lvm.conf
    │   ├── ml2_conf.ini
    │   ├── neutroncp.conf
    │   ├── neutronctl.conf
    │   ├── novacp.conf
    │   ├── novactl.conf
    │   ├── openstack.sh
    │   └── wsgi-keystone.conf
    ├── glance.sls        # image service installation
    ├── keystone.sls      # identity service
    ├── neutroncp.sls     # network service on the compute node
    ├── neutronctl.sls    # network service on the controller node
    ├── novacp.sls        # compute service on the compute node
    └── novactl.sls       # compute service on the controller node

    1 directory, 31 files

3. SaltStack push order

Controller node:

chrony

rabbitmq-server

memcached

mariadb

openstack.keystone

openstack.glance

openstack.nova

openstack.neutron

openstack.dashboard

openstack.cinder

Compute node:

chrony

openstack.nova

openstack.neutron

openstack.cinder

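Before pushing, do a dry run against each node:

    salt ok-vm2 state.sls openstack.controller test=true
    salt ok-vm1 state.sls openstack.computor test=true

Errors about services failing to start can generally be ignored in a dry run, typically because the packages that provide those services have not actually been installed yet. Every other error must be fixed.

Next, dry-run the whole highstate. Do the real push only once the dry run shows no errors other than the service-start ones, and set the SaltStack timeout to a generous value.

    salt '*' state.highstate test=true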
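The salt CLI waits only a short time for minions by default, which is rarely enough for a full OpenStack highstate. One way to lengthen the wait is the `-t`/`--timeout` option (in seconds); the `timeout` setting in /etc/salt/master has the same effect permanently. The sketch below is an assumption about how this could be wrapped: `SALT_TIMEOUT`, `dry_run_highstate` and the value 600 are illustrative choices, not values from the original post.

```shell
# Sketch: dry-run the highstate with a longer CLI timeout.
# SALT_TIMEOUT and dry_run_highstate are hypothetical names; 600 s is an arbitrary choice.
SALT_TIMEOUT="${SALT_TIMEOUT:-600}"

dry_run_highstate() {
    # $1 = target (defaults to all minions); test=true only reports what would change
    salt -t "$SALT_TIMEOUT" "${1:-*}" state.highstate test=true
}

# Usage on the master:
#   dry_run_highstate            # all minions
#   dry_run_highstate ok-vm2     # controller only
```

Dropping `test=true` from the command turns the same invocation into the real push.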

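4. Service verification:

The databases can be checked once the push has finished: inspect each database and its tables to confirm that they were created successfully.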
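A minimal sketch of such a check, counting the tables in each database. The database names are an assumption (the ones the Mitaka install guide creates) and should be adjusted to whatever args.sls defines; the sketch also assumes that root can log in to MariaDB non-interactively, for example through credentials in ~/.my.cnf. `count_tables` is an illustrative helper name.

```shell
# Sketch: count the tables in each OpenStack database after the push.
# Database names are an assumption (Mitaka install guide defaults); adjust to args.sls.
# Also assumes root can log in non-interactively, e.g. via credentials in ~/.my.cnf.
OPENSTACK_DBS="${OPENSTACK_DBS:-keystone glance nova nova_api neutron cinder}"

count_tables() {
    for db in $OPENSTACK_DBS; do
        # -N suppresses the column header so every output line is one table
        n=$(mysql -u root -N -e "SHOW TABLES FROM \`$db\`;" | grep -c '')
        echo "$db $n"
    done
}

# Usage on the controller (ok-vm2):
#   count_tables
```

A database that reports 0 tables suggests that the corresponding database sync step did not run or failed.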

As the admin user, request an authentication token:

    [root@ok-vm2 keystone]# . admin-openrc
    [root@ok-vm2 keystone]# openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name admin --os-username admin token issue
    Password:
    +------------+---------------------------------------------------------------------------------------------------------------------------------------+
    | Field      | Value                                                                                                                                 |
    +------------+---------------------------------------------------------------------------------------------------------------------------------------+
    | expires    | 2017-11-01T07:12:20.415935Z                                                                                                           |
    | id         | gAAAAABZ-WXEJ_CtxOdJAWzJQEtf1fJt_LOtPEYg8Jgj5va_AQ0Cb7ZEoNYrmK_oI4PemNJr8MXb4XlpvnuAo7ihH7qOLvedW6YaBeQRuySNUYdL8g2RZuz7G9_X50gQN6uEH |
    |            | yqS8zF0W6g-rcuN6F9TQxbIooSu3XcJUBMMVuvIoNTo5VS2uw4                                                                                    |
    | project_id | ff2e0400bcdf441498916133adc5a449                                                                                                      |
    | user_id    | 7d027773c47a40d19b05dbeda38f871d

List the endpoints:

    [root@ok-vm2 keystone]# openstack endpoint list
    +----------------------------------+-----------+--------------+--------------+---------+-----------+------------------------------+
    | ID                               | Region    | Service Name | Service Type | Enabled | Interface | URL                          |
    +----------------------------------+-----------+--------------+--------------+---------+-----------+------------------------------+
    | 0082e8a139f7455584149ea288618883 | RegionOne | keystone     | identity     | True    | internal  | http://controller:5000/v3    |
    | 67e016e82dff462e91788254af3a67c2 | RegionOne | keystone     | identity     | True    | admin     | http://controller:35357/v3   |
    | e222ea31a0344d6d8cb88d54c120a557 | RegionOne | keystone     | identity     | True    | public    | http://controller:5000/v3    |

Image service check

Download the source image:

    $ wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

Upload the image to the Image service using the QCOW2 disk format and the bare container format, and make it public so that every project can access it:

    $ openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

Confirm the upload and check the image attributes. Because the image is public, every user can see it with the following command:

    $ openstack image list

On the controller node, check that the compute node has registered

    [root@ok-vm2 keystone]# openstack compute service list
    +----+------------------+--------+----------+---------+-------+----------------------------+
    | Id | Binary           | Host   | Zone     | Status  | State | Updated At                 |
    +----+------------------+--------+----------+---------+-------+----------------------------+
    | 1  | nova-conductor   | ok-vm2 | internal | enabled | up    | 2017-11-01T08:40:48.000000 |
    | 2  | nova-scheduler   | ok-vm2 | internal | enabled | up    | 2017-11-01T08:40:48.000000 |
    | 3  | nova-consoleauth | ok-vm2 | internal | enabled | up    | 2017-11-01T08:40:48.000000 |
    | 7  | nova-compute     | ok-vm1 | nova     | enabled | up    | 2017-11-01T08:40:53.000000 |
    +----+------------------+--------+----------+---------+-------+----------------------------+

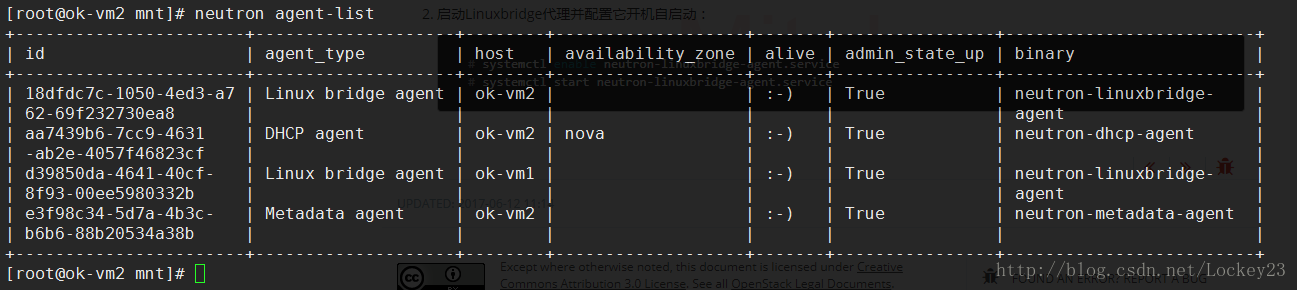

Network verification:

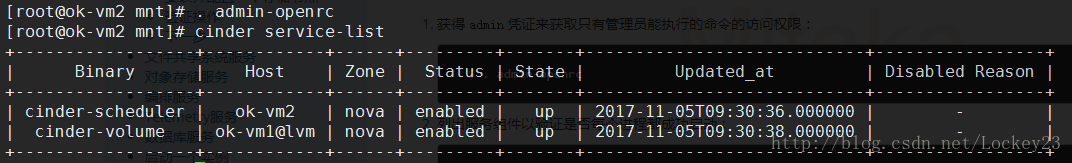

Block storage verification:
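Block storage can be verified the same way as compute: list the services and confirm that none of them is down. The Mitaka-era client provides `cinder service-list` for this. As a hedged sketch (the helper name `no_service_down` is illustrative), a small filter can fail whenever any row of such a table reports the state `down`:

```shell
# Sketch: succeed only if no service row in a CLI table has state "down".
# no_service_down is a hypothetical helper; it reads the table on stdin.
no_service_down() {
    if grep -qw 'down'; then
        echo "at least one service is down" >&2
        return 1
    fi
}

# Usage on the controller, after sourcing admin-openrc:
#   cinder service-list | no_service_down && echo "block storage services are up"
#   openstack compute service list | no_service_down && echo "compute services are up"
```

The match is a plain word search, so a host whose name contains the word "down" would trigger a false alarm; for a two-node lab this is usually acceptable.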

Launch an instance

Create the provider network

    neutron net-create --shared --provider:physical_network provider --provider:network_type flat provider

Create a subnet on the network

    neutron subnet-create --name provider --allocation-pool start=192.168.0.180,end=192.168.0.200 --dns-nameserver 8.8.8.8 --gateway 192.168.0.1 provider 192.168.0.0/24

Create the m1.nano flavor

    openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

Generate a key pair

    $ ssh-keygen -q -N ""
    $ openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Verify that the public key was added:

    $ openstack keypair list

Add security group rules

    $ openstack security group rule create --proto icmp default
    $ openstack security group rule create --proto tcp --dst-port 22 default

Create an instance on the public (provider) network

List the available flavors:

    $ openstack flavor list

List the available images:

    $ openstack image list

List the available networks:

    $ openstack network list

List the available security groups:

    $ openstack security group list

Create the instance. The net-id below is the ID of the provider network in this lab; use the ID shown by your own network list.

    $ openstack server create --flavor m1.nano --image cirros --nic net-id=3fbce983-0d45-4808-a02f-4cd845f5ca0a --security-group default --key-name mykey provider-instance

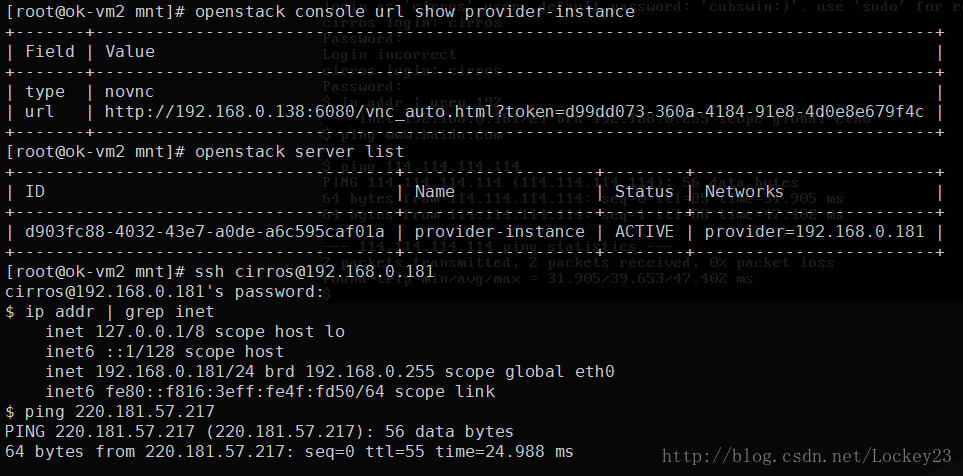
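Copying the network ID by hand is easy to get wrong. As a sketch under the assumption that the network is named provider as above, the ID can be looked up with the client's `-f value -c id` output options and passed straight to `server create`. The helper name `launch_on_network` is illustrative.

```shell
# Sketch: resolve the network ID by name instead of pasting the UUID.
# launch_on_network is a hypothetical helper; flavor, image and key names follow the text above.
launch_on_network() {
    # $1 = network name, $2 = instance name
    net_id=$(openstack network show "$1" -f value -c id) || return 1
    openstack server create --flavor m1.nano --image cirros \
        --nic net-id="$net_id" --security-group default \
        --key-name mykey "$2"
}

# Usage, after sourcing the credentials file:
#   launch_on_network provider provider-instance
```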

Check the instance status:

    $ openstack server list

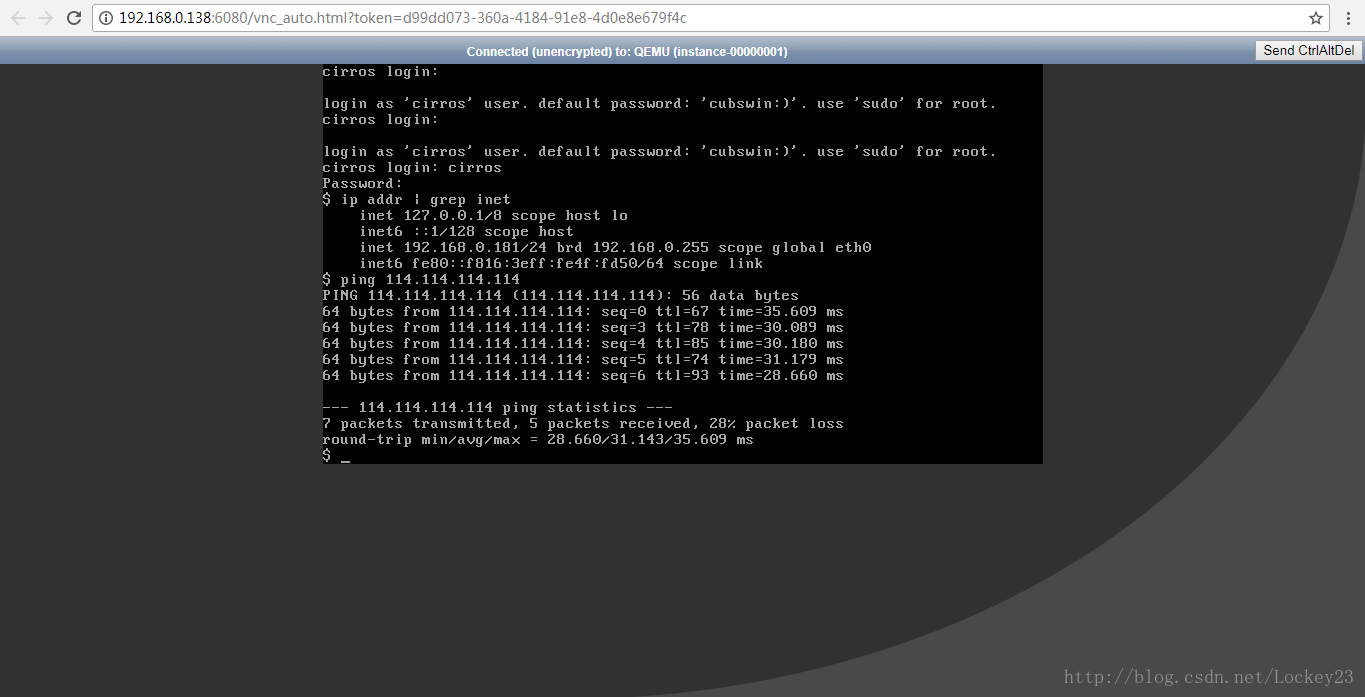
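An instance normally passes through a build state before it becomes ACTIVE, so a single status check may be too early. The following is a minimal polling sketch; `wait_for_active` and its default of 30 polls at 5-second intervals are illustrative assumptions, not values from the original post.

```shell
# Sketch: poll the server status until it is ACTIVE, fails, or the attempts run out.
# wait_for_active is a hypothetical helper.
wait_for_active() {
    # $1 = server name, $2 = max polls (default 30), $3 = seconds between polls (default 5)
    name=$1; tries=${2:-30}; delay=${3:-5}
    i=0
    status=unknown
    while [ "$i" -lt "$tries" ]; do
        status=$(openstack server show "$name" -f value -c status)
        case "$status" in
            ACTIVE) return 0 ;;
            ERROR)  echo "$name entered ERROR state" >&2; return 1 ;;
        esac
        i=$((i + 1))
        sleep "$delay"
    done
    echo "timed out waiting for $name (last status: $status)" >&2
    return 1
}

# Usage:
#   wait_for_active provider-instance && echo "instance is ready"
```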

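Access the instance through the virtual console

Get the Virtual Network Computing (VNC) session URL for your instance and open it in a web browser:

    $ openstack console url show provider-instance

Access from the browser:

Dashboard access test: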

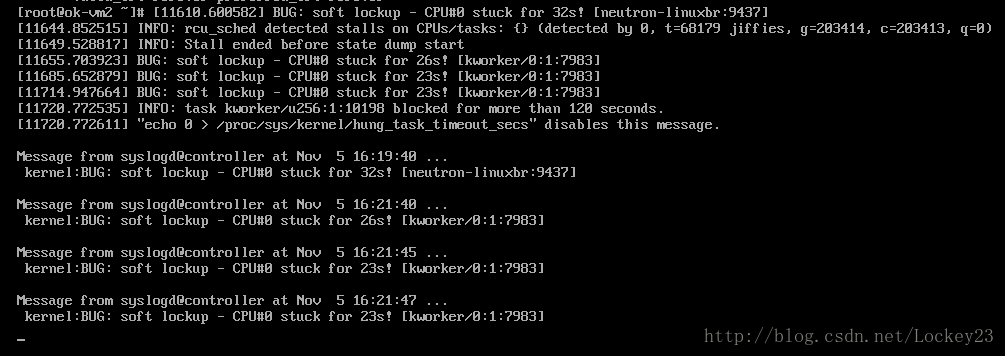

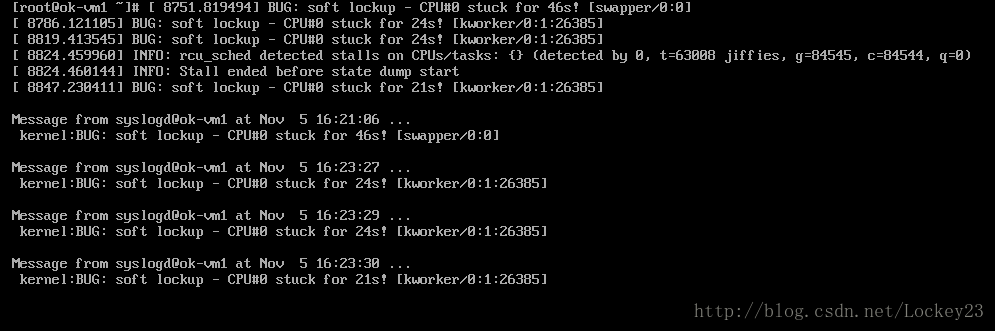

To finish, a couple of funny ones:

If you are short on memory, be prepared for the consequences.
