Hadoop Installation and Deployment

2017-09-18 00:00


Summary: standalone and pseudo-distributed installation, a deployment tutorial that even a complete beginner can follow


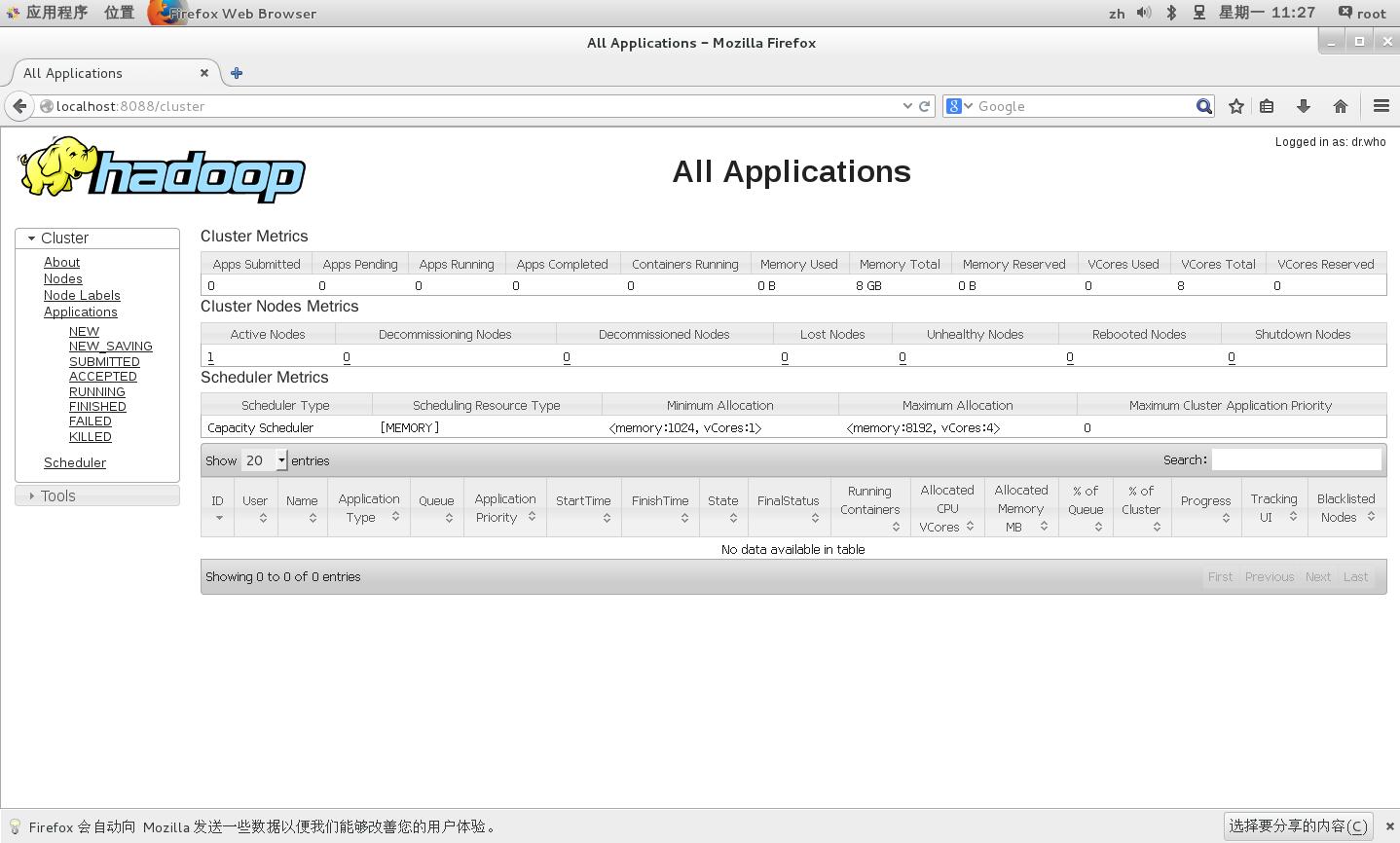

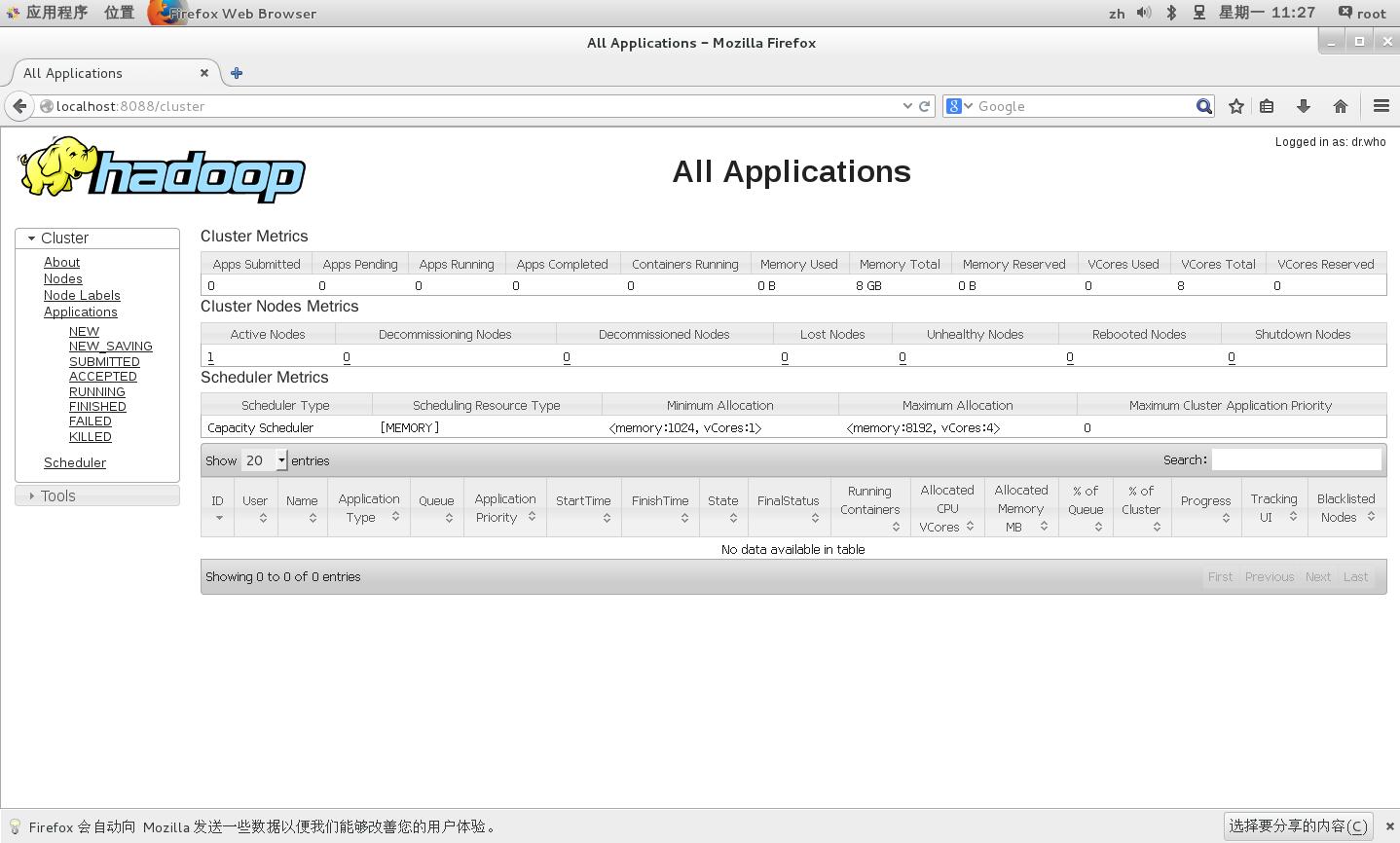


Notes

Versions

OS version: Linux CentOS 7.3

Java JDK version: jdk-8u144-x64

Hadoop version: 3.0 (the package installed below is 3.0.0-alpha4)

Default configuration files

core-default.xml, hdfs-default.xml, yarn-default.xml, mapred-default.xml

HDFS daemons

NameNode, SecondaryNameNode, DataNode

YARN daemons

ResourceManager, NodeManager, WebAppProxy

Install the JDK

Download the JDK

Download page: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Create a directory and extract the JDK archive into it

```shell
mkdir /usr/java
tar xvf jdk-8u144-linux-x64.tar.gz -C /usr/java/
```

Set the environment variables (HADOOP_CONF_DIR depends on HADOOP_INSTALL, so it is added later, in the Hadoop step)

```shell
echo 'export JAVA_HOME=/usr/java/jdk1.8.0_144
export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:$JAVA_HOME/bin:
export CLASSPATH=$JAVA_HOME/jre/lib/ext:$JAVA_HOME/lib/tools.jar' >> /etc/profile
```

Reload the profile so the changes take effect immediately

source /etc/profile
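Re-running the `echo ... >> /etc/profile` step appends the same lines a second time. A minimal sketch of an idempotent alternative, assuming a POSIX shell with `grep`; `append_line_once` is a hypothetical helper, not part of Hadoop or of the original steps.

```shell
# append_line_once FILE LINE (hypothetical helper)
# Appends LINE to FILE only when FILE does not already contain exactly that line,
# so the profile setup can be re-run without piling up duplicate exports.
append_line_once() {
  local file="$1" line="$2"
  # -q quiet, -x whole-line match, -F fixed string (no regex), -- ends options
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    return 0
  fi
  printf '%s\n' "$line" >> "$file"
}

# Example (as root). Single quotes keep $JAVA_HOME unexpanded, as in the echo above:
# append_line_once /etc/profile 'export JAVA_HOME=/usr/java/jdk1.8.0_144'
# append_line_once /etc/profile 'export CLASSPATH=$JAVA_HOME/jre/lib/ext:$JAVA_HOME/lib/tools.jar'
```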

Register the new Java with the alternatives system

update-alternatives --install /usr/bin/java java /usr/java/jdk1.8.0_144/bin/java 100

Set the default Java version

update-alternatives --config java

```
There are 2 programs which provide 'java'.

  Selection    Command
-----------------------------------------------
*+ 1           /usr/lib/jvm/java-1.7.0-openjdk-1.7.0.75-2.5.4.2.el7_0.x86_64/jre/bin/java
   2           /usr/java/jdk1.8.0_144/bin/java

Enter to keep the current selection[+], or type selection number: 2
```

Check that the default java version now matches the one just installed

java -version

```
java version "1.8.0_144"
Java(TM) SE Runtime Environment (build 1.8.0_144-b01)
Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)
```

Confirm that the environment variables are set correctly

echo $JAVA_HOME

/usr/java/jdk1.8.0_144

echo $CLASSPATH

/usr/java/jdk1.8.0_144/jre/lib/ext:/usr/java/jdk1.8.0_144/lib/tools.jar

echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/java/jdk1.8.0_144/bin:
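The three `echo` checks above can also be run by a script. A sketch assuming a POSIX shell; `path_contains` and `check_java_env` are hypothetical helpers, not from Hadoop or the original text.

```shell
# path_contains PATH_VALUE DIR (hypothetical): succeed if DIR is one of the ':'-separated entries.
path_contains() {
  case ":$1:" in
    *":$2:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# check_java_env (hypothetical): JAVA_HOME must hold an executable bin/java,
# and $JAVA_HOME/bin must be on PATH.
check_java_env() {
  if [ -z "$JAVA_HOME" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME is unset or has no bin/java: '$JAVA_HOME'" >&2
    return 1
  fi
  if ! path_contains "$PATH" "$JAVA_HOME/bin"; then
    echo "PATH does not include $JAVA_HOME/bin" >&2
    return 1
  fi
  echo "Java environment OK: $JAVA_HOME"
}

# Usage after `source /etc/profile`:
# check_java_env
```

Wrapping PATH in extra colons lets the `case` pattern match the first and last entries the same way as the middle ones.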

Install Hadoop

Download Hadoop

```shell
wget http://mirrors.hust.edu.cn/apache/hadoop/common/hadoop-3.0.0-alpha4/hadoop-3.0.0-alpha4.tar.gz
```

Create the hadoop directory and extract the package into it

```shell
mkdir /usr/hadoop
tar xvf hadoop-3.0.0-alpha4.tar.gz -C /usr/hadoop/
```

Add the Hadoop environment variables

vim /etc/profile

Edit the block appended in the JDK step so that it reads as follows (etc/hadoop_pseudo is created in the pseudo-distributed section):

```shell
export JAVA_HOME=/usr/java/jdk1.8.0_144
export HADOOP_INSTALL=/usr/hadoop/hadoop-3.0.0-alpha4
export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:$JAVA_HOME/bin:$HADOOP_INSTALL/bin:$HADOOP_INSTALL/sbin:
export CLASSPATH=$JAVA_HOME/jre/lib/ext:$JAVA_HOME/lib/tools.jar
export HADOOP_CONF_DIR=$HADOOP_INSTALL/etc/hadoop_pseudo
```

Reload the environment variables so they take effect immediately

source /etc/profile

Confirm that the environment variables are set correctly

echo $HADOOP_INSTALL

/usr/hadoop/hadoop-3.0.0-alpha4

echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/java/jdk1.8.0_144/bin:/usr/hadoop/hadoop-3.0.0-alpha4/bin:/usr/hadoop/hadoop-3.0.0-alpha4/sbin:

Standalone mode

Edit hadoop-env.sh in Hadoop's configuration directory:

```shell
vim /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop/hadoop-env.sh
```

Set the JAVA_HOME path

```shell
export JAVA_HOME=/usr/java/jdk1.8.0_144
```
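To make the same change without opening vim (for example when scripting the install), a sketch assuming a POSIX shell and `grep -E`. `set_hadoop_java_home` is a hypothetical helper; note that it also drops the commented-out `# export JAVA_HOME=` template line that ships in hadoop-env.sh.

```shell
# set_hadoop_java_home FILE DIR (hypothetical)
# Drops every existing JAVA_HOME= assignment in FILE (commented out or not,
# with or without "export") and appends a single "export JAVA_HOME=DIR" line.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2" tmp
  tmp=$(mktemp) || return 1
  # -E extended regex, -v keep only the lines that do NOT match
  grep -Ev '^[[:space:]]*#?[[:space:]]*(export[[:space:]]+)?JAVA_HOME=' "$env_file" > "$tmp"
  printf 'export JAVA_HOME=%s\n' "$java_home" >> "$tmp"
  # Copy the content back so the original file keeps its owner and permissions
  cat "$tmp" > "$env_file"
  rm -f "$tmp"
}

# Usage:
# set_hadoop_java_home /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop/hadoop-env.sh /usr/java/jdk1.8.0_144
```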

Check the installed Hadoop version

hadoop version

```
Hadoop 3.0.0-alpha4
Source code repository https://git-wip-us.apache.org/repos/asf/hadoop.git -r e324cf8a2a6e55e996414ff281fee757f09d8172
Compiled by andrew on 2017-06-30T01:52Z
Compiled with protoc 2.5.0
From source with checksum 74491a36456845ab59719bc761659d3
This command was run using /usr/hadoop/hadoop-3.0.0-alpha4/share/hadoop/common/hadoop-common-3.0.0-alpha4.jar
```
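The first line of that output is enough to verify the version from a script. A sketch assuming the `Hadoop <version>` first-line format shown above; `hadoop_version_from` and `require_hadoop_version` are hypothetical helpers.

```shell
# hadoop_version_from (hypothetical): read `hadoop version` output on stdin and
# print the version taken from the first line, which has the form "Hadoop <version>".
hadoop_version_from() {
  head -n 1 | sed -n 's/^Hadoop //p'
}

# require_hadoop_version WANT (hypothetical): fail unless the hadoop on PATH reports WANT.
require_hadoop_version() {
  local want="$1" got
  got=$(hadoop version 2>/dev/null | hadoop_version_from)
  if [ "$got" != "$want" ]; then
    echo "expected Hadoop $want, found '${got:-nothing}'" >&2
    return 1
  fi
  echo "Hadoop $got"
}

# Usage:
# require_hadoop_version 3.0.0-alpha4
```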

List the running Java processes

jps

41233 Jps

==Note: apart from Jps there are no other Java processes, which shows that Hadoop is running in standalone mode==

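Pseudo-distributed mode

To keep standalone mode and pseudo-distributed mode side by side, make a copy of the configuration directory:

```shell
cd /usr/hadoop/hadoop-3.0.0-alpha4/etc
cp -rpf hadoop hadoop_pseudo
```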

Pseudo-distributed configuration parameters (edit these files in etc/hadoop_pseudo)

core-site.xml

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
```

hdfs-site.xml

```xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
```

yarn-site.xml

```xml
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
</configuration>
```

mapred-site.xml

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```
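The four files above share one shape, so they can also be generated instead of edited by hand. A sketch assuming a POSIX shell; `write_site_xml` is a hypothetical helper that overwrites the target file and does not escape XML special characters, which none of the values above contain.

```shell
# write_site_xml FILE name=value... (hypothetical)
# Writes a Hadoop *-site.xml with one <property> per name=value argument.
# The name is everything before the first '=', the value everything after it.
write_site_xml() {
  local file="$1" pair
  shift
  {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    for pair in "$@"; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    printf '</configuration>\n'
  } > "$file"
}

# Usage:
# conf=$HADOOP_INSTALL/etc/hadoop_pseudo
# write_site_xml "$conf/core-site.xml" fs.defaultFS=hdfs://localhost:9000
# write_site_xml "$conf/hdfs-site.xml" dfs.replication=1
# write_site_xml "$conf/mapred-site.xml" mapreduce.framework.name=yarn
```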

User definitions

Add the user definitions to the ==blank space at the top== of the ==Hadoop== (HDFS) start and stop scripts:

```shell
vim /usr/hadoop/hadoop-3.0.0-alpha4/sbin/start-dfs.sh
vim /usr/hadoop/hadoop-3.0.0-alpha4/sbin/stop-dfs.sh
```

```shell
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```

Add the user definitions to the ==blank space at the top== of the ==YARN== start and stop scripts:

```shell
vim /usr/hadoop/hadoop-3.0.0-alpha4/sbin/start-yarn.sh
vim /usr/hadoop/hadoop-3.0.0-alpha4/sbin/stop-yarn.sh
```

```shell
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```
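Instead of typing these lines in vim, they can be inserted right after the first line of each script. A sketch assuming that first line is the shebang (`#!/usr/bin/env bash` in the Hadoop 3 scripts); `insert_after_shebang` is a hypothetical helper and is not idempotent, so run it only once per script.

```shell
# insert_after_shebang FILE LINE... (hypothetical)
# Rewrites FILE as: its first line, then each LINE argument, then the rest of FILE.
insert_after_shebang() {
  local file="$1" tmp
  shift
  tmp=$(mktemp) || return 1
  {
    head -n 1 "$file"
    printf '%s\n' "$@"
    tail -n +2 "$file"
  } > "$tmp"
  # Overwrite in place so the script keeps its execute permission
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage:
# sbin=/usr/hadoop/hadoop-3.0.0-alpha4/sbin
# for s in start-dfs.sh stop-dfs.sh; do
#   insert_after_shebang "$sbin/$s" HDFS_DATANODE_USER=root HADOOP_SECURE_DN_USER=hdfs \
#     HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root
# done
# for s in start-yarn.sh stop-yarn.sh; do
#   insert_after_shebang "$sbin/$s" YARN_RESOURCEMANAGER_USER=root HADOOP_SECURE_DN_USER=yarn \
#     YARN_NODEMANAGER_USER=root
# done
```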

SSH keys for passwordless login

Generate a key pair:

```shell
ssh-keygen
```

```
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
c1:d5:d8:f5:c5:4a:20:68:02:34:aa:d2:a2:35:eb:25 root@localhost.localdomain
The key's randomart image is:
+--[ RSA 2048]----+
| .+. .o+.o...|
| . ...o...o ..o|
| . oo . ..|
| o . . |
|+ + S |
|oo o |
|. E . |
| . o |
| . |
+-----------------+
```

Restrict the permissions on the key files

chmod 400 /root/.ssh/*

Rename the public key to authorized_keys

mv /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys
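The `mv` above replaces any authorized_keys file that already exists. A sketch that appends the key instead, assuming a POSIX shell; `authorize_key` is a hypothetical helper, while `ssh-keygen -t rsa -N '' -f FILE` in the usage comment is the standard way to generate a key without prompts.

```shell
# authorize_key PUB [SSH_DIR] (hypothetical)
# Appends the public key in PUB to SSH_DIR/authorized_keys (default ~/.ssh) unless that
# exact key is already present, and applies the usual 700 / 600 permissions.
authorize_key() {
  local pub="$1" ssh_dir="${2:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$ssh_dir/authorized_keys" && chmod 600 "$ssh_dir/authorized_keys" || return 1
  # -F fixed strings, -x whole line, -f read the pattern(s) from the .pub file
  grep -qxF -f "$pub" "$ssh_dir/authorized_keys" || cat "$pub" >> "$ssh_dir/authorized_keys"
}

# Usage: generate a key without prompts (-N '' means an empty passphrase), then authorize it
# ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
# authorize_key ~/.ssh/id_rsa.pub
```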

Disable password login ==[If you disable password login, be sure to copy the private key off the machine first!!!]==

sed -i 's/PasswordAuthentication yes/PasswordAuthentication no/g' /etc/ssh/sshd_config

Restart the sshd service

systemctl restart sshd

Test the login

ssh localhost

Check the process tree to confirm the login: there should be an ssh process after bash

pstree | grep ssh

```
|-sshd---bash
|-sshd-+-sshd---bash---ssh
|      `-sshd---bash-+-grep
```

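Starting and stopping the services

HDFS

Format the HDFS filesystem (once, before the first start; formatting again discards the existing HDFS metadata):

```shell
hdfs namenode -format
```

The older form `hadoop namenode -format` still works in Hadoop 3, but prints a deprecation warning and hands off to `hdfs namenode`.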

Start

start-dfs.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

Stop

stop-dfs.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

YARN

Start

start-yarn.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

Stop

stop-yarn.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

All services at once

Start everything

start-all.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

Stop everything

stop-all.sh --config $HADOOP_INSTALL/etc/hadoop_pseudo

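==You can create a symbolic link named hadoop in $HADOOP_INSTALL/etc that points to hadoop_pseudo, so that start-all.sh starts pseudo-distributed mode without --config. Remember to back up the original hadoop directory first.==

For example:

```shell
mv /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop_alone
ln -s /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop_pseudo /usr/hadoop/hadoop-3.0.0-alpha4/etc/hadoop
```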

After that, the plain ==start-all.sh== and ==stop-all.sh== are enough to start and stop everything.
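Running the two commands of that example a second time would move the symlink itself rather than a real directory. A sketch of a re-runnable switch that can also go back to standalone mode, assuming a POSIX shell and `ln -n`; `use_conf` is a hypothetical helper, and `hadoop_alone` is the backup name from the example.

```shell
# use_conf ETC_DIR TARGET (hypothetical)
# Makes ETC_DIR/hadoop a symlink to ETC_DIR/TARGET. The first time, a real
# ETC_DIR/hadoop directory is moved aside to ETC_DIR/hadoop_alone.
use_conf() {
  local etc_dir="$1" target="$2"
  if [ -d "$etc_dir/hadoop" ] && [ ! -L "$etc_dir/hadoop" ]; then
    if [ -e "$etc_dir/hadoop_alone" ]; then
      echo "$etc_dir/hadoop_alone already exists; not overwriting it" >&2
      return 1
    fi
    mv "$etc_dir/hadoop" "$etc_dir/hadoop_alone" || return 1
  fi
  # -s symbolic, -f replace an existing link, -n do not descend into a link to a directory
  ln -sfn "$etc_dir/$target" "$etc_dir/hadoop"
}

# Usage: switch to pseudo-distributed mode, and later back to standalone
# use_conf "$HADOOP_INSTALL/etc" hadoop_pseudo
# use_conf "$HADOOP_INSTALL/etc" hadoop_alone
```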

Testing

Confirm that the daemons are running:

```shell
jps -l
```

```
7442 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode
11618 sun.tools.jps.Jps
11240 org.apache.hadoop.yarn.server.nodemanager.NodeManager
7227 org.apache.hadoop.hdfs.server.datanode.DataNode
7103 org.apache.hadoop.hdfs.server.namenode.NameNode
11071 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager
```
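Rather than reading the `jps -l` list by eye, a script can check for the five daemon classes shown above. A sketch assuming the two-column `PID CLASS` format of `jps -l`; `check_daemons` is a hypothetical helper.

```shell
# check_daemons (hypothetical): read `jps -l` output on stdin, report each of the five
# pseudo-distributed daemons that is missing, and return non-zero if any is missing.
check_daemons() {
  local out missing=0 cls
  out=$(cat)
  for cls in \
    org.apache.hadoop.hdfs.server.namenode.NameNode \
    org.apache.hadoop.hdfs.server.datanode.DataNode \
    org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode \
    org.apache.hadoop.yarn.server.resourcemanager.ResourceManager \
    org.apache.hadoop.yarn.server.nodemanager.NodeManager
  do
    # Exact match on the second column (the class name), so no regex surprises
    if ! printf '%s\n' "$out" | awk -v c="$cls" '$2 == c { found = 1 } END { exit !found }'; then
      echo "missing: $cls" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# jps -l | check_daemons && echo "all daemons running"
```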

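Open the ResourceManager web UI (default port 8088)

On Windows, enter the address directly in a browser (when browsing from a machine other than the server, replace localhost with the server's IP address):

http://localhost:8088

On Linux you can test with curl:

curl http://localhost:8088/cluster/nodes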
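The web UI may not answer for a few seconds after start-yarn.sh returns. A polling sketch, assuming `curl` is installed; `wait_for_http` is a hypothetical helper, and the usage line targets the ResourceManager REST endpoint `/ws/v1/cluster/info`, served on the same port as the web UI.

```shell
# wait_for_http URL [TRIES] [INTERVAL_SECONDS] (hypothetical)
# Polls URL until curl gets a successful HTTP response; gives up after TRIES attempts.
wait_for_http() {
  local url="$1" tries="${2:-30}" interval="${3:-2}"
  while :; do
    # -s silent, -f fail on HTTP errors (>= 400), -o discard the body, -m per-request timeout
    curl -sf -m 5 -o /dev/null "$url" && return 0
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] || return 1
    sleep "$interval"
  done
}

# Usage:
# wait_for_http http://localhost:8088/ws/v1/cluster/info && curl -s http://localhost:8088/ws/v1/cluster/info
```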

Create a directory and list it

Create

hadoop fs -mkdir /test

List

hadoop fs -ls /

drwxr-xr-x - root supergroup 0 2017-09-16 16:10 /test
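Creating a directory only touches NameNode metadata; writing and reading a file back also goes through the DataNode. A round-trip smoke test sketch, assuming HDFS is running and `/test` exists; `hdfs_roundtrip` and the file name `roundtrip.txt` are hypothetical.

```shell
# hdfs_roundtrip [DIR] (hypothetical smoke test)
# Uploads a small local file to DIR/roundtrip.txt in HDFS (default /test), reads it back,
# compares the bytes, removes the HDFS copy, and returns 0 only if the contents matched.
hdfs_roundtrip() {
  local dir="${1:-/test}" tmp rc
  tmp=$(mktemp) || return 1
  echo "hello hdfs $(date +%s)" > "$tmp"
  # -put -f overwrites an existing file; cmp -s - compares stdin with the local file
  hadoop fs -put -f "$tmp" "$dir/roundtrip.txt" &&
    hadoop fs -cat "$dir/roundtrip.txt" | cmp -s - "$tmp"
  rc=$?
  hadoop fs -rm -f "$dir/roundtrip.txt" >/dev/null 2>&1
  rm -f "$tmp"
  return "$rc"
}

# Usage:
# hdfs_roundtrip /test && echo "HDFS read/write OK"
```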
