FFmpeg Source Code Analysis - Framework: process_input()

2017-09-17 00:32


The process_input() function is defined in ffmpeg.c.



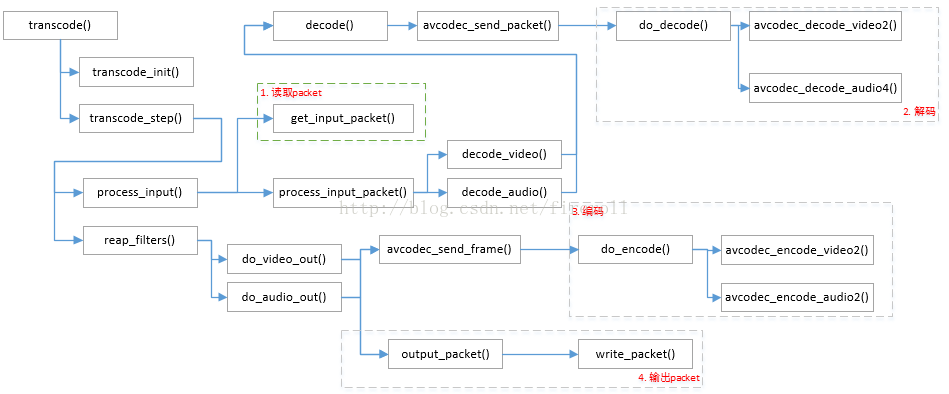

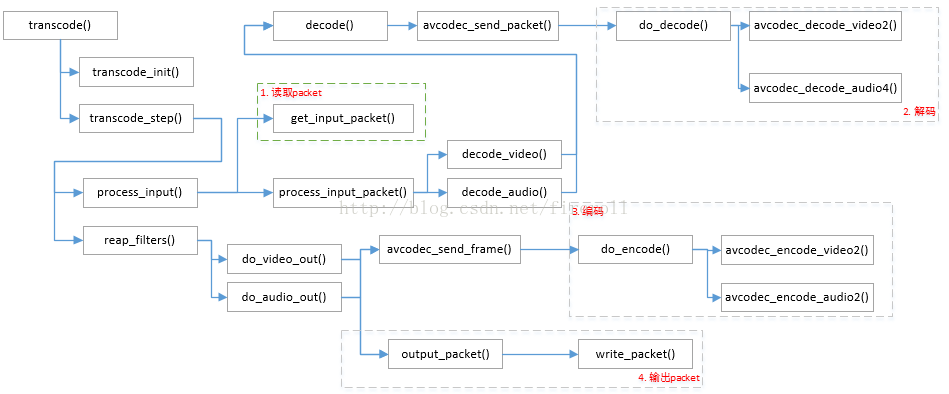

1. Function Overview

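process_input() reads one packet from the given input file and corrects its timestamps. It then passes the packet to process_input_packet(), which decodes it and/or hands it to the stream-copy path.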
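process_input() handles only one packet per call, so its caller keeps invoking it. The return-value comment in the listing in section 3 defines the contract:

- 0 means a packet was processed.
- AVERROR(EAGAIN) means try again later.
- AVERROR_EOF means the file is finished and must not be read again.

The sketch below models only that contract. `FakeInput`, `fake_process_input()` and `drain_inputs()` are hypothetical, and the placeholder status codes stand in for FFmpeg's AVERROR macros. This is not ffmpeg.c's real transcode loop, which decides which file to read next from the state of the outputs.

```c
#include <assert.h>
#include <stdbool.h>

/* Placeholder codes standing in for AVERROR(EAGAIN) and AVERROR_EOF. */
#define SKETCH_EAGAIN (-11)
#define SKETCH_EOF    (-1000)

/* Hypothetical input: yields `packets` packets, but first reports
 * "nothing available yet" `stalls` times. */
typedef struct {
    int packets;
    int stalls;
    bool finished;
} FakeInput;

/* Mimics the documented return contract of process_input(). */
static int fake_process_input(FakeInput *f)
{
    if (f->stalls > 0) {
        f->stalls--;
        return SKETCH_EAGAIN;   /* no packet now, call again later */
    }
    if (f->packets > 0) {
        f->packets--;
        return 0;               /* one packet read and processed */
    }
    return SKETCH_EOF;          /* must not be called again */
}

/* Polls every unfinished input until all of them report EOF.
 * Returns the number of packets processed. */
static int drain_inputs(FakeInput *files, int nb_files)
{
    int processed = 0;
    int remaining = nb_files;

    assert(nb_files >= 0);
    while (remaining > 0) {
        for (int i = 0; i < nb_files; i++) {
            if (files[i].finished)
                continue;
            int ret = fake_process_input(&files[i]);
            if (ret == 0) {
                processed++;
            } else if (ret == SKETCH_EOF) {
                files[i].finished = true;
                remaining--;
            }
            /* SKETCH_EAGAIN: skip this file until the next round */
        }
    }
    return processed;
}
```

With two inputs holding 3 and 2 packets, `drain_inputs()` processes 5 packets in total, even if the first input stalls once.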

2. Call Structure Diagram

3. Code Analysis

```c
/*
 * input_streams is filled in by open_input_file() -> add_input_streams():
 *
 * // Add all the streams from the given input file to the global
 * // list of input streams.
 * static void add_input_streams(OptionsContext *o, AVFormatContext *ic)
 * {
 *     for (i = 0; i < ic->nb_streams; i++) {
 *         InputStream *ist = av_mallocz(sizeof(*ist));
 *
 *         GROW_ARRAY(input_streams, nb_input_streams);
 *         input_streams[nb_input_streams - 1] = ist;
 *     }
 */
InputStream **input_streams = NULL;
```
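input_streams is a single flat array shared by all input files. That is why process_input() looks up a packet's stream as `input_streams[ifile->ist_index + pkt.stream_index]`, where ist_index is the position of the file's first stream in that array.

A minimal sketch of this layout follows. The `Sketch*` types and the helper functions are hypothetical simplifications: they use an array of structs instead of ffmpeg.c's array of `InputStream` pointers, and `realloc` in place of `GROW_ARRAY`.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-ins for InputStream / InputFile. */
typedef struct {
    int file_index;     /* which input file the stream belongs to */
    int stream_index;   /* index inside that file (AVPacket.stream_index) */
} SketchInputStream;

typedef struct {
    int ist_index;      /* position of the file's first stream in g_streams */
    int nb_streams;
} SketchInputFile;

static SketchInputStream *g_streams = NULL;
static int g_nb_streams = 0;

/* Appends all streams of one file to the global array, in the spirit of
 * add_input_streams(). Returns 0 on success, -1 on allocation failure. */
static int add_file_streams(SketchInputFile *f, int file_index, int nb_streams)
{
    assert(nb_streams > 0);
    SketchInputStream *tmp = realloc(g_streams,
        (size_t)(g_nb_streams + nb_streams) * sizeof(*tmp));
    if (!tmp)
        return -1;
    g_streams = tmp;

    f->ist_index  = g_nb_streams;   /* first slot used by this file */
    f->nb_streams = nb_streams;
    for (int i = 0; i < nb_streams; i++) {
        g_streams[g_nb_streams].file_index   = file_index;
        g_streams[g_nb_streams].stream_index = i;
        g_nb_streams++;
    }
    return 0;
}

/* The lookup done in process_input(): file offset + per-file index. */
static SketchInputStream *lookup_stream(const SketchInputFile *f,
                                        int pkt_stream_index)
{
    assert(pkt_stream_index >= 0 && pkt_stream_index < f->nb_streams);
    return &g_streams[f->ist_index + pkt_stream_index];
}
```

If file 0 has two streams and file 1 has three, file 1's ist_index is 2. Its packet with stream_index 1 therefore maps to global slot 3.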

```c
/*
 * Return
 * - 0 -- one packet was read and processed
 * - AVERROR(EAGAIN) -- no packets were available for selected file,
 *   this function should be called again
 * - AVERROR_EOF -- this function should not be called again
 */
static int process_input(int file_index)
{
    InputFile *ifile = input_files[file_index];
    AVFormatContext *is;
    InputStream *ist;
    AVPacket pkt;
    int ret, i, j;
    int64_t duration;
    int64_t pkt_dts;

    is = ifile->ctx;
    /* Read one packet (for video usually one frame; for audio it may hold several frames) */
    ret = get_input_packet(ifile, &pkt);
    /* Omitted in this excerpt: the original checks ret here before using pkt.
     * AVERROR(EAGAIN) is returned to the caller; at end of file each decoded
     * stream is flushed with process_input_packet(ist, NULL, 0). */

    reset_eagain();

    /* Map the per-file stream index into the global input_streams array */
    ist = input_streams[ifile->ist_index + pkt.stream_index];

    ist->data_size += pkt.size;
    ist->nb_packets++;

    if (!ist->wrap_correction_done && is->start_time != AV_NOPTS_VALUE && ist->st->pts_wrap_bits < 64) {
        int64_t stime, stime2;
        // Correcting starttime based on the enabled streams
        stime  = av_rescale_q(is->start_time, AV_TIME_BASE_Q, ist->st->time_base);
        stime2 = stime + (1ULL << ist->st->pts_wrap_bits);
        ist->wrap_correction_done = 1;

        if (stime2 > stime && pkt.dts != AV_NOPTS_VALUE && pkt.dts > stime + (1LL << (ist->st->pts_wrap_bits - 1))) {
            pkt.dts -= 1ULL << ist->st->pts_wrap_bits;
            ist->wrap_correction_done = 0;
        }
        if (stime2 > stime && pkt.pts != AV_NOPTS_VALUE && pkt.pts > stime + (1LL << (ist->st->pts_wrap_bits - 1))) {
            pkt.pts -= 1ULL << ist->st->pts_wrap_bits;
            ist->wrap_correction_done = 0;
        }
    }

    /* Apply the file's timestamp offset and the per-stream ts_scale.
     * ifile->ts_offset is kept in AV_TIME_BASE units, so it is rescaled to
     * the stream time base; the packet timestamps stay in ist->st->time_base. */
    if (pkt.dts != AV_NOPTS_VALUE)
        pkt.dts += av_rescale_q(ifile->ts_offset, AV_TIME_BASE_Q, ist->st->time_base);
    if (pkt.pts != AV_NOPTS_VALUE)
        pkt.pts += av_rescale_q(ifile->ts_offset, AV_TIME_BASE_Q, ist->st->time_base);

    if (pkt.pts != AV_NOPTS_VALUE)
        pkt.pts *= ist->ts_scale;
    if (pkt.dts != AV_NOPTS_VALUE)
        pkt.dts *= ist->ts_scale;

    pkt_dts = av_rescale_q_rnd(pkt.dts, ist->st->time_base, AV_TIME_BASE_Q, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
    if ((ist->dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO ||
         ist->dec_ctx->codec_type == AVMEDIA_TYPE_AUDIO) &&
        pkt_dts != AV_NOPTS_VALUE && ist->next_dts == AV_NOPTS_VALUE && !copy_ts
        && (is->iformat->flags & AVFMT_TS_DISCONT) && ifile->last_ts != AV_NOPTS_VALUE) {
        int64_t delta = pkt_dts - ifile->last_ts;
        if (delta < -1LL*dts_delta_threshold*AV_TIME_BASE ||
            delta >  1LL*dts_delta_threshold*AV_TIME_BASE) {
            ifile->ts_offset -= delta;
            av_log(NULL, AV_LOG_DEBUG,
                   "Inter stream timestamp discontinuity %"PRId64", new offset= %"PRId64"\n",
                   delta, ifile->ts_offset);
            pkt.dts -= av_rescale_q(delta, AV_TIME_BASE_Q, ist->st->time_base);
            if (pkt.pts != AV_NOPTS_VALUE)
                pkt.pts -= av_rescale_q(delta, AV_TIME_BASE_Q, ist->st->time_base);
        }
    }

    /* Shift timestamps by ifile->duration (rescaled to the stream time base).
     * With -stream_loop this is the duration of the file at the moment
     * looping happens, so each new pass continues after the previous one. */
    duration = av_rescale_q(ifile->duration, ifile->time_base, ist->st->time_base);
    if (pkt.pts != AV_NOPTS_VALUE) {
        pkt.pts += duration;
        ist->max_pts = FFMAX(pkt.pts, ist->max_pts);
        ist->min_pts = FFMIN(pkt.pts, ist->min_pts);
    }

    if (pkt.dts != AV_NOPTS_VALUE)
        pkt.dts += duration;

    pkt_dts = av_rescale_q_rnd(pkt.dts, ist->st->time_base, AV_TIME_BASE_Q, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
    if ((ist->dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO ||
         ist->dec_ctx->codec_type == AVMEDIA_TYPE_AUDIO) &&
        pkt_dts != AV_NOPTS_VALUE && ist->next_dts != AV_NOPTS_VALUE &&
        !copy_ts) {
        int64_t delta = pkt_dts - ist->next_dts;
        if (is->iformat->flags & AVFMT_TS_DISCONT) {
            if (delta < -1LL*dts_delta_threshold*AV_TIME_BASE ||
                delta >  1LL*dts_delta_threshold*AV_TIME_BASE ||
                pkt_dts + AV_TIME_BASE/10 < FFMAX(ist->pts, ist->dts)) {
                ifile->ts_offset -= delta;
                av_log(NULL, AV_LOG_DEBUG,
                       "timestamp discontinuity %"PRId64", new offset= %"PRId64"\n",
                       delta, ifile->ts_offset);
                pkt.dts -= av_rescale_q(delta, AV_TIME_BASE_Q, ist->st->time_base);
                if (pkt.pts != AV_NOPTS_VALUE)
                    pkt.pts -= av_rescale_q(delta, AV_TIME_BASE_Q, ist->st->time_base);
            }
        } else {
            if (delta < -1LL*dts_error_threshold*AV_TIME_BASE ||
                delta >  1LL*dts_error_threshold*AV_TIME_BASE) {
                av_log(NULL, AV_LOG_WARNING, "DTS %"PRId64", next:%"PRId64" st:%d invalid dropping\n", pkt.dts, ist->next_dts, pkt.stream_index);
                pkt.dts = AV_NOPTS_VALUE;
            }
            if (pkt.pts != AV_NOPTS_VALUE) {
                int64_t pkt_pts = av_rescale_q(pkt.pts, ist->st->time_base, AV_TIME_BASE_Q);
                delta = pkt_pts - ist->next_dts;
                if (delta < -1LL*dts_error_threshold*AV_TIME_BASE ||
                    delta >  1LL*dts_error_threshold*AV_TIME_BASE) {
                    av_log(NULL, AV_LOG_WARNING, "PTS %"PRId64", next:%"PRId64" invalid dropping st:%d\n", pkt.pts, ist->next_dts, pkt.stream_index);
                    pkt.pts = AV_NOPTS_VALUE;
                }
            }
        }
    }

    if (pkt.dts != AV_NOPTS_VALUE)
        ifile->last_ts = av_rescale_q(pkt.dts, ist->st->time_base, AV_TIME_BASE_Q);

    sub2video_heartbeat(ist, pkt.pts);

    /* Hand the packet to process_input_packet(), which decodes and/or stream-copies it */
    process_input_packet(ist, &pkt, 0);

discard_packet:
    /* Reached via goto from checks omitted in this excerpt (e.g. packets of
     * streams that appeared after the file was opened, or of discarded streams) */
    av_packet_unref(&pkt);

    return 0;
}
```
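Three pieces of arithmetic in process_input() can be tried in isolation:

- **Time-base rescaling.** This is what av_rescale_q() does when ifile->ts_offset, kept in AV_TIME_BASE microseconds, is added to a packet timestamp in the stream time base.
- **The pts_wrap_bits correction.**
- **The dts_delta_threshold discontinuity test.**

The sketch below restates them with plain integers. `Rational`, `rescale_q_near()`, `unwrap_ts()` and `is_ts_discontinuity()` are hypothetical names. Two limitations apply: the rescale helper can overflow for large inputs (av_rescale_q() does not), and the discontinuity test leaves out the extra `pkt_dts + AV_TIME_BASE/10 < FFMAX(ist->pts, ist->dts)` condition.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical minimal stand-in for AVRational. */
typedef struct {
    int num;
    int den;
} Rational;

/* a * bq / cq, rounded to nearest with halfway cases away from zero
 * (the AV_ROUND_NEAR_INF rule). Unlike av_rescale_q(), this simple
 * version overflows if a * bq.num * cq.den does not fit in int64_t. */
static int64_t rescale_q_near(int64_t a, Rational bq, Rational cq)
{
    int64_t num = a * bq.num * cq.den;
    int64_t den = (int64_t)bq.den * cq.num;

    assert(den > 0);
    if (num >= 0)
        return (num + den / 2) / den;
    return -((-num + den / 2) / den);
}

/* pts_wrap_bits correction: a timestamp more than half a wrap period
 * above the start time (both in the stream time base) is taken to be
 * from before the counter wrapped, so one full period is subtracted. */
static int64_t unwrap_ts(int64_t ts, int64_t stime, int wrap_bits)
{
    assert(wrap_bits > 0 && wrap_bits < 62);
    if (ts > stime + (INT64_C(1) << (wrap_bits - 1)))
        ts -= INT64_C(1) << wrap_bits;
    return ts;
}

/* Discontinuity test for AVFMT_TS_DISCONT formats: nonzero when the DTS
 * jump (in microseconds) exceeds threshold_sec in either direction.
 * process_input() then subtracts the jump from ifile->ts_offset. */
static int is_ts_discontinuity(int64_t pkt_dts_us, int64_t next_dts_us,
                               double threshold_sec)
{
    int64_t delta = pkt_dts_us - next_dts_us;
    int64_t limit = (int64_t)(threshold_sec * 1000000.0);

    return delta < -limit || delta > limit;
}
```

As a worked example, MPEG-TS uses 33-bit timestamps in a 1/90000 time base. In a file starting at 900, a packet stamped 2^33 - 1800 is unwrapped to -1800. Likewise, a ts_offset of -1 s (-1000000 µs) becomes -90000 ticks.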

4.1 process_input_packet()
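The core of process_input_packet() is its `while (ist->decoding_needed)` loop:

- On the first pass it feeds the packet to the decoder.
- On later passes (`repeating`) it passes NULL, so frames the decoder still holds come out. It stops as soon as no frame is produced.
- When pkt is NULL (EOF), it takes only one frame per call.

The mock below reproduces just that control flow. `MockDecoder`, `mock_decode()` and `decode_packet()` are hypothetical stand-ins for decode_audio()/decode_video(), and the mock decoder simply releases a fixed number of frames per packet.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical decoder: each packet yields frames_per_packet frames,
 * handed out one per call. */
typedef struct {
    int frames_per_packet;
    int pending;            /* frames decoded but not yet returned */
} MockDecoder;

/* pkt == NULL asks for more buffered output without feeding new data. */
static int mock_decode(MockDecoder *dec, const int *pkt, bool *got_output)
{
    if (pkt)
        dec->pending += dec->frames_per_packet;
    *got_output = dec->pending > 0;
    if (*got_output)
        dec->pending--;
    return 0;
}

/* Shape of the decode loop: send the packet once, then keep asking with
 * NULL until no frame comes out; at EOF (pkt == NULL) stop after one frame.
 * Returns the number of frames produced by this call. */
static int decode_packet(MockDecoder *dec, const int *pkt)
{
    bool repeating = false;
    int frames = 0;

    for (;;) {
        bool got_output = false;
        int ret = mock_decode(dec, repeating ? NULL : pkt, &got_output);
        if (ret < 0 || !got_output)
            break;
        frames++;
        if (!pkt)           /* draining: one frame per call */
            break;
        repeating = true;
    }
    return frames;
}
```

A packet that decodes to three frames yields all three in one call. At EOF, each call drains a single buffered frame until none are left.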

```c
/* pkt = NULL means EOF (needed to flush decoder buffers) */
static int process_input_packet(InputStream *ist, const AVPacket *pkt, int no_eof)
{
    int ret = 0, i;
    int repeating = 0;
    int eof_reached = 0;

    AVPacket avpkt;
    if (!ist->saw_first_ts) {
        ist->dts = ist->st->avg_frame_rate.num ? - ist->dec_ctx->has_b_frames * AV_TIME_BASE / av_q2d(ist->st->avg_frame_rate) : 0;
        ist->pts = 0;
        if (pkt && pkt->pts != AV_NOPTS_VALUE && !ist->decoding_needed) {
            ist->dts += av_rescale_q(pkt->pts, ist->st->time_base, AV_TIME_BASE_Q);
            ist->pts = ist->dts; //unused but better to set it to a value thats not totally wrong
        }
        ist->saw_first_ts = 1;
    }

    if (ist->next_dts == AV_NOPTS_VALUE)
        ist->next_dts = ist->dts;
    if (ist->next_pts == AV_NOPTS_VALUE)
        ist->next_pts = ist->pts;

    if (!pkt) {
        /* EOF handling */
        av_init_packet(&avpkt);
        avpkt.data = NULL;
        avpkt.size = 0;
    } else {
        avpkt = *pkt;
    }

    if (pkt && pkt->dts != AV_NOPTS_VALUE) {
        ist->next_dts = ist->dts = av_rescale_q(pkt->dts, ist->st->time_base, AV_TIME_BASE_Q);
        if (ist->dec_ctx->codec_type != AVMEDIA_TYPE_VIDEO || !ist->decoding_needed)
            ist->next_pts = ist->pts = ist->dts;
    }

    // while we have more to decode or while the decoder did output something on EOF
    while (ist->decoding_needed) {
        int duration = 0;
        int got_output = 0;

        ist->pts = ist->next_pts;
        ist->dts = ist->next_dts;

        /* Decode the packet with the decoder matching the stream type: audio, video or subtitle */
        switch (ist->dec_ctx->codec_type) {
        case AVMEDIA_TYPE_AUDIO:
            ret = decode_audio(ist, repeating ? NULL : &avpkt, &got_output);
            break;
        case AVMEDIA_TYPE_VIDEO:
            ret = decode_video(ist, repeating ? NULL : &avpkt, &got_output, !pkt);
            if (!repeating || !pkt || got_output) {
                if (pkt && pkt->duration) {
                    duration = av_rescale_q(pkt->duration, ist->st->time_base, AV_TIME_BASE_Q);
                } else if (ist->dec_ctx->framerate.num != 0 && ist->dec_ctx->framerate.den != 0) {
                    int ticks = av_stream_get_parser(ist->st) ? av_stream_get_parser(ist->st)->repeat_pict + 1 : ist->dec_ctx->ticks_per_frame;
                    duration = ((int64_t)AV_TIME_BASE *
                                ist->dec_ctx->framerate.den * ticks) /
                                ist->dec_ctx->framerate.num / ist->dec_ctx->ticks_per_frame;
                }

                if (ist->dts != AV_NOPTS_VALUE && duration) {
                    ist->next_dts += duration;
                } else
                    ist->next_dts = AV_NOPTS_VALUE;
            }

            if (got_output)
                ist->next_pts += duration; //FIXME the duration is not correct in some cases
            break;
        case AVMEDIA_TYPE_SUBTITLE:
            if (repeating)
                break;
            ret = transcode_subtitles(ist, &avpkt, &got_output);
            if (!pkt && ret >= 0)
                ret = AVERROR_EOF;
            break;
        default:
            return -1;
        }

        if (ret == AVERROR_EOF) {
            eof_reached = 1;
            break;
        }

        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Error while decoding stream #%d:%d: %s\n",
                   ist->file_index, ist->st->index, av_err2str(ret));
            if (exit_on_error)
                exit_program(1);
            // Decoding might not terminate if we're draining the decoder, and
            // the decoder keeps returning an error.
            // This should probably be considered a libavcodec issue.
            // Sample: fate-vsynth1-dnxhd-720p-hr-lb
            if (!pkt)
                eof_reached = 1;
            break;
        }

        if (!got_output)
            break;

        // During draining, we might get multiple output frames in this loop.
        // ffmpeg.c does not drain the filter chain on configuration changes,
        // which means if we send multiple frames at once to the filters, and
        // one of those frames changes configuration, the buffered frames will
        // be lost. This can upset certain FATE tests.
        // Decode only 1 frame per call on EOF to appease these FATE tests.
        // The ideal solution would be to rewrite decoding to use the new
        // decoding API in a better way.
        if (!pkt)
            break;

        repeating = 1;
    }

    /* after flushing, send an EOF on all the filter inputs attached to the stream */
    /* except when looping we need to flush but not to send an EOF */
    if (!pkt && ist->decoding_needed && eof_reached && !no_eof) {
        int ret = send_filter_eof(ist);
        if (ret < 0) {
            av_log(NULL, AV_LOG_FATAL, "Error marking filters as finished\n");
            exit_program(1);
        }
    }

    /* handle stream copy */
    /* ... */

    for (i = 0; pkt && i < nb_output_streams; i++) {
        OutputStream *ost = output_streams[i];

        if (!check_output_constraints(ist, ost) || ost->encoding_needed)
            continue;

        do_streamcopy(ist, ost, pkt);
    }

    return !eof_reached;
}
```
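Two formulas in process_input_packet() are easier to follow with concrete numbers:

- **Initial DTS.** Before the first timestamp is seen, ist->dts starts has_b_frames frame periods before zero: `-has_b_frames * AV_TIME_BASE / av_q2d(avg_frame_rate)`.
- **Fallback frame duration.** When a video packet carries no duration, the frame duration falls back to `AV_TIME_BASE * framerate.den * ticks / framerate.num / ticks_per_frame`. Here ticks is the parser's repeat_pict + 1 if a parser exists, otherwise ticks_per_frame.

The helpers below restate both in microseconds. The function names and `SKETCH_TIME_BASE` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Same value as AV_TIME_BASE: timestamps in microseconds. */
#define SKETCH_TIME_BASE 1000000

/* Initial ist->dts: has_b_frames frame periods before zero, or 0 when
 * avg_frame_rate is unknown (numerator 0). The double result is truncated
 * toward zero, as the assignment to an int64_t in ffmpeg.c does. */
static int64_t initial_dts_us(int has_b_frames, int fps_num, int fps_den)
{
    if (fps_num == 0)
        return 0;
    assert(fps_den != 0);
    double fps = (double)fps_num / fps_den;   /* like av_q2d() */
    return (int64_t)(-has_b_frames * SKETCH_TIME_BASE / fps);
}

/* Fallback video frame duration in microseconds when pkt->duration is 0.
 * Returns 0 when the frame rate is unknown, which leaves duration at 0. */
static int64_t frame_duration_us(int fr_num, int fr_den, int ticks,
                                 int ticks_per_frame)
{
    if (fr_num == 0 || fr_den == 0)
        return 0;
    assert(ticks_per_frame > 0);
    return ((int64_t)SKETCH_TIME_BASE * fr_den * ticks) / fr_num / ticks_per_frame;
}
```

At 25 fps the fallback gives 40000 µs per frame when ticks equals ticks_per_frame. A stream with has_b_frames = 2 starts its DTS at -80000 µs.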
