caffe代码详细注解--init

2017-09-07 14:13

267 查看

Caffe net:init()函数代码详细注解

Caffe 中net的初始化函数init()是整个网络创建的关键函数。在此对此函数做详细的梳理。

[cpp] view

plain copy

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

scale: 0.00390625

}

data_param {

source: "examples/mnist/mnist_train_lmdb"

batch_size: 64

backend: LMDB

}

}//在test计算中,此层就会调用函数FilterNet()被过滤掉

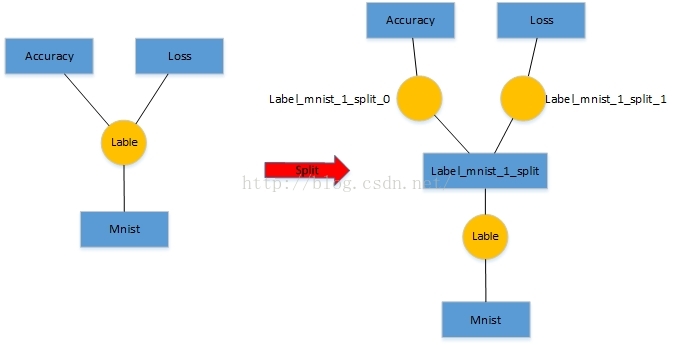

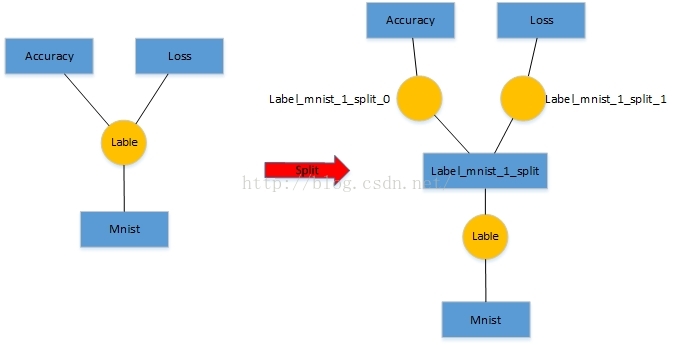

例如:LeNet网络中的数据层的top label blob对应两个输入层,分别是accuracy层和loss层,那么需要在数据层在插入一层。如下图:

数据层之上插入了一个新的层,label_mnist_1_split层,为该层的创建两个top blob分别为,Label_mnist_1_split_0和Label_mnist_1_split_1。

plain copy

template <typename Dtype>

void Net<Dtype>::Init(const NetParameter& in_param) {

CHECK(Caffe::root_solver() || root_net_)

<< "root_net_ needs to be set for all non-root solvers";

// Set phase from the state.

phase_ = in_param.state().phase();

// Filter layers based on their include/exclude rules and

// the current NetState.

NetParameter filtered_param;

/*将in_param中的某些不符合规则的层去掉*/

FilterNet(in_param, &filtered_param);

LOG_IF(INFO, Caffe::root_solver())

<< "Initializing net from parameters: " << std::endl

<< filtered_param.DebugString();

// Create a copy of filtered_param with splits added where necessary.

NetParameter param;

/*

*调用InsertSplits()函数,对于底层的一个输出blob对应多个上层的情况,

*则要在加入分裂层,形成新的网络。

**/

InsertSplits(filtered_param, ¶m);

/*

*以上部分只是根据 *.prototxt文件,确定网络name 和 blob的name的连接情况,

*下面部分是层以及层间的blob的创建,函数ApendTop()中间blob的实例化

*函数layer->SetUp()分配中间层blob的内存空间

*appendparam()

*/

// Basically, build all the layers and set up their connections.

name_ = param.name();

map<string, int> blob_name_to_idx;

set<string> available_blobs;

memory_used_ = 0;

// For each layer, set up its input and output

bottom_vecs_.resize(param.layer_size());//存每一层的输入(bottom)blob指针

top_vecs_.resize(param.layer_size());//存每一层输出(top)的blob指针

bottom_id_vecs_.resize(param.layer_size());//存每一层输入(bottom)blob的id

param_id_vecs_.resize(param.layer_size());//存每一层参数blob的id

top_id_vecs_.resize(param.layer_size());//存每一层输出(top)的blob的id

bottom_need_backward_.resize(param.layer_size());//该blob是需要返回的bool值

//(很大的一个for循环)对每一层处理

for (int layer_id = 0; layer_id < param.layer_size(); ++layer_id) {

// For non-root solvers, whether this layer is shared from root_net_.

bool share_from_root = !Caffe::root_solver()

&& root_net_->layers_[layer_id]->ShareInParallel();// ???

// Inherit phase from net if unset.

//如果当前层没有设置phase,则将当前层phase设置为网络net 的phase

if (!param.layer(layer_id).has_phase()) {

param.mutable_layer(layer_id)->set_phase(phase_);

}

// Setup layer.

// param.layers(i)返回的是关于第当前层的参数:

const LayerParameter& layer_param = param.layer(layer_id);

if (layer_param.propagate_down_size() > 0) {

CHECK_EQ(layer_param.propagate_down_size(),

layer_param.bottom_size())

<< "propagate_down param must be specified "

<< "either 0 or bottom_size times ";

}

if (share_from_root) {

LOG(INFO) << "Sharing layer " << layer_param.name() << " from root net";

layers_.push_back(root_net_->layers_[layer_id]);

layers_[layer_id]->SetShared(true);

} else {

/*

*把当前层的参数转换为shared_ptr<Layer<Dtype>>,

*创建一个具体的层,并压入到layers_中

*/

layers_.push_back(LayerRegistry<Dtype>::CreateLayer(layer_param));

}

//把当前层的名字压入到layer_names_:vector<string> layer_names_

layer_names_.push_back(layer_param.name());

LOG_IF(INFO, Caffe::root_solver())

<< "Creating Layer " << layer_param.name();

bool need_backward = false;

// Figure out this layer's input and output

//下面开始产生当前层:分别处理bottom的blob和top的blob两个步骤

//输入bottom blob

for (int bottom_id = 0; bottom_id < layer_param.bottom_size();

++bottom_id) {

const int blob_id = AppendBottom(param, layer_id, bottom_id,

&available_blobs, &blob_name_to_idx);

// If a blob needs backward, this layer should provide it.

/*

*blob_need_backward_,整个网络中,所有非参数blob,是否需要backward。

*注意,这里所说的所有非参数blob其实指的是AppendTop函数中遍历的所有top blob,

*并不是每一层的top+bottom,因为这一层的top就是下一层的bottom,网络是一层一层堆起来的。

*/

need_backward |= blob_need_backward_[blob_id];

}

//输出top blob

int num_top = layer_param.top_size();

for (int top_id = 0; top_id < num_top; ++top_id) {

AppendTop(param, layer_id, top_id, &available_blobs, &blob_name_to_idx);

// Collect Input layer tops as Net inputs.

if (layer_param.type() == "Input") {

const int blob_id = blobs_.size() - 1;

net_input_blob_indices_.push_back(blob_id);

net_input_blobs_.push_back(blobs_[blob_id].get());

}

}

// If the layer specifies that AutoTopBlobs() -> true and the LayerParameter

// specified fewer than the required number (as specified by

// ExactNumTopBlobs() or MinTopBlobs()), allocate them here.

Layer<Dtype>* layer = layers_[layer_id].get();

if (layer->AutoTopBlobs()) {

const int needed_num_top =

std::max(layer->MinTopBlobs(), layer->ExactNumTopBlobs());

for (; num_top < needed_num_top; ++num_top) {

// Add "anonymous" top blobs -- do not modify available_blobs or

// blob_name_to_idx as we don't want these blobs to be usable as input

// to other layers.

AppendTop(param, layer_id, num_top, NULL, NULL);

}

}

// After this layer is connected, set it up.

if (share_from_root) {

// Set up size of top blobs using root_net_

const vector<Blob<Dtype>*>& base_top = root_net_->top_vecs_[layer_id];

const vector<Blob<Dtype>*>& this_top = this->top_vecs_[layer_id];

for (int top_id = 0; top_id < base_top.size(); ++top_id) {

this_top[top_id]->ReshapeLike(*base_top[top_id]);

LOG(INFO) << "Created top blob " << top_id << " (shape: "

<< this_top[top_id]->shape_string() << ") for shared layer "

<< layer_param.name();

}

} else {

// 在 SetUp()中为 appendTop()中创建的Blob分配内存空间

layers_[layer_id]->SetUp(bottom_vecs_[layer_id], top_vecs_[layer_id]);

}

LOG_IF(INFO, Caffe::root_solver())

<< "Setting up " << layer_names_[layer_id];

//每次循环,都会更新向量blob_loss_weights

for (int top_id = 0; top_id < top_vecs_[layer_id].size(); ++top_id) {

//blob_loss_weights_,每次遍历一个layer的时候,都会resize blob_loss_weights_,

//然后调用模板类layer的loss函数返回loss_weight

if (blob_loss_weights_.size() <= top_id_vecs_[layer_id][top_id]) {

blob_loss_weights_.resize(top_id_vecs_[layer_id][top_id] + 1, Dtype(0));

}

//top_id_vecs_中存储的最基本元素是blob_id -> 每一个新的blob都会赋予其一个blob_id,

//但是这个blob_id可能是会有重复的

blob_loss_weights_[top_id_vecs_[layer_id][top_id]] = layer->loss(top_id);

//loss函数返回loss_weight —> 在模板类的SetUp方法中会调用SetLossWeights来设置其私有数据成员loss_,

//里面存储的其实是loss_weight

LOG_IF(INFO, Caffe::root_solver())

<< "Top shape: " << top_vecs_[layer_id][top_id]->shape_string();

if (layer->loss(top_id)) {

LOG_IF(INFO, Caffe::root_solver())

<< " with loss weight " << layer->loss(top_id);

}

//计算所需内存

memory_used_ += top_vecs_[layer_id][top_id]->count();

}

LOG_IF(INFO, Caffe::root_solver())

<< "Memory required for data: " << memory_used_ * sizeof(Dtype);

/*

*以下部分是对 每层的param blob 的处理,主要是AppendParam()函数,

*将param blob 以及blob的ID添加到 params_,param_id_vecs_ 等

*/

const int param_size = layer_param.param_size();

// 层内blob_的数量,即该层有几个权重参数,每个blob内有一个参数,例如;cov层和IP层都有两个参数

const int num_param_blobs = layers_[layer_id]->blobs().size();

//param_size是Layermeter类型对象layer_param中ParamSpec param成员的个数,

//num_param_blobs是一个Layer中learnable parameter blob的个数,param_size <= num_param_blobs

CHECK_LE(param_size, num_param_blobs)

<< "Too many params specified for layer " << layer_param.name();

ParamSpec default_param_spec;

for (int param_id = 0; param_id < num_param_blobs; ++param_id) {

const ParamSpec* param_spec = (param_id < param_size) ? &layer_param.param(param_id) : &default_param_spec;

const bool param_need_backward = param_spec->lr_mult() != 0;

//由param_need_backward来决定need_backward是否为真,

//并且,只要有一次遍历使得need_backward为真,则这个for循环结束后,need_backward也为真

need_backward |= param_need_backward;

layers_[layer_id]->set_param_propagate_down(param_id,

param_need_backward);

}

/*

*添加parameter blob,如果当前layer没有parameter blob(num_param_blobs==0),

*比如ReLU,那么就不进入循环,不添加parameter blob

*AppendParam只是执行为当前layer添加parameter blob的相关工作,

*并不会修改与backward的相关属性

*/

for (int param_id = 0; param_id < num_param_blobs; ++param_id) {

AppendParam(param, layer_id, param_id);

}

// Finally, set the backward flag

layer_need_backward_.push_back(need_backward);

/*

*在上述的AppendTop函数中,在遍历当前层的每一个top blob的时候

*都会将一个false(默认值)压入向量blob_need_backward_。

*在下面的代码中,如果这个layer need backward,则会更新blob_need_backward_

*/

if (need_backward) {

for (int top_id = 0; top_id < top_id_vecs_[layer_id].size(); ++top_id) {

blob_need_backward_[top_id_vecs_[layer_id][top_id]] = true;

}

}

}

/*至此上面部分各个层被创建并启动,下面部分是按后向顺序修正backward设置 */

// Go through the net backwards to determine which blobs contribute to the

// loss. We can skip backward computation for blobs that don't contribute

// to the loss.

// Also checks if all bottom blobs don't need backward computation (possible

// because the skip_propagate_down param) and so we can skip bacward

// computation for the entire layer

/*

*需要注意的是,上述代码中关于backward设置的部分,是按照前向的顺序设置的,

*而下面的代码是按后向顺序修正前向设置的结果。

* 一个layer是否需要backward computation,主要依据两个方面:

* (1)该layer的top blob 是否参与loss的计算;

* (2)该layer的bottom blob 是否需要backward computation,

* 比如Data层一般就不需要backward computation

*/

set<string> blobs_under_loss;

set<string> blobs_skip_backp;

//反向,从后向前

for (int layer_id = layers_.size() - 1; layer_id >= 0; --layer_id) {

bool layer_contributes_loss = false;

bool layer_skip_propagate_down = true;

/*

*为true,则表示当前layer的bottom blob不需要backward computation

*即该层不需要backward computation。

*这个局部变量所表示的意义与caffe.proto里

*message Layerparameter的propagate_down的定义恰好相反。

*/

for (int top_id = 0; top_id < top_vecs_[layer_id].size(); ++top_id) {

//blob_names_整个网络中,所有非参数blob的name

const string& blob_name = blob_names_[top_id_vecs_[layer_id][top_id]];

if (layers_[layer_id]->loss(top_id) ||

(blobs_under_loss.find(blob_name) != blobs_under_loss.end())) {

layer_contributes_loss = true;

}

if (blobs_skip_backp.find(blob_name) == blobs_skip_backp.end()) {

layer_skip_propagate_down = false;

}

if (layer_contributes_loss && !layer_skip_propagate_down)

break;

}

// If this layer can skip backward computation, also all his bottom blobs

// don't need backpropagation

if (layer_need_backward_[layer_id] && layer_skip_propagate_down) {

layer_need_backward_[layer_id] = false;

for (int bottom_id = 0; bottom_id < bottom_vecs_[layer_id].size();

++bottom_id) {

//bottom_need_backward_,整个网络所有网络层的bottom blob是否需要backward

bottom_need_backward_[layer_id][bottom_id] = false;

}

}

if (!layer_contributes_loss) { layer_need_backward_[layer_id] = false; }

if (Caffe::root_solver()) {

if (layer_need_backward_[layer_id]) {

LOG(INFO) << layer_names_[layer_id] << " needs backward computation.";

} else {

LOG(INFO) << layer_names_[layer_id]

<< " does not need backward computation.";

}

}

//修正前向设置的结果

for (int bottom_id = 0; bottom_id < bottom_vecs_[layer_id].size();

++bottom_id) {

if (layer_contributes_loss) {

const string& blob_name =

blob_names_[bottom_id_vecs_[layer_id][bottom_id]];

blobs_under_loss.insert(blob_name);//为blobs_under_loss添加新元素

} else {

bottom_need_backward_[layer_id][bottom_id] = false;

}

if (!bottom_need_backward_[layer_id][bottom_id]) {

const string& blob_name =

blob_names_[bottom_id_vecs_[layer_id][bottom_id]];

blobs_skip_backp.insert(blob_name);

}

}

}

// Handle force_backward if needed.

if (param.force_backward()) {

for (int layer_id = 0; layer_id < layers_.size(); ++layer_id) {

layer_need_backward_[layer_id] = true;

for (int bottom_id = 0;

bottom_id < bottom_need_backward_[layer_id].size(); ++bottom_id) {

bottom_need_backward_[layer_id][bottom_id] =

bottom_need_backward_[layer_id][bottom_id] ||

layers_[layer_id]->AllowForceBackward(bottom_id);

blob_need_backward_[bottom_id_vecs_[layer_id][bottom_id]] =

blob_need_backward_[bottom_id_vecs_[layer_id][bottom_id]] ||

bottom_need_backward_[layer_id][bottom_id];

}

for (int param_id = 0; param_id < layers_[layer_id]->blobs().size();

++param_id) {

layers_[layer_id]->set_param_propagate_down(param_id, true);

}

}

}

// In the end, all remaining blobs are considered output blobs.

for (set<string>::iterator it = available_blobs.begin();

it != available_blobs.end(); ++it) {

LOG_IF(INFO, Caffe::root_solver())

<< "This network produces output " << *it;

net_output_blobs_.push_back(blobs_[blob_name_to_idx[*it]].get());

net_output_blob_indices_.push_back(blob_name_to_idx[*it]);

}

for (size_t blob_id = 0; blob_id < blob_names_.size(); ++blob_id) {

//第一次使用向量blob_names_index_,逐一添加元素,是一个map

blob_names_index_[blob_names_[blob_id]] = blob_id;

}

for (size_t layer_id = 0; layer_id < layer_names_.size(); ++layer_id) {

//第一次使用向量layer_names_index_,逐一添加元素,是一个map

layer_names_index_[layer_names_[layer_id]] = layer_id;

}

ShareWeights();

debug_info_ = param.debug_info();

LOG_IF(INFO, Caffe::root_solver()) << "Network initialization done.";

}

Caffe 中net的初始化函数init()是整个网络创建的关键函数。在此对此函数做详细的梳理。

一、代码的总体介绍

该init()函数中主要包括以下几个函数:1. FilterNet(in_param,&filtered_param);

此函数的作用就是模型参数文件(*.prototxt)中的不符合规则的层去掉。例如:在caffe的examples/mnist中的lenet网络中,如果只是用于网络的前向,则需要将包含train的数据层去掉。如下:[cpp] view

plain copy

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

scale: 0.00390625

}

data_param {

source: "examples/mnist/mnist_train_lmdb"

batch_size: 64

backend: LMDB

}

}//在test计算中,此层就会调用函数FilterNet()被过滤掉

2、InsertSplits(filtered_param,¶m);

此函数作用是,对于底层一个输出blob对应多个上层的情况,则要在加入分裂层,形成新的网络。这么做的主要原因是多个层反传给该blob的梯度需要累加。例如:LeNet网络中的数据层的top label blob对应两个输入层,分别是accuracy层和loss层,那么需要在数据层在插入一层。如下图:

数据层之上插入了一个新的层,label_mnist_1_split层,为该层的创建两个top blob分别为,Label_mnist_1_split_0和Label_mnist_1_split_1。

3、layers_.push_back();

该行代码是把当前层的参数转换为shared_ptr<Layer<Dtype>>,创建一个具体的层,并压入到layers_中4、AppendBottom();

此函数为该层创建bottom blob,由于网络是堆叠而成,即:当前层的输出 bottom是前一层的输出top blob,因此此函数并没没有真正的创建blob,只是在将前一层的指针压入到了bottom_vecs_中。5、AppendTop();

此函数为该层创建top blob,该函数真正的new的一个blob的对象。并将topblob 的指针压入到top_vecs_中6、layers_[layer_id]->SetUp();

前面创建了具体的层,并为层创建了输入bottom blob 和输出top blob。改行代码这是启动该层,setup()函数的功能是为创建的blob分配数据内存空间,如有必要还需要调整该层的输入bottom blob 和输出top blob的shape。7、AppendParam();

对于某些有参数的层,例如:卷基层、全连接层有weight和bias。该函数主要是修改和参数有关的变量,实际的层参数的blob在上面提到的setup()函数中已经创建。如:将层参数blob的指针压入到params_。二、下面是对函数Net:init()的代码的详细注解。

[cpp] viewplain copy

template <typename Dtype>

void Net<Dtype>::Init(const NetParameter& in_param) {

CHECK(Caffe::root_solver() || root_net_)

<< "root_net_ needs to be set for all non-root solvers";

// Set phase from the state.

phase_ = in_param.state().phase();

// Filter layers based on their include/exclude rules and

// the current NetState.

NetParameter filtered_param;

/*将in_param中的某些不符合规则的层去掉*/

FilterNet(in_param, &filtered_param);

LOG_IF(INFO, Caffe::root_solver())

<< "Initializing net from parameters: " << std::endl

<< filtered_param.DebugString();

// Create a copy of filtered_param with splits added where necessary.

NetParameter param;

/*

*调用InsertSplits()函数,对于底层的一个输出blob对应多个上层的情况,

*则要在加入分裂层,形成新的网络。

**/

InsertSplits(filtered_param, ¶m);

/*

*以上部分只是根据 *.prototxt文件,确定网络name 和 blob的name的连接情况,

*下面部分是层以及层间的blob的创建,函数ApendTop()中间blob的实例化

*函数layer->SetUp()分配中间层blob的内存空间

*appendparam()

*/

// Basically, build all the layers and set up their connections.

name_ = param.name();

map<string, int> blob_name_to_idx;

set<string> available_blobs;

memory_used_ = 0;

// For each layer, set up its input and output

bottom_vecs_.resize(param.layer_size());//存每一层的输入(bottom)blob指针

top_vecs_.resize(param.layer_size());//存每一层输出(top)的blob指针

bottom_id_vecs_.resize(param.layer_size());//存每一层输入(bottom)blob的id

param_id_vecs_.resize(param.layer_size());//存每一层参数blob的id

top_id_vecs_.resize(param.layer_size());//存每一层输出(top)的blob的id

bottom_need_backward_.resize(param.layer_size());//该blob是需要返回的bool值

//(很大的一个for循环)对每一层处理

for (int layer_id = 0; layer_id < param.layer_size(); ++layer_id) {

// For non-root solvers, whether this layer is shared from root_net_.

bool share_from_root = !Caffe::root_solver()

&& root_net_->layers_[layer_id]->ShareInParallel();// ???

// Inherit phase from net if unset.

//如果当前层没有设置phase,则将当前层phase设置为网络net 的phase

if (!param.layer(layer_id).has_phase()) {

param.mutable_layer(layer_id)->set_phase(phase_);

}

// Setup layer.

// param.layers(i)返回的是关于第当前层的参数:

const LayerParameter& layer_param = param.layer(layer_id);

if (layer_param.propagate_down_size() > 0) {

CHECK_EQ(layer_param.propagate_down_size(),

layer_param.bottom_size())

<< "propagate_down param must be specified "

<< "either 0 or bottom_size times ";

}

if (share_from_root) {

LOG(INFO) << "Sharing layer " << layer_param.name() << " from root net";

layers_.push_back(root_net_->layers_[layer_id]);

layers_[layer_id]->SetShared(true);

} else {

/*

*把当前层的参数转换为shared_ptr<Layer<Dtype>>,

*创建一个具体的层,并压入到layers_中

*/

layers_.push_back(LayerRegistry<Dtype>::CreateLayer(layer_param));

}

//把当前层的名字压入到layer_names_:vector<string> layer_names_

layer_names_.push_back(layer_param.name());

LOG_IF(INFO, Caffe::root_solver())

<< "Creating Layer " << layer_param.name();

bool need_backward = false;

// Figure out this layer's input and output

//下面开始产生当前层:分别处理bottom的blob和top的blob两个步骤

//输入bottom blob

for (int bottom_id = 0; bottom_id < layer_param.bottom_size();

++bottom_id) {

const int blob_id = AppendBottom(param, layer_id, bottom_id,

&available_blobs, &blob_name_to_idx);

// If a blob needs backward, this layer should provide it.

/*

*blob_need_backward_,整个网络中,所有非参数blob,是否需要backward。

*注意,这里所说的所有非参数blob其实指的是AppendTop函数中遍历的所有top blob,

*并不是每一层的top+bottom,因为这一层的top就是下一层的bottom,网络是一层一层堆起来的。

*/

need_backward |= blob_need_backward_[blob_id];

}

//输出top blob

int num_top = layer_param.top_size();

for (int top_id = 0; top_id < num_top; ++top_id) {

AppendTop(param, layer_id, top_id, &available_blobs, &blob_name_to_idx);

// Collect Input layer tops as Net inputs.

if (layer_param.type() == "Input") {

const int blob_id = blobs_.size() - 1;

net_input_blob_indices_.push_back(blob_id);

net_input_blobs_.push_back(blobs_[blob_id].get());

}

}

// If the layer specifies that AutoTopBlobs() -> true and the LayerParameter

// specified fewer than the required number (as specified by

// ExactNumTopBlobs() or MinTopBlobs()), allocate them here.

Layer<Dtype>* layer = layers_[layer_id].get();

if (layer->AutoTopBlobs()) {

const int needed_num_top =

std::max(layer->MinTopBlobs(), layer->ExactNumTopBlobs());

for (; num_top < needed_num_top; ++num_top) {

// Add "anonymous" top blobs -- do not modify available_blobs or

// blob_name_to_idx as we don't want these blobs to be usable as input

// to other layers.

AppendTop(param, layer_id, num_top, NULL, NULL);

}

}

// After this layer is connected, set it up.

if (share_from_root) {

// Set up size of top blobs using root_net_

const vector<Blob<Dtype>*>& base_top = root_net_->top_vecs_[layer_id];

const vector<Blob<Dtype>*>& this_top = this->top_vecs_[layer_id];

for (int top_id = 0; top_id < base_top.size(); ++top_id) {

this_top[top_id]->ReshapeLike(*base_top[top_id]);

LOG(INFO) << "Created top blob " << top_id << " (shape: "

<< this_top[top_id]->shape_string() << ") for shared layer "

<< layer_param.name();

}

} else {

// 在 SetUp()中为 appendTop()中创建的Blob分配内存空间

layers_[layer_id]->SetUp(bottom_vecs_[layer_id], top_vecs_[layer_id]);

}

LOG_IF(INFO, Caffe::root_solver())

<< "Setting up " << layer_names_[layer_id];

//每次循环,都会更新向量blob_loss_weights

for (int top_id = 0; top_id < top_vecs_[layer_id].size(); ++top_id) {

//blob_loss_weights_,每次遍历一个layer的时候,都会resize blob_loss_weights_,

//然后调用模板类layer的loss函数返回loss_weight

if (blob_loss_weights_.size() <= top_id_vecs_[layer_id][top_id]) {

blob_loss_weights_.resize(top_id_vecs_[layer_id][top_id] + 1, Dtype(0));

}

//top_id_vecs_中存储的最基本元素是blob_id -> 每一个新的blob都会赋予其一个blob_id,

//但是这个blob_id可能是会有重复的

blob_loss_weights_[top_id_vecs_[layer_id][top_id]] = layer->loss(top_id);

//loss函数返回loss_weight —> 在模板类的SetUp方法中会调用SetLossWeights来设置其私有数据成员loss_,

//里面存储的其实是loss_weight

LOG_IF(INFO, Caffe::root_solver())

<< "Top shape: " << top_vecs_[layer_id][top_id]->shape_string();

if (layer->loss(top_id)) {

LOG_IF(INFO, Caffe::root_solver())

<< " with loss weight " << layer->loss(top_id);

}

//计算所需内存

memory_used_ += top_vecs_[layer_id][top_id]->count();

}

LOG_IF(INFO, Caffe::root_solver())

<< "Memory required for data: " << memory_used_ * sizeof(Dtype);

/*

*以下部分是对 每层的param blob 的处理,主要是AppendParam()函数,

*将param blob 以及blob的ID添加到 params_,param_id_vecs_ 等

*/

const int param_size = layer_param.param_size();

// 层内blob_的数量,即该层有几个权重参数,每个blob内有一个参数,例如;cov层和IP层都有两个参数

const int num_param_blobs = layers_[layer_id]->blobs().size();

//param_size是Layermeter类型对象layer_param中ParamSpec param成员的个数,

//num_param_blobs是一个Layer中learnable parameter blob的个数,param_size <= num_param_blobs

CHECK_LE(param_size, num_param_blobs)

<< "Too many params specified for layer " << layer_param.name();

ParamSpec default_param_spec;

for (int param_id = 0; param_id < num_param_blobs; ++param_id) {

const ParamSpec* param_spec = (param_id < param_size) ? &layer_param.param(param_id) : &default_param_spec;

const bool param_need_backward = param_spec->lr_mult() != 0;

//由param_need_backward来决定need_backward是否为真,

//并且,只要有一次遍历使得need_backward为真,则这个for循环结束后,need_backward也为真

need_backward |= param_need_backward;

layers_[layer_id]->set_param_propagate_down(param_id,

param_need_backward);

}

/*

*添加parameter blob,如果当前layer没有parameter blob(num_param_blobs==0),

*比如ReLU,那么就不进入循环,不添加parameter blob

*AppendParam只是执行为当前layer添加parameter blob的相关工作,

*并不会修改与backward的相关属性

*/

for (int param_id = 0; param_id < num_param_blobs; ++param_id) {

AppendParam(param, layer_id, param_id);

}

// Finally, set the backward flag

layer_need_backward_.push_back(need_backward);

/*

*在上述的AppendTop函数中,在遍历当前层的每一个top blob的时候

*都会将一个false(默认值)压入向量blob_need_backward_。

*在下面的代码中,如果这个layer need backward,则会更新blob_need_backward_

*/

if (need_backward) {

for (int top_id = 0; top_id < top_id_vecs_[layer_id].size(); ++top_id) {

blob_need_backward_[top_id_vecs_[layer_id][top_id]] = true;

}

}

}

/*至此上面部分各个层被创建并启动,下面部分是按后向顺序修正backward设置 */

// Go through the net backwards to determine which blobs contribute to the

// loss. We can skip backward computation for blobs that don't contribute

// to the loss.

// Also checks if all bottom blobs don't need backward computation (possible

// because the skip_propagate_down param) and so we can skip bacward

// computation for the entire layer

/*

*需要注意的是,上述代码中关于backward设置的部分,是按照前向的顺序设置的,

*而下面的代码是按后向顺序修正前向设置的结果。

* 一个layer是否需要backward computation,主要依据两个方面:

* (1)该layer的top blob 是否参与loss的计算;

* (2)该layer的bottom blob 是否需要backward computation,

* 比如Data层一般就不需要backward computation

*/

set<string> blobs_under_loss;

set<string> blobs_skip_backp;

//反向,从后向前

for (int layer_id = layers_.size() - 1; layer_id >= 0; --layer_id) {

bool layer_contributes_loss = false;

bool layer_skip_propagate_down = true;

/*

*为true,则表示当前layer的bottom blob不需要backward computation

*即该层不需要backward computation。

*这个局部变量所表示的意义与caffe.proto里

*message Layerparameter的propagate_down的定义恰好相反。

*/

for (int top_id = 0; top_id < top_vecs_[layer_id].size(); ++top_id) {

//blob_names_整个网络中,所有非参数blob的name

const string& blob_name = blob_names_[top_id_vecs_[layer_id][top_id]];

if (layers_[layer_id]->loss(top_id) ||

(blobs_under_loss.find(blob_name) != blobs_under_loss.end())) {

layer_contributes_loss = true;

}

if (blobs_skip_backp.find(blob_name) == blobs_skip_backp.end()) {

layer_skip_propagate_down = false;

}

if (layer_contributes_loss && !layer_skip_propagate_down)

break;

}

// If this layer can skip backward computation, also all his bottom blobs

// don't need backpropagation

if (layer_need_backward_[layer_id] && layer_skip_propagate_down) {

layer_need_backward_[layer_id] = false;

for (int bottom_id = 0; bottom_id < bottom_vecs_[layer_id].size();

++bottom_id) {

//bottom_need_backward_,整个网络所有网络层的bottom blob是否需要backward

bottom_need_backward_[layer_id][bottom_id] = false;

}

}

if (!layer_contributes_loss) { layer_need_backward_[layer_id] = false; }

if (Caffe::root_solver()) {

if (layer_need_backward_[layer_id]) {

LOG(INFO) << layer_names_[layer_id] << " needs backward computation.";

} else {

LOG(INFO) << layer_names_[layer_id]

<< " does not need backward computation.";

}

}

//修正前向设置的结果

for (int bottom_id = 0; bottom_id < bottom_vecs_[layer_id].size();

++bottom_id) {

if (layer_contributes_loss) {

const string& blob_name =

blob_names_[bottom_id_vecs_[layer_id][bottom_id]];

blobs_under_loss.insert(blob_name);//为blobs_under_loss添加新元素

} else {

bottom_need_backward_[layer_id][bottom_id] = false;

}

if (!bottom_need_backward_[layer_id][bottom_id]) {

const string& blob_name =

blob_names_[bottom_id_vecs_[layer_id][bottom_id]];

blobs_skip_backp.insert(blob_name);

}

}

}

// Handle force_backward if needed.

if (param.force_backward()) {

for (int layer_id = 0; layer_id < layers_.size(); ++layer_id) {

layer_need_backward_[layer_id] = true;

for (int bottom_id = 0;

bottom_id < bottom_need_backward_[layer_id].size(); ++bottom_id) {

bottom_need_backward_[layer_id][bottom_id] =

bottom_need_backward_[layer_id][bottom_id] ||

layers_[layer_id]->AllowForceBackward(bottom_id);

blob_need_backward_[bottom_id_vecs_[layer_id][bottom_id]] =

blob_need_backward_[bottom_id_vecs_[layer_id][bottom_id]] ||

bottom_need_backward_[layer_id][bottom_id];

}

for (int param_id = 0; param_id < layers_[layer_id]->blobs().size();

++param_id) {

layers_[layer_id]->set_param_propagate_down(param_id, true);

}

}

}

// In the end, all remaining blobs are considered output blobs.

for (set<string>::iterator it = available_blobs.begin();

it != available_blobs.end(); ++it) {

LOG_IF(INFO, Caffe::root_solver())

<< "This network produces output " << *it;

net_output_blobs_.push_back(blobs_[blob_name_to_idx[*it]].get());

net_output_blob_indices_.push_back(blob_name_to_idx[*it]);

}

for (size_t blob_id = 0; blob_id < blob_names_.size(); ++blob_id) {

//第一次使用向量blob_names_index_,逐一添加元素,是一个map

blob_names_index_[blob_names_[blob_id]] = blob_id;

}

for (size_t layer_id = 0; layer_id < layer_names_.size(); ++layer_id) {

//第一次使用向量layer_names_index_,逐一添加元素,是一个map

layer_names_index_[layer_names_[layer_id]] = layer_id;

}

ShareWeights();

debug_info_ = param.debug_info();

LOG_IF(INFO, Caffe::root_solver()) << "Network initialization done.";

}

相关文章推荐

- 【深度学习:CNN】Batch Normalization解析(2)-- caffe中batch_norm层代码详细注解

- caffe代码详细注解

- caffe中batch_norm层代码详细注解

- java 大数取模(有可运行代码和详细注解)

- [置顶] 简单理解混淆矩阵—Matlab详细代码注解

- 这是我以前copy的关于多线程 4000 下载代码,来源我忘记了,我进行了注解。个人认知,不详细之处还请谅解

- EM算法MATLAB代码及详细注解

- 用caffe对自己的图片进行分类,包含详细代码

- (新手)关于linux管道的代码详细注解

- 双启动详细实现(代码+注解) 转载于汇编网

- caffe源码深入学习5:超级详细的caffe卷积层代码解析

- 五子棋代码详细注解

- 【caffe】Caffe的Python接口-官方教程-00-classification-详细说明(含代码)

- 多线程断点续传(代码,附有详细注解)

- SSD算法及Caffe代码详解(最详细版本)

- 【caffe】Caffe的Python接口-官方教程-01-learning-Lenet-详细说明(含代码)

- paip.java 注解的详细使用代码

- paip.java 注解的详细使用代码

- hibernate+struts2的详细分页代码

- 详细解读Python中的__init__()方法