Writing a Deep Learning Library from Scratch (Part 2): FullyconnecteLayer CPU Implementation

2017-05-11 15:04


Blog: http://blog.csdn.net/hjimce

Weibo: 黄锦池-hjimce  QQ: 1393852684

1. C++ Implementation

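```cpp
#include <Eigen/Dense>

// y = x*w + b
// `static` belongs only on the in-class declaration; repeating it on an
// out-of-class definition (as in CFullyconnecteLayer::forward) does not compile.
void CFullyconnecteLayer::forward(const Eigen::MatrixXf &inputs, const Eigen::MatrixXf &weights,
                                  const Eigen::VectorXf &bais, Eigen::MatrixXf &outputs) {
    outputs = inputs * weights;             // (batch x in) * (in x out) -> (batch x out)
    outputs.rowwise() += bais.transpose();  // add b to every row
}
```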
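The two Eigen lines in forward() can be unrolled into plain loops, which makes the bias broadcast explicit: every one of the N rows receives the same b. The sketch below is framework-free and only illustrative; `Mat` and `fc_forward_naive` are hypothetical names, not part of this library. With the data used in test() the first output row should be (5.38, 5.086), e.g. 1*0.55 + 2*0.75 + 3*0.11 + 3 = 5.38.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: row-major dense matrix stored in a flat std::vector.
struct Mat {
    std::size_t rows, cols;
    std::vector<float> data;
    Mat(std::size_t r, std::size_t c, std::vector<float> d = {})
        : rows(r), cols(c), data(d.empty() ? std::vector<float>(r * c, 0.0f) : d) {
        assert(data.size() == rows * cols);  // initializer must match the shape
    }
    float& operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    float operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Y = X * W + b, with b added to every row (what rowwise() += bais.transpose() does).
// X: N x D, W: D x M, b: length M, result: N x M.
Mat fc_forward_naive(const Mat& x, const Mat& w, const std::vector<float>& b) {
    assert(x.cols == w.rows && w.cols == b.size());
    Mat y(x.rows, w.cols);
    for (std::size_t n = 0; n < x.rows; ++n)
        for (std::size_t m = 0; m < w.cols; ++m) {
            float acc = b[m];                   // bias of output unit m, same for every row n
            for (std::size_t d = 0; d < x.cols; ++d)
                acc += x(n, d) * w(d, m);       // dot(row n of X, column m of W)
            y(n, m) = acc;
        }
    return y;
}
```

Calling `fc_forward_naive(x, w, {3.0f, 2.0f})` with the 4x3 inputs and 3x2 weights from test() yields a 4x2 result; comparing it with the Eigen `outputs` is a cheap way to confirm that the comma initializers filled the matrices row by row as intended.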

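```cpp
// y = x*w + b. Given dy = dL/dy, backpropagation gives
// dw = x.T*dy, dx = dy*w.T, db = column sums of dy (dy summed over the batch).
// `bais` is not needed for the gradients; it is kept for a uniform signature.
void CFullyconnecteLayer::backward(const Eigen::MatrixXf &inputs, const Eigen::MatrixXf &weights,
                                   const Eigen::VectorXf &bais, const Eigen::MatrixXf &d_outputs,
                                   Eigen::MatrixXf &d_inputs, Eigen::MatrixXf &d_weights,
                                   Eigen::VectorXf &d_bais) {
    d_weights = inputs.transpose() * d_outputs;      // (in x batch) * (batch x out)
    d_inputs = d_outputs * weights.transpose();      // (batch x out) * (out x in)
    d_bais = d_outputs.colwise().sum().transpose();  // 1 x out row -> column vector of length out
}
```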
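The gradient formulas used in backward() follow from writing the layer element-wise. With the batch stored as rows of $X$ ($N\times D$), weights $W$ ($D\times M$), bias $b$ (length $M$) and $G=\partial L/\partial Y$ standing for `d_outputs`:

$$
\begin{aligned}
Y_{nm} &= \sum_{d} X_{nd} W_{dm} + b_m \\
\frac{\partial L}{\partial W_{dm}} &= \sum_{n} G_{nm}\,\frac{\partial Y_{nm}}{\partial W_{dm}} = \sum_{n} X_{nd}\, G_{nm}
  &&\Longrightarrow\quad \frac{\partial L}{\partial W} = X^{\top} G \\
\frac{\partial L}{\partial X_{nd}} &= \sum_{m} G_{nm}\,\frac{\partial Y_{nm}}{\partial X_{nd}} = \sum_{m} G_{nm} W_{dm}
  &&\Longrightarrow\quad \frac{\partial L}{\partial X} = G W^{\top} \\
\frac{\partial L}{\partial b_{m}} &= \sum_{n} G_{nm}\,\frac{\partial Y_{nm}}{\partial b_{m}} = \sum_{n} G_{nm}
  &&\Longrightarrow\quad \frac{\partial L}{\partial b} = G^{\top}\mathbf{1}_N
\end{aligned}
$$

The last line is why the bias gradient is the column sum `d_outputs.colwise().sum()` rather than $dy$ itself: $b$ is shared by all $N$ rows, so its gradient accumulates over the batch.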

static void CFullyconnecteLayer::test() {

int batch_size = 4;

int input_size = 3;

int output_size = 2;

Eigen::MatrixXf inputs(batch_size, input_size);

inputs <<1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12;

Eigen::MatrixXf weights(input_size, output_size);

weights << 0.55, 0.88, 0.75, 1.1, 0.11, 0.002;

Eigen::VectorXf bais(output_size);

bais << 3, 2;

Eigen::VectorXf label(batch_size);

label << 1, 0, 1, 1;

Eigen::MatrixXf outputs;//全连接层

forward(inputs, weights, bais, outputs);

Eigen::MatrixXf d_input, d_weights,d_output;

float loss;

CSoftmaxLayer::softmax_loss_forward_backward(outputs, label, d_output, loss);

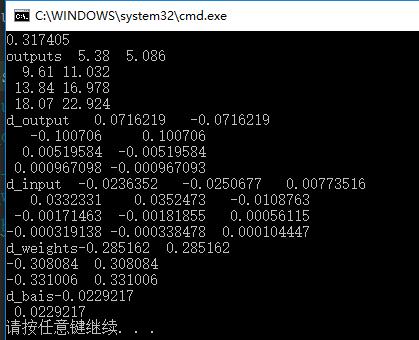

std::cout << loss << std::endl;

Eigen::VectorXf d_bais;

backward(inputs, weights, bais, d_output, d_input, d_weights, d_bais);

std::cout << "outputs" << outputs << std::endl;

std::cout << "d_output" << d_output << std::endl;

std::cout << "d_input" << d_input << std::endl;

std::cout << "d_weights" << d_weights << std::endl;

std::cout << "d_bais"<<d_bais << std::endl;

}二、tensorflow验证:

import tensorflow as tf

inputs=tf.constant([[1,2,3],[4,5,6],[7,8,9],[10,11,12]],shape=(4,3),dtype=tf.float32)

weights=tf.constant([0.55, 0.88, 0.75, 1.1, 0.11, 0.002],shape=(3,2),dtype=tf.float32)

bais=tf.constant([3, 2],dtype=tf.float32)

label=tf.constant([1,0,1,1])

output=tf.matmul(inputs,weights)+bais

one_hot=tf.one_hot(label,2)

predicts=tf.nn.softmax(output)

loss =-tf.reduce_mean(one_hot * tf.log(predicts))

d_output,d_inputs,d_weights,d_bais=tf.gradients(loss,[output,inputs,weights,bais])

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

loss_np,output_np,d_output_np, d_inputs_np, d_weights_np, d_bais_np=sess.run([loss,output,d_output,

d_inputs,d_weights,d_bais])

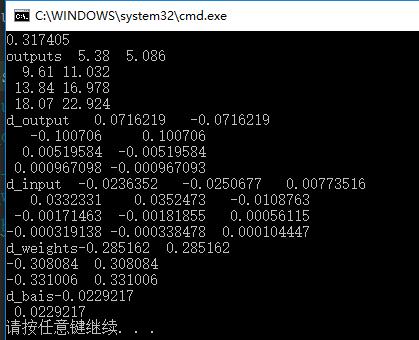

print (loss_np)

print ('output',output_np)

print('d_output', d_output_np)

print ("d_inputs",d_inputs_np)

print("d_weights", d_weights_np)

print("d_bais", d_bais_np)
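If the C++ and TensorFlow printouts disagree, a framework-free gradient check helps localize the error. The sketch below assumes the loss exactly as the TensorFlow script writes it: `reduce_mean` over the 4x2 tensor divides by N*C = 8, so the gradient w.r.t. the logits is (softmax - one_hot)/8. Whether `CSoftmaxLayer::softmax_loss_forward_backward` from Part 1 uses the same normalization is not shown here; if it divides by the batch size only, its loss and `d_output` (and every gradient derived from it) will be exactly twice TensorFlow's. `tf_style_loss`, `fc_backward` and `max_fd_error` are hypothetical helpers written for this illustration; the check compares the backward() formulas against central finite differences in double precision.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // row-major matrices stored flat

// Hypothetical helper: L = -mean(one_hot * log(softmax(X*W + b))), mean over all N*C entries,
// mirroring the TensorFlow script. If d_out is given, it receives dL/d(logits).
double tf_style_loss(const Vec& x, const Vec& w, const Vec& b, const std::vector<int>& label,
                     std::size_t N, std::size_t D, std::size_t C, Vec* d_out = nullptr) {
    double loss = 0.0;
    if (d_out) d_out->assign(N * C, 0.0);
    for (std::size_t n = 0; n < N; ++n) {
        Vec z(C);
        for (std::size_t c = 0; c < C; ++c) {  // logits: row n of X*W + b
            z[c] = b[c];
            for (std::size_t d = 0; d < D; ++d) z[c] += x[n * D + d] * w[d * C + c];
        }
        double zmax = z[0];
        for (double v : z) zmax = std::fmax(zmax, v);
        double sum = 0.0;
        for (double v : z) sum += std::exp(v - zmax);
        for (std::size_t c = 0; c < C; ++c) {
            double logp = z[c] - zmax - std::log(sum);  // numerically stable log-softmax
            double y = (static_cast<int>(c) == label[n]) ? 1.0 : 0.0;
            loss -= y * logp;
            // reduce_mean divides by N*C, so dL/dz = (p - y) / (N*C)
            if (d_out) (*d_out)[n * C + c] = (std::exp(logp) - y) / double(N * C);
        }
    }
    return loss / double(N * C);
}

// Hypothetical helper: the backward() formulas dW = X^T dY, dX = dY W^T, db = column sums of dY.
void fc_backward(const Vec& x, const Vec& w, const Vec& dy, std::size_t N, std::size_t D,
                 std::size_t C, Vec& dx, Vec& dw, Vec& db) {
    dx.assign(N * D, 0.0);
    dw.assign(D * C, 0.0);
    db.assign(C, 0.0);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t c = 0; c < C; ++c) {
            double g = dy[n * C + c];
            db[c] += g;
            for (std::size_t d = 0; d < D; ++d) {
                dw[d * C + c] += x[n * D + d] * g;
                dx[n * D + d] += g * w[d * C + c];
            }
        }
}

// Hypothetical helper: largest |analytic - numeric| over all entries of `param`, using
// central differences. `loss` must read `param` by reference so the perturbation is seen.
template <class LossFn>
double max_fd_error(Vec& param, const Vec& analytic, LossFn loss, double eps = 1e-6) {
    double worst = 0.0;
    for (std::size_t i = 0; i < param.size(); ++i) {
        double saved = param[i];
        param[i] = saved + eps;
        double lp = loss();
        param[i] = saved - eps;
        double lm = loss();
        param[i] = saved;  // restore before the next entry
        worst = std::fmax(worst, std::fabs((lp - lm) / (2 * eps) - analytic[i]));
    }
    return worst;
}
```

Feeding the test() data (inputs 4x3, weights 3x2, bias (3, 2), labels 1, 0, 1, 1) through `tf_style_loss` and `fc_backward`, then calling `max_fd_error` on `w`, `b` and `x`, should give errors far below 1e-6 if the backward formulas are right. Each row of the logit gradient also sums to zero, since the softmax probabilities and the one-hot row both sum to one; this is a quick sanity check for any softmax-loss `d_output`.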

