Java、JFrame制作录制GIF小工具

2017-03-10 12:04

330 查看

和截屏工具类似,选取一块区域截图保存成图片,gif录制就是选取一块区域持续录制,最后保存成gif动画图片。

运行效果

1、选取需要录制的区域(鼠标拖拽选择录制的区域)

2、点击开始按钮开始录制GIF图片(开始录制时,区域边框会闪烁)

3、点击结束按钮保存GIF图片(没错提示就是这么裸露,自己懒得优化)

4、最后生成GIF图片

设计思路

1、如何绘制矩形区域如何绘制用户可见的矩形区域,这是一个小的难点,你可以在JFrame绘制任意的形状,如何不遮挡屏幕的情况下,再屏幕任何地方选取一块区域呢

a、首先画一个和屏幕一样大的透明JFrame。

来看下上面的一段代码,是显示一个和电脑屏幕一样大的透明窗口。

// 设置窗口无标题部分

this.setUndecorated(true);

// 设置透明背景色,0x01000000 ,01表示透明度

// 这里要注意,不能将窗口设置为00(完全透明),如果设置为完全透明,鼠标将无法聚焦当前窗口,也就无法绘制图形了,这里你可以改成00试试看你就明白了。

this.setBackground(new Color(0x01000000, true));

b、 再监听鼠标拖拽事件,画矩形

上面代码就是画一个矩形,是通过设置label的边框、大小和起点位置来画矩形的

if (sx == -1) // sx=-1说明是鼠标第一次按下,设置label的位置,也就是矩形的位置

else // 否则是鼠标按下拖拽的过程,只需要设置label的大小即可形成一个绘制矩形的效果

选取矩形区域这里有个BUG,用了你就知道了,懒得改 :)

2、截屏

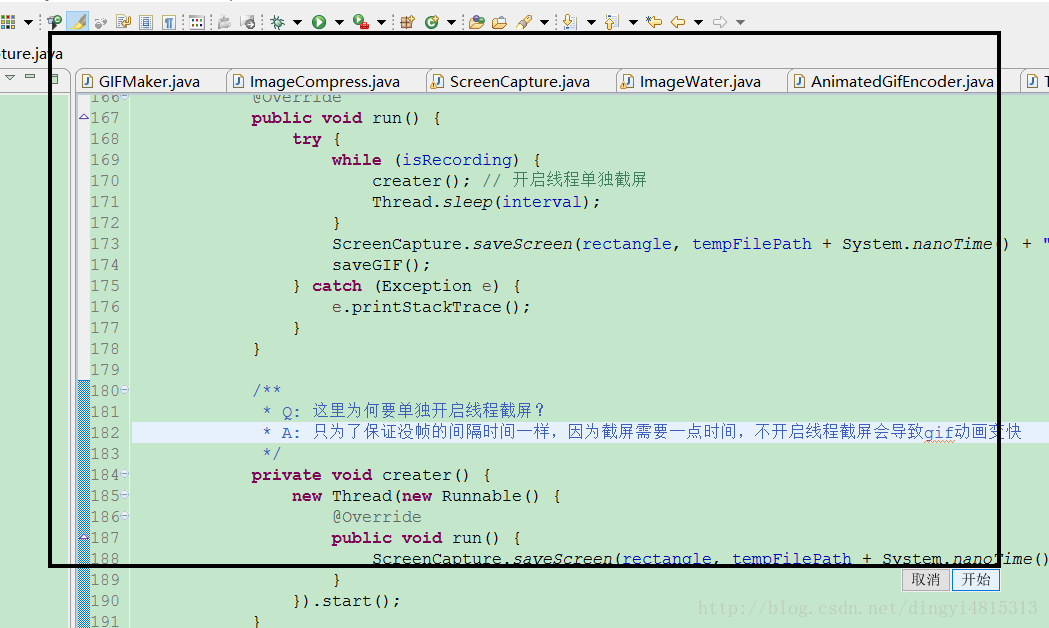

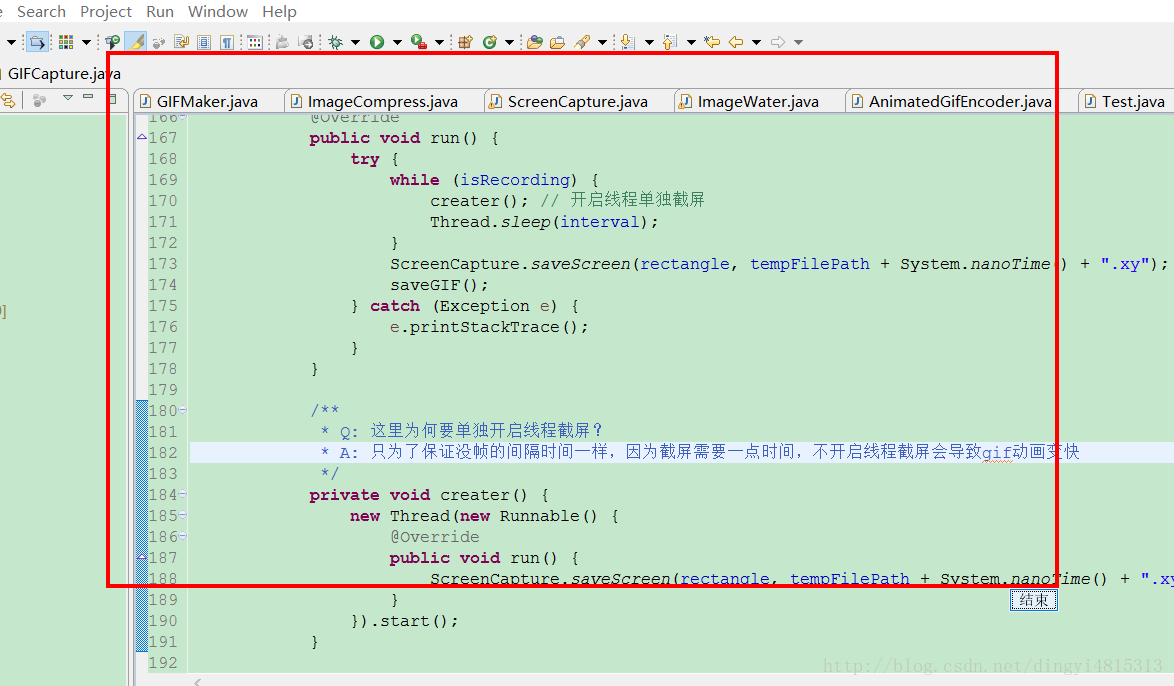

这个录gif不是说选取区域之后就开始录制了,而是先不停的截取区域的图片保存到临时路径下。

上图代码就是每隔interval毫秒截取屏幕并保存为图片的,截屏保存图片的代码很简单,自己稍微看下就明白了。

这里有个需要注意的地方就是,截取的屏幕是不含鼠标的。但是截图没有鼠标就算了,gif图片肯定记录操作的,没有鼠标怎么行。这里的处理是:每次截屏的时候获取鼠标位置信息,在鼠标所在的位置绘制一个小圆圈即可,如下图代码。

3、保存GIF

保存GIF就比较简单了,网上找的一个GIF工具类AnimatedGifEncoder,不知道哪位大神写的。

代码

大家可以下载源代码,不要积分的http://download.csdn.net/detail/dingyi4815313/9776746

主界面:GIFCapture.java

import java.awt.Color;

import java.awt.Dimension;

import java.awt.Font;

import java.awt.Insets;

import java.awt.Rectangle;

import java.awt.Toolkit;

import java.awt.event.ActionEvent;

import java.awt.event.ActionListener;

import java.awt.event.KeyAdapter;

import java.awt.event.KeyEvent;

import java.awt.event.MouseEvent;

import java.awt.event.MouseMotionListener;

import java.io.File;

import javax.swing.JButton;

import javax.swing.JFrame;

import javax.swing.JLabel;

import javax.swing.UIManager;

import javax.swing.border.LineBorder;

import javax.swing.filechooser.FileSystemView;

/**

* 屏幕GIF动画录制,有两个较大的问题可以优化(1、保存GIF图片的时间太长。2、保存GIF图片太大),如果你有好的建议可以加QQ294618814,一起探讨

*

* @author ding.yi 2017年3月10日 上午10:20:36

*

*/

@SuppressWarnings("serial")

public class GIFCapture extends JFrame {

Toolkit toolkit = Toolkit.getDefaultToolkit();

Dimension screenSize = toolkit.getScreenSize();

JLabel label = new JLabel();

JButton startBtn = new JButton("开始");

JButton existBtn = new JButton("取消");

Boolean isRecording =

4000

false;

Rectangle rectangle = null;

// 临时文件存放的路径

String tempFilePath = "C:/Windows/temp/java_capture/";

// 没帧间隔时间(ms),100MS就是说1秒钟10张图片

int interval = 100;

public GIFCapture() {

setLayout(null);

label.setName("black");

label.setVisible(false);

label.setBorder(new LineBorder(Color.black, 4));

label.setBackground(new Color(0x00000000, true));

this.add(label);

// 开始和取消按钮

startBtn.setMargin(new Insets(0, 0, 0, 0));

existBtn.setMargin(new Insets(0, 0, 0, 0));

startBtn.setSize(50, 24);

existBtn.setSize(50, 24);

startBtn.setVisible(false);

existBtn.setVisible(false);

existBtn.addActionListener(new ActionListener() {

@Override

public void actionPerformed(ActionEvent e) {

dispose();

System.exit(0);

}

});

startBtn.addActionListener(recordListener);

this.add(startBtn);

this.add(existBtn);

// 窗口属性设置

this.setDefaultCloseOperation(EXIT_ON_CLOSE);

this.setSize(screenSize);

this.setUndecorated(true);

this.setBackground(new Color(0x01000000, true));

this.setVisible(true);

this.setAlwaysOnTop(true);

// 键盘事件,按Esc键可以推出程序

this.addKeyListener(new KeyAdapter() {

@Override

public void keyPressed(KeyEvent e) {

if (e.getKeyCode() == 27) {

dispose();

System.exit(0);

}

}

});

this.addMouseMotionListener(ml);

}

int sx = -1, sy = -1, cx = -1, cy = -1;

boolean isDrag = false;

MouseMotionListener ml = new MouseMotionListener() {

@Override

public void mouseMoved(MouseEvent e) {

if (Math.abs(sx - cx) > 0 && Math.abs(sy - cy) > 0) {

startBtn.setLocation(cx - startBtn.getWidth(), cy);

existBtn.setLocation(cx - startBtn.getWidth() - existBtn.getWidth(), cy);

startBtn.setVisible(true);

existBtn.setVisible(true);

rectangle = new Rectangle(sx + 4, sy + 4, Math.abs(sx - cx) - 8, Math.abs(sy - cy) - 8);

sx = -1;

sy = -1;

cx = -1;

cy = -1;

}

}

@Override

public void mouseDragged(MouseEvent e) {

if (isRecording) {

return;

}

if (sx == -1) {

sx = e.getX();

sy = e.getY();

startBtn.setVisible(false);

label.setSize(0, 0 );

label.setLocation(sx, sy);

} else {

cx = e.getX();

cy = e.getY();

label.setSize(Math.abs(sx - cx), Math.abs(sy - cy));

label.setVisible(true);

}

repaint();

}

};

ActionListener recordListener = new ActionListener() {

@Override

public void actionPerformed(ActionEvent e) {

isRecording = "开始".contentEquals(startBtn.getText());

if (isRecording) {

setBackground(new Color(0x00000000, true));

startBtn.setText("结束");

existBtn.setVisible(false);

startRecording();

} else {

existBtn.setVisible(false);

startBtn.setVisible(false);

}

}

};

protected void startRecording() {

File f = new File(tempFilePath);

if (!f.exists()) {

f.mkdirs();

}

// 先将临时文件夹中的截图全部删除

for (File img : f.listFiles()) {

img.delete();

}

// 开始录制,闪烁边框

new Thread(new BlinkRunnable()).start();

// 开始录制,先将截屏一张一张保存到临时文件夹下

new Thread(new Runnable() {

@Override

public void run() {

try {

while (isRecording) {

creater(); // 开启线程单独截屏

Thread.sleep(interval);

}

ScreenCapture.saveScreen(rectangle, tempFilePath + System.nanoTime() + ".xy");

saveGIF();

} catch (Exception e) {

e.printStackTrace();

}

}

/**

* Q: 这里为何要单独开启线程截屏?

* A: 只为了保证没帧的间隔时间一样,因为截屏需要一点时间,不开启线程截屏会导致gif动画变快

*/

private void creater() {

new Thread(new Runnable() {

@Override

public void run() {

ScreenCapture.saveScreen(rectangle, tempFilePath + System.nanoTime() + ".xy");

}

}).start();

}

/**

* 开始将截屏图片保存为GIF图片,

*/

private void saveGIF() {

label.setBackground(Color.gray);

label.setBorder(new LineBorder(Color.black, 2));

label.setSize(400, 60);

label.setText("正在保存图片,请稍等...");

label.setFont(new Font("宋体", Font.BOLD, 20));

System.out.println("正在保存GIF图片...");

// GIF图片保存路径默认为桌面,没有提供选择保存路径界面,可以优化

String desktopPath = FileSystemView.getFileSystemView().getHomeDirectory() + "/";

ScreenCapture.createGIF(tempFilePath, desktopPath + "JAVA截图" + System.currentTimeMillis() + ".GIF", interval);

System.out.println("完成");

dispose();

System.exit(0);

}

}).start();

}

/**

* 窗口闪烁效果

*/

class BlinkRunnable implements Runnable {

@Override

public void run() {

while (true) {

try {

// isRecording为false表示没有录制,就一直等待

if (!isRecording) {

Thread.sleep(100);

continue;

}

// 来切换边框的颜色 黑红黑红的闪烁,表示正在录制

Color color = null;

if ("black".equals(label.getName())) {

color = Color.red;

label.setName("red");

} else {

color = Color.black;

label.setName("black");

}

label.setBorder(new LineBorder(color, 4));

Thread.sleep(800);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

public static void main(String[] args) throws Exception {

UIManager.setLookAndFeel(UIManager.getSystemLookAndFeelClassName());

new GIFCapture();

}

}自己封装的截屏工具类:ScreenCapture.java

import java.awt.AWTException;

import java.awt.Color;

import java.awt.Graphics;

import java.awt.MouseInfo;

import java.awt.Point;

import java.awt.Rectangle;

import java.awt.Robot;

import java.awt.image.BufferedImage;

import java.io.File;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import javax.imageio.ImageIO;

import com.test.lib.AnimatedGifEncoder;

/**

* 屏幕截取工具

*

* @author ding.yi 2017年3月10日 上午11:17:45

*

*/

public class ScreenCapture {

static List<BufferedImage> captureBuffList = new ArrayList<BufferedImage>();

/**

* 通过坐标和大小截取屏幕,得到BufferedImage

* @param x 截屏起点坐标x

* @param y 截屏起点坐标y

* @param w 宽度

* @param h 高度

* @return BufferedImage

*/

public static BufferedImage getScreen(int x, int y, int w, int h) {

Rectangle rect = new Rectangle(x, y, w, h);

BufferedImage captureImg = null;

Point p = MouseInfo.getPointerInfo().getLocation();

try {

captureImg = new Robot().createScreenCapture(rect);

// 这里截屏的时候花了一个红圆圈,标识鼠标所在的位置,也可以换成一个鼠标央视的图片

// 是为了制作gif动画用的,如果是单纯的截屏就不需要

Graphics g = captureImg.getGraphics();

g.setColor(Color.red);

g.fillArc(p.x - x, p.y - y, 15, 15, 0, 360);

} catch (AWTException e) {

e.printStackTrace();

}

return captureImg;

}

public static void saveScreen(Rectangle rect, String savePath) {

saveScreen(rect.x, rect.y, rect.width, rect.height, savePath);

}

public static void saveScreen(Rectangle rect) {

BufferedImage captureImg = getScreen(rect.x, rect.y, rect.width, rect.height);

captureBuffList.add(captureImg);

}

public static void createGIF(String gifPath, int interval) {

try {

AnimatedGifEncoder e = new AnimatedGifEncoder();

e.setRepeat(0);

e.start(gifPath);

for (BufferedImage bufferedImage : captureBuffList) {

e.setDelay(interval);下·

e.addFrame(bufferedImage);

}

e.finish();

} catch (Exception e) {

e.printStackTrace();

} finally {

captureBuffList.clear();

}

}

/**

* 保存截屏

* @param x 截屏x坐标

* @param y 截屏y坐标

* @param w 截屏宽度

* @param h 截屏高度

* @param savePath 截屏图片保存位置

*/

public static void saveScreen(int x, int y, int w, int h, String savePath) {

BufferedImage captureImg = getScreen(x, y, w, h);

File saveFile = new File(savePath);

if (!saveFile.getParentFile().exists()) {

saveFile.mkdirs();

}

try {

ImageIO.write(captureImg, "PNG", saveFile);

} catch (IOException e) {

e.printStackTrace();

}

}

/**

* 将文件夹中的所有图片制作成GIF图片,GIF图片制作的方法是从网上找来的(AnimatedGifEncoder、LZWEncoder和NeuQuant三个类)。

* @param folderPath 帧图片所在文件夹

* @param gifPath 保存的GIF图片路径

* @param interval 没帧间隔(毫秒)

*/

public static void createGIF(String folderPath, String gifPath, int interval) {

File[] pic = new File(folderPath).listFiles();

try {

AnimatedGifEncoder e = new AnimatedGifEncoder();

e.setRepeat(0);

e.start(gifPath);

BufferedImage src[] = new BufferedImage[pic.length];

for (int i = 0; i < src.length; i++) {

e.setDelay(interval); // 设置播放的延迟时间

src[i] = ImageIO.read(pic[i]); // 读入需要播放的jpg文件

e.addFrame(src[i]); // 添加到帧中

}

e.finish();

} catch (Exception e) {

e.printStackTrace();

}

}

public static void main(String[] args) throws InterruptedException {

for (int i = 1; i <= 100; i++) {

final int j = i;

new Thread(new Runnable() {

@Override

public void run() {

saveScreen(0, 0, 1069, 746, "F:/a/" + (j < 10 ? "0" : "") + j + ".png");

}

}).start();

Thread.sleep(100);

}

createGIF("F:/a/", "F:/a/gif.gif", 100);

}

}GIF工具类,一共三个

NeuQuant.java

public class NeuQuant {

protected static final int netsize = 256; /* number of colours used */

/* four primes near 500 - assume no image has a length so large */

/* that it is divisible by all four primes */

protected static final int prime1 = 499;

protected static final int prime2 = 491;

protected static final int prime3 = 487;

protected static final int prime4 = 503;

protected static final int minpicturebytes = (3 * prime4);

/* minimum size for input image */

/*

* Program Skeleton ---------------- [select samplefac in range 1..30] [read

* image from input file] pic = (unsigned char*) malloc(3*width*height);

* initnet(pic,3*width*height,samplefac); learn(); unbiasnet(); [write

* output image header, using writecolourmap(f)] inxbuild(); write output

* image using inxsearch(b,g,r)

*/

/*

* Network Definitions -------------------

*/

protected static final int maxnetpos = (netsize - 1);

protected static final int netbiasshift = 4; /* bias for colour values */

protected static final int ncycles = 100; /* no. of learning cycles */

/* defs for freq and bias */

protected static final int intbiasshift = 16; /* bias for fractions */

protected static final int intbias = (((int) 1) << intbiasshift);

protected static final int gammashift = 10; /* gamma = 1024 */

protected static final int gamma = (((int) 1) << gammashift);

protected static final int betashift = 10;

protected static final int beta = (intbias >> betashift); /*

* beta = 1/1024

*/

protected static final int betagamma = (intbias << (gammashift - betashift));

/* defs for decreasing radius factor */

protected static final int initrad = (netsize >> 3); /*

* for 256 cols, radius

* starts

*/

protected static final int radiusbiasshift = 6; /*

* at 32.0 biased by 6 bits

*/

protected static final int radiusbias = (((int) 1) << radiusbiasshift);

protected static final int initradius = (initrad

* radiusbias); /* and decreases by a */

protected static final int radiusdec = 30; /* factor of 1/30 each cycle */

/* defs for decreasing alpha factor */

protected static final int alphabiasshift = 10; /* alpha starts at 1.0 */

protected static final int initalpha = (((int) 1) << alphabiasshift);

protected int alphadec; /* biased by 10 bits */

/* radbias and alpharadbias used for radpower calculation */

protected static final int radbiasshift = 8;

protected static final int radbias = (((int) 1) << radbiasshift);

protected static final int alpharadbshift = (alphabiasshift + radbiasshift);

protected static final int alpharadbias = (((int) 1) << alpharadbshift);

/*

* Types and Global Variables --------------------------

*/

protected byte[] thepicture; /* the input image itself */

protected int lengthcount; /* lengthcount = H*W*3 */

protected int samplefac; /* sampling factor 1..30 */

// typedef int pixel[4]; /* BGRc */

protected int[][] network; /* the network itself - [netsize][4] */

protected int[] netindex = new int[256];

/* for network lookup - really 256 */

protected int[] bias = new int[netsize];

/* bias and freq arrays for learning */

protected int[] freq = new int[netsize];

protected int[] radpower = new int[initrad];

/* radpower for precomputation */

/*

* Initialise network in range (0,0,0) to (255,255,255) and set parameters

* -----------------------------------------------------------------------

*/

public NeuQuant(byte[] thepic, int len, int sample) {

int i;

int[] p;

thepicture = thepic;

lengthcount = len;

samplefac = sample;

network = new int[netsize][];

for (i = 0; i < netsize; i++) {

network[i] = new int[4];

p = network[i];

p[0] = p[1] = p[2] = (i << (netbiasshift + 8)) / netsize;

freq[i] = intbias / netsize; /* 1/netsize */

bias[i] = 0;

}

}

public byte[] colorMap() {

byte[] map = new byte[3 * netsize];

int[] index = new int[netsize];

for (int i = 0; i < netsize; i++)

index[network[i][3]] = i;

int k = 0;

for (int i = 0; i < netsize; i++) {

int j = index[i];

map[k++] = (byte) (network[j][0]);

map[k++] = (byte) (network[j][1]);

map[k++] = (byte) (network[j][2]);

}

return map;

}

/*

* Insertion sort of network and building of netindex[0..255] (to do after

* unbias)

* -------------------------------------------------------------------------

* ------

*/

public void inxbuild() {

int i, j, smallpos, smallval;

int[] p;

int[] q;

int previouscol, startpos;

previouscol = 0;

startpos = 0;

for (i = 0; i < netsize; i++) {

p = network[i];

smallpos = i;

smallval = p[1]; /* index on g */

/* find smallest in i..netsize-1 */

for (j = i + 1; j < netsize; j++) {

q = network[j];

if (q[1] < smallval) { /* index on g */

smallpos = j;

smallval = q[1]; /* index on g */

}

}

q = network[smallpos];

/* swap p (i) and q (smallpos) entries */

if (i != smallpos) {

j = q[0];

q[0] = p[0];

p[0] = j;

j = q[1];

q[1] = p[1];

p[1] = j;

j = q[2];

q[2] = p[2];

p[2] = j;

j = q[3];

q[3] = p[3];

p[3] = j;

}

/* smallval entry is now in position i */

if (smallval != previouscol) {

netindex[previouscol] = (startpos + i) >> 1;

for (j = previouscol + 1; j < smallval; j++)

netindex[j] = i;

previouscol = smallval;

startpos = i;

}

}

netindex[previouscol] = (startpos + maxnetpos) >> 1;

for (j = previouscol + 1; j < 256; j++)

netindex[j] = maxnetpos; /* really 256 */

}

/*

* Main Learning Loop ------------------

*/

public void learn() {

int i, j, b, g, r;

int radius, rad, alpha, step, delta, samplepixels;

byte[] p;

int pix, lim;

if (lengthcount < minpicturebytes)

samplefac = 1;

alphadec = 30 + ((samplefac - 1) / 3);

p = thepicture;

pix = 0;

lim = lengthcount;

samplepixels = lengthcount / (3 * samplefac);

delta = samplepixels / ncycles;

alpha = initalpha;

radius = initradius;

rad = radius >> radiusbiasshift;

if (rad <= 1)

rad = 0;

for (i = 0; i < rad; i++)

radpower[i] = alpha * (((rad * rad - i * i) * radbias) / (rad * rad));

// fprintf(stderr,"beginning 1D learning: initial radius=%d/n", rad);

if (lengthcount < minpicturebytes)

step = 3;

else if ((lengthcount % prime1) != 0)

step = 3 * prime1;

else {

if ((lengthcount % prime2) != 0)

step = 3 * prime2;

else {

if ((lengthcount % prime3) != 0)

step = 3 * prime3;

else

step = 3 * prime4;

}

}

i = 0;

while (i < samplepixels) {

b = (p[pix + 0] & 0xff) << netbiasshift;

g = (p[pix + 1] & 0xff) << netbiasshift;

r = (p[pix + 2] & 0xff) << netbiasshift;

j = contest(b, g, r);

altersingle(alpha, j, b, g, r);

if (rad != 0)

alterneigh(rad, j, b, g, r); /* alter neighbours */

pix += step;

if (pix >= lim)

pix -= lengthcount;

i++;

if (delta == 0)

delta = 1;

if (i % delta == 0) {

alpha -= alpha / alphadec;

radius -= radius / radiusdec;

rad = radius >> radiusbiasshift;

if (rad <= 1)

rad = 0;

for (j = 0; j < rad; j++)

radpower[j] = alpha * (((rad * rad - j * j) * radbias) / (rad * rad));

}

}

// fprintf(stderr,"finished 1D learning: final alpha=%f

// !/n",((float)alpha)/initalpha);

}

/*

* Search for BGR values 0..255 (after net is unbiased) and return colour

* index

* -------------------------------------------------------------------------

* ---

*/

public int map(int b, int g, int r) {

int i, j, dist, a, bestd;

int[] p;

int best;

bestd = 1000; /* biggest possible dist is 256*3 */

best = -1;

i = netindex[g]; /* index on g */

j = i - 1; /* start at netindex[g] and work outwards */

while ((i < netsize) || (j >= 0)) {

if (i < netsize) {

p = network[i];

dist = p[1] - g; /* inx key */

if (dist >= bestd)

i = netsize; /* stop iter */

else {

i++;

if (dist < 0)

dist = -dist;

a = p[0] - b;

if (a < 0)

a = -a;

dist += a;

if (dist < bestd) {

a = p[2] - r;

if (a < 0)

a = -a;

dist += a;

if (dist < bestd) {

bestd = dist;

best = p[3];

}

}

}

}

if (j >= 0) {

p = network[j];

dist = g - p[1]; /* inx key - reverse dif */

if (dist >= bestd)

j = -1; /* stop iter */

else {

j--;

if (dist < 0)

dist = -dist;

a = p[0] - b;

if (a < 0)

a = -a;

dist += a;

if (dist < bestd) {

a = p[2] - r;

if (a < 0)

a = -a;

dist += a;

if (dist < bestd) {

bestd = dist;

best = p[3];

}

}

}

}

}

return (best);

}

public byte[] process() {

learn();

unbiasnet();

inxbuild();

return colorMap();

}

/*

* Unbias network to give byte values 0..255 and record position i to

* prepare for sort

* -------------------------------------------------------------------------

* ----------

*/

public void unbiasnet() {

int i, j;

for (i = 0; i < netsize; i++) {

network[i][0] >>= netbiasshift;

network[i][1] >>= netbiasshift;

network[i][2] >>= netbiasshift;

network[i][3] = i; /* record colour no */

}

}

/*

* Move adjacent neurons by precomputed alpha*(1-((i-j)^2/[r]^2)) in

* radpower[|i-j|]

* -------------------------------------------------------------------------

* --------

*/

protected void alterneigh(int rad, int i, int b, int g, int r) {

int j, k, lo, hi, a, m;

int[] p;

lo = i - rad;

if (lo < -1)

lo = -1;

hi = i + rad;

if (hi > netsize)

hi = netsize;

j = i + 1;

k = i - 1;

m = 1;

while ((j < hi) || (k > lo)) {

a = radpower[m++];

if (j < hi) {

p = network[j++];

try {

p[0] -= (a * (p[0] - b)) / alpharadbias;

p[1] -= (a * (p[1] - g)) / alpharadbias;

p[2] -= (a * (p[2] - r)) / alpharadbias;

} catch (Exception e) {

} // prevents 1.3 miscompilation

}

if (k > lo) {

p = network[k--];

try {

p[0] -= (a * (p[0] - b)) / alpharadbias;

p[1] -= (a * (p[1] - g)) / alpharadbias;

p[2] -= (a * (p[2] - r)) / alpharadbias;

} catch (Exception e) {

}

}

}

}

/*

* Move neuron i towards biased (b,g,r) by factor alpha

* ----------------------------------------------------

*/

protected void altersingle(int alpha, int i, int b, int g, int r) {

/* alter hit neuron */

int[] n = network[i];

n[0] -= (alpha * (n[0] - b)) / initalpha;

n[1] -= (alpha * (n[1] - g)) / initalpha;

n[2] -= (alpha * (n[2] - r)) / initalpha;

}

/*

* Search for biased BGR values ----------------------------

*/

protected int contest(int b, int g, int r) {

/* finds closest neuron (min dist) and updates freq */

/* finds best neuron (min dist-bias) and returns position */

/*

* for frequently chosen neurons, freq[i] is high and bias[i] is

* negative

*/

/* bias[i] = gamma*((1/netsize)-freq[i]) */

int i, dist, a, biasdist, betafreq;

int bestpos, bestbiaspos, bestd, bestbiasd;

int[] n;

bestd = ~(((int) 1) << 31);

bestbiasd = bestd;

bestpos = -1;

bestbiaspos = bestpos;

for (i = 0; i < netsize; i++) {

n = network[i];

dist = n[0] - b;

if (dist < 0)

dist = -dist;

a = n[1] - g;

if (a < 0)

a = -a;

dist += a;

a = n[2] - r;

if (a < 0)

a = -a;

dist += a;

if (dist < bestd) {

bestd = dist;

bestpos = i;

}

biasdist = dist - ((bias[i]) >> (intbiasshift - netbiasshift));

if (biasdist < bestbiasd) {

bestbiasd = biasdist;

bestbiaspos = i;

}

betafreq = (freq[i] >> betashift);

freq[i] -= betafreq;

bias[i] += (betafreq << gammashift);

}

freq[bestpos] += beta;

bias[bestpos] -= betagamma;

return (bestbiaspos);

}

}LZWEncoder.java

import java.io.IOException;

import java.io.OutputStream;

//==============================================================================

// Adapted from Jef Poskanzer's Java port by way of J. M. G. Elliott.

// K Weiner 12/00

public class LZWEncoder {

private static final int EOF = -1;

private int imgW, imgH;

private byte[] pixAry;

private int initCodeSize;

private int remaining;

private int curPixel;

// GIFCOMPR.C - GIF Image compression routines

//

// Lempel-Ziv compression based on 'compress'. GIF modifications by

// David Rowley (mgardi@watdcsu.waterloo.edu)

// General DEFINEs

static final int BITS = 12;

static final int HSIZE = 5003; // 80% occupancy

// GIF Image compression - modified 'compress'

//

// Based on: compress.c - File compression ala IEEE Computer, June 1984.

//

// By Authors: Spencer W. Thomas (decvax!harpo!utah-cs!utah-gr!thomas)

// Jim McKie (decvax!mcvax!jim)

// Steve Davies (decvax!vax135!petsd!peora!srd)

// Ken Turkowski (decvax!decwrl!turtlevax!ken)

// James A. Woods (decvax!ihnp4!ames!jaw)

// Joe Orost (decvax!vax135!petsd!joe)

int n_bits; // number of bits/code

int maxbits = BITS; // user settable max # bits/code

int maxcode; // maximum code, given n_bits

int maxmaxcode = 1 << BITS; // should NEVER generate this code

int[] htab = new int[HSIZE];

int[] codetab = new int[HSIZE];

int hsize = HSIZE; // for dynamic table sizing

int free_ent = 0; // first unused entry

// block compression parameters -- after all codes are used up,

// and compression rate changes, start over.

boolean clear_flg = false;

// Algorithm: use open addressing double hashing (no chaining) on the

// prefix code / next character combination. We do a variant of Knuth's

// algorithm D (vol. 3, sec. 6.4) along with G. Knott's relatively-prime

// secondary probe. Here, the modular division first probe is gives way

// to a faster exclusive-or manipulation. Also do block compression with

// an adaptive reset, whereby the code table is cleared when the compression

// ratio decreases, but after the table fills. The variable-length output

// codes are re-sized at this point, and a special CLEAR code is generated

// for the decompressor. Late addition: construct the table according to

// file size for noticeable speed improvement on small files. Please direct

// questions about this implementation to ames!jaw.

int g_init_bits;

int ClearCode;

int EOFCode;

// output

//

// Output the given code.

// Inputs:

// code: A n_bits-bit integer. If == -1, then EOF. This assumes

// that n_bits =< wordsize - 1.

// Outputs:

// Outputs code to the file.

// Assumptions:

// Chars are 8 bits long.

// Algorithm:

// Maintain a BITS character long buffer (so that 8 codes will

// fit in it exactly). Use the VAX insv instruction to insert each

// code in turn. When the buffer fills up empty it and start over.

int cur_accum = 0;

int cur_bits = 0;

int masks[] = { 0x0000, 0x0001, 0x0003, 0x0007, 0x000F, 0x001F, 0x003F, 0x007F, 0x00FF, 0x01FF, 0x03FF, 0x07FF,

0x0FFF, 0x1FFF, 0x3FFF, 0x7FFF, 0xFFFF };

// Number of characters so far in this 'packet'

int a_count;

// Define the storage for the packet accumulator

byte[] accum = new byte[256];

// ----------------------------------------------------------------------------

LZWEncoder(int width, int height, byte[] pixels, int color_depth) {

imgW = width;

imgH = height;

pixAry = pixels;

initCodeSize = Math.max(2, color_depth);

}

// Add a character to the end of the current packet, and if it is 254

// characters, flush the packet to disk.

void char_out(byte c, OutputStream outs) throws IOException {

accum[a_count++] = c;

if (a_count >= 254)

flush_char(outs);

}

// Clear out the hash table

// table clear for block compress

void cl_block(OutputStream outs) throws IOException {

cl_hash(hsize);

free_ent = ClearCode + 2;

clear_flg = true;

output(ClearCode, outs);

}

// reset code table

void cl_hash(int hsize) {

for (int i = 0; i < hsize; ++i)

htab[i] = -1;

}

void compress(int init_bits, OutputStream outs) throws IOException {

int fcode;

int i /* = 0 */;

int c;

int ent;

int disp;

int hsize_reg;

int hshift;

// Set up the globals: g_init_bits - initial number of bits

g_init_bits = init_bits;

// Set up the necessary values

clear_flg = false;

n_bits = g_init_bits;

maxcode = MAXCODE(n_bits);

ClearCode = 1 << (init_bits - 1);

EOFCode = ClearCode + 1;

free_ent = ClearCode + 2;

a_count = 0; // clear packet

ent = nextPixel();

hshift = 0;

for (fcode = hsize; fcode < 65536; fcode *= 2)

++hshift;

hshift = 8 - hshift; // set hash code range bound

hsize_reg = hsize;

cl_hash(hsize_reg); // clear hash table

output(ClearCode, outs);

outer_loop: while ((c = nextPixel()) != EOF) {

fcode = (c << maxbits) + ent;

i = (c << hshift) ^ ent; // xor hashing

if (htab[i] == fcode) {

ent = codetab[i];

continue;

} else if (htab[i] >= 0) // non-empty slot

{

disp = hsize_reg - i; // secondary hash (after G. Knott)

if (i == 0)

disp = 1;

do {

if ((i -= disp) < 0)

i += hsize_reg;

if (htab[i] == fcode) {

ent = codetab[i];

continue outer_loop;

}

} while (htab[i] >= 0);

}

output(ent, outs);

ent = c;

if (free_ent < maxmaxcode) {

codetab[i] = free_ent++; // code -> hashtable

htab[i] = fcode;

} else

cl_block(outs);

}

// Put out the final code.

output(ent, outs);

output(EOFCode, outs);

}

// ----------------------------------------------------------------------------

void encode(OutputStream os) throws IOException {

os.write(initCodeSize); // write "initial code size" byte

remaining = imgW * imgH; // reset navigation variables

curPixel = 0;

compress(initCodeSize + 1, os); // compress and write the pixel data

os.write(0); // write block terminator

}

// Flush the packet to disk, and reset the accumulator

void flush_char(OutputStream outs) throws IOException {

if (a_count > 0) {

outs.write(a_count);

outs.write(accum, 0, a_count);

a_count = 0;

}

}

final int MAXCODE(int n_bits) {

return (1 << n_bits) - 1;

}

// ----------------------------------------------------------------------------

// Return the next pixel from the image

// ----------------------------------------------------------------------------

private int nextPixel() {

if (remaining == 0)

return EOF;

--remaining;

byte pix = pixAry[curPixel++];

return pix & 0xff;

}

void output(int code, OutputStream outs) throws IOException {

cur_accum &= masks[cur_bits];

if (cur_bits > 0)

cur_accum |= (code << cur_bits);

else

cur_accum = code;

cur_bits += n_bits;

while (cur_bits >= 8) {

char_out((byte) (cur_accum & 0xff), outs);

cur_accum >>= 8;

cur_bits -= 8;

}

// If the next entry is going to be too big for the code size,

// then increase it, if possible.

if (free_ent > maxcode || clear_flg) {

if (clear_flg) {

maxcode = MAXCODE(n_bits = g_init_bits);

clear_flg = false;

} else {

++n_bits;

if (n_bits == maxbits)

maxcode = maxmaxcode;

else

maxcode = MAXCODE(n_bits);

}

}

if (code == EOFCode) {

// At EOF, write the rest of the buffer.

while (cur_bits > 0) {

char_out((byte) (cur_accum & 0xff), outs);

cur_accum >>= 8;

cur_bits -= 8;

}

flush_char(outs);

}

}

}AnimatedGifEncoder.java

import java.awt.Color;

import java.awt.Graphics2D;

import java.awt.image.BufferedImage;

import java.awt.image.DataBufferByte;

import java.io.BufferedOutputStream;

import java.io.FileOutputStream;

import java.io.IOException;

import java.io.OutputStream;

/**

* Class AnimatedGifEncoder - Encodes a GIF file consisting of one or more

* frames.

*

* <pre>

* Example:

* AnimatedGifEncoder e = new AnimatedGifEncoder();

* e.start(outputFileName);

* e.setDelay(1000); // 1 frame per sec

* e.addFrame(image1);

* e.addFrame(image2);

* e.finish();

* </pre>

*

* No copyright asserted on the source code of this class. May be used for any

* purpose, however, refer to the Unisys LZW patent for restrictions on use of

* the associated LZWEncoder class. Please forward any corrections to

* kweiner@fmsware.com.

*

* @author Kevin Weiner, FM Software

* @version 1.03 November 2003

*

*/

public class AnimatedGifEncoder {

protected int width; // image size

protected int height;

protected Color transparent = null; // transparent color if given

protected int transIndex; // transparent index in color table

protected int repeat = -1; // no repeat

protected int delay = 0; // frame delay (hundredths)

protected boolean started = false; // ready to output frames

protected OutputStream out;

protected BufferedImage image; // current frame

protected byte[] pixels; // BGR byte array from frame

protected byte[] indexedPixels; // converted frame indexed to palette

protected int colorDepth; // number of bit planes

protected byte[] colorTab; // RGB palette

protected boolean[] usedEntry = new boolean[256]; // active palette entries

protected int palSize = 7; // color table size (bits-1)

protected int dispose = -1; // disposal code (-1 = use default)

protected boolean closeStream = false; // close stream when finished

protected boolean firstFrame = true;

protected boolean sizeSet = false; // if false, get size from first frame

protected int sample = 10; // default sample interval for quantizer

/**

* Sets the delay time between each frame, or changes it for subsequent

* frames (applies to last frame added).

*

* @param ms

* int delay time in milliseconds

*/

public void setDelay(int ms) {

delay = Math.round(ms / 10.0f);

}

/**

* Sets the GIF frame disposal code for the last added frame and any

* subsequent frames. Default is 0 if no transparent color has been set,

* otherwise 2.

*

* @param code

* int disposal code.

*/

public void setDispose(int code) {

if (code >= 0) {

dispose = code;

}

}

/**

* Sets the number of times the set of GIF frames should be played. Default

* is 1; 0 means play indefinitely. Must be invoked before the first image

* is added.

*

* @param iter

* int number of iterations.

* @return

*/

public void setRepeat(int iter) {

if (iter >= 0) {

repeat = iter;

}

}

/**

* Sets the transparent color for the last added frame and any subsequent

* frames. Since all colors are subject to modification in the quantization

* process, the color in the final palette for each frame closest to the

* given color becomes the transparent color for that frame. May be set to

* null to indicate no transparent color.

*

* @param c

* Color to be treated as transparent on display.

*/

public void setTransparent(Color c) {

transparent = c;

}

/**

* Adds next GIF frame. The frame is not written immediately, but is

* actually deferred until the next frame is received so that timing data

* can be inserted. Invoking <code>finish()</code> flushes all frames. If

* <code>setSize</code> was not invoked, the size of the first image is used

* for all subsequent frames.

*

* @param im

* BufferedImage containing frame to write.

* @return true if successful.

*/

public boolean addFrame(BufferedImage im) {

if ((im == null) || !started) {

return false;

}

boolean ok = true;

try {

if (!sizeSet) {

// use first frame's size

setSize(im.getWidth(), im.getHeight());

}

image = im;

getImagePixels(); // convert to correct format if necessary

analyzePixels(); // build color table & map pixels

if (firstFrame) {

writeLSD(); // logical screen descriptior

writePalette(); // global color table

if (repeat >= 0) {

// use NS app extension to indicate reps

writeNetscapeExt();

}

}

writeGraphicCtrlExt(); // write graphic control extension

writeImageDesc(); // image descriptor

if (!firstFrame) {

writePalette(); // local color table

}

writePixels(); // encode and write pixel data

firstFrame = false;

} catch (IOException e) {

ok = false;

}

return ok;

}

/**

* Flushes any pending data and closes output file. If writing to an

* OutputStream, the stream is not closed.

*/

public boolean finish() {

if (!started)

return false;

boolean ok = true;

started = false;

try {

out.write(0x3b); // gif trailer

out.flush();

if (closeStream) {

out.close();

}

} catch (IOException e) {

ok = false;

}

// reset for subsequent use

transIndex = 0;

out = null;

image = null;

pixels = null;

indexedPixels = null;

colorTab = null;

closeStream = false;

firstFrame = true;

return ok;

}

/**

* Sets frame rate in frames per second. Equivalent to

* <code>setDelay(1000/fps)</code>.

*

* @param fps

* float frame rate (frames per second)

*/

public void setFrameRate(float fps) {

if (fps != 0f) {

delay = Math.round(100f / fps);

}

}

/**

* Sets quality of color quantization (conversion of images to the maximum

* 256 colors allowed by the GIF specification). Lower values (minimum = 1)

* produce better colors, but slow processing significantly. 10 is the

* default, and produces good color mapping at reasonable speeds. Values

* greater than 20 do not yield significant improvements in speed.

*

* @param quality

* int greater than 0.

* @return

*/

public void setQuality(int quality) {

if (quality < 1)

quality = 1;

sample = quality;

}

/**

* Sets the GIF frame size. The default size is the size of the first frame

* added if this method is not invoked.

*

* @param w

* int frame width.

* @param h

* int frame width.

*/

public void setSize(int w, int h) {

if (started && !firstFrame)

return;

width = w;

height = h;

if (width < 1)

width = 320;

if (height < 1)

height = 240;

sizeSet = true;

}

/**

* Initiates GIF file creation on the given stream. The stream is not closed

* automatically.

*

* @param os

* OutputStream on which GIF images are written.

* @return false if initial write failed.

*/

public boolean start(OutputStream os) {

if (os == null)

return false;

boolean ok = true;

closeStream = false;

out = os;

try {

writeString("GIF89a"); // header

} catch (IOException e) {

ok = false;

}

return started = ok;

}

/**

* Initiates writing of a GIF file with the specified name.

*

* @param file

* String containing output file name.

* @return false if open or initial write failed.

*/

public boolean start(String file) {

boolean ok = true;

try {

out = new BufferedOutputStream(new FileOutputStream(file));

ok = start(out);

closeStream = true;

} catch (IOException e) {

ok = false;

}

return started = ok;

}

/**

* Analyzes image colors and creates color map.

*/

protected void analyzePixels() {

int len = pixels.length;

int nPix = len / 3;

indexedPixels = new byte[nPix];

NeuQuant nq = new NeuQuant(pixels, len, sample);

// initialize quantizer

colorTab = nq.process(); // create reduced palette

// convert map from BGR to RGB

for (int i = 0; i < colorTab.length; i += 3) {

byte temp = colorTab[i];

colorTab[i] = colorTab[i + 2];

colorTab[i + 2] = temp;

usedEntry[i / 3] = false;

}

// map image pixels to new palette

int k = 0;

for (int i = 0; i < nPix; i++) {

int index = nq.map(pixels[k++] & 0xff, pixels[k++] & 0xff, pixels[k++] & 0xff);

usedEntry[index] = true;

indexedPixels[i] = (byte) index;

}

pixels = null;

colorDepth = 8;

palSize = 7;

// get closest match to transparent color if specified

if (transparent != null) {

transIndex = findClosest(transparent);

}

}

/**

* Returns index of palette color closest to c

*

*/

protected int findClosest(Color c) {

if (colorTab == null)

return -1;

int r = c.getRed();

int g = c.getGreen();

int b = c.getBlue();

int minpos = 0;

int dmin = 256 * 256 * 256;

int len = colorTab.length;

for (int i = 0; i < len;) {

int dr = r - (colorTab[i++] & 0xff);

int dg = g - (colorTab[i++] & 0xff);

int db = b - (colorTab[i] & 0xff);

int d = dr * dr + dg * dg + db * db;

int index = i / 3;

if (usedEntry[index] && (d < dmin)) {

dmin = d;

minpos = index;

}

i++;

}

return minpos;

}

/**

* Extracts image pixels into byte array "pixels"

*/

protected void getImagePixels() {

int w = image.getWidth();

int h = image.getHeight();

int type = image.getType();

if ((w != width) || (h != height) || (type != BufferedImage.TYPE_3BYTE_BGR)) {

// create new image with right size/format

BufferedImage temp = new BufferedImage(width, height, BufferedImage.TYPE_3BYTE_BGR);

Graphics2D g = temp.createGraphics();

g.drawImage(image, 0, 0, null);

image = temp;

}

pixels = ((DataBufferByte) image.getRaster().getDataBuffer()).getData();

}

/**

* Writes Graphic Control Extension

*/

protected void writeGraphicCtrlExt() throws IOException {

out.write(0x21); // extension introducer

out.write(0xf9); // GCE label

out.write(4); // data block size

int transp, disp;

if (transparent == null) {

transp = 0;

disp = 0; // dispose = no action

} else {

transp = 1;

disp = 2; // force clear if using transparent color

}

if (dispose >= 0) {

disp = dispose & 7; // user override

}

disp <<= 2;

// packed fields

out.write(0 | // 1:3 reserved

disp | // 4:6 disposal

0 | // 7 user input - 0 = none

transp); // 8 transparency flag

writeShort(delay); // delay x 1/100 sec

out.write(transIndex); // transparent color index

out.write(0); // block terminator

}

/**

* Writes Image Descriptor

*/

protected void writeImageDesc() throws IOException {

out.write(0x2c); // image separator

writeShort(0); // image position x,y = 0,0

writeShort(0);

writeShort(width); // image size

writeShort(height);

// packed fields

if (firstFrame) {

// no LCT - GCT is used for first (or only) frame

out.write(0);

} else {

// specify normal LCT

out.write(0x80 | // 1 local color table 1=yes

0 | // 2 interlace - 0=no

0 | // 3 sorted - 0=no

0 | // 4-5 reserved

palSize); // 6-8 size of color table

}

}

/**

* Writes Logical Screen Descriptor

*/

protected void writeLSD() throws IOException {

// logical screen size

writeShort(width);

writeShort(height);

// packed fields

out.write((0x80 | // 1 : global color table flag = 1 (gct used)

0x70 | // 2-4 : color resolution = 7

0x00 | // 5 : gct sort flag = 0

palSize)); // 6-8 : gct size

out.write(0); // background color index

out.write(0); // pixel aspect ratio - assume 1:1

}

/**

* Writes Netscape application extension to define repeat count.

*/

protected void writeNetscapeExt() throws IOException {

out.write(0x21); // extension introducer

out.write(0xff); // app extension label

out.write(11); // block size

writeString("NETSCAPE" + "2.0"); // app id + auth code

out.write(3); // sub-block size

out.write(1); // loop sub-block id

writeShort(repeat); // loop count (extra iterations, 0=repeat forever)

out.write(0); // block terminator

}

/**

* Writes color table

*/

protected void writePalette() throws IOException {

out.write(colorTab, 0, colorTab.length);

int n = (3 * 256) - colorTab.length;

for (int i = 0; i < n; i++) {

out.write(0);

}

}

/**

* Encodes and writes pixel data

*/

protected void writePixels() throws IOException {

LZWEncoder encoder = new LZWEncoder(width, height, indexedPixels, colorDepth);

encoder.encode(out);

}

/**

* Write 16-bit value to output stream, LSB first

*/

protected void writeShort(int value) throws IOException {

out.write(value & 0xff);

out.write((value >> 8) & 0xff);

}

/**

* Writes string to output stream

*/

protected void writeString(String s) throws IOException {

for (int i = 0; i < s.length(); i++) {

out.write((byte) s.charAt(i));

}

}

}

相关文章推荐

- 我的Android进阶之旅------>介绍一款集录制与剪辑为一体的屏幕GIF 动画制作工具 GifCam

- [置顶] 我的Android进阶之旅------>介绍一款集录制与剪辑为一体的屏幕GIF 动画制作工具 GifCam

- 基于java-applet的简单流程图绘制小工具的制作

- 超酷java主页特效制作工具Anfy

- 推荐6款常用的Java开源报表制作工具

- 6款常用的Java开源报表制作工具 .

- Java制作的快速打开文件夹、程序的小工具

- 小巧的GIF制作工具

- 常用的Java开源报表制作工具

- 推荐6款常用的Java开源报表制作工具

- [java]izpack3.7.2 安装制作工具 中文问题解决

- Java应用程序安装包制作工具简介

- <html xmlns:v="urn:schemas-microsoft-com:vml"Java软件制作全过程-----------------------工具installAnyWhere

- 推荐6款常用的Java开源报表制作工具

- 基于java-applet的简单流程图绘制小工具的制作

- [转载]应用程序(Java程序)安装包制作工具简介

- 基于java-applet的简单流程图绘制小工具的制作

- 6款常用的Java开源报表制作工具

- 推荐6款常用的Java开源报表制作工具

- 推荐6款常用的Java开源报表制作工具