[IO System] 08 Analysis of the IO Read Path

2017-02-07 09:32


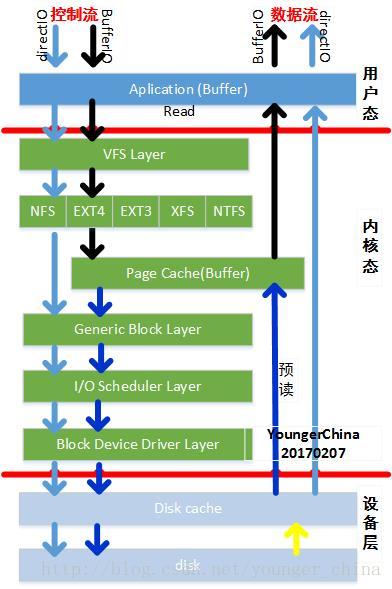

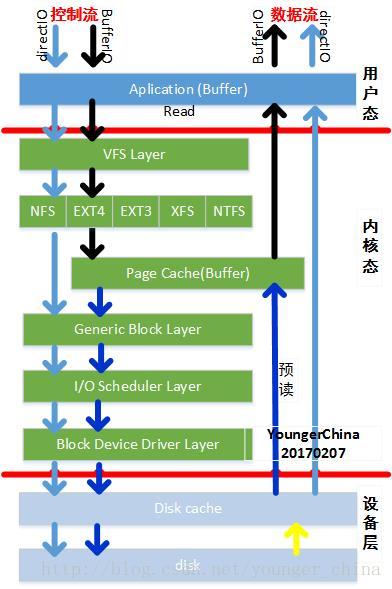

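This article analyzes the control flow and data flow of buffered IO as a whole, and walks through a read IO using the IO system diagram: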


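Note: for an explanation of the layer diagram above, see the article "[IO System] 01 IO Subsystem".

Let's go through it step by step (kernel source version 4.5.4).

1.1 User space

Here the program's goal is to read data from the disk. As described earlier, user space has two read functions, read and fread, where fread is glibc's wrapper around the read system call.

See "[IO System] 02 File IO Operations in User Space".

In user space, fread does considerably more work, with extra data copying and processing; it is not covered in this article and will be analyzed separately.

The POSIX interface read goes straight into the read system call.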
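A minimal sketch of the user-space side follows, assuming a Linux/POSIX system; the helper name read_full is hypothetical and not from the article. It calls read() directly, so every iteration enters the kernel through the read system call analyzed in 1.2. It loops because one read() may return fewer bytes than requested (a short read), and retries when a call is interrupted by a signal before transferring any data (EINTR).

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * read_full (hypothetical helper): call read(2) until cap bytes have been
 * read, end of file is reached, or a real error occurs.
 * Returns the number of bytes read, or -1 on error (errno is set).
 */
ssize_t read_full(int fd, char *buf, size_t cap)
{
	size_t done = 0;

	assert(buf != NULL || cap == 0);
	while (done < cap) {
		ssize_t n = read(fd, buf + done, cap - done);
		if (n < 0) {
			if (errno == EINTR)
				continue;	/* interrupted before any data: retry */
			return -1;		/* real error */
		}
		if (n == 0)
			break;			/* end of file */
		done += (size_t)n;		/* short read: keep going */
	}
	return (ssize_t)done;
}
```

Note the design choice that an error discards the count already read; a caller that needs partial results would return done instead.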

1.2 The read system call / VFS layer

The read system call is implemented in fs/read_write.c:

```c
SYSCALL_DEFINE3(read, unsigned int, fd, char __user *, buf, size_t, count)
{
	struct fd f = fdget_pos(fd);
	ssize_t ret = -EBADF;

	if (f.file) {
		loff_t pos = file_pos_read(f.file);
		ret = vfs_read(f.file, buf, count, &pos);
		if (ret >= 0)
			file_pos_write(f.file, pos);
		fdput_pos(f);
	}
	return ret;
}
```

Function walkthrough:

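1. fdget_pos converts the integer descriptor fd into the kernel structure struct fd, which can be thought of as a file handle descriptor (fdput_pos releases it at the end).

The struct fd is as follows:

```c
struct fd {
	struct file *file;	/* the file object */
	unsigned int flags;
};
```

For how the kernel's filesystem structures relate to one another, see the article "[IO System] Diagram of the Structures Set Up by open".

2. file_pos_read fetches the file's current position (think of it as a cursor) into the local variable pos.

3. The VFS interface vfs_read() performs the read: it checks that the arguments are valid and then, through __vfs_read, calls the read method of the concrete filesystem (such as ext4, xfs, btrfs or ocfs2).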

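```c
ssize_t vfs_read(struct file *file, char __user *buf, size_t count, loff_t *pos)
{
	ssize_t ret;

	if (!(file->f_mode & FMODE_READ))	/* was the file opened for reading? */
		return -EBADF;
	if (!(file->f_mode & FMODE_CAN_READ))	/* does it have a read or read_iter method? */
		return -EINVAL;
	if (unlikely(!access_ok(VERIFY_WRITE, buf, count)))	/* user buffer must be writable */
		return -EFAULT;

	ret = rw_verify_area(READ, file, pos, count);	/* validate pos/count */
	if (ret >= 0) {
		count = ret;
		ret = __vfs_read(file, buf, count, pos);
		if (ret > 0) {
			fsnotify_access(file);		/* notify watchers of the access */
			add_rchar(current, ret);	/* per-task IO accounting */
		}
		inc_syscr(current);
	}

	return ret;
}
```

If the filesystem does not define a read method, the data is read through its read_iter interface instead: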

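```c
ssize_t __vfs_read(struct file *file, char __user *buf, size_t count,
		   loff_t *pos)
{
	if (file->f_op->read)
		return file->f_op->read(file, buf, count, pos);
	else if (file->f_op->read_iter)
		return new_sync_read(file, buf, count, pos);
	else
		return -EINVAL;
}
EXPORT_SYMBOL(__vfs_read);
```

new_sync_read packages the arguments into a struct iov_iter (together with a struct kiocb carrying the file and position) and then calls the file's read_iter method.

4. If the read did not fail, file_pos_write stores the updated pos back into the file.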
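The toy model below sketches steps 2 to 4 and the read/read_iter dispatch in ordinary user-space C. All names (toy_file, toy_sys_read and so on) are hypothetical; the read_iter stand-in takes plain arguments instead of struct kiocb and struct iov_iter, and only the FMODE_READ-style check from vfs_read is kept. What it illustrates is that the syscall works on a local copy of the position and stores it back only when the read did not fail.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>
#include <sys/types.h>

struct toy_file;

/* Hypothetical stand-in for struct file_operations: only the two read hooks. */
struct toy_file_operations {
	ssize_t (*read)(struct toy_file *, char *, size_t, long long *);
	/* The real read_iter takes (struct kiocb *, struct iov_iter *). */
	ssize_t (*read_iter)(struct toy_file *, char *, size_t, long long *);
};

/* Hypothetical stand-in for struct file. */
struct toy_file {
	const struct toy_file_operations *f_op;
	int readable;		/* plays the role of FMODE_READ in f_mode */
	long long f_pos;	/* the file position ("cursor") */
	const char *data;	/* contents of the toy file */
	long long size;
};

/* Shaped like __vfs_read: prefer ->read, otherwise fall back to ->read_iter. */
static ssize_t toy_dispatch_read(struct toy_file *f, char *buf, size_t count,
				 long long *pos)
{
	if (f->f_op->read)
		return f->f_op->read(f, buf, count, pos);
	if (f->f_op->read_iter)
		return f->f_op->read_iter(f, buf, count, pos);
	return -EINVAL;
}

/* Shaped like vfs_read, keeping only the "opened for reading?" check. */
static ssize_t toy_vfs_read(struct toy_file *f, char *buf, size_t count,
			    long long *pos)
{
	if (!f->readable)
		return -EBADF;
	return toy_dispatch_read(f, buf, count, pos);
}

/* Shaped like SYSCALL_DEFINE3(read, ...): read into a local pos,
 * write it back only if the read did not fail. */
ssize_t toy_sys_read(struct toy_file *f, char *buf, size_t count)
{
	long long pos = f->f_pos;		/* like file_pos_read() */
	ssize_t ret = toy_vfs_read(f, buf, count, &pos);
	if (ret >= 0)
		f->f_pos = pos;			/* like file_pos_write() */
	return ret;
}

/* A read_iter-style method copying from the in-memory contents. */
ssize_t toy_read_iter(struct toy_file *f, char *buf, size_t count,
		      long long *pos)
{
	assert(*pos >= 0);
	if (*pos >= f->size)
		return 0;			/* at or past EOF */
	size_t avail = (size_t)(f->size - *pos);
	size_t n = count < avail ? count : avail;
	memcpy(buf, f->data + *pos, n);
	*pos += (long long)n;
	return (ssize_t)n;
}
```

Like ext4, a toy file whose operations set only read_iter ends up in toy_read_iter through the fallback branch.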

1.3 Concrete filesystem layer

If a concrete filesystem such as ext4 has no read method, its read_iter method is called to read the data:

```c
const struct file_operations ext4_file_operations = {
	.llseek		= ext4_llseek,
	.read_iter	= generic_file_read_iter,
	.write_iter	= ext4_file_write_iter,
	…
};
```

ext4's read_iter is simply generic_file_read_iter:

```c
/**
 * generic_file_read_iter - generic filesystem read routine
 * @iocb:	kernel I/O control block
 * @iter:	destination for the data read
 *
 * This is the "read_iter()" routine for all filesystems
 * that can use the page cache directly.
 */
ssize_t
generic_file_read_iter(struct kiocb *iocb, struct iov_iter *iter)
{
	/* unpack the arguments */
	struct file *file = iocb->ki_filp;
	ssize_t retval = 0;
	loff_t *ppos = &iocb->ki_pos;
	loff_t pos = *ppos;
	size_t count = iov_iter_count(iter);

	if (!count)
		goto out; /* skip atime */

	if (iocb->ki_flags & IOCB_DIRECT) {	/* direct IO read */
		struct address_space *mapping = file->f_mapping;
		struct inode *inode = mapping->host;
		loff_t size;

		size = i_size_read(inode);
		/* write back dirty page-cache pages in the range first, for consistency */
		retval = filemap_write_and_wait_range(mapping, pos,
					pos + count - 1);
		if (!retval) {	/* writeback succeeded: read from the device via direct IO */
			struct iov_iter data = *iter;
			retval = mapping->a_ops->direct_IO(iocb, &data, pos);
		}

		if (retval > 0) {
			*ppos = pos + retval;
			iov_iter_advance(iter, retval);
		}

		/*
		 * Btrfs can have a short DIO read if we encounter
		 * compressed extents, so if there was an error, or if
		 * we've already read everything we wanted to, or if
		 * there was a short read because we hit EOF, go ahead
		 * and return. Otherwise fallthrough to buffered io for
		 * the rest of the read. Buffered reads will not work for
		 * DAX files, so don't bother trying.
		 */
		if (retval < 0 || !iov_iter_count(iter) || *ppos >= size ||
		    IS_DAX(inode)) {
			file_accessed(file);
			goto out;
		}
	}

	/* buffered read (also the rest of a short direct read) */
	retval = do_generic_file_read(file, ppos, iter, retval);
out:
	return retval;
}
EXPORT_SYMBOL(generic_file_read_iter);
```
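The fallback rule at the end of the IOCB_DIRECT branch can be replayed as plain arithmetic. This is a toy model under stated assumptions: toy_direct_read and struct toy_split are hypothetical names, dio_ret stands for whatever filemap_write_and_wait_range plus direct_IO returned, and the buffered path is assumed to succeed up to EOF. It also shows that bytes already delivered by direct IO are passed to do_generic_file_read as written, so they are included in the final result.

```c
#include <assert.h>
#include <stdbool.h>

/* Outcome of one IOCB_DIRECT read in the toy model. */
struct toy_split {
	long direct;	/* bytes delivered by the direct IO attempt */
	long buffered;	/* bytes then delivered by do_generic_file_read */
	long result;	/* value handed back to the caller */
};

/*
 * pos/count: the request; i_size: file size; dio_ret: assumed combined
 * result of writeback + direct_IO (bytes, or a negative error code);
 * is_dax: whether IS_DAX(inode) holds.
 */
struct toy_split toy_direct_read(long pos, long count, long i_size,
				 long dio_ret, bool is_dax)
{
	struct toy_split s = { 0, 0, 0 };

	assert(pos >= 0 && count > 0 && dio_ret <= count);
	if (dio_ret > 0) {		/* *ppos = pos + retval; iov_iter_advance() */
		s.direct = dio_ret;
		pos += dio_ret;
		count -= dio_ret;
	}
	/* Same test as the kernel: error, nothing left, EOF, or DAX -> return */
	if (dio_ret < 0 || count == 0 || pos >= i_size || is_dax) {
		s.result = dio_ret;
		return s;
	}
	/* Fall through: do_generic_file_read starts with written = retval */
	long left_in_file = i_size - pos;
	s.buffered = count < left_in_file ? count : left_in_file;
	s.result = s.direct + s.buffered;
	return s;
}
```

For instance, a short direct read of 4096 out of 8192 bytes (as with the Btrfs compressed extents mentioned in the kernel comment) makes the buffered path supply the other 4096 bytes, and the caller sees 8192.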

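Direct IO:

Control flow: for a direct IO read, the dirty pages of the mapping in the requested range are first written back to disk, and then mapping->a_ops->direct_IO reads the data from the device. Only when the direct read comes up short without an error or EOF (for example on Btrfs compressed extents), and the file is not DAX, is the remainder read through do_generic_file_read.

Data flow: the data goes from the storage device straight into the user buffer without being copied into the page cache, which saves one copy.

Buffered IO:

Control flow: for buffered IO, do_generic_file_read is called directly to read the data.

Data flow: the data is first read from the device into the kernel page cache (if it is not already cached), and then copied from the page cache into the user buffer.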
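From user space, direct IO is requested with the O_DIRECT open flag. The sketch below is a hedged illustration, not a portable recipe: it assumes Linux with glibc, a 4096-byte alignment (DIO_ALIGN; the real requirement depends on the filesystem and device), and a hypothetical helper read_prefer_direct. It reads into an aligned buffer and, as a user-space policy of its own (not the kernel's), retries with a normal buffered open if the filesystem refuses O_DIRECT or the direct read fails.

```c
#define _GNU_SOURCE		/* exposes O_DIRECT in glibc's <fcntl.h> */
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Assumed alignment; the real requirement depends on the filesystem and device. */
#define DIO_ALIGN 4096

/*
 * Read up to len bytes from the start of path into out.
 * len must be a multiple of DIO_ALIGN. *used_direct is set to 1 when the
 * O_DIRECT read succeeded and 0 when the buffered fallback was used.
 * Returns the number of bytes read, or -1 on error.
 */
ssize_t read_prefer_direct(const char *path, char *out, size_t len,
			   int *used_direct)
{
	void *bounce = NULL;
	ssize_t n = -1;

	assert(len > 0 && len % DIO_ALIGN == 0);
	/* O_DIRECT transfers need a suitably aligned user buffer. */
	if (posix_memalign(&bounce, DIO_ALIGN, len) != 0)
		return -1;

	*used_direct = 0;
	int fd = open(path, O_RDONLY | O_DIRECT);
	if (fd >= 0) {
		/* Kernel side: IOCB_DIRECT -> write back dirty pages -> a_ops->direct_IO */
		n = pread(fd, bounce, len, 0);
		close(fd);
		*used_direct = (n >= 0);
	}
	if (n < 0) {
		/* O_DIRECT refused (e.g. EINVAL) or failed: plain buffered read,
		 * which goes through do_generic_file_read and the page cache. */
		fd = open(path, O_RDONLY);
		if (fd >= 0) {
			n = pread(fd, bounce, len, 0);
			close(fd);
		}
	}
	if (n > 0)
		memcpy(out, bounce, (size_t)n);
	free(bounce);
	return n;
}
```

If the file was just written through the page cache, the O_DIRECT read still returns the new data, because generic_file_read_iter writes back the dirty pages of the range before calling direct_IO.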

Analysis of do_generic_file_read

```c
/**
 * do_generic_file_read - generic file read routine
 * @filp:	the file to read
 * @ppos:	current file position
 * @iter:	data destination
 * @written:	already copied
 *
 * This is a generic file read routine, and uses the
 * mapping->a_ops->readpage() function for the actual low-level stuff.
 *
 * This is really ugly. But the goto's actually try to clarify some
 * of the logic when it comes to error handling etc.
 */
static ssize_t do_generic_file_read(struct file *filp, loff_t *ppos,
		struct iov_iter *iter, ssize_t written)
{
	struct address_space *mapping = filp->f_mapping;
	struct inode *inode = mapping->host;
	struct file_ra_state *ra = &filp->f_ra;
	pgoff_t index;
	pgoff_t last_index;
	pgoff_t prev_index;
	unsigned long offset;      /* offset into pagecache page */
	unsigned int prev_offset;
	int error = 0;

	/* Reads work page by page, so compute the first page of this read */
	index = *ppos >> PAGE_SHIFT;
	prev_index = ra->prev_pos >> PAGE_SHIFT;	/* page index of the previous read */
	prev_offset = ra->prev_pos & (PAGE_SIZE-1);	/* offset of the previous read within that page */
	last_index = (*ppos + iter->count + PAGE_SIZE-1) >> PAGE_SHIFT;	/* one past the last page to read */
	offset = *ppos & ~PAGE_MASK;

	for (;;) {
		struct page *page;
		pgoff_t end_index;
		loff_t isize;
		unsigned long nr, ret;

		cond_resched();
find_page:
		page = find_get_page(mapping, index);	/* look the page up in the radix tree */
		if (!page) {
			/* Not found: the data is not in memory, so do synchronous readahead first */
			page_cache_sync_readahead(mapping,
					ra, filp,
					index, last_index - index);
			page = find_get_page(mapping, index);	/* look it up in the radix tree again */
			if (unlikely(page == NULL))
				goto no_cached_page;
		}
		if (PageReadahead(page)) {
			/*
			 * The page carries the readahead marker, so the reader has
			 * reached the readahead window: start asynchronous readahead
			 * of the next window (a heuristic optimization)
			 */
			page_cache_async_readahead(mapping,
					ra, filp, page,
					index, last_index - index);
		}
		if (!PageUptodate(page)) {	/* contents not up to date: they must be refreshed */
			/*
			 * See comment in do_read_cache_page on why
			 * wait_on_page_locked is used to avoid unnecessarily
			 * serialisations and why it's safe.
			 */
			wait_on_page_locked_killable(page);
			if (PageUptodate(page))
				goto page_ok;

			if (inode->i_blkbits == PAGE_SHIFT ||
					!mapping->a_ops->is_partially_uptodate)
				goto page_not_up_to_date;
			if (!trylock_page(page))
				goto page_not_up_to_date;
			/* Did it get truncated before we got the lock? */
			if (!page->mapping)
				goto page_not_up_to_date_locked;
			if (!mapping->a_ops->is_partially_uptodate(page,
							offset, iter->count))
				goto page_not_up_to_date_locked;
			unlock_page(page);
		}
page_ok:	/* the page contents are up to date */
		/*
		 * i_size must be checked after we know the page is Uptodate.
		 *
		 * Checking i_size after the check allows us to calculate
		 * the correct value for "nr", which means the zero-filled
		 * part of the page is not copied back to userspace (unless
		 * another truncate extends the file - this is desired though).
		 */

		/*
		 * With the page up to date, copy its contents to user space:
		 * first validate the position against i_size, then copy.
		 */

		/* validity checks */
		isize = i_size_read(inode);
		end_index = (isize - 1) >> PAGE_SHIFT;
		if (unlikely(!isize || index > end_index)) {
			put_page(page);
			goto out;
		}

		/* nr is the maximum number of bytes to copy from this page */
		nr = PAGE_SIZE;
		if (index == end_index) {
			nr = ((isize - 1) & ~PAGE_MASK) + 1;
			if (nr <= offset) {
				put_page(page);
				goto out;
			}
		}
		nr = nr - offset;

		/* If users can be writing to this page using arbitrary
		 * virtual addresses, take care about potential aliasing
		 * before reading the page on the kernel side.
		 */
		if (mapping_writably_mapped(mapping))
			flush_dcache_page(page);

		/*
		 * When a sequential read accesses a page several times,
		 * only mark it as accessed the first time.
		 */
		if (prev_index != index || offset != prev_offset)
			mark_page_accessed(page);
		prev_index = index;

		/*
		 * Ok, we have the page, and it's up-to-date, so
		 * now we can copy it to user space...
		 */
		/* copy the data from kernel space to user space */
		ret = copy_page_to_iter(page, offset, nr, iter);
		offset += ret;
		index += offset >> PAGE_SHIFT;
		offset &= ~PAGE_MASK;
		prev_offset = offset;

		put_page(page);
		written += ret;
		if (!iov_iter_count(iter))
			goto out;
		if (ret < nr) {
			error = -EFAULT;
			goto out;
		}
		continue;

page_not_up_to_date:
		/* Get exclusive access to the page ... */
		error = lock_page_killable(page);
		if (unlikely(error))
			goto readpage_error;

page_not_up_to_date_locked:
		/* Did it get truncated before we got the lock? */
		/*
		 * With the lock held, the page turns out to be unmapped: it was
		 * probably released by someone else before we got the lock,
		 * so drop it and start over
		 */
		if (!page->mapping) {
			unlock_page(page);
			put_page(page);
			continue;
		}

		/* Did somebody else fill it already? */
		/* With the lock held, the data turns out to be valid: no read needed */
		if (PageUptodate(page)) {
			unlock_page(page);
			goto page_ok;
		}

readpage:
		/*
		 * Do the actual read.
		 * A previous I/O error may have been due to temporary
		 * failures, eg. multipath errors.
		 * PG_error will be set again if readpage fails.
		 */
		ClearPageError(page);
		/* Start the actual read. The read will unlock the page. */
		error = mapping->a_ops->readpage(filp, page);
		…
		if (…error) {
			….
			goto readpage_error;
		}
		…
		goto page_ok;

readpage_error:
		/* UHHUH! A synchronous read error occurred. Report it */
		put_page(page);
		goto out;

no_cached_page:
		/*
		 * The data is not cached and readahead did not bring it in,
		 * so explicitly allocate a page and read it.
		 * Ok, it wasn't cached, so we need to create a new page..
		 */
		page = page_cache_alloc_cold(mapping);
		…
		error = add_to_page_cache_lru(page, mapping, index,
				mapping_gfp_constraint(mapping, GFP_KERNEL));
		if (error) {
			…
			goto out;
		}
		goto readpage;
	}

out:
	ra->prev_pos = prev_index;
	ra->prev_pos <<= PAGE_SHIFT;
	ra->prev_pos |= prev_offset;

	*ppos = ((loff_t)index << PAGE_SHIFT) + offset;
	file_accessed(filp);
	return written ? written : error;
}
```

The filesystem thus reads data from disk into the page cache through the readahead mechanism (falling back to ->readpage for pages readahead did not bring in), and do_generic_file_read copies it from there into the user buffer.
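The index, offset and nr arithmetic of the page_ok path can be checked with a small user-space replay. This sketch assumes 4 KiB pages (TOY_PAGE_SHIFT 12, as on x86-64) and that every page is cached, up to date and copied in full; toy_last_index and toy_generic_read are hypothetical names. For a 10000-byte file, a 10000-byte read at offset 5000 starts in page 1 at offset 904, copies 3192 bytes from page 1 and 1808 bytes from page 2, and stops at EOF after 5000 bytes; last_index is 4, one past the last page the request covers.

```c
#include <assert.h>

/* Assumed 4 KiB pages (as on x86-64); the kernel's PAGE_SHIFT is per-architecture. */
#define TOY_PAGE_SHIFT 12
#define TOY_PAGE_SIZE  (1L << TOY_PAGE_SHIFT)
#define TOY_PAGE_MASK  (~(TOY_PAGE_SIZE - 1))

/* last_index as computed at the top of do_generic_file_read: one past the last page. */
long toy_last_index(long pos, long count)
{
	return (pos + count + TOY_PAGE_SIZE - 1) >> TOY_PAGE_SHIFT;
}

/*
 * Replays the page_ok arithmetic for a read of count bytes at pos in a
 * file of isize bytes, assuming every page is cached, up to date and copied
 * in full. Returns the bytes copied; *pages receives how many pages were
 * copied from.
 */
long toy_generic_read(long pos, long count, long isize, int *pages)
{
	long index = pos >> TOY_PAGE_SHIFT;	/* first page of the read */
	long offset = pos & ~TOY_PAGE_MASK;	/* offset inside that page */
	long written = 0;

	assert(pos >= 0 && count >= 0 && isize >= 0);
	*pages = 0;
	while (count > 0) {
		if (isize == 0)
			break;				/* empty file */
		long end_index = (isize - 1) >> TOY_PAGE_SHIFT;	/* last page with data */
		if (index > end_index)
			break;				/* read starts past EOF */
		long nr = TOY_PAGE_SIZE;		/* max bytes from this page */
		if (index == end_index) {
			nr = ((isize - 1) & ~TOY_PAGE_MASK) + 1;	/* bytes in use in the last page */
			if (nr <= offset)
				break;			/* already at EOF */
		}
		nr -= offset;
		long ret = nr < count ? nr : count;	/* copy_page_to_iter stops at the iter's end */
		(*pages)++;
		offset += ret;
		index += offset >> TOY_PAGE_SHIFT;	/* advance once the page is used up */
		offset &= ~TOY_PAGE_MASK;
		written += ret;
		count -= ret;
	}
	return written;
}
```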

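1.4 Page Cache layer

The page cache is an in-memory copy of file data, so its management involves both the memory management system and the filesystem. On one hand, as part of physical memory, the page cache takes part in allocating and reclaiming physical memory; on the other hand, its data comes from files on storage devices, so it must go through the filesystem to read from and write to those devices. From the operating system's point of view, the page cache is the link between memory management and the filesystem. Page cache management is therefore an important part of the operating system, and its performance directly affects the performance of both the filesystem and memory management.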
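To connect the page cache with the readahead calls in do_generic_file_read, here is a toy model. It assumes a fixed readahead window (TOY_RA_WIN pages) and a fixed marker distance (TOY_RA_ASYNC), whereas the kernel sizes the window dynamically; all names are hypothetical. A miss triggers a synchronous batch (like page_cache_sync_readahead) and places a marker, standing in for PG_readahead, inside the batch; touching a marked page triggers the next batch (like page_cache_async_readahead), so a sequential reader misses only once.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define TOY_NPAGES   64	/* pages in the toy file */
#define TOY_RA_WIN   4	/* assumed fixed readahead window, in pages */
#define TOY_RA_ASYNC 2	/* marker sits this many pages before the window end */

struct toy_cache {
	bool cached[TOY_NPAGES];	/* page present and up to date */
	bool ra_marker[TOY_NPAGES];	/* stand-in for the PG_readahead flag */
	int next_window;		/* first page of the next readahead batch */
	int submissions;		/* readahead batches issued */
	int misses;			/* lookups that found no page */
};

void toy_cache_init(struct toy_cache *c)
{
	memset(c, 0, sizeof *c);
}

/* "Read" pages [start, start + TOY_RA_WIN) from disk and place the marker. */
static void toy_readahead(struct toy_cache *c, int start)
{
	int end = start + TOY_RA_WIN;
	if (end > TOY_NPAGES)
		end = TOY_NPAGES;		/* do not read past the end of the file */
	if (start >= end)
		return;
	for (int i = start; i < end; i++)
		c->cached[i] = true;
	int mark = start + TOY_RA_WIN - TOY_RA_ASYNC;
	if (mark < end)
		c->ra_marker[mark] = true;
	c->next_window = end;
	c->submissions++;
}

/* One page lookup, shaped like the find_page step of do_generic_file_read. */
void toy_access(struct toy_cache *c, int index)
{
	assert(index >= 0 && index < TOY_NPAGES);
	if (!c->cached[index]) {		/* miss: like page_cache_sync_readahead */
		c->misses++;
		toy_readahead(c, index);
	}
	if (c->ra_marker[index]) {		/* marker hit: like page_cache_async_readahead */
		c->ra_marker[index] = false;
		toy_readahead(c, c->next_window);
	}
}
```

Reading pages 0 to 11 sequentially issues four batches (pages 0-3, 4-7, 8-11 and 12-15) with a single miss.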

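It is not covered in detail here and will be explained in a later article.

1.5 Generic block layer

Same as in the IO write path analysis.

1.6 IO scheduling layer

Same as in the IO write path analysis.

1.7 Device driver layer

Same as in the IO write path analysis.

1.8 Device layer

Not analyzed for now.

1.9 References

[Blog] http://blog.chinaunix.net/uid-28236237-id-4030381.html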
