Importing data into HBase with the Java API

2016-12-01 23:13

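This post uses HBase to store a celebrity dataset made up of text information and images for each celebrity.

The text information is crawled from Wikipedia with the Scrapy framework and saved in CSV format.

The images are crawled from Baidu Images, 30 per person, and saved in a folder named after the celebrity.

The post therefore covers the following parts:

- The crawler for the text

- The crawler for the images

- Importing the data into HBase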

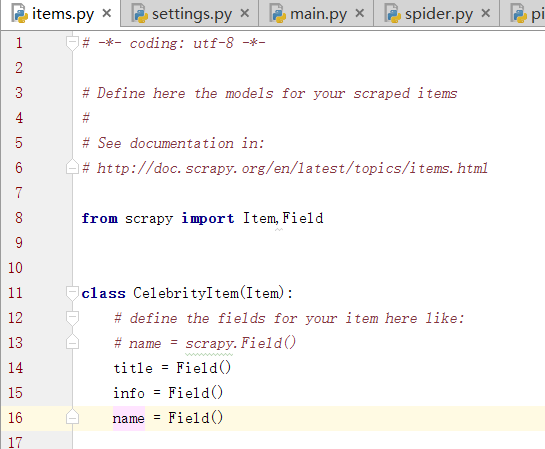

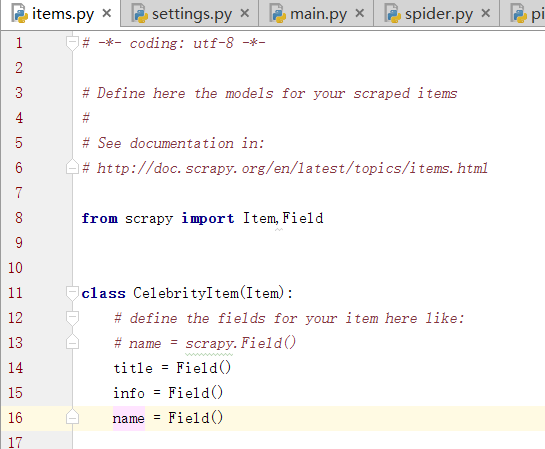


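Crawling Wikipedia with Scrapy

First, create a new Scrapy project.

Then add the following to settings.py:

<python>
FEED_URI = u'file:///F:/pySpace/celebrity/info1.csv'
FEED_FORMAT = 'CSV'
</python>

These two settings save the crawled data in CSV format and set where the file is written.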
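Once a crawl has finished, the exported file can be checked with the standard csv module. The following is a minimal sketch, assuming the export has the columns name, title and info (the fields the spider fills and the Java import reads later). The sample rows and the helper name `load_sections` are made up for illustration, and the sketch targets Python 3, unlike the Python 2 scripts in this post.

```python
import csv
import io

# Hypothetical sample standing in for the exported info1.csv
SAMPLE_CSV = (
    "name,title,info\n"
    "成龙,简介,Example intro text\n"
    "成龙,Career,Example career text\n"
)


def load_sections(csv_file):
    """Group exported rows by celebrity name: {name: {title: info}}."""
    sections = {}
    for row in csv.DictReader(csv_file):
        sections.setdefault(row["name"], {})[row["title"]] = row["info"]
    return sections


sections = load_sections(io.StringIO(SAMPLE_CSV))
print(sorted(sections["成龙"]))
```

For the real export, the in-memory sample would be replaced by the file opened with `io.open(path, encoding="utf-8", newline="")`.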

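Add the following to main.py:

<python>
import sys

# Python 2 only: make UTF-8 the default encoding so that the implicit
# str/unicode conversions on Chinese names in the spider do not fail
reload(sys)
sys.setdefaultencoding('utf-8')

from scrapy import cmdline

# Same as running "scrapy crawl celebrity" from the project directory
cmdline.execute("scrapy crawl celebrity".split())
</python>

The spider itself is defined as follows:

<python>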

# -*- coding: utf-8 -*-
from scrapy.spiders import CrawlSpider
from scrapy.selector import Selector
from scrapy.http import Request
from celebrity.items import CelebrityItem

# Read the list of celebrity names to crawl, one name per line
with open(r'F:\pySpace\celebrity\name_lists1.txt', 'r') as f:
    url_list = f.read().split('\n')

# Section headings that are skipped: references, notes, external links, sources
SKIPPED_TITLES = (u'参考文献', u'注释', u'外部链接', u'参考资料')


class Celebrity(CrawlSpider):
    # parse() is overridden directly, which works here only because
    # no CrawlSpider rules are defined
    name = "celebrity"
    len_url = len(url_list)
    num = 1  # index of the current name; starts at 1, so url_list[0] is never crawled
    front_url = 'https://zh.wikipedia.org/wiki/'
    start_urls = [front_url + url_list[num].encode('utf-8')]

    def parse(self, response):
        selector = Selector(response)
        celebrity_name = url_list[self.num].encode('utf-8')

        # Section titles; the lead text is filed under u'简介' (introduction)
        headlines = selector.xpath('//span[@class="mw-headline"]/text()').extract()
        titles = [u'简介'] + [t for t in headlines if t not in SKIPPED_TITLES]

        # Collect the text of every top-level paragraph and list
        all_info = []
        for paragraph in selector.xpath('//*[@id="mw-content-text"]/p'):
            info = ''.join(paragraph.xpath('.//text()').extract())
            if info != '':
                all_info.append(info.strip())
        for ul in selector.xpath('//*[@id="mw-content-text"]/ul'):
            info = ''.join(ul.xpath('.//text()').extract())
            if info not in ('', '\n', ' '):
                all_info.append(info)

        # Crawl with titles: the text blocks are spread evenly over the titles
        epoch = len(all_info) // len(titles)
        if epoch > 0:
            k = 0
            for i, title in enumerate(titles):
                item = CelebrityItem()  # a fresh item per section
                item['name'] = celebrity_name
                item['title'] = title
                if i == len(titles) - 1:
                    # The last title takes all remaining blocks
                    item['info'] = ''.join(all_info[k:])
                else:
                    item['info'] = ''.join(all_info[k:k + epoch])
                    k = k + epoch
                yield item
        else:
            # Fewer text blocks than titles: one block per title
            for j in range(len(all_info)):
                item = CelebrityItem()
                item['name'] = celebrity_name
                item['title'] = titles[j]
                item['info'] = all_info[j]
                yield item

        # Crawl without titles:
        # for j in range(len(all_info)):
        #     item['name'] = url_list[self.num].encode('utf-8')
        #     item['info'] = all_info[j]
        #     yield item

        print celebrity_name
        self.num = self.num + 1
        print self.num
        # Chain to the next name in the list until it is exhausted
        if self.num < self.len_url:
            next_url = self.front_url + url_list[self.num].encode('utf-8')
            yield Request(next_url, callback=self.parse)

</python>

Crawling the images
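The image script in this section finds the image addresses by matching the "objURL" fields of the search response with a regular expression and keeps at most 30 per person. The following is a minimal sketch of that one step. The response fragment and the helper name `extract_image_urls` are made up for illustration; the real response contains many more fields and its exact shape is not shown in this post.

```python
import re

# Hypothetical, hand-written response fragment (not a real Baidu payload)
SAMPLE_PAGE = (
    '{"imgs":[{"objURL":"http://example.com/a.jpg","width":640},'
    '{"objURL":"http://example.com/b.jpg","width":800}]}'
)


def extract_image_urls(page, limit=30):
    """Return at most `limit` values of the "objURL" fields found in `page`."""
    urls = re.findall(r'"objURL":"(.*?)"', page, re.S)
    return urls[:limit]


urls = extract_image_urls(SAMPLE_PAGE)
print(urls)
```

The non-greedy `(.*?)` stops at the first closing quote, so each match is a single address rather than everything up to the last quote in the response.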

<python>
import os
import re
import sys
import urllib
import urllib2

# Python 2 only: make UTF-8 the default encoding for implicit str/unicode conversions
reload(sys)
sys.setdefaultencoding("utf-8")

IMG_ROOT = 'F:/pySpace/celebrity/img_data/'


def img_spider(name_file):
    user_agent = "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36"
    headers = {'User-Agent': user_agent}

    # One celebrity name per line
    with open(name_file) as f:
        name_list = [name.rstrip().decode('utf-8') for name in f.readlines()]

    for name in name_list:
        if not os.path.exists(IMG_ROOT + name):
            os.makedirs(IMG_ROOT + name)
        try:
            # Percent-encode the name (spaces become %20) before adding it to the query
            url = ("http://image.baidu.com/search/avatarjson?tn=resultjsonavatarnew&ie=utf-8&word="
                   + urllib.quote(name.encode('utf-8')) + "&cg=girl&rn=60&pn=60")
            req = urllib2.Request(url, headers=headers)
            res = urllib2.urlopen(req)
            page = res.read()
            img_srcs = re.findall('"objURL":"(.*?)"', page, re.S)
            print name, len(img_srcs)
        except Exception:
            print name, " error:"
            continue

        j = 1  # number of the next image file; only advances after a successful download
        src_txt = ''
        for src in img_srcs:
            with open(IMG_ROOT + name + '/' + str(j) + '.jpg', 'wb') as p:
                try:
                    print "downloading No.%d" % j
                    req = urllib2.Request(src, headers=headers)
                    # Pass the Request object so that the User-Agent header is sent
                    img = urllib2.urlopen(req, timeout=3)
                    p.write(img.read())
                except Exception:
                    print "No.%d error:" % j
                    continue
            src_txt = src_txt + src + '\n'
            if j == 30:
                break
            j = j + 1

        # Save the source URLs of the downloaded images as a txt file
        with open(IMG_ROOT + name + '/' + name + '.txt', 'wb') as p2:
            p2.write(src_txt)
        print "save %s txt done" % name


if __name__ == '__main__':
    name_file = "name_lists1.txt"
    img_spider(name_file)
</python>

Importing the data into HBase with the Java API

Create two tables in HBase: celebrity (for the images) and celebrity_info (for the text). Both use the celebrity's name as the row key.

<java>

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;
import com.csvreader.CsvReader;
import java.nio.charset.Charset;
import java.nio.file.Files;
import java.io.*;

/**
 * Created by mxy on 2016/10/31.
 */
public class CelebrityDataBase {

    // Single column family used by both tables
    private static final byte[] CF = Bytes.toBytes("cf1");

    private static Configuration newConfig() {
        Configuration config = HBaseConfiguration.create();
        config.set("hbase.zookeeper.quorum", "node4,node5,node6");
        return config;
    }

    /* Create a table, dropping any existing table of the same name first */
    public void createTable(String tablename) throws Exception {
        HBaseAdmin admin = new HBaseAdmin(newConfig());
        if (admin.tableExists(tablename)) {
            admin.disableTable(tablename);
            admin.deleteTable(tablename);
        }
        HTableDescriptor t = new HTableDescriptor(TableName.valueOf(tablename));
        HColumnDescriptor cf1 = new HColumnDescriptor(CF);
        cf1.setMaxVersions(10);
        t.addFamily(cf1);
        admin.createTable(t);
        admin.close();
    }

    // Insert the text data from the CSV file
    public void putInfo() throws Exception {
        CsvReader r = new CsvReader("F://pySpace//celebrity//info.csv", ',', Charset.forName("utf-8"));
        r.readHeaders();
        HTable table = new HTable(newConfig(), "celebrity_info");
        while (r.readRecord()) {
            System.out.println(r.get("name"));
            // Row key: name; qualifier: section title; value: section text
            Put put = new Put(Bytes.toBytes(r.get("name")));
            put.add(CF, Bytes.toBytes(r.get("title")), Bytes.toBytes(r.get("info")));
            table.put(put);
        }
        r.close();
        table.close();
    }

    // Look up one image
    public void getImage(String celebrity_name, String img_num) throws Exception {
        HTable table = new HTable(newConfig(), "celebrity");
        Get get = new Get(Bytes.toBytes(celebrity_name));
        Result res = table.get(get);
        Cell c1 = res.getColumnLatestCell(CF, Bytes.toBytes(img_num));
        // Path of the image file restored from the stored bytes
        File file = new File("D://" + celebrity_name + img_num);
        FileOutputStream fos = new FileOutputStream(file);
        fos.write(CellUtil.cloneValue(c1));
        fos.flush();
        fos.close();
        System.out.println(file.length());
        table.close();
    }

    // Look up the text data
    public void getInfo(String name) throws Exception {
        HTable table = new HTable(newConfig(), "celebrity_info");
        Get get = new Get(Bytes.toBytes(name));
        Result result = table.get(get);
        for (Cell cell : result.rawCells()) {
            System.out.println("rowKey:" + Bytes.toString(CellUtil.cloneRow(cell))
                    + " cfName:" + Bytes.toString(CellUtil.cloneFamily(cell))
                    + " qualifierName:" + Bytes.toString(CellUtil.cloneQualifier(cell))
                    + " value:" + Bytes.toString(CellUtil.cloneValue(cell)));
        }
        table.close();
    }

    // Insert one image
    public void putImage(String each_celebrity, String each_img) throws Exception {
        HTable table = new HTable(newConfig(), "celebrity");
        String path = String.format("F://pySpace//celebrity//img_data//%s//%s", each_celebrity, each_img);
        // Read the whole image file into memory
        byte[] bytes = Files.readAllBytes(new File(path).toPath());
        System.out.println(bytes.length);
        // Row key: name; qualifier: image file name; value: image bytes
        Put put = new Put(Bytes.toBytes(each_celebrity));
        put.add(CF, Bytes.toBytes(each_img), bytes);
        table.put(put);
        table.close();
    }

    public static void main(String[] args) {
        CelebrityDataBase pt = new CelebrityDataBase();
        try {
            pt.createTable("celebrity");
            pt.createTable("celebrity_info");
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("createTable error");
        }

        // Store the images: one subfolder per celebrity
        String root_path = "F://pySpace//celebrity//img_data";
        File[] files = new File(root_path).listFiles();
        for (int i = 0; i < files.length; i++) {
            String each_path = root_path + "//" + files[i].getName();
            File[] celebrity_files = new File(each_path).listFiles();
            System.out.println(each_path);
            for (int j = 0; j < celebrity_files.length; j++) {
                String img_name = celebrity_files[j].getName();
                // Skip the txt file that lists the image source URLs
                if (!img_name.endsWith(".jpg")) {
                    continue;
                }
                try {
                    pt.putImage(files[i].getName(), img_name);
                } catch (Exception e) {
                    e.printStackTrace();
                    System.out.println("putImage error");
                }
            }
        }

        // Store the text information
        try {
            pt.putInfo();
        } catch (Exception e) {
            e.printStackTrace();
        }

        // Fetch an image
        try {
            pt.getImage("龔照勝", "13.jpg");
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("getImage error");
        }

        // Fetch the text
        try {
            pt.getInfo("成龙");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

</java>
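To make the table layout concrete, the sketch below models the two tables as nested dictionaries: the row key is the celebrity's name, and each column is "cf1:" plus either a section title (celebrity_info) or an image file name (celebrity). This is only an in-memory stand-in written in Python 3, not the HBase API; the helper `put` and the sample values are made up, and unlike the real cf1 family (which keeps up to 10 versions) it keeps only the latest value per column.

```python
def put(table, row_key, qualifier, value, family="cf1"):
    """Store value under row_key in column family:qualifier, replacing any older value."""
    table.setdefault(row_key, {})[family + ":" + qualifier] = value


celebrity_info = {}  # text table: qualifier is the section title
celebrity = {}       # image table: qualifier is the image file name

put(celebrity_info, "成龙", "简介", "Example intro text")
put(celebrity_info, "成龙", "Career", "Example career text")
put(celebrity, "成龙", "1.jpg", b"\xff\xd8\xff")  # placeholder bytes, not a real image

print(sorted(celebrity_info["成龙"]))
print(sorted(celebrity["成龙"]))
```

Because the name is the row key in both tables, a single Get by name returns every section or every image stored for that person.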
