[Caffe Code Analysis] DataLayer

2016-10-10 10:15


What it does:

DataLayer reads the contents of a database into the corresponding Blobs, one batch at a time.

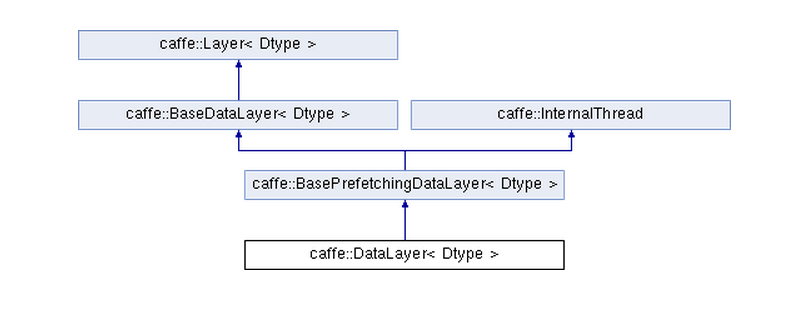

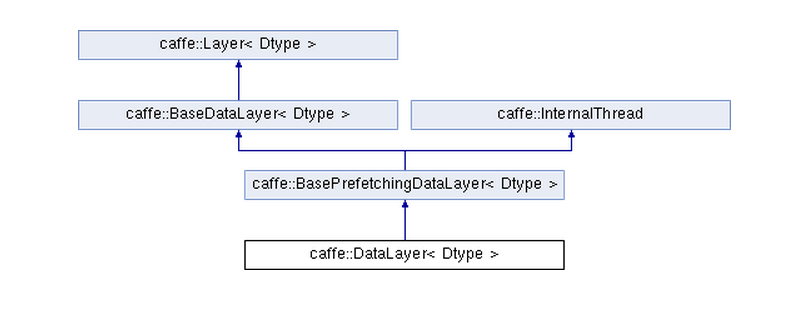


Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission.


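First, look at its inheritance hierarchy.

Note that it does not inherit directly from BaseDataLayer. Because it reads the database in parallel with training, it needs a separate thread that prefetches data ahead of time, so it derives from BasePrefetchingDataLayer, which in turn inherits from both BaseDataLayer and InternalThread.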
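To make the prefetching idea concrete, here is a minimal, self-contained sketch of the pattern using `std::thread`. It is not Caffe's code: the class and its members (`PrefetchingReader`, `Start`, `Join`, `Forward`, `Entry`) are hypothetical, and "reading a record" is simulated by producing consecutive integers. It assumes the behaviour of the Caffe version analysed here, where the forward pass waits for the prefetch thread, copies the prefetched batch out, and immediately starts prefetching the next one.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical stand-in for BasePrefetchingDataLayer: a background thread
// fills prefetch_ while the caller works on the previous batch.
class PrefetchingReader {
 public:
  explicit PrefetchingReader(int batch_size)
      : batch_size_(batch_size), prefetch_(batch_size) {
    assert(batch_size > 0);
    Start();  // analogous to CreatePrefetchThread() at the end of setup
  }
  ~PrefetchingReader() { Join(); }  // analogous to JoinPrefetchThread() in ~DataLayer()

  // Analogous to Forward_cpu: wait for the batch, copy it out, start the next one.
  std::vector<int> Forward() {
    Join();
    std::vector<int> top = prefetch_;
    Start();
    return top;
  }

 private:
  void Start() { thread_ = std::thread(&PrefetchingReader::Entry, this); }
  void Join() {
    if (thread_.joinable()) thread_.join();
  }
  // Analogous to InternalThreadEntry: fill one whole batch, record by record.
  void Entry() {
    for (int i = 0; i < batch_size_; ++i) {
      prefetch_[i] = next_record_++;  // simulated "read one record from the DB"
    }
  }

  int batch_size_;
  int next_record_ = 0;
  std::vector<int> prefetch_;
  std::thread thread_;
};
```

The point of the design is overlap: while the network computes on batch k, the worker thread is already reading and decoding batch k+1. The join in `Forward` is also what makes it safe to read `prefetch_` without any extra locking.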

DataLayer has two pointer members that hold the database and the cursor:

```cpp
shared_ptr<db::DB> db_;
shared_ptr<db::Cursor> cursor_;
```
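`db::DB` and `db::Cursor` abstract over storage backends such as LMDB and LevelDB. The sketch below imitates that interface with an in-memory `std::map` so it runs without either library. The class names `MemoryDB` and `MemoryCursor` and the `Put` method are hypothetical; only the cursor methods that appear in the DataLayer source (`SeekToFirst`, `Next`, `valid`, `value`) plus `key` are modelled.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical in-memory stand-in for db::Cursor. Like LMDB and LevelDB,
// std::map keeps keys sorted, so iteration follows key order.
class MemoryCursor {
 public:
  explicit MemoryCursor(const std::map<std::string, std::string>& data)
      : data_(data), it_(data_.begin()) {}
  void SeekToFirst() { it_ = data_.begin(); }
  void Next() { ++it_; }
  bool valid() const { return it_ != data_.end(); }
  const std::string& key() const { assert(valid()); return it_->first; }
  // In Caffe the value is a serialized Datum, parsed with ParseFromString.
  const std::string& value() const { assert(valid()); return it_->second; }

 private:
  const std::map<std::string, std::string>& data_;  // must outlive the cursor
  std::map<std::string, std::string>::const_iterator it_;
};

// Hypothetical in-memory stand-in for db::DB.
class MemoryDB {
 public:
  void Put(const std::string& key, const std::string& value) { data_[key] = value; }
  // The caller owns the cursor (DataLayer stores it via cursor_.reset(...)).
  std::unique_ptr<MemoryCursor> NewCursor() const {
    return std::unique_ptr<MemoryCursor>(new MemoryCursor(data_));
  }

 private:
  std::map<std::string, std::string> data_;
};
```

Because records come back in key order rather than insertion order, the order in which a dataset was written (and how its keys were chosen) determines the order DataLayer sees.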

The member variables it inherits from its base class BasePrefetchingDataLayer are:

```cpp
protected:
  Blob<Dtype> prefetch_data_;
  Blob<Dtype> prefetch_label_;
  Blob<Dtype> transformed_data_;
```

They hold the prefetched data, the prefetched labels, and the transformed data, respectively.
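The shapes of these three blobs are fixed in `DataLayerSetUp`: `prefetch_data_` holds a whole batch, `transformed_data_` holds a single item, and `prefetch_label_` holds one label per item. When `crop_size > 0` the spatial size is `crop_size × crop_size`, otherwise it is the first datum's own height and width. The helper below reproduces that rule with plain vectors; `BlobShapes` and `ComputeShapes` are hypothetical names, not Caffe API.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper mirroring the Reshape calls in DataLayerSetUp.
struct BlobShapes {
  std::vector<int> prefetch_data;     // N x C x H x W
  std::vector<int> transformed_data;  // 1 x C x H x W
  std::vector<int> prefetch_label;    // N (only reshaped when labels are output)
};

BlobShapes ComputeShapes(int batch_size, int channels, int height, int width,
                         int crop_size) {
  assert(batch_size > 0 && channels > 0);
  // A positive crop_size overrides the datum's height and width.
  const int h = crop_size > 0 ? crop_size : height;
  const int w = crop_size > 0 ? crop_size : width;
  BlobShapes s;
  s.prefetch_data = {batch_size, channels, h, w};
  s.transformed_data = {1, channels, h, w};
  s.prefetch_label = {batch_size};
  return s;
}
```

For example, a batch of 64 RGB 256×256 images with `crop_size: 227` gives a 64×3×227×227 data blob, a 1×3×227×227 per-item blob, and a 64-element label blob.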

From BaseDataLayer it also inherits:

```cpp
bool output_labels_;
```

Member functions:

`InternalThreadEntry()` does the actual data reading; it is the function run by the prefetch thread.

Inside it, the following pointers are set up:

```cpp
Dtype* top_data = this->prefetch_data_.mutable_cpu_data();
Dtype* top_label = NULL;  // suppress warnings about uninitialized variables
```

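They point to the buffers that hold the input batch: `top_data` into `prefetch_data_`, and `top_label` into `prefetch_label_` (assigned only when labels are output).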

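As before, each database record is first parsed into a `Datum`; the item is then transformed directly into its slot in the batch, and its label is stored:

```cpp
Datum datum;
datum.ParseFromString(cursor_->value());  // deserialize the record under the cursor

int offset = this->prefetch_data_.offset(item_id);        // start of item_id in the batch
this->transformed_data_.set_cpu_data(top_data + offset);  // alias that slot, no copy

top_label[item_id] = datum.label();
```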
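For a 4-D N×C×H×W blob, `Blob::offset(n)` evaluates ((n·C + c)·H + h)·W + w with c = h = w = 0, i.e. n·C·H·W. Since `set_cpu_data` only repoints `transformed_data_` at `top_data + offset` instead of copying, the transformer writes each item straight into the batch buffer. The sketch models this with plain arrays; `ItemOffset`, `FillBatch` and `TransformInto` are hypothetical, and `TransformInto` merely scales pixels as a stand-in for `DataTransformer::Transform`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Offset of item n in an N x C x H x W buffer (Blob::offset(n) with c = h = w = 0).
std::size_t ItemOffset(int n, int channels, int height, int width) {
  return static_cast<std::size_t>(n) * channels * height * width;
}

// Hypothetical stand-in for DataTransformer::Transform: writes one item
// (C*H*W values) to `out`, which points into the batch buffer.
void TransformInto(const std::vector<unsigned char>& pixels, float scale, float* out) {
  for (std::size_t i = 0; i < pixels.size(); ++i) out[i] = pixels[i] * scale;
}

// Fill a whole batch without an intermediate copy: `out` aliases the slot of
// item n, the same idea as transformed_data_.set_cpu_data(top_data + offset).
void FillBatch(const std::vector<std::vector<unsigned char>>& items, int channels,
               int height, int width, float scale, std::vector<float>* batch) {
  const std::size_t item_size = static_cast<std::size_t>(channels) * height * width;
  assert(batch->size() == items.size() * item_size);
  for (int n = 0; n < static_cast<int>(items.size()); ++n) {
    assert(items[n].size() == item_size);
    float* out = batch->data() + ItemOffset(n, channels, height, width);
    TransformInto(items[n], scale, out);
  }
}
```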

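Reading from the database is likewise driven by the cursor:

`cursor_->Next();` Note that records are always read in sequential order, so you must make sure the data was shuffled before it was written into the database. When the cursor runs past the last record, it wraps around to the first one via `cursor_->SeekToFirst()`.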
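Since DataLayer never reorders records, shuffling has to happen when the database is built (Caffe's `convert_imageset` tool has a `--shuffle` flag for this). The sketch below shows both halves under that assumption: a hypothetical `WriteShuffled` that permutes the records once with `std::shuffle` before "writing" them, and a hypothetical `WrappingReader` that walks them in order and wraps to the start like the `Next()` / `SeekToFirst()` logic in `InternalThreadEntry`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Hypothetical: shuffle once, at database-creation time.
std::vector<std::string> WriteShuffled(std::vector<std::string> records, unsigned seed) {
  std::mt19937 rng(seed);
  std::shuffle(records.begin(), records.end(), rng);
  return records;  // stands in for the records as stored in the DB
}

// Hypothetical sequential reader that wraps around at the end.
class WrappingReader {
 public:
  explicit WrappingReader(std::vector<std::string> db) : db_(std::move(db)) {
    assert(!db_.empty());
  }
  std::vector<std::string> NextBatch(int batch_size) {
    std::vector<std::string> batch;
    for (int i = 0; i < batch_size; ++i) {
      batch.push_back(db_[pos_]);
      ++pos_;                            // cursor_->Next()
      if (pos_ == db_.size()) pos_ = 0;  // !valid() -> cursor_->SeekToFirst()
    }
    return batch;
  }

 private:
  std::vector<std::string> db_;
  std::size_t pos_ = 0;
};
```

Shuffling once keeps the reader simple and fast, but every epoch then visits the records in the same order; a batch can also straddle the end of the database and continue from the beginning.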

=============

Source code:

```cpp
#include <opencv2/core/core.hpp>
#include <stdint.h>

#include <string>
#include <vector>

#include "caffe/common.hpp"
#include "caffe/data_layers.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"
#include "caffe/util/benchmark.hpp"
#include "caffe/util/io.hpp"
#include "caffe/util/math_functions.hpp"
#include "caffe/util/rng.hpp"

namespace caffe {

template <typename Dtype>
DataLayer<Dtype>::~DataLayer<Dtype>() {
  this->JoinPrefetchThread();
}

template <typename Dtype>
void DataLayer<Dtype>::DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
  // Initialize DB
  db_.reset(db::GetDB(this->layer_param_.data_param().backend()));
  db_->Open(this->layer_param_.data_param().source(), db::READ);
  cursor_.reset(db_->NewCursor());

  // Check if we should randomly skip a few data points
  if (this->layer_param_.data_param().rand_skip()) {
    unsigned int skip = caffe_rng_rand() %
                        this->layer_param_.data_param().rand_skip();
    LOG(INFO) << "Skipping first " << skip << " data points.";
    while (skip-- > 0) {
      cursor_->Next();
    }
  }
  // Read a data point, and use it to initialize the top blob.
  Datum datum;
  datum.ParseFromString(cursor_->value());

  bool force_color = this->layer_param_.data_param().force_encoded_color();
  if ((force_color && DecodeDatum(&datum, true)) ||
      DecodeDatumNative(&datum)) {
    LOG(INFO) << "Decoding Datum";
  }
  // image
  int crop_size = this->layer_param_.transform_param().crop_size();
  if (crop_size > 0) {
    top[0]->Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), crop_size, crop_size);
    this->prefetch_data_.Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), crop_size, crop_size);
    this->transformed_data_.Reshape(1, datum.channels(), crop_size, crop_size);
  } else {
    top[0]->Reshape(
        this->layer_param_.data_param().batch_size(), datum.channels(),
        datum.height(), datum.width());
    this->prefetch_data_.Reshape(this->layer_param_.data_param().batch_size(),
        datum.channels(), datum.height(), datum.width());
    this->transformed_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
  }
  LOG(INFO) << "output data size: " << top[0]->num() << ","
      << top[0]->channels() << "," << top[0]->height() << ","
      << top[0]->width();
  // label
  if (this->output_labels_) {
    vector<int> label_shape(1, this->layer_param_.data_param().batch_size());
    top[1]->Reshape(label_shape);
    this->prefetch_label_.Reshape(label_shape);
  }
}

// This function is used to create a thread that prefetches the data.
template <typename Dtype>
void DataLayer<Dtype>::InternalThreadEntry() {
  CPUTimer batch_timer;
  batch_timer.Start();
  double read_time = 0;
  double trans_time = 0;
  CPUTimer timer;
  CHECK(this->prefetch_data_.count());
  CHECK(this->transformed_data_.count());

  // Reshape on single input batches for inputs of varying dimension.
  const int batch_size = this->layer_param_.data_param().batch_size();
  const int crop_size = this->layer_param_.transform_param().crop_size();
  bool force_color = this->layer_param_.data_param().force_encoded_color();
  if (batch_size == 1 && crop_size == 0) {
    Datum datum;
    datum.ParseFromString(cursor_->value());
    if (datum.encoded()) {
      if (force_color) {
        DecodeDatum(&datum, true);
      } else {
        DecodeDatumNative(&datum);
      }
    }
    this->prefetch_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
    this->transformed_data_.Reshape(1, datum.channels(),
        datum.height(), datum.width());
  }

  Dtype* top_data = this->prefetch_data_.mutable_cpu_data();
  Dtype* top_label = NULL;  // suppress warnings about uninitialized variables
  if (this->output_labels_) {
    top_label = this->prefetch_label_.mutable_cpu_data();
  }
  for (int item_id = 0; item_id < batch_size; ++item_id) {
    timer.Start();
    // get a blob
    Datum datum;
    datum.ParseFromString(cursor_->value());

    cv::Mat cv_img;
    if (datum.encoded()) {
      if (force_color) {
        cv_img = DecodeDatumToCVMat(datum, true);
      } else {
        cv_img = DecodeDatumToCVMatNative(datum);
      }
      if (cv_img.channels() != this->transformed_data_.channels()) {
        LOG(WARNING) << "Your dataset contains encoded images with mixed "
        << "channel sizes. Consider adding a 'force_color' flag to the "
        << "model definition, or rebuild your dataset using "
        << "convert_imageset.";
      }
    }
    read_time += timer.MicroSeconds();
    timer.Start();

    // Apply data transformations (mirror, scale, crop...)
    int offset = this->prefetch_data_.offset(item_id);
    this->transformed_data_.set_cpu_data(top_data + offset);
    if (datum.encoded()) {
      this->data_transformer_->Transform(cv_img, &(this->transformed_data_));
    } else {
      this->data_transformer_->Transform(datum, &(this->transformed_data_));
    }
    if (this->output_labels_) {
      top_label[item_id] = datum.label();
    }
    trans_time += timer.MicroSeconds();
    // go to the next iter
    cursor_->Next();
    if (!cursor_->valid()) {
      DLOG(INFO) << "Restarting data prefetching from start.";
      cursor_->SeekToFirst();
    }
  }
  batch_timer.Stop();
  DLOG(INFO) << "Prefetch batch: " << batch_timer.MilliSeconds() << " ms.";
  DLOG(INFO) << " Read time: " << read_time / 1000 << " ms.";
  DLOG(INFO) << "Transform time: " << trans_time / 1000 << " ms.";
}

INSTANTIATE_CLASS(DataLayer);
REGISTER_LAYER_CLASS(Data);

}  // namespace caffe
```

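One detail of `DataLayerSetUp` above is easy to miss: when `rand_skip` is set, the layer advances the cursor by `caffe_rng_rand() % rand_skip` records before reading anything. According to the comment on `rand_skip` in `caffe.proto`, this keeps asynchronous SGD clients from all starting at the same record. The sketch reproduces only the arithmetic; `ApplyRandSkip` is a hypothetical name and `std::mt19937` is an assumed stand-in for `caffe_rng_rand()`.

```cpp
#include <cassert>
#include <cstddef>
#include <random>

// Hypothetical mirror of the rand_skip block in DataLayerSetUp. Returns the
// index of the first record the layer will read. rand_skip is assumed not to
// exceed num_records, because Caffe's skip loop calls cursor_->Next() without
// checking cursor_->valid().
std::size_t ApplyRandSkip(unsigned rand_skip, std::size_t num_records,
                          std::mt19937* rng) {
  if (rand_skip == 0) return 0;  // option not set: start at the first record
  assert(rand_skip <= num_records);
  const unsigned skip = static_cast<unsigned>((*rng)()) % rand_skip;
  return skip;  // same position as calling cursor_->Next() `skip` times
}
```

Note that skipping only shifts the starting point; the records that follow are still read in database order.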

The corresponding declarations from the header file (`caffe/data_layers.hpp`):

```cpp
template <typename Dtype>
class BaseDataLayer : public Layer<Dtype> {
 public:
  explicit BaseDataLayer(const LayerParameter& param);
  virtual ~BaseDataLayer() {}
  // LayerSetUp: implements common data layer setup functionality, and calls
  // DataLayerSetUp to do special data layer setup for individual layer types.
  // This method may not be overridden except by the BasePrefetchingDataLayer.
  virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {}
  // Data layers have no bottoms, so reshaping is trivial.
  virtual void Reshape(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {}

  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {}
  virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {}

 protected:
  TransformationParameter transform_param_;
  shared_ptr<DataTransformer<Dtype> > data_transformer_;
  bool output_labels_;
};

template <typename Dtype>
class BasePrefetchingDataLayer :
    public BaseDataLayer<Dtype>, public InternalThread {
 public:
  explicit BasePrefetchingDataLayer(const LayerParameter& param)
      : BaseDataLayer<Dtype>(param) {}
  virtual ~BasePrefetchingDataLayer() {}
  // LayerSetUp: implements common data layer setup functionality, and calls
  // DataLayerSetUp to do special data layer setup for individual layer types.
  // This method may not be overridden.
  void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  virtual void CreatePrefetchThread();
  virtual void JoinPrefetchThread();
  // The thread's function
  virtual void InternalThreadEntry() {}

 protected:
  Blob<Dtype> prefetch_data_;
  Blob<Dtype> prefetch_label_;
  Blob<Dtype> transformed_data_;
};

template <typename Dtype>
```

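The header comments describe a template-method arrangement: `BaseDataLayer::LayerSetUp` performs the setup common to all data layers and then calls the virtual hook `DataLayerSetUp`, which concrete layers such as DataLayer override, while `BasePrefetchingDataLayer::LayerSetUp` (which "may not be overridden") additionally starts the prefetch thread once the blob shapes are known. The stripped-down sketch below shows only that call structure. The `...Sketch` classes are hypothetical, the bodies merely record the call order, and the rule "a second top blob means labels are output" is an assumption about how `output_labels_` is set.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, stripped-down versions of the three classes; they only
// record the order in which the setup methods run.
class BaseDataLayerSketch {
 public:
  virtual ~BaseDataLayerSketch() = default;
  // Common setup, then the per-layer hook (cf. BaseDataLayer::LayerSetUp).
  virtual void LayerSetUp(int num_tops) {
    output_labels_ = num_tops > 1;  // assumption: second top blob = labels
    calls.push_back("BaseDataLayer::LayerSetUp");
    DataLayerSetUp();
  }
  virtual void DataLayerSetUp() {}
  std::vector<std::string> calls;

 protected:
  bool output_labels_ = false;
};

class BasePrefetchingDataLayerSketch : public BaseDataLayerSketch {
 public:
  void LayerSetUp(int num_tops) override {
    BaseDataLayerSketch::LayerSetUp(num_tops);
    calls.push_back("CreatePrefetchThread");  // starts only after shapes are set
  }
};

class DataLayerSketch : public BasePrefetchingDataLayerSketch {
 public:
  void DataLayerSetUp() override {
    calls.push_back(output_labels_ ? "DataLayer::DataLayerSetUp (with labels)"
                                   : "DataLayer::DataLayerSetUp");
  }
};
```

Ordering matters here: the thread must not start before `DataLayerSetUp` has reshaped `prefetch_data_` and `prefetch_label_`, because `InternalThreadEntry` writes into those buffers.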

