Term weight algorithm in IR

2016-08-12 11:36

211 查看

1 TF-IDF

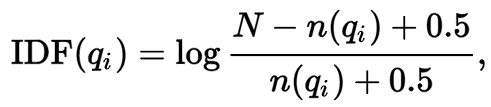

2 BM25

f是TD-IDF中的TF,|D|是文档D的长度,avgdl是语料库全部文档的平均长度。k1和b是参数。usually chosen, in absence of an advanced optimization, as k1∈[1.2,2.0] and b = 0.75 。

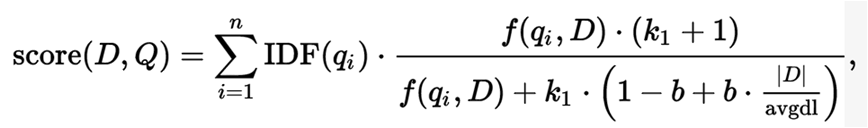

b的相关性

令: y=1-b+b*x, x表示|D|/avgdl, x与y的关系如上图。b越大,文档长度对相关性得分的影响越大,反之越小。b越大时,当文档长度大于平均长度,那么相关性得分越小;反之越大。

这可以理解为,当文档较长时,包含qi的机会越大,因此,同等fi的情况下,长文档与qi的相关性应该比短文档与qi的相关性弱。

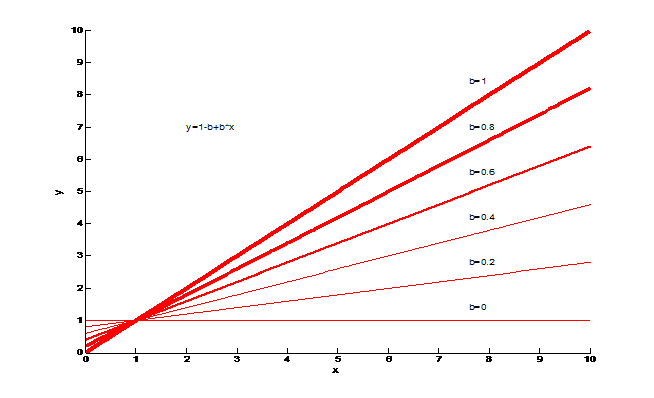

K的相关性

令: y=(tf*(k+1))./(tf+k), k与y的关系如下图。

从图表明, k对相似度的影响不大。

3 DFR(divergence form randomness)

Basic Randomness Models

The DFR models are based on this simple idea: “The more the divergence of the within-document term-frequency from its frequency within the collection, the more the information carried by the word t in the document d”. In other words the term-weight is inversely relat4000

ed to the probability of term-frequency within the document d obtained by a model M of randomness:

weight(t|d)∝−logProbM(t∈d|Collection)

(8)

where the subscript M stands for the type of model of randomness employed to compute the probability. The basic models are derived in the following table.

| Basic DFR Models | |

| D | Divergence approximation of the binomial |

| P | Approximation of the binomial |

| BE | Bose-Einstein distribution |

| G | Geometric approximation of the Bose-Einstein |

| I(n) | Inverse Document Frequency model |

| I(F) | Inverse Term Frequency model |

| I(ne) | Inverse Expected Document Frequency model |

−logProbP(t∈d|Collection)=−log(TF tf)ptfqTF−tf

where:

TF is the term-frequency of the term t in the Collection

tf is the term-frequency of the term t in the document d

N is the number of documents in the Collection

p is 1/N and q=1-p

Similarly, if the model M is the geometric distribution, then the basic model is G and computes the value:

−logProbG(t∈d|Collection)=−log((11+λ)(λ1+λ))

where λ = F/N.

相关文章推荐

- Mesh Algorithm in OpenCascade

- A Dynamic Algorithm for Local Community Detection in Graphs--阅读笔记

- PatentTips - Adaptive algorithm for selecting a virtualization algorithm in virtual machine environments

- [Erlang]Term sharing in Erlang/OTP 上篇

- Data Structures and Algorithm Analysis in c++ 第一章笔记和部分习题

- Controlling which congestion control algorithm is used in Linux

- Find Missing Term in Arithmetic Progression 等差数列缺失项

- 《Thinking In Algorithm》11.堆结构之二叉堆

- Introduction to algorithm in C++

- Implementing Apriori Algorithm in

- 《Thinking In Algorithm》02.Stacks,Queues,Linked Lists

- divide and conquer in Date Structures and Algorithm Analysis in C

- Term sharing in Erlang/OTP 下篇

- <学习笔记>Algorithm Library Design 算法库设计in c++ III(设计策略)

- 《Thinking In Algorithm》09.彻底理解递归

- 总结:Different Methods for Weight Initialization in Deep Learning

- Implementing Apriori Algorithm in R

- New ListCtrl Sorting Algorithm in XP

- dede(织梦)从5.5升级到5.6出现的“Unknown column 'weight' in 'field list'”的问题的解决办法

- Fast Algorithm To Find Unique Items in JavaScript Array