LVS Load Balancing in DR Mode with keepalived

2016-07-18 22:34


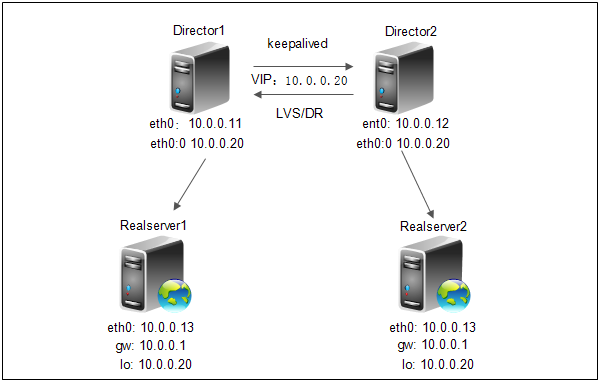

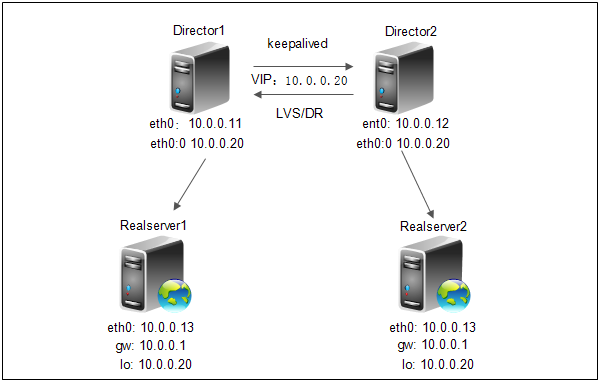

Lab topology:

Lab setup:

CentOS 6.5 x86-64

| Node | IP | Software | Role |
| --- | --- | --- | --- |
| node1 | 10.0.0.11 | ipvsadm + keepalived | Director1 |
| node2 | 10.0.0.12 | ipvsadm + keepalived | Director2 |
| node3 | 10.0.0.13 | nginx | Realserver1 |
| node4 | 10.0.0.14 | nginx | Realserver2 |

I. Install and configure ipvsadm and keepalived on both Directors

1. Install ipvsadm

# yum install ipvsadm -y
# ipvsadm -v
ipvsadm v1.26 2008/5/15 (compiled with popt and IPVS v1.2.1)

2. Install keepalived

# sh hm-keepalived-install.sh    (keepalived install script)

#!/bin/bash
# Author: hm Email: mail@huangming.org
kernel=/usr/src/kernels/$(uname -r)
yum install gcc gcc-c++ pcre-devel openssl-devel popt-devel libnl-devel libnfnetlink libnfnetlink-devel -y
[ -e keepalived-1.2.23.tar.gz ] || wget http://www.keepalived.org/software/keepalived-1.2.23.tar.gz
tar -zxf keepalived-1.2.23.tar.gz && cd keepalived-1.2.23 && ./configure --prefix=/usr/local/keepalived --sysconf=/etc --with-kernel-dir=${kernel}
make && make install
chkconfig --add keepalived && chkconfig keepalived on
ln -s /usr/local/keepalived/sbin/keepalived /usr/sbin/
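The `--with-kernel-dir` path used above exists only when the headers for the running kernel are installed; on CentOS they normally come from the kernel-devel package, which the script does not install. A minimal pre-flight sketch follows, where `check_kernel_dir` is a hypothetical helper name and not part of keepalived:

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the kernel header directory exists.
#   $1 = directory to check
check_kernel_dir() {
    dir=$1
    if [ -d "$dir" ]; then
        echo "kernel dir found: $dir"
        return 0
    fi
    # Assumption: the matching headers are packaged as kernel-devel-<release>.
    echo "kernel dir missing: $dir (try: yum install -y kernel-devel-$(uname -r))" >&2
    return 1
}

# Typical use before ./configure:
#   check_kernel_dir "/usr/src/kernels/$(uname -r)" || exit 1
```

Running the check first gives a clear message up front instead of a failure somewhere in the middle of the build.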

III. Install and configure nginx on both Realservers

# sh hm-nginx-install.sh    (nginx install script; place the nginx source tarball in /usr/local/src, then run it)

#!/bin/bash
# Author: hm Email: mail@huangming.org

nginx_s=/usr/local/src/nginx-1.8.1.tar.gz
nginx_v=nginx-1.8.1

# Install build dependencies that are not present yet
for p in gcc gcc-c++ zlib pcre pcre-devel openssl openssl-devel
do
    if ! rpm -qa | grep -q "^$p";then
        yum install -y $p
    fi
done

if [ ! -d /etc/nginx -o ! -e /usr/sbin/nginx ];then
    useradd -s /sbin/nologin nginx -M
    cd /usr/local/src && tar -zxf ${nginx_s} -C . && cd $nginx_v &&
    ./configure \
        --user=nginx \
        --group=nginx \
        --prefix=/usr/local/nginx \
        --sbin-path=/usr/sbin/nginx \
        --conf-path=/etc/nginx/nginx.conf \
        --pid-path=/var/run/nginx.pid \
        --lock-path=/var/lock/subsys/nginx \
        --with-http_stub_status_module \
        --with-http_ssl_module \
        --with-http_gzip_static_module \
        --http-log-path=/var/log/nginx/access.log \
        --error-log-path=/var/log/nginx/error.log \
        --http-client-body-temp-path=/tmp/nginx/client_body \
        --http-proxy-temp-path=/tmp/nginx/proxy \
        --http-fastcgi-temp-path=/tmp/nginx/fastcgi \
        --with-http_degradation_module \
        --with-http_realip_module \
        --with-http_addition_module \
        --with-http_sub_module \
        --with-pcre
    make && make install
    chown -R nginx:nginx /usr/local/nginx && mkdir /tmp/nginx/client_body -p && mkdir /etc/nginx/vhosts

    # nginx.conf
    cat > /etc/nginx/nginx.conf << EOF
user nginx nginx;
worker_processes $(cat /proc/cpuinfo | grep 'processor' | wc -l);
error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;
worker_rlimit_nofile 65535;
events
{
    use epoll;
    worker_connections 65535;
}
http
{
    include mime.types;
    default_type application/octet-stream;
    server_names_hash_bucket_size 2048;
    server_names_hash_max_size 2048;
    log_format main '\$remote_addr - \$remote_user [\$time_local] "\$request" '
    '\$status \$body_bytes_sent "\$http_referer" "\$request_time"'
    '"\$http_user_agent" \$HTTP_X_Forwarded_For';
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 60;
    client_header_timeout 3m;
    client_body_timeout 3m;
    client_max_body_size 10m;
    client_body_buffer_size 256k;
    send_timeout 3m;
    connection_pool_size 256;
    client_header_buffer_size 32k;
    large_client_header_buffers 4 64k;
    request_pool_size 4k;
    output_buffers 4 32k;
    postpone_output 1460;
    client_body_temp_path /tmp/nginx/client_body;
    proxy_temp_path /tmp/nginx/proxy;
    fastcgi_temp_path /tmp/nginx/fastcgi;
    gzip on;
    gzip_min_length 1k;
    gzip_buffers 4 16k;
    gzip_comp_level 3;
    gzip_http_version 1.1;
    gzip_types text/plain application/x-javascript text/css text/htm application/xml;
    gzip_vary on;
    fastcgi_connect_timeout 300;
    fastcgi_send_timeout 300;
    fastcgi_read_timeout 300;
    fastcgi_buffer_size 64k;
    fastcgi_buffers 4 64k;
    fastcgi_busy_buffers_size 128k;
    fastcgi_temp_file_write_size 128k;
    fastcgi_intercept_errors on;
    include vhosts/*.conf;
}
EOF

    # nginx_vhosts.conf
    cat > /etc/nginx/vhosts/www.conf << EOF
server {
    listen 80;
    server_name $(ifconfig eth0 | awk -F"[ :]+" '/inet addr/{print $4}');
    index index.html index.htm index.php index.jsp;
    charset UTF8;
    root /data/www/html;
    access_log /var/log/nginx/access.log main;
}
EOF

    # The test page contains this node's own IP address
    mkdir /data/www/html -p && echo $(ifconfig eth0 | awk -F"[ :]+" '/inet addr/{print $4}') > /data/www/html/index.html
    /usr/sbin/nginx -t && /usr/sbin/nginx
    echo
    echo "--------------------------------"
    echo "Install ${nginx_v} success"
    echo "Test http://$(ifconfig eth0 | awk -F"[ :]+" '/inet addr/{print $4}')"
else
    echo "Already installed ${nginx_v}"
fi

IV. Configure keepalived on both Directors

1. Configure keepalived

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    notification_email {
        mail@huangming.org
        741616710@qq.com
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_HA
}

vrrp_instance LVS_HA {
    state BACKUP            # both the master and the backup start as BACKUP
    interface eth0          # network interface keepalived (VRRP) runs on
    virtual_router_id 81    # router ID; must be identical on master and backup
    priority 100            # 90 on the backup
    advert_int 2            # VRRP advertisement interval between master and backup, in seconds
    nopreempt               # non-preemptive mode; set on the master only
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.20/24 dev eth0    # VIP; several may be listed, one per line
    }
}

virtual_server 10.0.0.20 80 {    # (LVS configuration)
    delay_loop 5                 # (check Realserver health every 5 seconds)
    lb_algo wlc                  # (LVS scheduling algorithm)
    lb_kind DR                   # (LVS forwarding mode)
    persistence_timeout 0        # (session persistence timeout in seconds; the original note says 0 means persist permanently, verify against your keepalived version)
    persistence_granularity 255.255.255.255    # (persistence granularity netmask; the default 255.255.255.255 maps each individual client IP to one Realserver)
    protocol TCP                 # (forwarded protocol, TCP or UDP)
    sorry_server 10.0.0.11 8080  # (fallback server, used once all Realservers have failed)
    real_server 10.0.0.13 80 {   # Realserver
        weight 2                 # (scheduling weight)
        HTTP_GET {               # (health check method)
            url {                # (URL to check; several may be given)
                path /index.html # (URL path)
                status_code 200
            }
            connect_port 80      # (port to check)
            #bindto 10.0.0.20    # (source address used to send the health-check requests)
            connect_timeout 3    # (timeout when there is no response)
            nb_get_retry 3       # (number of retries after a failure)
            delay_before_retry 1 # (delay between retries)
        }
    }
    real_server 10.0.0.14 80 {
        weight 2                 # (scheduling weight)
        HTTP_GET {               # (health check method)
            url {                # (URL to check; several may be given)
                path /index.html # (URL path)
                status_code 200
            }
            connect_port 80      # (port to check)
            #bindto 10.0.0.20    # (source address used to send the health-check requests)
            connect_timeout 3    # (timeout when there is no response)
            nb_get_retry 3       # (number of retries after a failure)
            delay_before_retry 1 # (delay between retries)
        }
    }
}

2. Start the keepalived service on each Director

[root@node1 ~]# service keepalived start
[root@node2 ~]# service keepalived start
[root@node1 ~]# ps aux | grep keepalived
root 4008 0.0 0.1 44928 1080 ? Ss 19:01 0:00 keepalived -D
root 4009 0.1 0.2 47152 2332 ? S 19:01 0:00 keepalived -D
root 4010 0.1 0.1 47032 1608 ? S 19:01 0:00 keepalived -D
root 4018 0.0 0.0 103256 864 pts/0 S+ 19:03 0:00 grep keepalived

3. Check LVS status

# On the master
[root@node1 ~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.0.0.20:80 wlc persistent 0
  -> 10.0.0.13:80 Route 2 0 0
  -> 10.0.0.14:80 Route 2 0 0

# On the backup
[root@node2 ~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.0.0.20:80 wlc persistent 0
  -> 10.0.0.13:80 Route 2 0 0
  -> 10.0.0.14:80 Route 2 0 0
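Both Directors carry the same virtual_server section. According to the comments in keepalived.conf, only `priority` (90 on the backup) and `nopreempt` (master only) differ between the two files. A minimal sketch for deriving the backup's file from the master's follows; `make_backup_conf` and the output file name are hypothetical, and the result should be reviewed before use:

```shell
#!/bin/bash
# Hypothetical helper: read the master's keepalived.conf on stdin and
# write a backup variant to stdout.
#   $1 = priority for the backup (90 in this article)
make_backup_conf() {
    prio=${1:-90}
    # Rewrite the number after "priority" and drop the "nopreempt" line.
    sed -e "s/^\([[:space:]]*priority[[:space:]][[:space:]]*\)[0-9][0-9]*/\1${prio}/" \
        -e '/^[[:space:]]*nopreempt/d'
}

# Typical use on node1, then copy the result to node2:
#   make_backup_conf 90 < /etc/keepalived/keepalived.conf > keepalived.conf.backup
```

Generating the second file from the first keeps the virtual_router_id, the VIP and the Realserver list identical on both Directors.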

4. Check the VIP (by default the VIP is assigned to the Director with the higher priority)

[root@node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:5a:9d:e0 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.11/24 brd 10.0.0.255 scope global eth0
    inet 10.0.0.20/24 scope global secondary eth0
    inet6 fe80::20c:29ff:fe5a:9de0/64 scope link
       valid_lft forever preferred_lft forever
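Reading the `ip addr` output by eye works for two nodes. For scripted checks, a small filter can answer whether a node currently holds the VIP. A minimal sketch, with `has_vip` as a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: read `ip addr` output on stdin and report (via the
# exit status) whether the given VIP is configured.
#   $1 = VIP to look for
has_vip() {
    # Escape the dots so they match literally in the grep pattern.
    vip_re=$(printf '%s' "$1" | sed 's/\./\\./g')
    grep -q "inet ${vip_re}/"
}

# Typical use on a Director:
#   ip addr show eth0 | has_vip 10.0.0.20 && echo "this node holds the VIP"
```

The trailing `/` in the pattern keeps an address such as 10.0.0.200 from matching by accident.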

V. On both Realservers, configure the VIP on the loopback interface and suppress ARP responses for it

# Check the system's default ARP settings
[root@node3 ~]# cat /proc/sys/net/ipv4/conf/lo/arp_ignore
0
[root@node3 ~]# cat /proc/sys/net/ipv4/conf/lo/arp_announce
0
[root@node3 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore
0
[root@node3 ~]# cat /proc/sys/net/ipv4/conf/all/arp_announce
0

Run the following script on both Realservers:

# lvs_dr_rs.sh
#!/bin/bash
vip=10.0.0.20
ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up
route add -host $vip lo:0
echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore
echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce
echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore
echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

[root@node3 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet 10.0.0.20/32 brd 10.0.0.20 scope global lo:0
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:c5:b4:19 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.13/24 brd 10.0.0.255 scope global eth0
    inet6 fe80::20c:29ff:fec5:b419/64 scope link
       valid_lft forever preferred_lft forever

[root@node4 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet 10.0.0.20/32 brd 10.0.0.20 scope global lo:0
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:42:34:4f brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.14/24 brd 10.0.0.255 scope global eth0
    inet6 fe80::20c:29ff:fe42:344f/64 scope link
       valid_lft forever preferred_lft forever
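The two sysctls are what keep the Realservers from answering ARP for the VIP. With `arp_ignore=1` a host replies only when the requested address is configured on the interface the request arrived on. With `arp_announce=2` it always picks the best local address for the target as the ARP source, so the VIP is not announced from eth0. A minimal sketch for confirming that all four values are in place follows; `check_arp_settings` is a hypothetical helper, and the directory argument exists only so the check can be dry-run against a copy:

```shell
#!/bin/bash
# Hypothetical helper: verify the four ARP sysctls set by lvs_dr_rs.sh.
#   $1 = directory holding the per-interface settings
#        (defaults to /proc/sys/net/ipv4/conf)
check_arp_settings() {
    root=${1:-/proc/sys/net/ipv4/conf}
    rc=0
    for iface in lo all; do
        for pair in arp_ignore:1 arp_announce:2; do
            key=${pair%%:*}     # sysctl name
            want=${pair##*:}    # value expected for LVS DR mode
            got=$(cat "$root/$iface/$key" 2>/dev/null)
            if [ "$got" != "$want" ]; then
                echo "$iface/$key is '${got}', expected ${want}"
                rc=1
            fi
        done
    done
    return $rc
}

# Typical use on a Realserver:
#   check_arp_settings && echo "ARP settings OK"
```

Values written to /proc do not survive a reboot, so a check like this is most useful right after boot.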

VI. Test LVS load balancing

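# The VIP is requested from node3 and node4 here. keepalived is configured with the wlc (weighted least-connection) scheduler and a weight of 2 for each Realserver.
# Note: node3 and node4 hold the VIP on lo:0, so these requests are answered by the local nginx and never pass through the Director; each node therefore sees only its own address. Requests from a separate client are needed to exercise the scheduler.
[root@node3 ~]# curl http://10.0.0.20
10.0.0.13
[root@node3 ~]# curl http://10.0.0.20
10.0.0.13
[root@node4 ~]# curl http://10.0.0.20
10.0.0.14
[root@node4 ~]# curl http://10.0.0.20
10.0.0.14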
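To see how requests are actually spread, it helps to send a batch of them and count the answers. Each Realserver's index.html contains its own IP, so the response body identifies the backend. The sketch below is meant for a client host that does not hold the VIP itself. Such a host is an assumption, since the article lists only four nodes, and `probe_vip` and `tally_backends` are hypothetical helper names:

```shell
#!/bin/bash
# Hypothetical helper: count how often each backend answered.
# Reads one response body per line on stdin, prints "<backend> <count>".
tally_backends() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Hypothetical helper: request the VIP a number of times.
#   $1 = VIP, $2 = number of requests
probe_vip() {
    i=0
    while [ "$i" -lt "$2" ]; do
        curl -s "http://$1/"
        i=$((i + 1))
    done
}

# Typical use on the client machine:
#   probe_vip 10.0.0.20 20 | tally_backends
```

With equal weights, the counts for 10.0.0.13 and 10.0.0.14 would be expected to come out roughly even, unless persistence pins the client to one Realserver.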

VII. Test failure of the master Director and check that failover works (the VIP moves to the backup)

# Stop the keepalived service on the master Director
[root@node1 ~]# service keepalived stop
Stopping keepalived: [ OK ]

# Check the IP addresses on the BACKUP
[root@node2 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:42:43:20 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.12/24 brd 10.0.0.255 scope global eth0
    inet 10.0.0.20/24 scope global secondary eth0
    inet6 fe80::20c:29ff:fe42:4320/64 scope link
       valid_lft forever preferred_lft forever

# Test whether the web service is still reachable through the VIP
# (node3 and node4 hold the VIP on lo:0 and answer these requests locally; a separate client gives a more meaningful check)
[root@node3 ~]# curl http://10.0.0.20
10.0.0.13
[root@node3 ~]# curl http://10.0.0.20
10.0.0.13
[root@node4 ~]# curl http://10.0.0.20
10.0.0.14
[root@node4 ~]# curl http://10.0.0.20
10.0.0.14
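How quickly the backup notices a dead master depends on the VRRP timers. Assuming keepalived follows the VRRPv2 rule from RFC 3768 (Master_Down_Interval = 3 × Advertisement_Interval + Skew_Time, with Skew_Time = (256 − Priority) / 256), the values in this setup (advert_int 2, backup priority 90) give a little under 7 seconds without advertisements before the backup takes over. A sketch of the arithmetic, with `master_down_interval` as a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: VRRPv2 Master_Down_Interval in seconds.
#   $1 = advert_int (seconds), $2 = priority of the backup router
master_down_interval() {
    awk -v adv="$1" -v prio="$2" \
        'BEGIN { printf "%.3f\n", 3 * adv + (256 - prio) / 256 }'
}

master_down_interval 2 90    # prints 6.648
```

Lowering advert_int shortens this interval at the cost of more frequent advertisements.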

VIII. Set up a DR-mode service script on the Realservers

# cat lvs-dr-rs.service
#!/bin/bash
. /etc/rc.d/init.d/functions
vip=10.0.0.20
RETVAL=0
case "$1" in
    start)
        echo "Start realserver"
        # Bind the VIP to the loopback alias and route it there
        ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up
        route add -host $vip dev lo:0
        # Stop this host from answering or announcing ARP for the VIP
        echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        echo "Stop realserver"
        ifconfig lo:0 down
        # Restore the default ARP behaviour
        echo "0" > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" > /proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        # Quoted so the shell does not treat "|" as a pipe
        echo "Usage: $0 {start|stop}"
        exit 1
        ;;
esac
exit $RETVAL
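To have the script start at boot through chkconfig, as the install script does for keepalived, the file needs the comment header chkconfig looks for: a `# chkconfig: <runlevels> <start> <stop>` line and a `# description:` line. The script as shown has neither. A minimal sketch for checking a file before registering it follows; `has_chkconfig_header` and the path /etc/init.d/lvs-dr-rs are hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: check that an init script carries the two comment
# lines chkconfig looks for.
#   $1 = path to the init script
has_chkconfig_header() {
    grep -q '^#[[:space:]]*chkconfig:' "$1" &&
        grep -q '^#[[:space:]]*description:' "$1"
}

# Typical use after copying the script into place (path is an assumption):
#   has_chkconfig_header /etc/init.d/lvs-dr-rs && chkconfig --add lvs-dr-rs
```

A header such as `# chkconfig: 2345 90 10` would be one possibility. The run levels and priorities there are illustrative and not taken from the article.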
