OpenStack Mitaka Quasi-Production Deployment: Preliminary Configuration

2016-05-24 16:06


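The Mitaka release came out only recently and still has many problems, so you will run into quite a few issues while installing and using it. My abilities are limited, and I hope to work through these problems gradually over time.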

本系列文章主要描述OpenStack的Matika版在物理机上安装的过程。在部署OpenStack过程中自己也在尝试使用编写脚本进行部署,有很多不足之处,望见谅!

在M版中,Controller节点和Neutron节点合并在Controller节点,根据现实需求单独划分节点安装Neutron没有必要性,所以将网络节点和控制器节点都安装配置在Controller节点。在前面安装部署Juno版中,没有使用到对象存储,所以在此也没有安装对象存储节点。

Controller: 2个 内存 32G*2 存储 300G*4 网卡端口 4个 CPU 2个(24核)

Compute: 12个 内存 128G*4 64G*8 存储 900G 网卡端口 2个CPU 2个(24核)

Block Storage: 2个 内存 32G*2 存储 1.2T*2 网卡端口 2个 CPU 2个(24核)

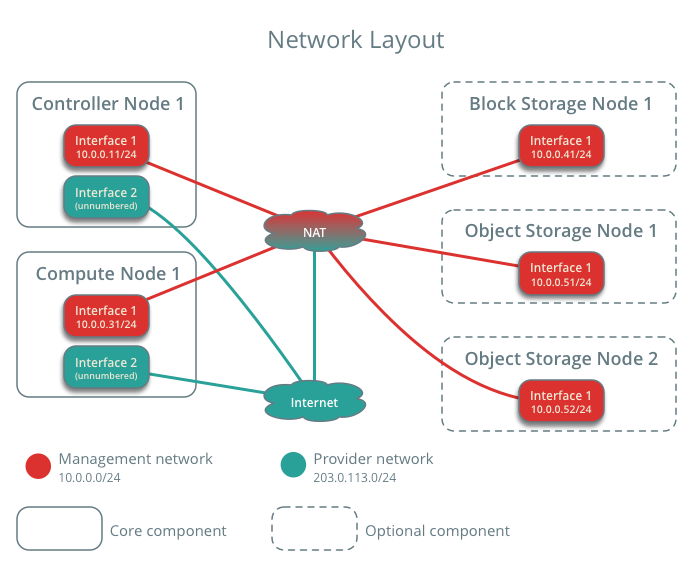

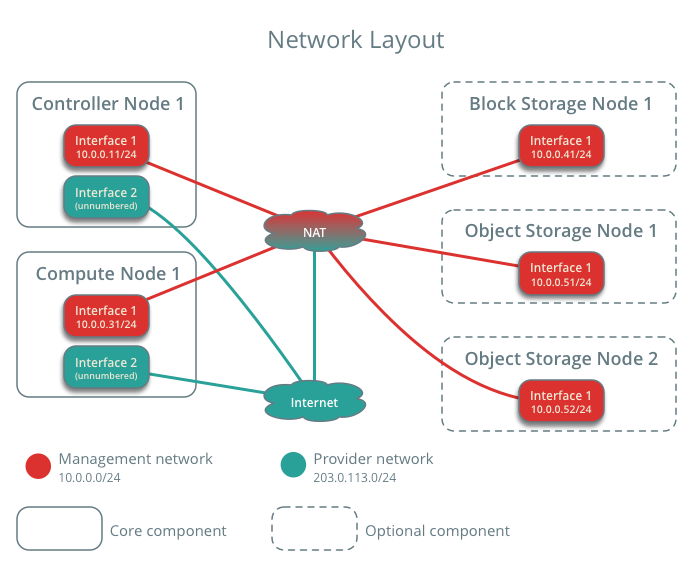

网络模式选择Self-Service Networks,主机网络层逻辑图:

在各个节点上需要安装的组件和服务:

启动数据库

设置数据库root用户密码

启动数据库

验证:

主要查看

然后执行:

启动RabbitMQ

创建一个openstack用户:

在Controller所有节点:

在Controller1节点

建立授权

配置集群

启动集群服务

使集群可用

显示集群状态

配置虚拟IP

文章引用:

1..http://docs.openstack.org/ha-guide/index.html

2.http://docs.openstack.org/mitaka/install-guide-rdo/index.html

本系列文章主要描述OpenStack的Matika版在物理机上安装的过程。在部署OpenStack过程中自己也在尝试使用编写脚本进行部署,有很多不足之处,望见谅!

在M版中,Controller节点和Neutron节点合并在Controller节点,根据现实需求单独划分节点安装Neutron没有必要性,所以将网络节点和控制器节点都安装配置在Controller节点。在前面安装部署Juno版中,没有使用到对象存储,所以在此也没有安装对象存储节点。

基本硬件配置:

管理监控节点: 1个 内存 8G 存储 600G 网卡端口 2个Controller: 2个 内存 32G*2 存储 300G*4 网卡端口 4个 CPU 2个(24核)

Compute: 12个 内存 128G*4 64G*8 存储 900G 网卡端口 2个CPU 2个(24核)

Block Storage: 2个 内存 32G*2 存储 1.2T*2 网卡端口 2个 CPU 2个(24核)

网络模式选择Self-Service Networks,主机网络层逻辑图:

在各个节点上需要安装的组件和服务:

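Disable the firewall and SELinux

systemctl stop firewalld.service
systemctl disable firewalld.service
sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config
setenforce 0

setenforce 0 switches SELinux to permissive mode immediately; the change in /etc/selinux/config takes effect at the next reboot.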

Configure /etc/hosts

For convenience, run the following on every node (10.0.0.10, named controller, is the virtual IP configured at the end of this article):

cat >> /etc/hosts << OFF
10.0.0.10 controller
10.0.0.11 controller1
10.0.0.12 controller2
10.0.0.31 compute01
10.0.0.32 compute02
10.0.0.33 compute03
10.0.0.34 compute04
10.0.0.35 compute05
10.0.0.36 compute06
10.0.0.37 compute07
10.0.0.38 compute08
10.0.0.39 compute09
10.0.0.40 compute10
10.0.0.41 compute11
10.0.0.42 compute12
10.0.0.51 cinder1
10.0.0.52 cinder2
OFF
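The host list follows a simple numbering scheme (compute01 to compute12 on 10.0.0.31 to 10.0.0.42, cinder1 and cinder2 on 10.0.0.51 and 10.0.0.52). As a minimal sketch, the same entries can be generated with loops and appended only when a hostname is missing, so running it twice does not duplicate lines the way a repeated cat >> would. The function names gen_hosts and add_hosts are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the /etc/hosts entries listed above.

# Print one "IP hostname" line for every node in this deployment.
gen_hosts() {
  local i
  echo "10.0.0.10 controller"   # virtual IP managed by Pacemaker
  echo "10.0.0.11 controller1"
  echo "10.0.0.12 controller2"
  i=1
  while [ "$i" -le 12 ]; do
    # compute01..compute12 -> 10.0.0.31..10.0.0.42
    printf '10.0.0.%d compute%02d\n' $((30 + i)) "$i"
    i=$((i + 1))
  done
  for i in 1 2; do
    # cinder1, cinder2 -> 10.0.0.51, 10.0.0.52
    printf '10.0.0.%d cinder%d\n' $((50 + i)) "$i"
  done
}

# Append each entry to the hosts file (default /etc/hosts) unless that
# hostname already appears as a name field on some line, so re-runs are safe.
add_hosts() {
  local hosts_file=${1:-/etc/hosts}
  gen_hosts | while read -r ip name; do
    if ! awk -v n="$name" '
        { for (f = 2; f <= NF; f++) if ($f == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}
```

Preview the entries with gen_hosts, then run add_hosts as root on each node.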

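Configure NTP

CentOS 7 has moved away from ntp and uses chrony as its default NTP service. Here chronyd is disabled and ntpd is used instead, with the local clock (127.127.1.0) as the time source; the other default servers are removed (in the stock /etc/ntp.conf, lines 22 to 24 hold pool servers 1 to 3):

yum install -y ntp
sed -i "s/server 0.centos.pool.ntp.org iburst/server 127.127.1.0 iburst/" /etc/ntp.conf
sed -i "22,24d" /etc/ntp.conf
systemctl disable chronyd.service
systemctl stop chronyd.service
systemctl enable ntpd.service
systemctl start ntpd.service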
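Deleting by line number silently removes the wrong lines if /etc/ntp.conf has already been edited. A minimal sketch of a pattern-based alternative, assuming the default servers are written exactly as server N.centos.pool.ntp.org iburst: it rewrites server 0 to the local clock and deletes servers 1 to 3 wherever they are. The function name use_local_clock is hypothetical, and sed -i here is GNU sed, as shipped with CentOS 7.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make the local clock (127.127.1.0) the only NTP server.
use_local_clock() {
  local conf=${1:-/etc/ntp.conf}
  # First expression: point the first pool server at the local clock driver.
  # Second expression: drop pool servers 1-3 by pattern, not by line number.
  sed -i \
    -e 's/^server 0\.centos\.pool\.ntp\.org iburst$/server 127.127.1.0 iburst/' \
    -e '/^server [1-3]\.centos\.pool\.ntp\.org iburst$/d' \
    "$conf"
}
```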

Configure the OpenStack package repository

On all controller nodes:

yum install -y https://rdoproject.org/repos/rdo-release.rpm
yum upgrade -y
yum install -y python-openstackclient
yum install -y openstack-selinux

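Install MariaDB Galera

Many OpenStack services use an SQL database to store information. The database usually runs on the controller nodes or on dedicated database nodes. For database high availability, this installation uses a MariaDB Galera cluster, which handles replication between the database servers. Galera Cluster is a synchronous multi-master database cluster based on MySQL and the InnoDB storage engine.

On all controller nodes, configure the MariaDB Galera package repository by editing /etc/yum.repos.d/mariadb.repo:

[mariadb]
name = MariaDB
baseurl = http://yum.mariadb.org/10.0/centos7-amd64
gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB
gpgcheck=1
enabled=0

With enabled=0 the repository stays off by default and is enabled only for the install command via --enablerepo.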

Install the database:

yum --enablerepo=mariadb -y install MariaDB-Galera-server

On Controller1

Create /etc/my.cnf.d/openstack.cnf with empty [mysqld] and [galera] section headers, then append the settings under each header (each sed "a" command inserts its line directly after the matching header):

printf '[mysqld]\n[galera]\n' > /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a character-set-server = utf8" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a init-connect = 'SET NAMES utf8'" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a collation-server = utf8_general_ci" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a innodb_file_per_table" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a default-storage-engine = innodb" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a bind-address = 0.0.0.0" /etc/my.cnf.d/openstack.cnf
sed -i "/\[mysqld\]$/a max_connections = 10000" /etc/my.cnf.d/openstack.cnf
sed -i "/\[galera\]$/a wsrep_provider = /usr/lib64/galera/libgalera_smm.so" /etc/my.cnf.d/openstack.cnf
sed -i "/\[galera\]$/a wsrep_cluster_address = \"gcomm://10.0.0.11,10.0.0.12\"" /etc/my.cnf.d/openstack.cnf
sed -i "/\[galera\]$/a wsrep_cluster_name = \"MariaDB_Cluster\"" /etc/my.cnf.d/openstack.cnf
sed -i "/\[galera\]$/a wsrep_node_address = \"10.0.0.11\"" /etc/my.cnf.d/openstack.cnf
sed -i "/\[galera\]$/a wsrep_sst_method = rsync" /etc/my.cnf.d/openstack.cnf
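Because every sed "a" command above inserts its text directly below the section header, the settings end up in reverse order. MariaDB does not care about option order within a section, but the file is easier to read when written in one step. A minimal sketch using a here-document, where the node address is the only per-node parameter (10.0.0.11 on Controller1, 10.0.0.12 on Controller2); the function name write_galera_cnf is hypothetical and the settings are the ones listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Galera settings for one controller.
# $1 = this node's address, $2 = output file (default /etc/my.cnf.d/openstack.cnf)
write_galera_cnf() {
  local node_ip=$1
  local out=${2:-/etc/my.cnf.d/openstack.cnf}
  # Unquoted EOF so that ${node_ip} is expanded; nothing else needs expansion.
  cat > "$out" <<EOF
[mysqld]
max_connections = 10000
bind-address = 0.0.0.0
default-storage-engine = innodb
innodb_file_per_table
collation-server = utf8_general_ci
init-connect = 'SET NAMES utf8'
character-set-server = utf8

[galera]
wsrep_provider = /usr/lib64/galera/libgalera_smm.so
wsrep_cluster_address = "gcomm://10.0.0.11,10.0.0.12"
wsrep_cluster_name = "MariaDB_Cluster"
wsrep_node_address = "${node_ip}"
wsrep_sst_method = rsync
EOF
}
```

For example, write_galera_cnf 10.0.0.11 on Controller1 and write_galera_cnf 10.0.0.12 on Controller2.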

Start the database (bootstrap starts this first node as a new cluster)

/etc/rc.d/init.d/mysql bootstrap

Set the password of the database root user

mysqladmin -u root password "SWPUcs406mariadb"

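On Controller2

Create /etc/my.cnf.d/openstack.cnf with the same content as on Controller1, changing only wsrep_node_address = "10.0.0.12". Then start the database, which joins the existing cluster through wsrep_cluster_address:

systemctl start mysql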

Verify:

mysql -u root -pSWPUcs406mariadb
MariaDB [(none)]> show status like 'wsrep_%';

The key values to check:

| Variable_name | Value |
|---|---|
| wsrep_local_state_comment | Synced |
| wsrep_cluster_size | 2 |
| wsrep_incoming_addresses | 10.0.0.11:3306,10.0.0.12:3306 |
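The same check can be scripted. A minimal sketch, assuming the status is fetched in batch mode with mysql -N -B -e "SHOW STATUS LIKE 'wsrep_%'", which prints one tab-separated name/value pair per line; check_wsrep (a hypothetical name) succeeds only when the node reports Synced and the cluster size equals the expected number of nodes.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "name<TAB>value" lines on stdin and verify that
# this node is Synced and the cluster has the expected number of members.
check_wsrep() {
  local expected=${1:-2}
  awk -F'\t' -v want="$expected" '
    $1 == "wsrep_local_state_comment" { state = tolower($2) }
    $1 == "wsrep_cluster_size"        { size = $2 }
    END {
      if (state == "synced" && size == want) {
        print "OK: synced, cluster size " size
        exit 0
      }
      print "NOT READY: state=" state ", cluster size=" size
      exit 1
    }'
}
```

Usage: mysql -u root -p -N -B -e "SHOW STATUS LIKE 'wsrep_%'" | check_wsrep 2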

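Install RabbitMQ

OpenStack uses a message queue to coordinate operations and status information among services. The message queue service usually runs on the controller nodes or on a dedicated node. OpenStack supports several message queue services, including RabbitMQ, Qpid, and ZeroMQ. On all controller nodes run:

yum install -y rabbitmq-server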

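On Controller1

Start the service once so that it generates the Erlang cookie (/var/lib/rabbitmq/.erlang.cookie), then stop it:

systemctl enable rabbitmq-server.service
systemctl start rabbitmq-server.service
systemctl stop rabbitmq-server.service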

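Then copy the cookie to Controller2 and make sure it is owned by rabbitmq and readable only by its owner:

scp /var/lib/rabbitmq/.erlang.cookie controller2:/var/lib/rabbitmq/.erlang.cookie
chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie
chmod 400 /var/lib/rabbitmq/.erlang.cookie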

Start RabbitMQ

systemctl start rabbitmq-server.service
rabbitmqctl cluster_status

On Controller2 (--ram joins Controller2 as a RAM node, while Controller1 remains a disc node)

chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie
chmod 400 /var/lib/rabbitmq/.erlang.cookie
systemctl enable rabbitmq-server.service
systemctl start rabbitmq-server.service
rabbitmqctl stop_app
rabbitmqctl join_cluster --ram rabbit@controller1
rabbitmqctl start_app
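After Controller2 joins, rabbitmqctl cluster_status should list both nodes under running_nodes. A minimal sketch that extracts that list, assuming the Erlang-term output printed by the RabbitMQ 3.x releases of that period (for example {running_nodes,[rabbit@controller2,rabbit@controller1]}); later releases (3.8 onward) print a human-readable layout instead, so the parser would need adjusting there. The function name running_nodes is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical parser: print one node name per line from the running_nodes
# entry of `rabbitmqctl cluster_status` output read on stdin.
running_nodes() {
  # Remove spaces and newlines so a wrapped Erlang term becomes one line,
  # then cut out the bracketed running_nodes list and split it on commas.
  tr -d ' \n' |
    grep -o 'running_nodes,\[[^]]*]' |
    sed -e 's/^running_nodes,\[//' -e 's/]$//' |
    tr ',' '\n'
}
```

Usage, on either controller: rabbitmqctl cluster_status | running_nodes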

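On Controller1

Mirror every queue whose name does not begin with amq. to all nodes in the cluster:

rabbitmqctl set_policy ha-all '^(?!amq\.).*' '{"ha-mode": "all"}'

Create an openstack user and grant it configure, write, and read permissions on all resources:

rabbitmqctl add_user openstack SWPUcs406rabbit
rabbitmqctl set_permissions openstack ".*" ".*" ".*"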
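The policy pattern ^(?!amq\.).* uses a negative lookahead: it matches every name that does not begin with amq., the prefix RabbitMQ reserves for internal and server-named objects, and "ha-mode": "all" mirrors each matching queue to all cluster nodes. The sketch below mimics only the matching rule with a shell case pattern, for illustration; is_mirrored is a hypothetical name, and RabbitMQ itself evaluates the policy as a regular expression.

```shell
#!/usr/bin/env bash
# Hypothetical illustration of the ha-all policy pattern '^(?!amq\.).*':
# succeed ("mirrored") for any queue name that does not start with "amq.".
is_mirrored() {
  case "$1" in
    amq.*) return 1 ;;   # excluded by the negative lookahead
    *)     return 0 ;;   # everything else matches and is mirrored
  esac
}
```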

Install Memcached

On all controller nodes:

yum install -y memcached python-memcached
systemctl enable memcached.service
systemctl start memcached.service

Install Pacemaker + Corosync

+----------------------+          |          +----------------------+
|      [ Node01 ]      |10.0.0.11 | 10.0.0.12|      [ Node02 ]      |
|     controller1      +----------+----------+     controller2      |
|                      |                     |                      |
+----------------------+                     +----------------------+

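On all controller nodes:

yum -y install pacemaker pcs
systemctl start pcsd.service
systemctl enable pcsd.service
passwd hacluster

Give the hacluster user the same password on every node; pcs cluster auth authenticates all nodes with it.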

On Controller1

Authenticate the nodes

pcs cluster auth controller1 controller2
Username: hacluster
Password:
controller1: Authorized
controller2: Authorized

Set up the cluster

pcs cluster setup --name ha_cluster controller1 controller2
Shutting down pacemaker/corosync services...
Redirecting to /bin/systemctl stop pacemaker.service
Redirecting to /bin/systemctl stop corosync.service
Killing any remaining services...
Removing all cluster configuration files...
controller1: Succeeded
controller2: Succeeded

Start the cluster services

pcs cluster start --all
controller1: Starting Cluster...
controller2: Starting Cluster...

Enable the cluster services to start at boot

pcs cluster enable --all
controller1: Cluster Enabled
controller2: Cluster Enabled

Show the cluster status

pcs status cluster
Cluster Status:
 Last updated: Tue May 24 16:02:13 2016
 Last change: Mon May 23 10:29:50 2016 by hacluster via crmd on controller2
 Stack: corosync
 Current DC: controller1 (version 1.1.13-10.el7_2.2-44eb2dd) - partition with quorum
 2 nodes and 0 resources configured
 Online: [ controller1 controller2 ]
PCSD Status:
  controller1: Online
  controller2: Online
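When the deployment is scripted, the Online line of this output is the quickest thing to check. A minimal sketch, assuming the pcs status cluster layout shown above; nodes_online is a hypothetical name that succeeds only if every node given as an argument appears inside Online: [ ... ].

```shell
#!/usr/bin/env bash
# Hypothetical check: read `pcs status cluster` output on stdin and succeed
# only if every node named in the arguments is inside "Online: [ ... ]".
nodes_online() {
  local line node
  # First "Online: [ ... ]" line; an empty string if there is none.
  line=$(grep -m1 '^ *Online: \[' || true)
  for node in "$@"; do
    # Pad with spaces so "controller1" cannot match "controller10".
    case " $line " in
      *" $node "*) ;;
      *) echo "not online: $node"; return 1 ;;
    esac
  done
  echo "all online: $*"
}
```

Usage: pcs status cluster | nodes_online controller1 controller2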

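Configure the virtual IP

Create an IPaddr2 resource for the VIP 10.0.0.10 (the address that the hostname controller resolves to in /etc/hosts), monitored every 30 seconds:

pcs resource create OpenStack-VIP ocf:heartbeat:IPaddr2 params ip="10.0.0.10" cidr_netmask="24" op monitor interval="30s"

Pacemaker does not start any resource while STONITH is enabled (the default) and no fencing device is configured. For a test setup it can be turned off with pcs property set stonith-enabled=false; a production cluster should configure fencing instead.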
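To see which controller currently holds the VIP, the address list of each controller can be checked. A minimal sketch, assuming the one-line-per-address output of ip -o -4 addr show on stdin; has_vip is a hypothetical name, and the address is matched as a fixed string followed by a slash so that 10.0.0.10 does not also match 10.0.0.100.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if the given IPv4 address appears in
# `ip -o -4 addr show` output read on stdin.
has_vip() {
  local vip=${1:-10.0.0.10}
  # -F: match literally (dots are not wildcards); the trailing "/" ends the
  # address so that 10.0.0.10 does not match 10.0.0.100/24.
  grep -qF "inet ${vip}/"
}
```

Usage: ip -o -4 addr show | has_vip 10.0.0.10 && echo "this controller holds the VIP"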

