Logistic Regression: A Python Implementation

2016-04-26 15:28


Logistic Regression

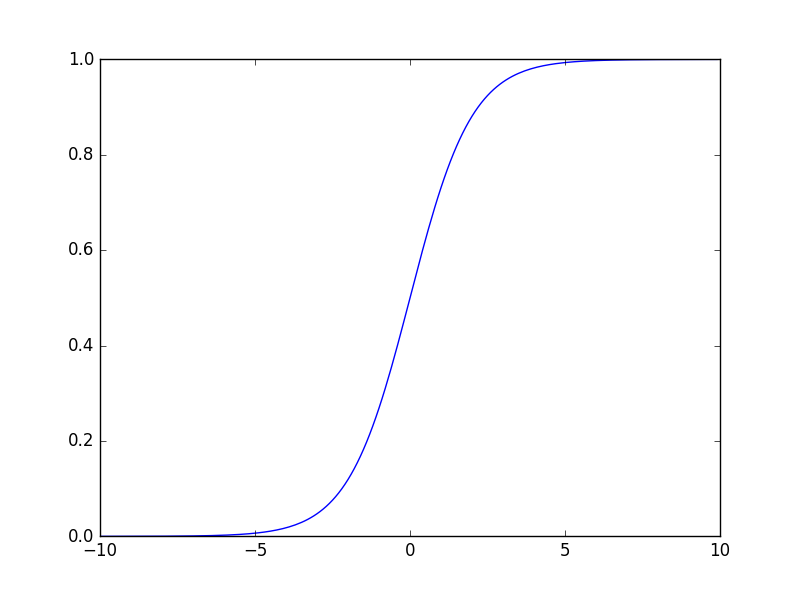

The logistic function is $f(x) = \frac{1}{1 + e^{-x}}$.
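A quick numerical sketch of the formula above. The helper name `sigmoid` matches the classifier code later in the post; the sample grid and the step size `h` are arbitrary choices for illustration. It checks that f(0) = 0.5, that f(-x) = 1 - f(x), and that the derivative satisfies f'(x) = f(x)(1 - f(x)), the identity that keeps the logistic-regression gradient simple.

```python
import numpy as np

def sigmoid(x):
    """Logistic function f(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + np.exp(-x))

x = np.linspace(-10, 10, 201)
f = sigmoid(x)

# f(0) is exactly 0.5, the midpoint of the S-curve
print(sigmoid(0.0))  # 0.5

# symmetry: f(-x) = 1 - f(x)
print(np.allclose(sigmoid(-x), 1 - f))  # True

# derivative check: central finite difference vs. f(x) * (1 - f(x))
h = 1e-5
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
print(np.allclose(numeric, f * (1 - f), atol=1e-8))  # True
```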

Its graph looks like this:

How to plot it:

```python
import numpy as np
import matplotlib.pyplot as plt

x = np.arange(-10, 10, 0.1)
y = 1 / (1 + np.exp(-x))   # logistic function
plt.plot(x, y)
plt.show()
```

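Because it rises steeply near 0, the logistic function can turn a regression problem into a classification problem: the real-valued output of a linear model is squashed into the interval (0, 1), and samples whose value exceeds 0.5 are assigned to class 1.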

For more detail, see my other blog post:

http://blog.csdn.net/taiji1985/article/details/50969697
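To make the "regression to classification" step concrete: a linear score z = w·x + b is passed through the logistic function, and the sample is labelled 1 when the result exceeds 0.5. Because the function is increasing and f(0) = 0.5, this is the same as checking z > 0, which is the test the `classify` function in the classifier code uses. The weights, bias, and sample points below are hypothetical values chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# hypothetical weights and bias for a 2-D problem (illustration only)
w = np.array([1.0, 1.0])
b = -20.0

X = np.array([[3.0, 4.0],     # well below the line x1 + x2 = 20
              [18.0, 17.0],   # well above it
              [10.0, 10.5]])  # just above it

z = X @ w + b                   # regression output: any real number
p = sigmoid(z)                  # squashed into (0, 1), read as P(y = 1 | x)
labels = (p > 0.5).astype(int)  # classification by thresholding at 0.5

print(labels)  # [0 1 1]
# thresholding p at 0.5 gives the same labels as thresholding z at 0
print(np.array_equal(labels, (z > 0).astype(int)))  # True
```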

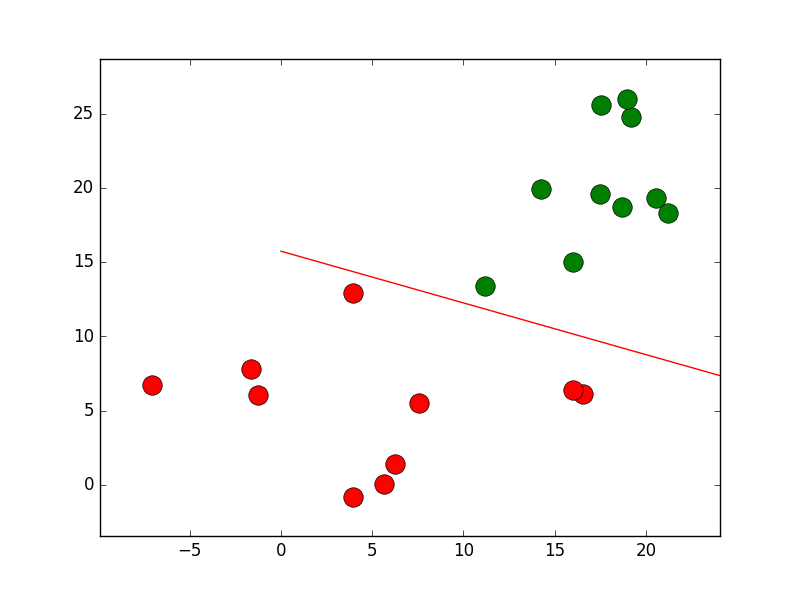

Data generation in ml/dt.py:

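```python
import numpy as np

def createD3():
    np.random.seed(101)
    ndim = 2
    n2 = 10
    # class 0: 10 points centred at (3, 3), standard deviation 5
    a = 3 + 5 * np.random.randn(n2, ndim)
    # class 1: 10 points centred at (18, 18), standard deviation 4
    b = 18 + 4 * np.random.randn(n2, ndim)
    X = np.concatenate((a, b))
    ay = np.zeros(n2)
    by = np.ones(n2)
    ylabel = np.concatenate((ay, by))
    return {'X': X, 'ylabel': ylabel}
```

Plotting in ml/fig.py: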

```python
# -*- coding: UTF-8 -*-
'''
Created on 2016-4-24
@author: Administrator
'''
import numpy as np
import matplotlib.pyplot as plt

# Plot a two-class 2-D dataset, one colour per class
def plotData(ds, fmt='o'):
    X = ds['X']
    y = ds['ylabel']
    cs = ['r', 'g']  # marker colour for class 0 and class 1
    for i in range(2):
        xx = X[y == i]  # rows belonging to class i
        plt.plot(xx[:, 0], xx[:, 1], fmt, markerfacecolor=cs[i], markersize=14)
    xmin, xmax = np.min(X[:, 0]), np.max(X[:, 0])
    ymin, ymax = np.min(X[:, 1]), np.max(X[:, 1])
    print(xmin, xmax, ymin, ymax)
    # pad the axes by 10% of the data range on every side
    dx = xmax - xmin
    dy = ymax - ymin
    plt.axis([xmin - dx * 0.1, xmax + dx * 0.1, ymin - dy * 0.1, ymax + dy * 0.1])
```

Classifier code (ml/lsd.py):

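```python
# -*- coding: UTF-8 -*-
'''
Logistic regression
Created on 2016-4-26
@author: taiji1985
'''
import numpy as np

def sigmoid(X):
    return 1.0 / (1 + np.exp(-X))

# Build the augmented matrix: append a final column of ones,
# so the last weight plays the role of the bias b
def augment(X):
    n = X.shape[0]
    return np.concatenate((X, np.ones((n, 1))), axis=1)

# Return an (n, 1) column of predicted labels, 0 or 1
def classify(X, w):
    X = np.asarray(X, dtype=float)
    w = np.asarray(w, dtype=float).reshape(-1, 1)  # accept a row or a column of weights
    X = augment(X)
    d = X @ w                      # w.x + b for every sample
    return (d > 0).astype(float)   # sigmoid(d) > 0.5 exactly when d > 0

# Learn w by gradient ascent on the log-likelihood
# alpha: learning rate
# max_c: cap on the number of iterations so the loop always ends (assumed default)
def learn(dataset, alpha=0.001, max_c=10000):
    X = np.asarray(dataset['X'], dtype=float)
    ylabel = np.asarray(dataset['ylabel'], dtype=float).reshape(-1, 1)
    # augmented matrix: one extra column, so one extra weight
    X = augment(X)
    ndim = X.shape[1]
    w = np.ones((ndim, 1))
    ep = 0.0001   # stop once no weight changes by more than ep
    i = 0
    cha = 1.0
    while cha > ep and i < max_c:
        # linear score y = wx + b, then the logistic function
        ypred = sigmoid(X @ w)
        # error between labels and predicted probabilities
        error = ylabel - ypred
        delta = alpha * X.T @ error
        w = w + delta
        cha = np.max(np.abs(delta))   # largest change in any weight
        print(i, w.T)
        i = i + 1
    return w
```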
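The update in `learn`, w ← w + α·Xᵀ(y − sigmoid(Xw)), comes from maximizing the log-likelihood of the labels. A short derivation, assuming X is the augmented matrix (last column all ones, so w includes the bias), p_i is the predicted probability for sample i, and using f'(z) = f(z)(1 − f(z)):

```latex
\begin{aligned}
p_i &= f(\mathbf{x}_i^\top \mathbf{w}) = \frac{1}{1 + e^{-\mathbf{x}_i^\top \mathbf{w}}} \\
\ell(\mathbf{w}) &= \sum_{i=1}^{n} \left[ y_i \ln p_i + (1 - y_i) \ln (1 - p_i) \right] \\
\frac{\partial \ell}{\partial \mathbf{w}} &= \sum_{i=1}^{n} \left[ y_i (1 - p_i) - (1 - y_i)\, p_i \right] \mathbf{x}_i
  = \sum_{i=1}^{n} (y_i - p_i)\, \mathbf{x}_i = X^\top (\mathbf{y} - \mathbf{p}) \\
\mathbf{w} &\leftarrow \mathbf{w} + \alpha\, X^\top (\mathbf{y} - \mathbf{p})
\end{aligned}
```

Since each step climbs the log-likelihood, the loop is strictly gradient ascent; it is the same as gradient descent on the negative log-likelihood (the cross-entropy loss).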

Test:

```python
'''
Created on 2016-4-26
@author: Administrator
'''
from ml import lsd
from ml import dt
from ml import fig
import numpy as np
import matplotlib.pyplot as plt

ds = dt.createD3()
w = lsd.learn(ds, 0.01)

# Draw the decision boundary w0*x + w1*y + w2 = 0 through its two axis intercepts
w0, w1, w2 = np.asarray(w).ravel()
lx = [0, -w2 / w0]
ly = [-w2 / w1, 0]
plt.figure()
fig.plotData(ds, 'o')
plt.plot(lx, ly)
plt.show()

ypred = lsd.classify(ds['X'], w)
ylabel = np.asarray(ds['ylabel']).reshape(-1, 1)
print(ypred)
print(ylabel)
dist = np.sum(ypred != ylabel)   # number of misclassified samples
print(dist, len(ylabel), 1.0 * dist / len(ylabel))
```
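For readers without the `ml` package layout, here is a single-file sketch of the same pipeline: augmented ones column, fixed-step gradient ascent, labels by thresholding the linear score at 0, and training error reported the way the test script does. It is a compact re-expression, not the author's code; the dataset (20 tightly clustered points per class around (-2, -2) and (2, 2)), the random seed, `ep`, `max_iter`, and the `log_likelihood` helper are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def augment(X):
    # append a column of ones so the last weight acts as the bias b
    return np.hstack([X, np.ones((X.shape[0], 1))])

def log_likelihood(Xa, y, w):
    # sum of y*ln(p) + (1-y)*ln(1-p), written with logaddexp for numerical stability
    z = Xa @ w
    return float(np.sum(-y * np.logaddexp(0, -z) - (1 - y) * np.logaddexp(0, z)))

def learn(X, y, alpha=0.01, ep=1e-4, max_iter=10000):
    """Fixed-step gradient ascent on the log-likelihood (sketch)."""
    Xa = augment(X)
    w = np.ones(Xa.shape[1])
    for _ in range(max_iter):
        delta = alpha * Xa.T @ (y - sigmoid(Xa @ w))
        w = w + delta
        if np.max(np.abs(delta)) < ep:  # stop once no weight moves by more than ep
            break
    return w

def classify(X, w):
    # sigmoid(z) > 0.5 is equivalent to z > 0
    return (augment(X) @ w > 0).astype(float)

# hypothetical, well-separated 2-D data: 20 points per class
rng = np.random.default_rng(0)
n2 = 20
X = np.concatenate([-2 + 0.5 * rng.standard_normal((n2, 2)),
                    2 + 0.5 * rng.standard_normal((n2, 2))])
y = np.concatenate([np.zeros(n2), np.ones(n2)])

w = learn(X, y)
ypred = classify(X, w)
dist = int(np.sum(ypred != y))  # misclassified training samples
print(w)
print(dist, len(y), dist / len(y))
```

Keeping the features on a small scale lets a fixed step of 0.01 improve the likelihood steadily; with raw features in the tens, as produced by `createD3`, a smaller `alpha` or standardized features may be needed.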
