Installing Sqoop2

2016-03-14 00:00

Summary: Sqoop2 installation

Based on documentation found online and on the Sqoop source code.

1. Preparation

Sqoop2 download URL: http://apache.fayea.com/sqoop/1.99.6/sqoop-1.99.6-bin-hadoop200.tar.gz

2. Install into the working directory

tar -xvf sqoop-1.99.6-bin-hadoop200.tar.gz

mv sqoop-1.99.6-bin-hadoop200 /usr/local/

3. Set the environment variables. Sqoop can also be installed under the hadoop user; I use root here as the example.

vi /etc/profile

Add the following:

export SQOOP_HOME=/usr/local/sqoop-1.99.6-bin-hadoop200

export PATH=$SQOOP_HOME/bin:$PATH

export CATALINA_BASE=$SQOOP_HOME/server

export LOGDIR=$SQOOP_HOME/logs

Save, exit, and apply the changes immediately:

source /etc/profile
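To confirm the variables actually took effect in the current shell, a quick check helps before going further. This is a minimal sketch: the function name check_sqoop_env is hypothetical, and it only verifies the variables exported above plus the presence of bin/sqoop.sh.

```shell
# Intended to be sourced into bash. Reports each problem it finds and
# returns non-zero if the Sqoop2 environment from /etc/profile looks wrong.
check_sqoop_env() {
  local rc=0
  if [ -z "${SQOOP_HOME:-}" ] || [ ! -d "$SQOOP_HOME" ]; then
    echo "SQOOP_HOME is unset or not a directory: '${SQOOP_HOME:-}'"
    return 1
  fi
  if [ ! -x "$SQOOP_HOME/bin/sqoop.sh" ]; then
    echo "missing or not executable: $SQOOP_HOME/bin/sqoop.sh"
    rc=1
  fi
  if [ "${CATALINA_BASE:-}" != "$SQOOP_HOME/server" ]; then
    echo "CATALINA_BASE should be $SQOOP_HOME/server, got '${CATALINA_BASE:-}'"
    rc=1
  fi
  # Wrap PATH in colons so only whole entries match.
  case ":$PATH:" in
    *":$SQOOP_HOME/bin:"*) ;;
    *) echo "\$SQOOP_HOME/bin is not on PATH"; rc=1 ;;
  esac
  return "$rc"
}
```

Usage: after source /etc/profile, run check_sqoop_env && echo OK.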

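4. Edit the Sqoop configuration

vi /usr/local/sqoop-1.99.6-bin-hadoop200/server/conf/sqoop.properties

# Point this at the Hadoop configuration directory (the one containing core-site.xml etc.), not at the Hadoop install root

org.apache.sqoop.submission.engine.mapreduce.configuration.directory=/usr/local/hadoop-2.7.1/etc/hadoop/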
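The property can also be set without opening an editor. A minimal sketch, assuming the file is server/conf/sqoop.properties and that the value should be Hadoop's configuration directory (etc/hadoop, the same directory given as "Hadoop conf directory" when the HDFS link is created below); set_property is a hypothetical helper.

```shell
# Set key=value in a Java-style .properties file: replace an existing
# uncommented entry for the key, or append one if there is none.
set_property() {
  local file=$1 key=$2 value=$3 key_re tmp rc=0
  # Escape regex metacharacters so the dots in the key match literally.
  key_re=$(printf '%s' "$key" | sed 's/[][\.*^$]/\\&/g')
  if grep -q "^[[:space:]]*${key_re}[[:space:]]*=" "$file"; then
    tmp=$(mktemp) || return 1
    # '|' is the sed delimiter so '/' in paths needs no escaping
    # (assumes the value contains no '|' or '&').
    sed "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key}=${value}|" "$file" > "$tmp" &&
      cat "$tmp" > "$file" || rc=1
    rm -f "$tmp"
    return "$rc"
  fi
  printf '%s=%s\n' "$key" "$value" >> "$file"
}
```

Usage: set_property $SQOOP_HOME/server/conf/sqoop.properties org.apache.sqoop.submission.engine.mapreduce.configuration.directory /usr/local/hadoop-2.7.1/etc/hadoop/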

# Pull in all the jars under the Hadoop directory

vi /usr/local/sqoop-1.99.6-bin-hadoop200/server/conf/catalina.properties

common.loader=/usr/local/hadoop-2.7.1/share/hadoop/common/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/common/lib/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/hdfs/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/hdfs/lib/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/mapreduce/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/mapreduce/lib/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/tools/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/tools/lib/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/yarn/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/yarn/lib/*.jar,/usr/local/hadoop-2.7.1/share/hadoop/httpfs/tomcat/lib/*.jar

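# Because it conflicts with the jars in hadoop-2.7.1, delete the log4j jar from $SQOOP_HOME/server/webapps/sqoop/WEB-INF/lib/

# Full paths are required here; environment variables cannot be used

Alternatively:

create a folder such as hadoop_lib under $SQOOP_HOME, copy these jars into it, and add that folder's path (for example /usr/local/sqoop-1.99.6-bin-hadoop200/hadoop_lib/*.jar) to the common.loader property. This approach is easier to follow.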
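Because the common.loader line is long and easy to mistype, it can be generated instead. A sketch that reproduces exactly the list of Hadoop directories given above from a Hadoop home; hadoop_common_loader is a hypothetical name. It emits only the Hadoop entries: if your stock catalina.properties already lists ${catalina.base}/lib entries (server/lib is where step 5 puts the MySQL driver), it is worth checking whether those should be kept in front.

```shell
# Print a common.loader= line for catalina.properties covering the jar
# directories of a Hadoop 2.x installation. Paths must be absolute.
hadoop_common_loader() {
  local hh=${1%/} out="" d   # strip one trailing slash from the Hadoop home
  for d in common common/lib hdfs hdfs/lib mapreduce mapreduce/lib \
           tools tools/lib yarn yarn/lib httpfs/tomcat/lib; do
    # Assignments do not glob, so '*.jar' stays literal.
    out="${out:+$out,}$hh/share/hadoop/$d/*.jar"
  done
  printf 'common.loader=%s\n' "$out"
}
```

Usage: hadoop_common_loader /usr/local/hadoop-2.7.1 prints the line to paste into server/conf/catalina.properties.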

5. Download the MySQL driver

mysql-connector-java-5.1.29.jar

and put it in $SQOOP_HOME/server/lib/.

[Note: the download is a mysql-xxx.tar.gz archive; only the mysql-connector-java-xxx-bin.jar inside it needs to be copied out. This one is easy to trip over.]
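Copying the jar out of the archive can be scripted. A sketch assuming Connector/J 5.1.x naming (a mysql-connector-java-<version>-bin.jar somewhere inside the tarball; later major versions name the jar differently); install_mysql_driver is hypothetical.

```shell
# Extract only the driver jar from a MySQL Connector/J tarball into a target
# directory such as $SQOOP_HOME/server/lib. Assumes 5.1.x-style jar naming.
install_mysql_driver() {
  local tarball=$1 dest=$2 work jar rc=0
  work=$(mktemp -d) || return 1
  if ! tar -xzf "$tarball" -C "$work"; then
    rm -rf "$work"; return 1
  fi
  # The archive also ships sources and docs; only the *-bin.jar is needed.
  jar=$(find "$work" -type f -name 'mysql-connector-java-*-bin.jar' | head -n 1)
  if [ -z "$jar" ]; then
    echo "no mysql-connector-java-*-bin.jar inside $tarball" >&2
    rc=1
  else
    mkdir -p "$dest" && cp "$jar" "$dest/" || rc=1
  fi
  rm -rf "$work"
  return "$rc"
}
```

Usage: install_mysql_driver <downloaded connector tarball> "$SQOOP_HOME/server/lib"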

6. Start/stop the Sqoop2 server

/usr/local/sqoop-1.99.6-bin-hadoop200/bin/sqoop.sh server start

/usr/local/sqoop-1.99.6-bin-hadoop200/bin/sqoop.sh server stop

Check the startup log:

vi /usr/local/sqoop-1.99.6-bin-hadoop200/server/logs/catalina.out
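Rather than paging through the whole of catalina.out, the usual failure markers can be grepped. A sketch; scan_sqoop_log is hypothetical, and the patterns (SEVERE, ERROR, Exception) are a heuristic that can also match harmless lines. A clean Tomcat start normally ends with a "Server startup in ... ms" line.

```shell
# Print suspicious lines (with line numbers) from Sqoop's catalina.out.
# Returns 0 if none were found, 1 if some were, 2 if the log is missing.
scan_sqoop_log() {
  local log=${1:-$SQOOP_HOME/server/logs/catalina.out}
  if [ ! -f "$log" ]; then
    echo "log not found: $log" >&2
    return 2
  fi
  if grep -nE 'SEVERE|ERROR|Exception' "$log"; then
    return 1
  fi
  return 0
}
```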

7. Start the interactive client

$SQOOP_HOME/bin/sqoop.sh client

sqoop:000> set server --host hadoopMaster --port 12000 --webapp sqoop [replace hadoopMaster with this machine's hostname]

sqoop:000> show version --all [show version information]

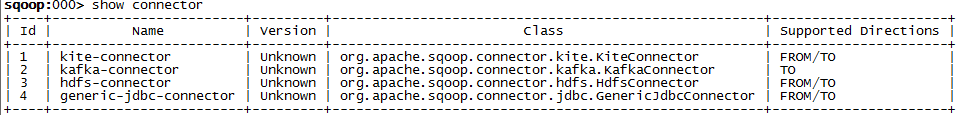

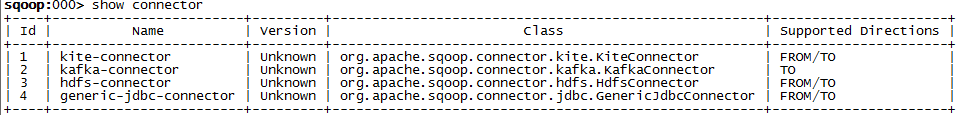

sqoop:000> show connector --all [list the available connectors]
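The same client commands can be saved and replayed: as far as I know, the Sqoop2 shell also accepts a script file (sqoop.sh client /path/to/script), treating lines that start with # as comments. A sketch that writes such a script; write_client_script is hypothetical and its defaults are this article's host and port.

```shell
# Write a Sqoop2 client batch script with the setup commands used above.
# Arguments: output file, optional host (default hadoopMaster), optional port (default 12000).
write_client_script() {
  local out=$1 host=${2:-hadoopMaster} port=${3:-12000}
  cat > "$out" <<EOF
# Sqoop2 client batch script
set server --host $host --port $port --webapp sqoop
set option --name verbose --value true
show version --all
show connector --all
EOF
}
```

Usage: write_client_script /tmp/check.sqoop && $SQOOP_HOME/bin/sqoop.sh client /tmp/check.sqoop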

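Create the links (the connector ids 3 and 4 below are taken from the show connector --all output and may differ on other installations):

1. Create the HDFS link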

sqoop:000> create link --cid 3

Creating link for connector with id 3

Please fill following values to create new link object

Name: hdfs --link name

Link configuration

HDFS URI: hdfs://hadoopMaster:8020/ --HDFS address

Hadoop conf directory:/usr/local/hadoop-2.7.1/etc/hadoop

New link was successfully created with validation status OK and persistent id 1

2. Create the MySQL link

sqoop:000> create link --cid 4

Creating link for connector with id 4

Please fill following values to create new link object

Name: mysqltest --link name

Link configuration

JDBC Driver Class: com.mysql.jdbc.Driver --JDBC driver class

JDBC Connection String: jdbc:mysql://mysql.server/database --JDBC URL

Username: sqoop --database user

Password: ************ --database password

JDBC Connection Properties:

There are currently 0 values in the map:

entry# protocol=tcp

There are currently 1 values in the map:

protocol = tcp

entry# (press Enter to finish)

New link was successfully created with validation status OK and persistent id 2

3. Create a job (MySQL to HDFS)

sqoop:000> show link

sqoop:000> create job -f 2 -t 1

Creating job for links with from id 2 and to id 1

Please fill following values to create new job object

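Name: Sqoopy --job name

From database configuration

Schema name: (Required)sqoop --database name, required

Table name:(Required)sqoop --table name, required

Table SQL statement:(Optional) --SQL query used instead of a table, optional

Table column names:(Optional) --columns to select from the result, optional

Partition column name:(Optional) id --unique key used to split the work; must be given in the [alias.column] form used in the query, optional

Null value allowed for the partition column:(Optional) --optional

Boundary query:(Optional) --SQL returning min(id) and max(id); it filters on the check column, passing lastValue as the first parameter and the current maximum fetched as the second [the second parameter is hard to control this way; I'm tempted to patch the source...], optional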

Incremental read

Check column:(Optional) --optional

Last value:(Optional) --optional

To HDFS configuration

Null value: (Optional) --optional

Output format:

0 : TEXT_FILE

1 : SEQUENCE_FILE

Choose: 0 --choose the output file format

Compression format:

0 : NONE

1 : DEFAULT

2 : DEFLATE

3 : GZIP

4 : BZIP2

5 : LZO

6 : LZ4

7 : SNAPPY

8 : CUSTOM

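Choose: 0 --choose the compression codec

Custom compression format:(Optional) --optional

Output directory:(Required)/root/projects/sqoop --HDFS output (destination) directory, required

Append mode:(Optional) --whether to append to existing output (incremental import), optional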

Driver Config

Extractors: 2 --number of extractors

Loaders: 2 --number of loaders

New job was successfully created with validation status OK and persistent id 1

# List jobs

sqoop:000> show job

# Run the job with the start job command, passing the job id via --jid

sqoop:000> start job --jid 1
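After start job, the 1.99.x shell's status job --jid 1 reports progress, and once the job finishes its files appear under the output directory (/root/projects/sqoop here). A sketch that summarises an hdfs dfs -ls listing of that directory; summarise_hdfs_ls is hypothetical and relies on the standard listing layout (permission string first, file size in column 5).

```shell
# Read `hdfs dfs -ls <dir>` output on stdin and print how many regular files
# it lists and their total size. Directory lines (starting with 'd') and the
# "Found N items" header are skipped.
summarise_hdfs_ls() {
  awk '/^-/ { n++; bytes += $5 }
       END  { printf "%d files, %.0f bytes\n", n, bytes }'
}
```

Usage: hdfs dfs -ls /root/projects/sqoop | summarise_hdfs_ls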

Issue 1:

Check the Tomcat ports in $SQOOP_HOME/server/conf/server.xml and make sure they do not conflict with any other Tomcat servers on the machine.
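A sketch that lists the ports actually declared in server.xml, skipping anything inside XML comments, so they can be compared with the output of ss -ltn (or netstat -ltn); list_server_ports is hypothetical.

```shell
# Print the distinct port="..." values in a Tomcat server.xml, ignoring text
# inside <!-- ... --> comments (which may span several lines).
list_server_ports() {
  local xml=${1:-$SQOOP_HOME/server/conf/server.xml}
  awk '
    {
      line = $0; out = ""
      while (line != "") {
        if (incomment) {
          i = index(line, "-->")
          if (i == 0) { line = "" } else { line = substr(line, i + 3); incomment = 0 }
        } else {
          i = index(line, "<!--")
          if (i == 0) { out = out line; line = "" }
          else { out = out substr(line, 1, i - 1); line = substr(line, i + 4); incomment = 1 }
        }
      }
      print out
    }' "$xml" | grep -o 'port="[0-9]*"' | tr -dc '0-9\n' | sort -un
}
```

Note that the lowercase pattern does not match attributes such as redirectPort, so only listening and shutdown ports are reported.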

Issue 2:

Delete log4j-1.2.16.jar from $SQOOP_HOME/server/webapps/sqoop/WEB-INF/lib to resolve the jar conflict.
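A reversible variant of this fix moves the jar aside instead of deleting it. A sketch; disable_webapp_log4j and the default backup location are hypothetical. If the webapps/sqoop directory does not exist yet, that may be because the server has not been started once and the web application has not been unpacked.

```shell
# Move log4j-*.jar out of the Sqoop webapp's WEB-INF/lib into a backup
# directory, so the change can be undone by moving the jar back.
disable_webapp_log4j() {
  local lib=${1:-$SQOOP_HOME/server/webapps/sqoop/WEB-INF/lib}
  local backup=${2:-$HOME/sqoop-log4j-backup} f found=0
  if [ ! -d "$lib" ]; then
    echo "not a directory: $lib (has the server been started once?)" >&2
    return 1
  fi
  mkdir -p "$backup" || return 1
  for f in "$lib"/log4j-*.jar; do
    [ -e "$f" ] || continue   # the glob matched nothing
    mv "$f" "$backup/" || return 1
    echo "moved $(basename "$f") to $backup/"
    found=1
  done
  if [ "$found" -eq 0 ]; then
    echo "no log4j-*.jar found in $lib"
  fi
}
```

Restart the server afterwards so the change takes effect.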

Issue 3:

To see job details in the Sqoop client, turn on verbose output:

set option --name verbose --value true
