Machine Learning - Regularized Logistic Regression

2016-02-21 16:14


This series of articles contains study notes for the "Machine Learning" course by Prof. Andrew Ng, Stanford University. This article covers week 3, Solving the Problem of Overfitting, Part III: how to implement logistic regression with regularization to address overfitting.

In this section, we'll show how you can adapt both of those techniques, both gradient descent and the more advanced optimization techniques in order to have them work for regularized logistic regression.

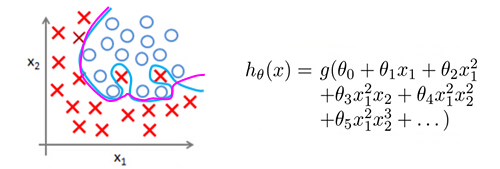

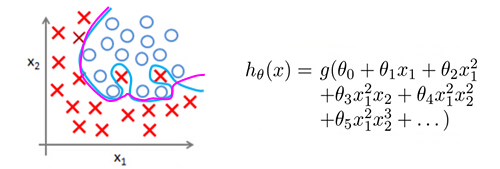

So long as you apply regularization and keep the parameters small you're more likely to get a decision boundary in pink.

And if we want to

modify it to use regularization, all we need to do is add to it the following term plusλ/2m,

sum from j = 1, and as usual sum fromj = 1 soon up to theta

n from being too large.

This is the cost function for logistic regression with regularization

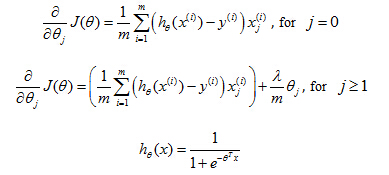

we can write the equation separately as follow

We're working out gradient descent for regularized linear regression. And of course, just to wrap up this discussion,this term here in the square brackets is the new partial derivative for respect

ofθj of the new cost functionJ(θ). WhereJ(θ) here is

the cost function we defined on a previous that does use regularization.

that takes us input the parameter vector theta and once again in the equations we've been writing here we used 0 index vectors.

about how to implementation logistic regression with regularization to addressing overfitting.

Regularized logistic regression

For logistic regression, we previously talked about two types of optimization algorithms: gradient descent, used to minimize the cost function $J(\theta)$, and the more advanced optimization methods. In this section, we'll show how to adapt both techniques so that they work for regularized logistic regression.

As long as you apply regularization and keep the parameters small, you are more likely to get the decision boundary shown in pink.

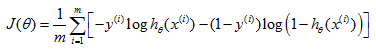

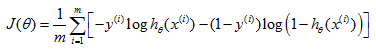

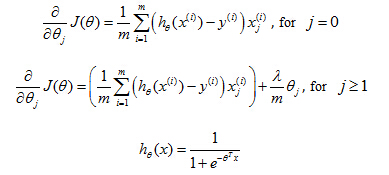

1. Cost Function of Logistic Regression

This is the original cost function for logistic regression without regularization

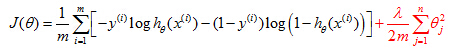

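If we want to modify it to use regularization, all we need to do is add the term $\frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2$. As usual, the sum starts from $j = 1$ rather than $j = 0$, so $\theta_0$ is not penalized. This term keeps the parameters $\theta_1, \theta_2, \ldots, \theta_n$ from being too large.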

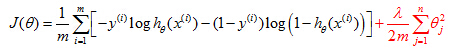

This is the cost function for logistic regression with regularization

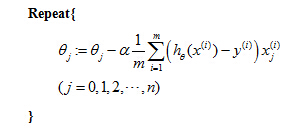

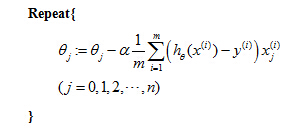
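The regularized cost described above can be sketched in Python with NumPy. This is a minimal illustration, not code from the course: the names `sigmoid`, `regularized_cost` and `lam`, and the tiny data set, are assumptions made for the example. The design matrix `X` is assumed to carry a leading column of ones, so `theta[0]` plays the role of θ0 and is left out of the penalty.

```python
import numpy as np

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def regularized_cost(theta, X, y, lam):
    """Regularized logistic regression cost J(theta).

    X   : (m, n+1) design matrix whose first column is all ones
    y   : (m,) labels in {0, 1}
    lam : regularization strength (lambda)
    """
    m = y.size
    h = sigmoid(X @ theta)
    # Unregularized cross-entropy term, averaged over the m examples.
    data_term = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    # Penalty sums over j = 1..n only; theta[0] is not penalized.
    penalty = lam / (2 * m) * np.sum(theta[1:] ** 2)
    return data_term + penalty

# Hypothetical toy data: three examples, one feature plus the intercept column.
X = np.array([[1.0, 0.5], [1.0, -1.5], [1.0, 2.0]])
y = np.array([1.0, 0.0, 1.0])
print(regularized_cost(np.array([5.0, 1.0]), X, y, lam=1.0))
```

Changing only `theta[0]` leaves the penalty untouched, which is one quick way to check that the intercept is excluded.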

2. Gradient descent for logistic regression

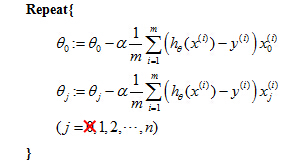

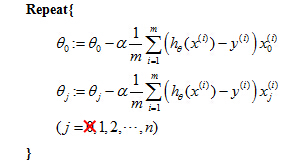

Gradient descent for logistic regression (without regularization)

We can write the update for $\theta_0$ separately from the updates for $\theta_1, \ldots, \theta_n$, as follows:

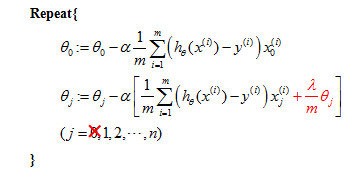

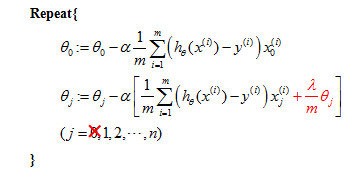

Gradient descent for logistic regression (with regularization)

This is gradient descent for regularized logistic regression. The update looks the same as the one for regularized linear regression, but it is a different algorithm because the hypothesis is now $h_\theta(x) = \frac{1}{1 + e^{-\theta^T x}}$. To wrap up this discussion, the term in the square brackets is the new partial derivative with respect to $\theta_j$ of the new cost function $J(\theta)$, where $J(\theta)$ is the cost function defined earlier that does use regularization.

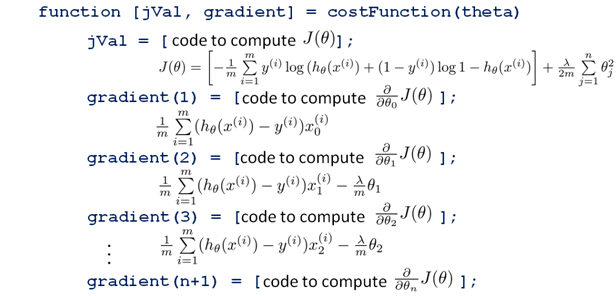

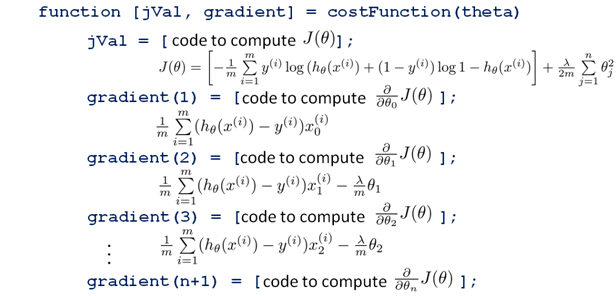
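The regularized update can be sketched as follows, again in Python with NumPy. The function names, the learning rate `alpha`, the iteration count and the toy data are all assumptions chosen for illustration. The intercept `theta[0]` gets the plain gradient, while every other parameter gets the extra `(lam / m) * theta[j]` term.

```python
import numpy as np

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def gradient_step(theta, X, y, alpha, lam):
    """One simultaneous gradient descent update for regularized logistic regression."""
    m = y.size
    error = sigmoid(X @ theta) - y      # h_theta(x^(i)) - y^(i) for every example
    grad = X.T @ error / m              # unregularized partial derivatives
    grad[1:] += lam / m * theta[1:]     # regularization term, skipping theta_0
    return theta - alpha * grad

def gradient_descent(X, y, alpha=0.1, lam=1.0, iters=1000):
    """Run a fixed number of updates starting from theta = 0."""
    theta = np.zeros(X.shape[1])
    for _ in range(iters):
        theta = gradient_step(theta, X, y, alpha, lam)
    return theta

# Hypothetical toy data: first column is the intercept feature x_0 = 1.
X = np.array([[1.0, 0.5], [1.0, -1.5], [1.0, 2.0]])
y = np.array([1.0, 0.0, 1.0])
print(gradient_descent(X, y, lam=0.0))
print(gradient_descent(X, y, lam=10.0))
```

Comparing the two printed vectors shows the intended effect: a larger `lam` pulls the non-intercept parameter toward zero.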

3. Advanced Optimization

Let's talk about how to get regularized logistic regression to work using the more advanced optimization methods. As a reminder, what these methods need is a function, called the cost function, that takes the parameter vector $\theta$ as input. Once again, the equations we've been writing here use 0-indexed vectors.
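As a rough Python counterpart, the sketch below hands such a cost function to `scipy.optimize.minimize`. This is a swapped-in optimizer chosen for illustration, not the tool used in the course, and the names `cost_function`, `lam` and the toy data are assumptions. With `jac=True`, SciPy expects the function to return both the cost value and the gradient.

```python
import numpy as np
from scipy.optimize import minimize

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def cost_function(theta, X, y, lam):
    """Return (J(theta), gradient) for regularized logistic regression."""
    m = y.size
    h = sigmoid(X @ theta)
    # Cost: cross-entropy plus the penalty over theta_1..theta_n.
    j_val = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    j_val += lam / (2 * m) * np.sum(theta[1:] ** 2)
    # Gradient: theta[0] (i.e. theta_0) gets no regularization term.
    gradient = X.T @ (h - y) / m
    gradient[1:] += lam / m * theta[1:]
    return j_val, gradient

# Hypothetical toy data: first column is the intercept feature x_0 = 1.
X = np.array([[1.0, 0.5], [1.0, -1.5], [1.0, 2.0]])
y = np.array([1.0, 0.0, 1.0])

result = minimize(cost_function, np.zeros(X.shape[1]),
                  args=(X, y, 1.0), method="BFGS", jac=True)
print(result.x, result.fun)
```

Because Python arrays are 0-indexed, `theta[0]` corresponds directly to θ0 in the equations, so no index shift is needed in this sketch.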
