Week 5-3 PP attachment 2

2015-11-30 17:03


Algorithms for PP attachment

Alg0

A trivial baseline: assign every instance the same default label, say 'low'.

Alg1: random baseline

A random unsupervised baseline labels each instance in the test data with a random label, 0 or 1. In practice, it serves as the lower bound for all the other algorithms.
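The two baselines can be sketched in a few lines of Python. This is only an illustration: the function names, the toy instances, and the assumption that each instance is a (verb, noun1, prep, noun2) tuple are not from the lecture, and the labels are written as the strings 'low' and 'high' rather than 0 and 1.

```python
import random

LABELS = ("low", "high")  # string labels; the 0/1 encoding is left unspecified

def alg0_default(instances, default="low"):
    """Alg0: give every instance the same default label."""
    return [default for _ in instances]

def alg1_random(instances, seed=0):
    """Alg1: give every instance a uniformly random label."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    return [rng.choice(LABELS) for _ in instances]

def accuracy(predicted, gold):
    """Fraction of predictions that match the gold labels."""
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)

# Hypothetical toy data, assumed to be (verb, noun1, prep, noun2) tuples.
instances = [
    ("ate", "pizza", "with", "fork"),
    ("sold", "shares", "of", "stock"),
    ("gave", "book", "to", "friend"),
    ("met", "head", "of", "state"),
]
gold = ["high", "low", "high", "low"]

print(accuracy(alg0_default(instances), gold))
print(accuracy(alg1_random(instances), gold))
```

On a roughly balanced data set both baselines should land near 50%, which is why they are useful as a floor for comparison.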

Evaluation is performed on the test set, not the training set. The test set should never be used until the algorithm is completely finished; use the development set to tune parameters or add features.
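The protocol above can be illustrated with a minimal sketch: candidates are compared on the development set only, and the test set is touched once, at the end. The candidate classifiers, the helper names, and the toy data are all hypothetical.

```python
def accuracy(predict, data):
    """Fraction of (tuple, label) pairs that `predict` labels correctly."""
    return sum(predict(tup) == label for tup, label in data) / len(data)

def select_on_dev(candidates, dev):
    """Return the name of the candidate with the highest dev accuracy."""
    return max(candidates, key=lambda name: accuracy(candidates[name], dev))

# Hypothetical candidates: two default-label classifiers.
candidates = {
    "always_low": lambda tup: "low",
    "always_high": lambda tup: "high",
}

# Hypothetical toy splits of (tuple, label) pairs.
dev = [
    (("ate", "pizza", "with", "fork"), "high"),
    (("sold", "shares", "of", "stock"), "low"),
    (("gave", "book", "to", "friend"), "high"),
]
test = [
    (("met", "head", "of", "state"), "low"),
    (("sent", "letter", "to", "editor"), "high"),
]

best = select_on_dev(candidates, dev)            # tuning uses dev only
test_accuracy = accuracy(candidates[best], test)  # test is used exactly once
print(best, test_accuracy)
```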

Observations

The data set is domain-specific.

An overly complicated method will lead to overfitting.

Upper Bound

Usually, human performance is used as the upper bound. For PP attachment, using the 4 previous features, human accuracy is around 88%. So we could expect an upper bound of 87%.

Using linguistic knowledge

For example, we know the preposition 'of' is much more likely to be associated with low attachment than high attachment (98.7% with 5000+ instances in the training set). Therefore, the feature prep_of is very valuable (informative and frequent).

Alg2
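The figure quoted for 'of' is a per-preposition relative frequency. A minimal sketch of how such a rate could be computed from training data follows; the helper name, the (verb, noun1, prep, noun2) tuple layout, and the toy data are assumptions for illustration, not the lecture's actual data.

```python
from collections import Counter

def low_attachment_rate(train, prep):
    """Share of training instances with this preposition that are labeled 'low'.

    Returns None if the preposition never occurs in `train`.
    """
    counts = Counter(label for (v, n1, p, n2), label in train if p == prep)
    total = sum(counts.values())
    return counts["low"] / total if total else None

# Hypothetical toy training set of ((verb, noun1, prep, noun2), label) pairs.
train = [
    (("sold", "shares", "of", "stock"), "low"),
    (("met", "head", "of", "state"), "low"),
    (("gave", "book", "to", "friend"), "high"),
    (("ate", "pizza", "with", "fork"), "high"),
]

for prep in ("of", "to", "by"):
    print(prep, low_attachment_rate(train, prep))
```

A feature is worth a dedicated rule when this rate is far from 50% and the preposition is frequent enough for the estimate to be trusted.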

If the prep is 'of', label the tuple as 'low'

Else if the prep is 'to', label the tuple as 'high'

Else label the tuple as 'low' (default)

The accuracy will be around 60%, showing that linguistic knowledge improves the algorithm.
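The Alg2 rules translate directly into a small function. This is a sketch that assumes each tuple is laid out as (verb, noun1, prep, noun2); the function name and the sample tuples are made up for illustration.

```python
def alg2(tup, default="low"):
    """Rule-based labeling: 'of' -> low, 'to' -> high, otherwise the default."""
    verb, noun1, prep, noun2 = tup  # assumed tuple layout
    if prep == "of":
        return "low"
    if prep == "to":
        return "high"
    return default

# Hypothetical sample tuples.
print(alg2(("sold", "shares", "of", "stock")))
print(alg2(("gave", "book", "to", "friend")))
print(alg2(("ate", "pizza", "with", "fork")))
```

Keeping the default as a parameter makes it easy to compare different fallback labels without rewriting the rules.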

Alg2a

If the prep is 'of', label the tuple as 'low'

Else if the prep is 'to', label the tuple as 'high'

Else label the tuple as 'high' (default)

After the rules for 'of' and 'to' are applied, the remaining data contains more high attachments than low attachments, so defaulting to 'high' brings the accuracy to around 74%.

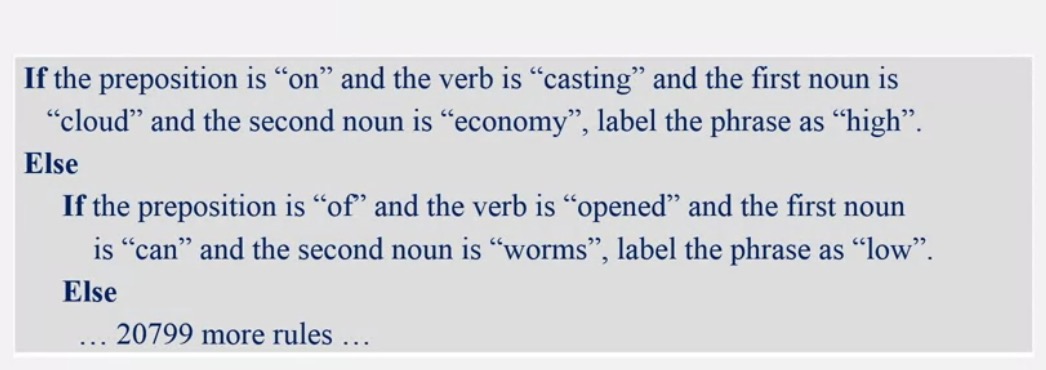
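The choice of default in Alg2a can be derived from the training data rather than guessed: take the majority label among the tuples that no explicit rule covers. The sketch below assumes (verb, noun1, prep, noun2) tuples; the helper names and the toy data are hypothetical.

```python
from collections import Counter

def residual_majority(train, handled=("of", "to")):
    """Majority label among training tuples whose prep has no explicit rule."""
    counts = Counter(
        label for (v, n1, p, n2), label in train if p not in handled
    )
    if not counts:
        raise ValueError("no training tuples left after applying the rules")
    return counts.most_common(1)[0][0]

def make_rule_classifier(default):
    """Build the 'of' -> low, 'to' -> high classifier with a chosen default."""
    def classify(tup):
        prep = tup[2]
        if prep == "of":
            return "low"
        if prep == "to":
            return "high"
        return default
    return classify

# Hypothetical toy training set.
train = [
    (("sold", "shares", "of", "stock"), "low"),
    (("gave", "book", "to", "friend"), "high"),
    (("ate", "pizza", "with", "fork"), "high"),
    (("put", "book", "in", "bag"), "high"),
    (("read", "article", "on", "syntax"), "low"),
]

default = residual_majority(train)
alg2a = make_rule_classifier(default)
print(default, alg2a(("saw", "man", "with", "telescope")))
```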

Alg3

This approach sets a rule for every case in the training set, which is too specific.

Remark: Due to inconsistent human labelers, more context is needed.
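Alg3 and the remark about inconsistent labels can be illustrated together with a memorizing classifier. This is a sketch under assumptions: the lecture does not say how unseen tuples or conflicting labels are handled, so the fallback default and the majority vote below are illustrative choices, as are the names and the toy data.

```python
from collections import Counter, defaultdict

def train_alg3(train):
    """Memorize one label per exact tuple and report inconsistently labeled tuples."""
    seen = defaultdict(Counter)
    for tup, label in train:
        seen[tup][label] += 1
    # Assumption: conflicts are resolved by majority vote.
    table = {tup: counts.most_common(1)[0][0] for tup, counts in seen.items()}
    conflicts = [tup for tup, counts in seen.items() if len(counts) > 1]
    return table, conflicts

def alg3(table, tup, default="high"):
    """Look up the exact tuple; fall back to an assumed default if unseen."""
    return table.get(tup, default)

# Hypothetical toy training set with one inconsistently labeled tuple.
ambiguous = ("saw", "man", "with", "telescope")
train = [
    (ambiguous, "high"),
    (ambiguous, "high"),
    (ambiguous, "low"),
    (("sold", "shares", "of", "stock"), "low"),
]

table, conflicts = train_alg3(train)
print(alg3(table, ambiguous), conflicts)
print(alg3(table, ("bought", "shares", "of", "stock")))  # unseen tuple
```

The unseen tuple in the last line differs from a training tuple only in its verb, yet the table has nothing to say about it, which is the sense in which one rule per training case is too specific.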
