Implementing Web HA with RHCS on RHEL 5.8

2014-06-15 21:38


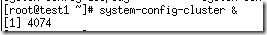

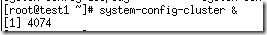

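These notes record building a high-availability cluster with Red Hat Cluster Suite (RHCS), using a web service as the test workload. An RHCS cluster has three prerequisites:

① every cluster has a unique cluster name;
② there is at least one fence device;
③ there should be at least three nodes; a two-node setup needs a qdisk (quorum disk).

Lab environment:

- Cluster hosts: 192.168.1.6 test1; 192.168.1.7 test2; 192.168.1.8 test3
- Shared storage (NFS): 192.168.1.170

The goal is an HA web service whose resources are a VIP, the shared storage, and the httpd service. As usual, hostname resolution, time synchronization, and SSH mutual trust also need to be configured. (SSH trust is not mentioned in the official RHCS installation documentation, but it is easy to set up and does no harm.)

I. Configure the shared storage first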

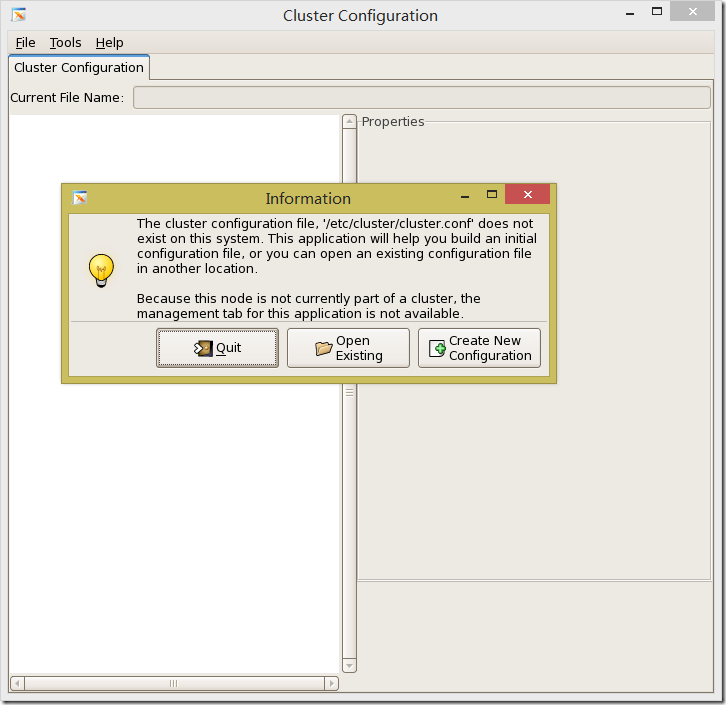

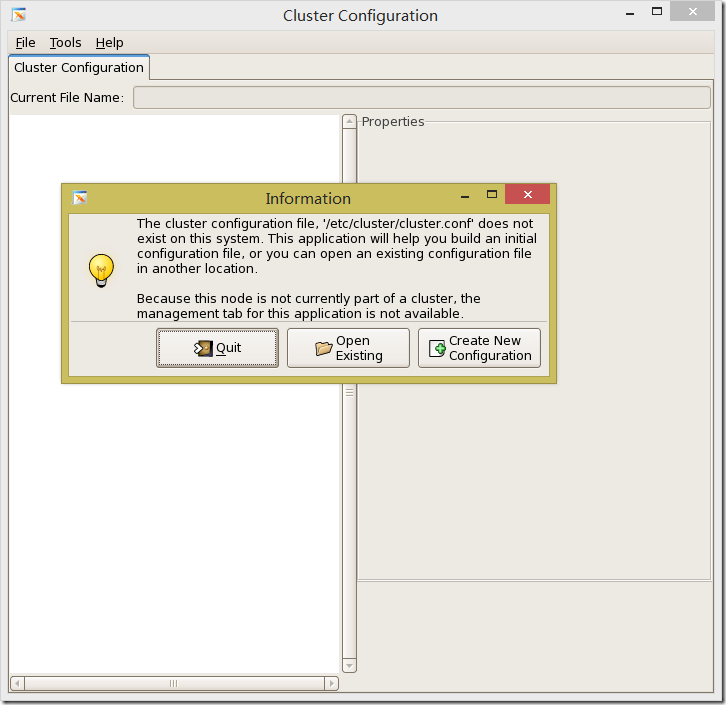


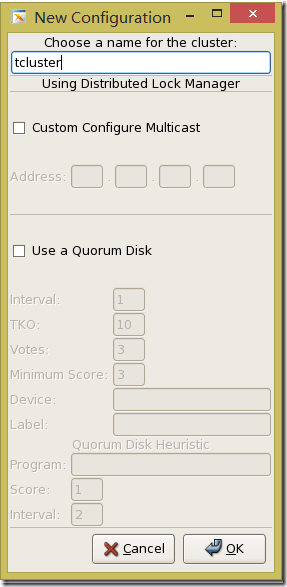

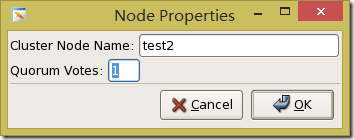

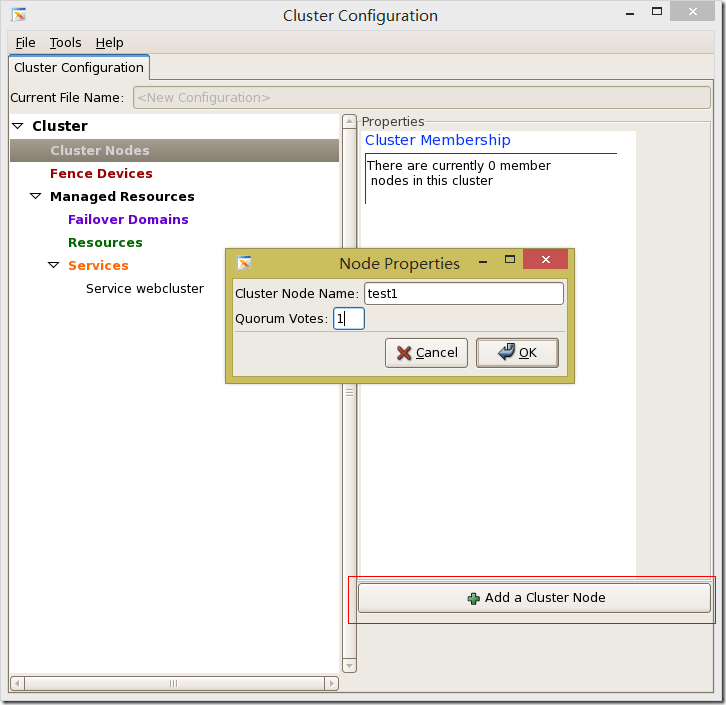

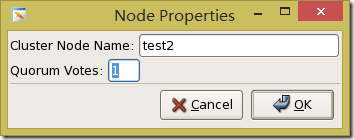

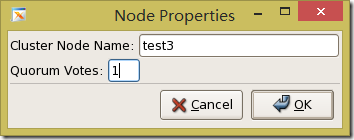


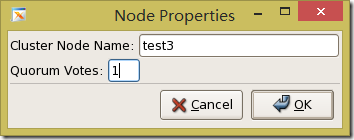

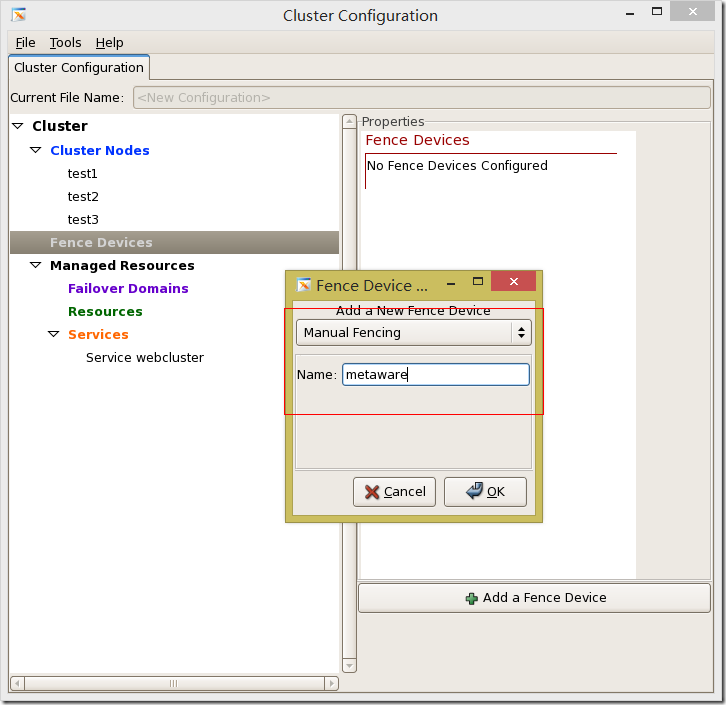


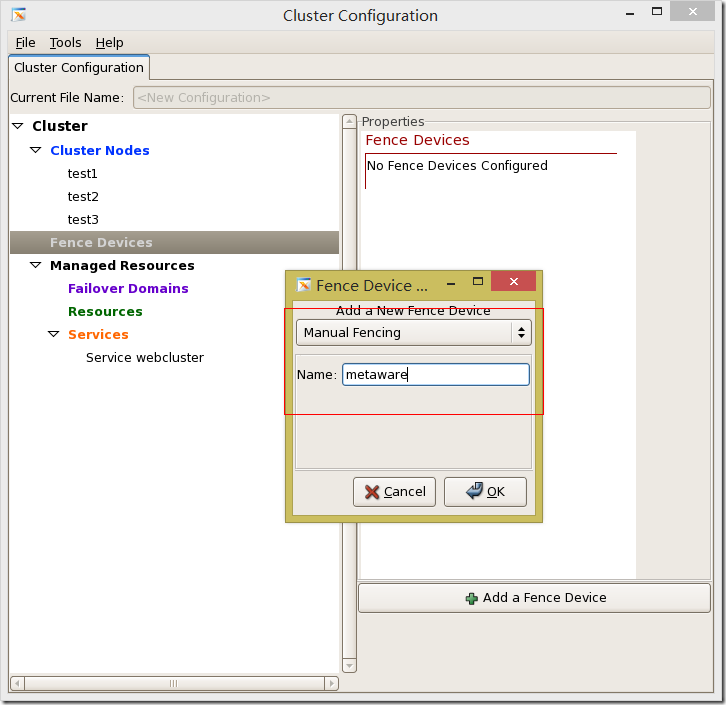

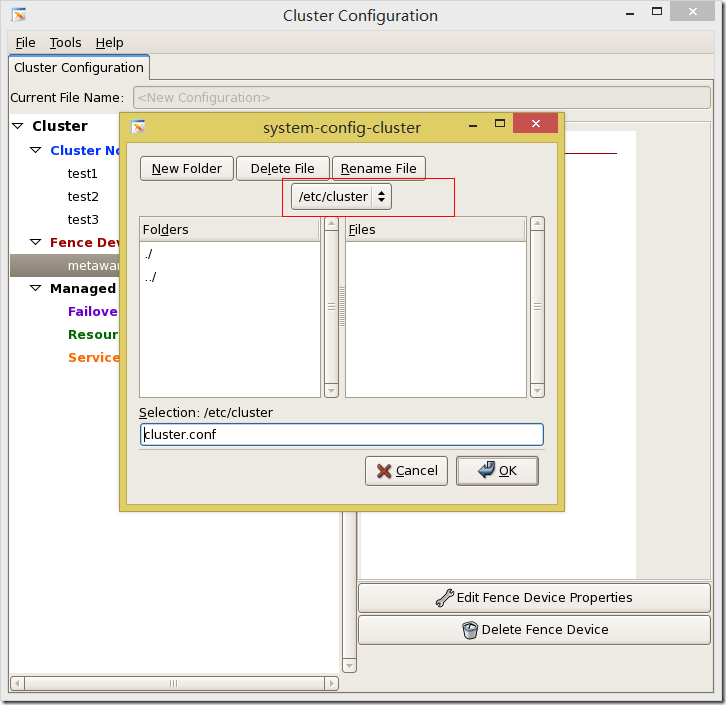


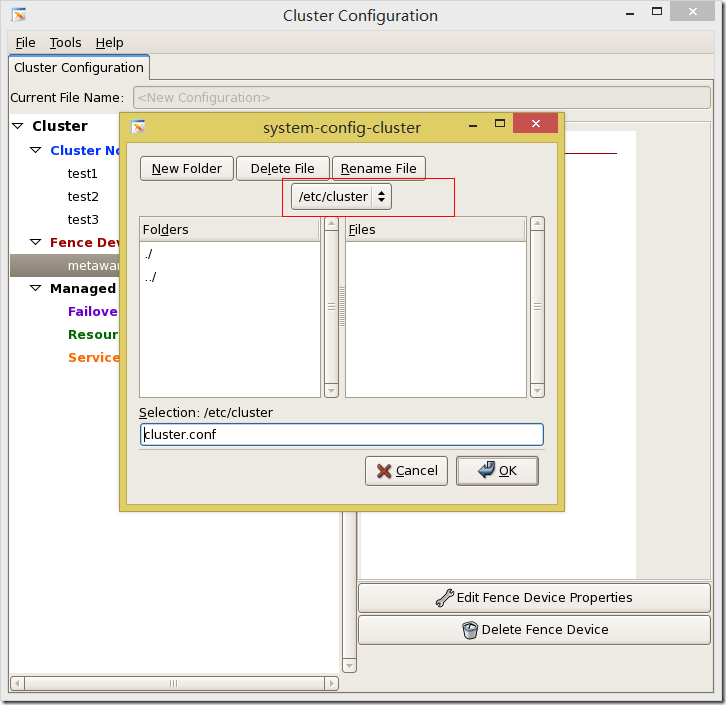


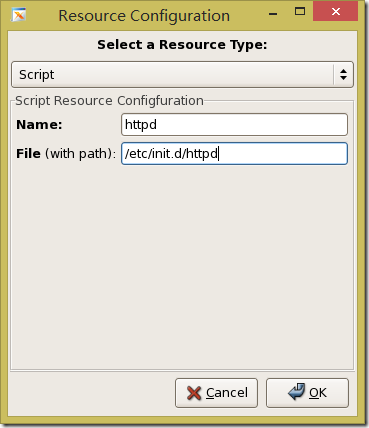


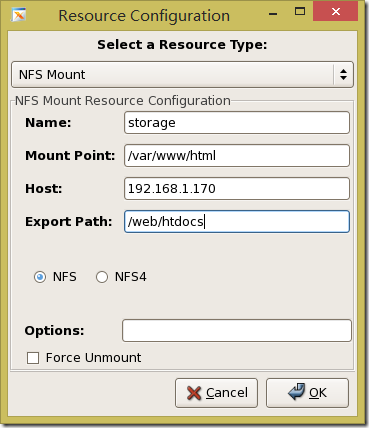

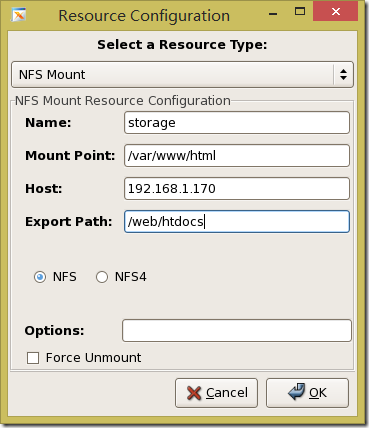


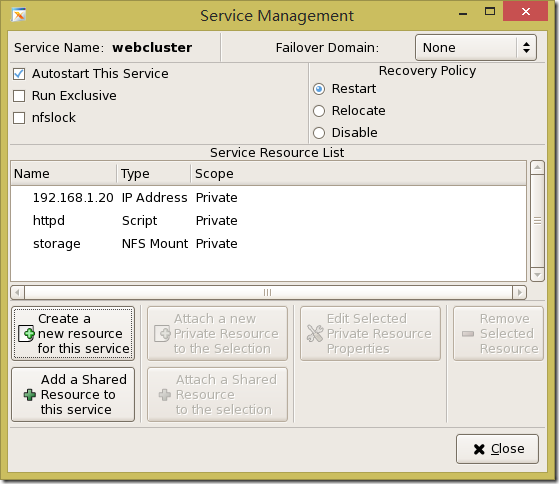

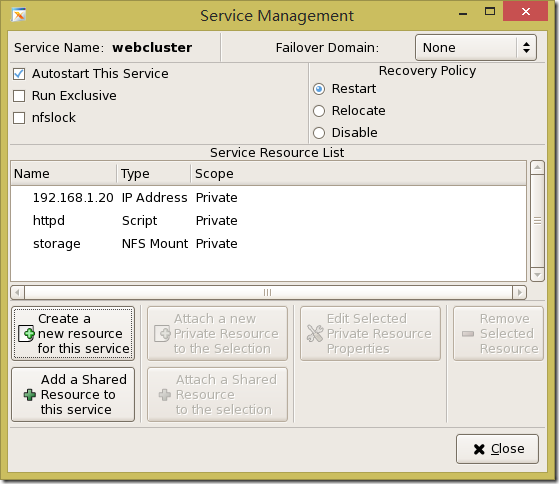


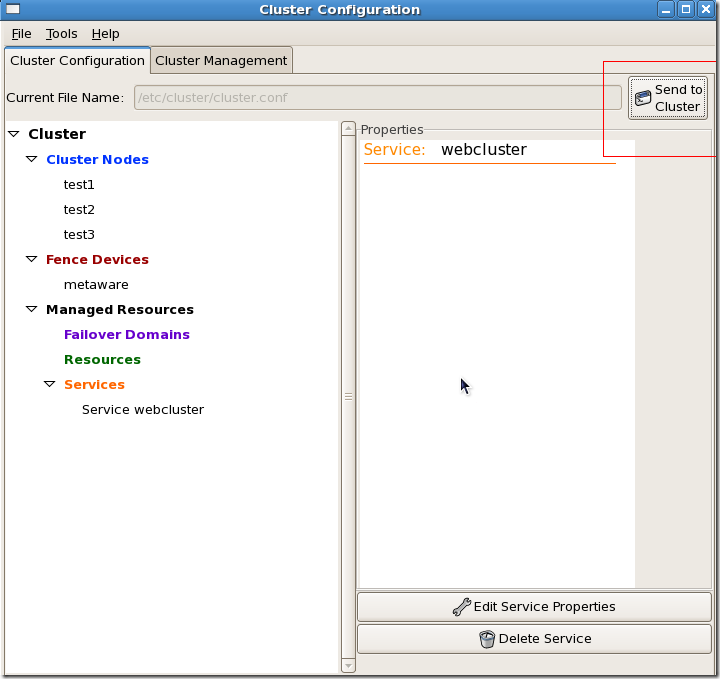

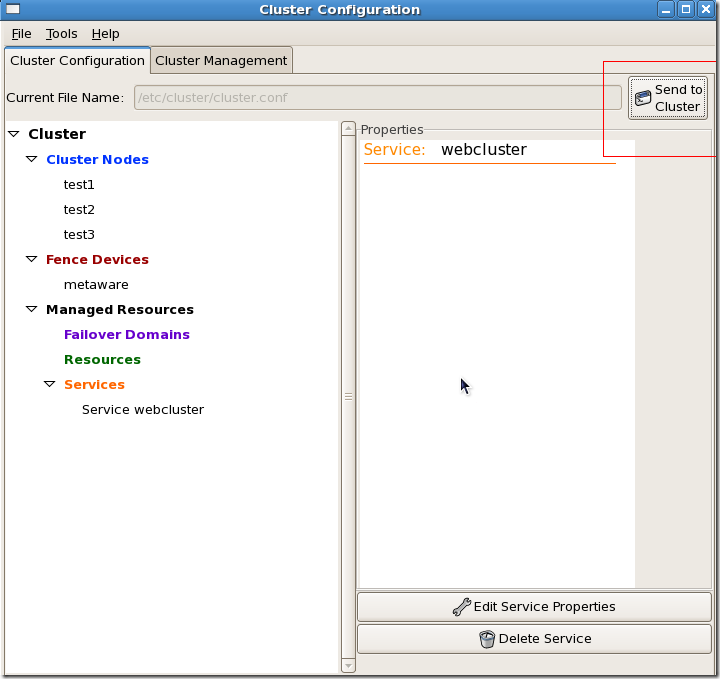


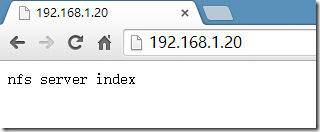


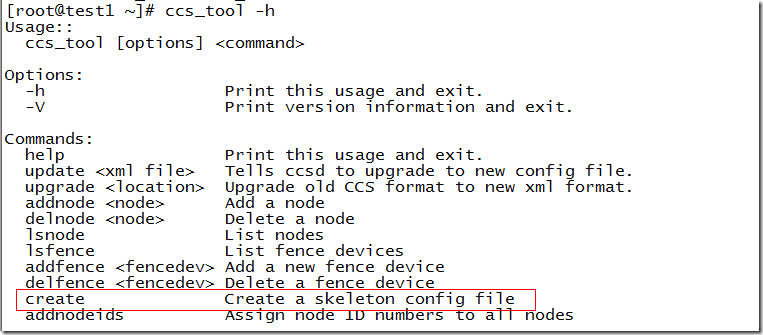


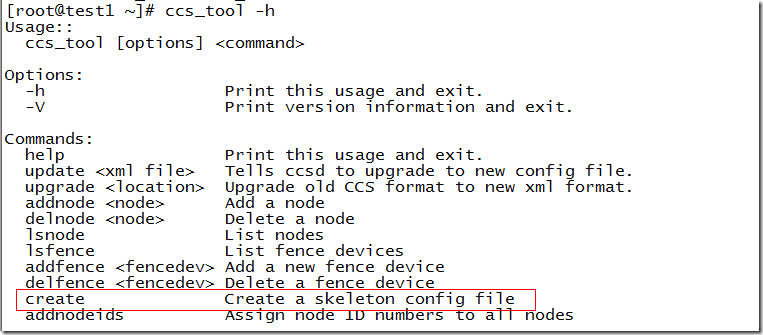


    [root@one ~]# mkdir -p /web/htdocs
    [root@one ~]# echo > /web/htdocs/index.html
    [root@one ~]# echo >> /etc/exports
    [root@one ~]# service nfs restart
    Shutting down NFS daemon: [FAILED]
    Shutting down NFS mountd: [FAILED]
    Shutting down NFS quotas: [FAILED]
    Starting NFS services: [ OK ]
    Starting NFS quotas: [ OK ]
    Starting NFS mountd: [ OK ]
    Stopping RPC idmapd: [ OK ]
    Starting RPC idmapd: [ OK ]
    Starting NFS daemon: [ OK ]
    [root@one ~]# showmount -e 192.168.1.170
    Export list for 192.168.1.170:
    /web/htdocs 192.168.1.0/255.255.255.0

II. Install httpd and disable it at boot

httpd is a cluster resource, so starting and stopping it should be left entirely to the cluster.

    [root@test1 ~]# ha ssh test$i ;done
    [root@test1 ~]# ha ssh test$i ;done

III. Configure RHCS

⒈ Install the RHCS base software

Configure yum:

    [root@test1 ~]# vi /etc/yum.repos.d/aeon.repo
    [Server]
    name=Server
    baseurl=file:
    enabled=1
    gpgcheck=0
    gpgkey=

    [Cluster]
    name=Cluster
    baseurl=file:
    enabled=1
    gpgcheck=0
    gpgkey=

    [ClusterStorage]
    name=ClusterStorage
    baseurl=file:
    enabled=1
    gpgcheck=0
    gpgkey=

    [VT]
    name=VT
    baseurl=file:
    enabled=1
    gpgcheck=0
    gpgkey=

    [root@testrac1 ~]#
    [root@testrac1 /]# cd media/
    [root@testrac1 media]# mount -o loop RHEL_5.5_X86_64.iso /redhat

Install the RHCS cluster software: cman, rgmanager, and system-config-cluster.

- cman: the RHCS communication layer (Cluster Infrastructure)
- rgmanager: the RHCS resource manager (CRM)
- system-config-cluster: the RHCS GUI configuration tool

    [root@test1 ~]# alias ha=
    [root@test1 ~]# ha ssh test$i ;done
    The authenticity of host cantest1s password:
    Loaded plugins: katello, product-id, security, subscription-manager
    Updating certificate-based repositories.
    Unable to read consumer identity
    Setting up Install Process
    Resolving Dependencies
    --> Running transaction check
    ---> Package cman.x86_64 0:2.0.115-96.el5 set to be updated
    --> Processing Dependency: perl(XML::LibXML) for package: cman
    --> Processing Dependency: libSaCkpt.so.2(OPENAIS_CKPT_B.01.01)(64bit) for package: cman
    --> Processing Dependency: perl(Net::Telnet) for package: cman
    --> Processing Dependency: python-pycurl for package: cman
    --> Processing Dependency: pexpect for package: cman
    --> Processing Dependency: openais for package: cman
    --> Processing Dependency: python-suds for package: cman
    --> Processing Dependency: libcpg.so.2(OPENAIS_CPG_1.0)(64bit) for package: cman
    --> Processing Dependency: libSaCkpt.so.2()(64bit) for package: cman
    --> Processing Dependency: libcpg.so.2()(64bit) for package: cman
    ---> Package rgmanager.x86_64 0:2.0.52-28.el5 set to be updated
    ---> Package system-config-cluster.noarch 0:1.0.57-12 set to be updated
    --> Running transaction check
    ---> Package openais.x86_64 0:0.80.6-36.el5 set to be updated
    ---> Package perl-Net-Telnet.noarch 0:3.03-5 set to be updated
    ---> Package perl-XML-LibXML.x86_64 0:1.58-6 set to be updated
    --> Processing Dependency: perl-XML-NamespaceSupport for package: perl-XML-LibXML
    --> Processing Dependency: perl(XML::SAX::Exception) for package: perl-XML-LibXML
    --> Processing Dependency: perl(XML::LibXML::Common) for package: perl-XML-LibXML
    --> Processing Dependency: perl-XML-SAX for package: perl-XML-LibXML
    --> Processing Dependency: perl-XML-LibXML-Common for package: perl-XML-LibXML
    --> Processing Dependency: perl(XML::SAX::DocumentLocator) for package: perl-XML-LibXML
    --> Processing Dependency: perl(XML::SAX::Base) for package: perl-XML-LibXML
    --> Processing Dependency: perl(XML::NamespaceSupport) for package: perl-XML-LibXML
    ---> Package pexpect.noarch 0:2.3-3.el5 set to be updated
    ---> Package python-pycurl.x86_64 0:7.15.5.1-8.el5 set to be updated
    ---> Package python-suds.noarch 0:0.4.1-2.el5 set to be updated
    --> Running transaction check
    ---> Package perl-XML-LibXML-Common.x86_64 0:0.13-8.2.2 set to be updated
    ---> Package perl-XML-NamespaceSupport.noarch 0:1.09-1.2.1 set to be updated
    ---> Package perl-XML-SAX.noarch 0:0.14-11 set to be updated
    --> Finished Dependency Resolution

    Dependencies Resolved

    ================================================================================
     Package                     Arch     Version           Repository    Size
    ================================================================================
    Installing:
     cman                        x86_64   2.0.115-96.el5    Server       766 k
     rgmanager                   x86_64   2.0.52-28.el5     Cluster      346 k
     system-config-cluster       noarch   1.0.57-12         Cluster      325 k
    Installing for dependencies:
     openais                     x86_64   0.80.6-36.el5     Server       409 k
     perl-Net-Telnet             noarch   3.03-5            Server        56 k
     perl-XML-LibXML             x86_64   1.58-6            Server       229 k
     perl-XML-LibXML-Common      x86_64   0.13-8.2.2        Server        16 k
     perl-XML-NamespaceSupport   noarch   1.09-1.2.1        Server        15 k
     perl-XML-SAX                noarch   0.14-11           Server        77 k
     pexpect                     noarch   2.3-3.el5         Server       214 k
     python-pycurl               x86_64   7.15.5.1-8.el5    Server        73 k
     python-suds                 noarch   0.4.1-2.el5       Server       251 k

    Transaction Summary
    ================================================================================
    Install      12 Package(s)
    Upgrade       0 Package(s)

    Total download size: 2.7 M
    Downloading Packages:
    --------------------------------------------------------------------------------
    Total                                           188 MB/s | 2.7 MB     00:00
    Running rpm_check_debug
    Running Transaction Test
    Finished Transaction Test
    Transaction Test Succeeded
    Running Transaction
      Installing : perl-XML-LibXML-Common        1/12
      Installing : python-pycurl                 2/12
      Installing : openais                       3/12
      Installing : perl-XML-NamespaceSupport     4/12
      Installing : perl-XML-SAX                  5/12
      Installing : perl-XML-LibXML               6/12
      Installing : python-suds                   7/12
      Installing : pexpect                       8/12
      Installing : perl-Net-Telnet               9/12
      Installing : cman                         10/12
      Installing : system-config-cluster        11/12
      Installing : rgmanager                    12/12
    Installed products updated.

    Installed:
      cman.x86_64 0:2.0.115-96.el5
      rgmanager.x86_64 0:2.0.52-28.el5
      system-config-cluster.noarch 0:1.0.57-12

    Dependency Installed:
      openais.x86_64 0:0.80.6-36.el5
      perl-Net-Telnet.noarch 0:3.03-5
      perl-XML-LibXML.x86_64 0:1.58-6
      perl-XML-LibXML-Common.x86_64 0:0.13-8.2.2
      perl-XML-NamespaceSupport.noarch 0:1.09-1.2.1
      perl-XML-SAX.noarch 0:0.14-11
      pexpect.noarch 0:2.3-3.el5
      python-pycurl.x86_64 0:7.15.5.1-8.el5
      python-suds.noarch 0:0.4.1-2.el5

    Complete!
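The definition of the `ha` alias does not appear in the transcript, so the following is only a guess at its shape: a loop that runs one command on each node over SSH. The function name `on_all_nodes`, the `NODES` variable, and the `DRY_RUN` switch are my own assumptions for illustration, not something RHCS provides.

```shell
#!/bin/bash
# Hypothetical stand-in for the "ha" alias; the real definition is not shown above.
NODES="test1 test2 test3"   # node names from the lab environment

# Run one command on every node in turn.
# With DRY_RUN=1 the ssh command lines are only printed, nothing is executed.
on_all_nodes() {
    local node
    for node in $NODES; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh $node $*"
        else
            ssh "$node" "$@"
        fi
    done
}

# Example (dry run, package names taken from the install above):
#   DRY_RUN=1 on_all_nodes yum -y install cman rgmanager system-config-cluster
```

This relies on the SSH mutual trust set up earlier, otherwise every iteration prompts for a password.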

⒉ Configure RHCS

Run system-config-cluster to configure the cluster resources.

The GUI reports that /etc/cluster/cluster.conf does not exist; choose "Create New Configuration".

⑴ Enter a unique cluster name

A multicast address can be defined here (one is assigned automatically if it is left blank), and a qdisk can be defined as well. This lab uses three nodes, so however the cluster splits, one side still holds a valid majority of votes and no qdisk is needed.

⑵ Add the cluster nodes

Enter each node's hostname and the number of quorum votes it holds.

⑶ Add a fence device

The fence device is defined as manual here, that is, powering machines off and on by hand, jokingly known as the "meat key".

⑷ Save the configuration file

⑸ Inspect the configuration file

All of the steps above were performed on test1, so at this point cluster.conf exists only on test1. ccs synchronizes the file to the other nodes automatically only after the cluster services have started.

    [root@test1 ~]# cat /etc/cluster/cluster.conf
    <?xml version= ?>
    <cluster alias= config_version= name=>
        <fence_daemon post_fail_delay= post_join_delay=/>
        <clusternodes>
            <clusternode name= nodeid= votes=>
                <fence/>
            </clusternode>
            <clusternode name= nodeid= votes=>
                <fence/>
            </clusternode>
            <clusternode name= nodeid= votes=>
                <fence/>
            </clusternode>
        </clusternodes>
        <cman/>
        <fencedevices>
            <fencedevice agent= name=/>
        </fencedevices>
        <rm>
            <failoverdomains/>
            <resources/>
        </rm>
    </cluster>
    You have mail in /var/spool/mail/root

⑹ Start the services

Starting the service on test1 alone hangs at "Starting fencing...". The cluster configuration defines three nodes and the other two have not started their cluster services yet, so test1 keeps waiting. Start the cman service on all three nodes at the same time.

    [root@test1 ~]# service cman start
    Starting cluster:
    Loading modules... done
    Mounting configfs... done
    Starting ccsd... done
    Starting cman... done
    Starting daemons... done
    Starting fencing... done
    [ OK ]

    [root@test2 ~]# service cman start
    Starting cluster:
    Loading modules... done
    Mounting configfs... done
    Starting ccsd... done
    Starting cman... done
    Starting daemons... done
    Starting fencing... done
    [ OK ]

    [root@test3 ~]# service cman start
    Starting cluster:
    Loading modules... done
    Mounting configfs... done
    Starting ccsd... done
    Starting cman... done
    Starting daemons... done
    Starting fencing... done
    [ OK ]
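The vote arithmetic behind this can be sketched in a few lines. I am assuming the usual majority rule for a cluster without a qdisk or two-node mode, floor(expected votes / 2) + 1, which agrees with the `Expected votes: 3` and `Quorum: 2` that `cman_tool status` reports further down. The function names are made up for the illustration. My reading is that a node started alone holds 1 of 3 votes and is therefore not quorate, which fits the waiting seen when only test1 was started.

```shell
# Quorum under the plain majority rule (assumption: no qdisk, no two_node mode):
# more than half of the expected votes, i.e. floor(expected / 2) + 1.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# A partition is quorate when the votes it holds reach the quorum.
is_quorate() {   # usage: is_quorate <votes_present> <expected_votes>
    [ "$1" -ge "$(quorum "$2")" ]
}

quorum 3                                              # three nodes, one vote each
is_quorate 1 3 || echo "1 of 3 votes: not quorate"    # test1 started alone
is_quorate 2 3 && echo "2 of 3 votes: quorate"        # any two nodes up
```

The same rule shows why two nodes need a qdisk: with two expected votes the quorum is also 2, so losing either node would leave the survivor without quorum.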

Start rgmanager:

    [root@test1 ~]# ha ssh test$i ;done
    Starting Cluster Service Manager: [ OK ]
    Starting Cluster Service Manager: [ OK ]
    Starting Cluster Service Manager: [ OK ]
    [root@test1 ~]# ha ssh test$i ;done

    [root@test1 ~]# ha ssh test$i ;done
    Warning: Permanently added the RSA host key IP address to the list of known hosts.
    total 4
    -rw-r--r-- 1 root root 568 Jun 16 02:31 cluster.conf
    total 4
    -rw-r----- 1 root root 567 Jun 16 02:34 cluster.conf
    total 4
    -rw-r----- 1 root root 567 Jun 16 02:34 cluster.conf

Check how the cluster services came up. ccsd listens on local port 50006, and it uses UDP as well as TCP (TCP 50006 and 50008, UDP 50007).

    [root@test1 ~]# netstat -tnlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 127.0.0.1:2208 0.0.0.0:* LISTEN 3108/./hpiod
    tcp 0 0 192.168.1.6:21064 0.0.0.0:* LISTEN -
    tcp 0 0 0.0.0.0:943 0.0.0.0:* LISTEN 2881/rpc.statd
    tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2845/portmap
    tcp 0 0 127.0.0.1:50006 0.0.0.0:* LISTEN 18170/ccsd
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 3128/sshd
    tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 3139/cupsd
    tcp 0 0 0.0.0.0:50008 0.0.0.0:* LISTEN 18170/ccsd
    tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 3174/sendmail
    tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 3794/sshd
    tcp 0 0 127.0.0.1:2207 0.0.0.0:* LISTEN 3113/python

    [root@test1 ~]# netstat -tunlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 127.0.0.1:2208 0.0.0.0:* LISTEN 3108/./hpiod
    tcp 0 0 192.168.1.6:21064 0.0.0.0:* LISTEN -
    tcp 0 0 0.0.0.0:943 0.0.0.0:* LISTEN 2881/rpc.statd
    tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2845/portmap
    tcp 0 0 127.0.0.1:50006 0.0.0.0:* LISTEN 18170/ccsd
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 3128/sshd
    tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 3139/cupsd
    tcp 0 0 0.0.0.0:50008 0.0.0.0:* LISTEN 18170/ccsd
    tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 3174/sendmail
    tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 3794/sshd
    tcp 0 0 127.0.0.1:2207 0.0.0.0:* LISTEN 3113/python
    udp 0 0 192.168.1.6:5405 0.0.0.0:* 18208/aisexec
    udp 0 0 192.168.1.6:5149 0.0.0.0:* 18208/aisexec
    udp 0 0 239.192.110.162:5405 0.0.0.0:* 18208/aisexec
    udp 0 0 0.0.0.0:937 0.0.0.0:* 2881/rpc.statd
    udp 0 0 0.0.0.0:940 0.0.0.0:* 2881/rpc.statd
    udp 0 0 0.0.0.0:46399 0.0.0.0:* 3293/avahi-daemon
    udp 0 0 0.0.0.0:50007 0.0.0.0:* 18170/ccsd
    udp 0 0 0.0.0.0:5353 0.0.0.0:* 3293/avahi-daemon
    udp 0 0 0.0.0.0:111 0.0.0.0:* 2845/portmap
    udp 0 0 0.0.0.0:631 0.0.0.0:* 3139/cups

View the cluster status information:

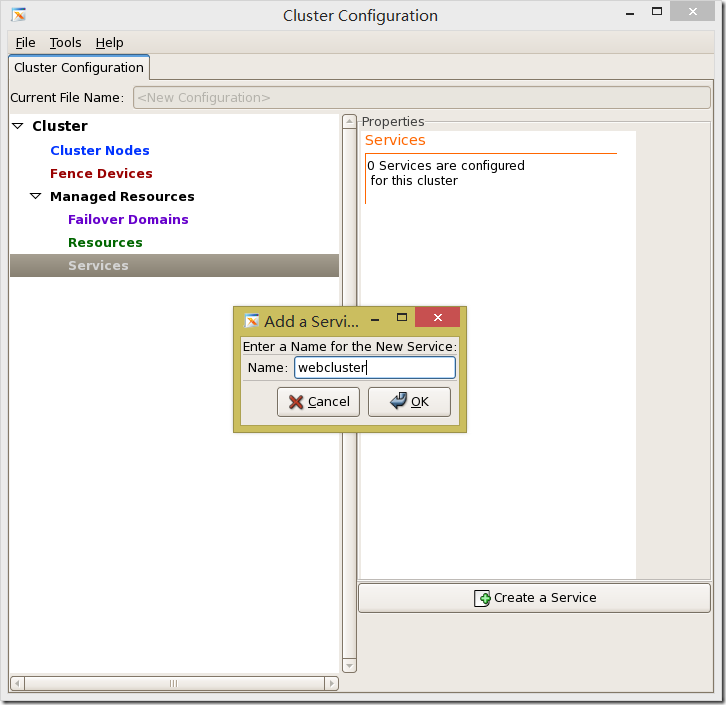

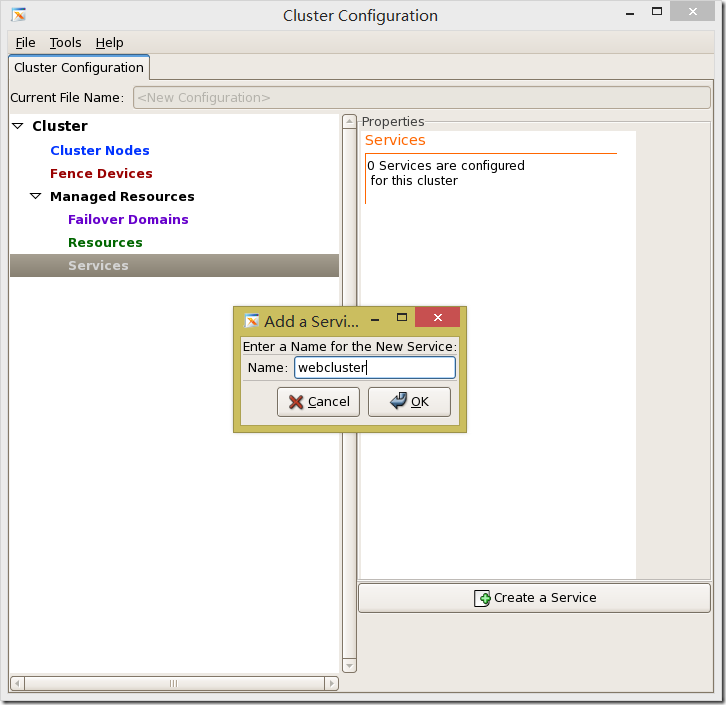

    [root@test1 ~]# cman_tool status
    Version: 6.2.0
    Config Version: 2
    Cluster Name: tcluster
    Cluster Id: 28212
    Cluster Member: Yes
    Cluster Generation: 12
    Membership state: Cluster-Member
    Nodes: 3
    Expected votes: 3
    Total votes: 3
    Node votes: 1
    Quorum: 2
    Active subsystems: 8
    Flags: Dirty
    Ports Bound: 0 177
    Node name: test1
    Node ID: 1
    Multicast addresses: 239.192.110.162
    Node addresses: 192.168.1.6

    [root@test1 ~]# clustat
    Cluster Status for tcluster @ Mon Jun 16 02:40:08 2014
    Member Status: Quorate

    Member Name ID Status
    ------ ---- ---- ------
    test1 1 Online, Local
    test2 2 Online
    test3 3 Online

⒊ Add resources

⑴ Define the Service name

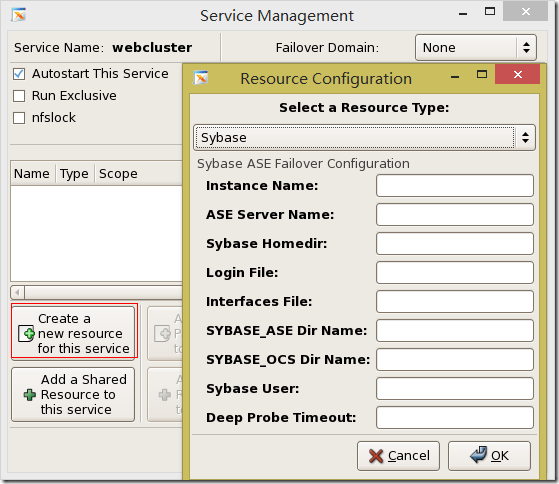

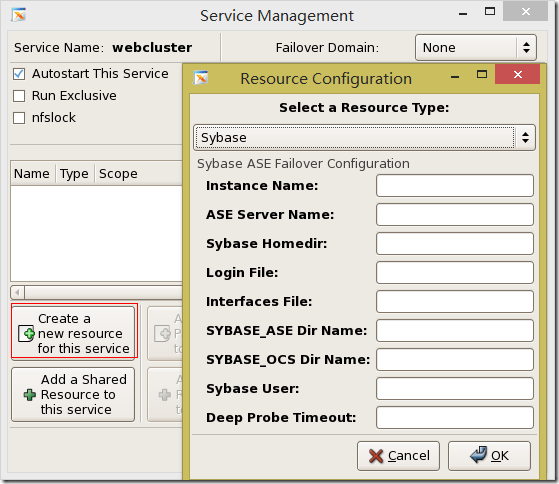

⑵ Choose to create a resource for this service

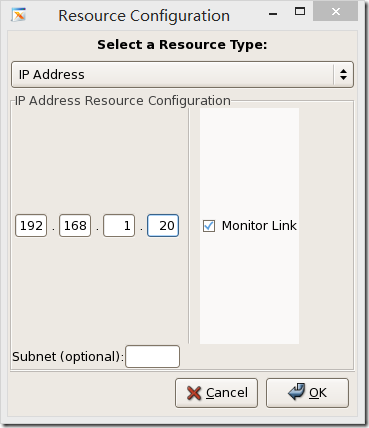

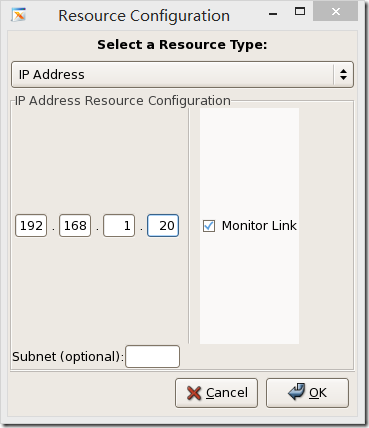

⑶ Add the VIP

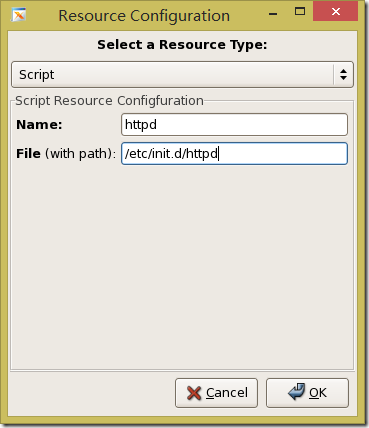

⑷ Add the web service start script

In an RHCS cluster, every LSB-type resource agent (RA) is configured as a Script resource.

⑸ Add the shared storage

⑹ Service resource preview

Note the option next to it, where a failover domain can be set.

⑺ Synchronize the resource configuration to the other nodes

Check the cluster and resource status:

    [root@test1 ~]# clustat
    Cluster Status for tcluster @ Mon Jun 16 04:26:11 2014
    Member Status: Quorate

    Member Name ID Status
    ------ ---- ---- ------
    test1 1 Online, Local, rgmanager
    test2 2 Online, rgmanager
    test3 3 Online, rgmanager

    Service Name Owner (Last) State
    ------- ---- ----- ------ -----
    service:webcluster test1 started
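When this check needs to go into a script, the owner column can be pulled out of the `clustat` text. A small sketch, assuming the service line keeps the shape shown above (`service:<name> <owner> <state>`); the helper name `service_owner` is made up for the illustration.

```shell
# Print the current owner of a service from clustat-style text on stdin.
service_owner() {   # usage: clustat | service_owner webcluster
    awk -v svc="service:$1" '$1 == svc { print $2 }'
}

# Sample line copied from the clustat output, so the sketch runs without a cluster.
sample='service:webcluster test1 started'
echo "$sample" | service_owner webcluster
```

On a live node the input would come from `clustat` itself instead of the sample string.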

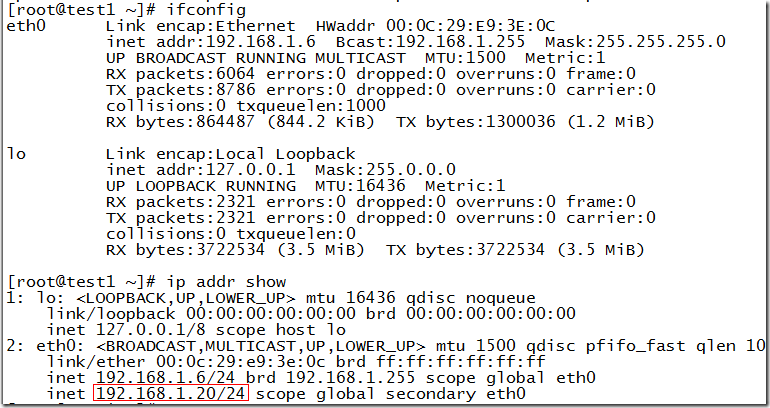

The output shows the resources running on host test1.

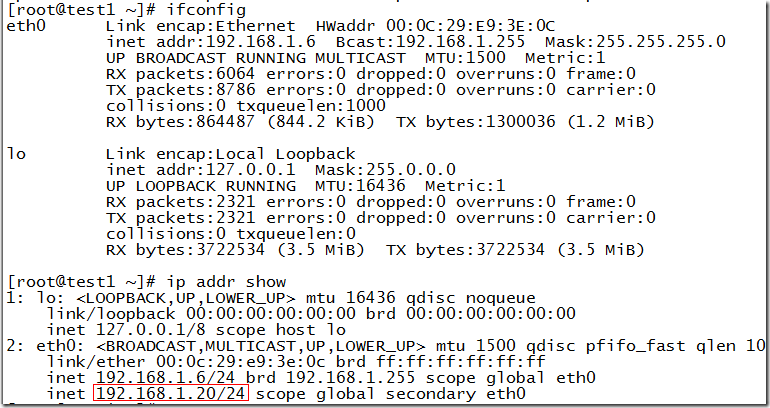

Log in to test1 and check that the resources are actually there.

Virtual IP:

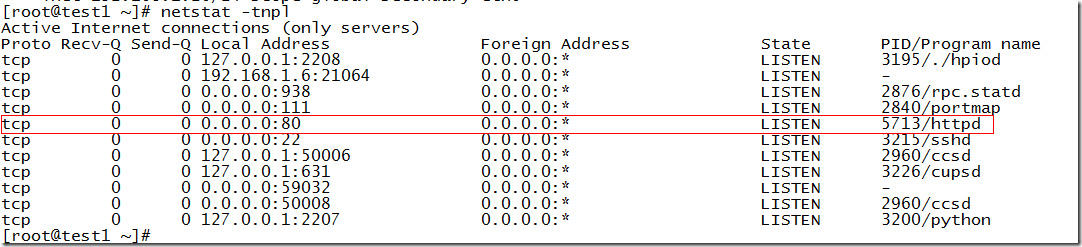

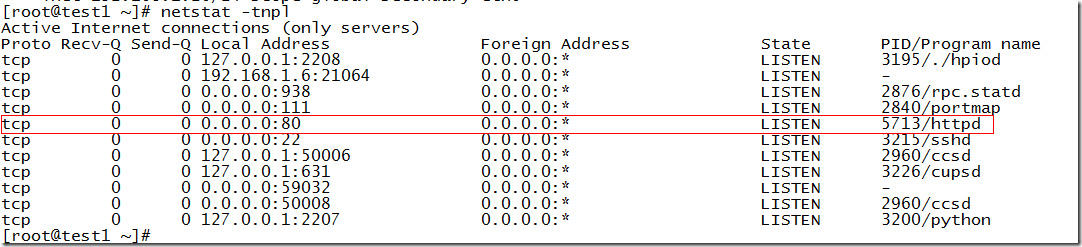

httpd, listening on port 80:

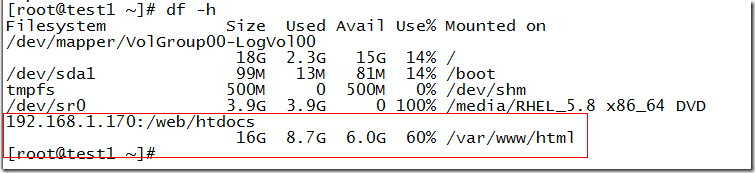

Shared storage:

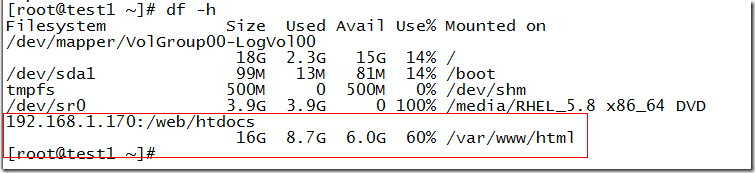

Test access to the web service from a client:

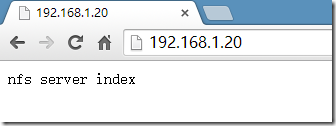

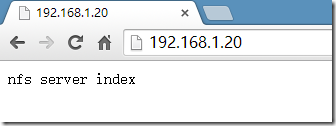

Relocate the resources to test2 while the client keeps accessing the site:

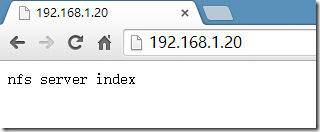

    [root@test1 ~]# clusvcadm -r webcluster -m test2
    Trying to relocate service:webcluster to test2...Success
    service:webcluster is now running on test2
    [root@test1 ~]# clustat
    Cluster Status for tcluster @ Mon Jun 16 04:34:56 2014
    Member Status: Quorate

    Member Name ID Status
    ------ ---- ---- ------
    test1 1 Online, Local, rgmanager
    test2 2 Online, rgmanager
    test3 3 Online, rgmanager

    Service Name Owner (Last) State
    ------- ---- ----- ------ -----
    service:webcluster test2 started
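The "client keeps accessing" part can also be checked from a shell instead of a browser. Below is a minimal sketch that polls a URL with `curl` until it answers; the function name `wait_for_web` and the `VIP` placeholder are assumptions of mine, since the actual virtual IP only appears in the screenshots.

```shell
# Poll a URL until it answers or the attempts run out.
# Returns 0 as soon as one request succeeds, 1 otherwise.
wait_for_web() {   # usage: wait_for_web <url> [attempts] [delay_seconds]
    local url=$1
    local attempts=${2:-10}
    local delay=${3:-1}
    local i=0
    while [ "$i" -lt "$attempts" ]; do
        # -f makes curl fail on HTTP errors, -s keeps it quiet
        if curl -sf -o /dev/null --max-time 2 "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example (VIP is a placeholder for the cluster's virtual IP):
#   clusvcadm -r webcluster -m test2 && wait_for_web "http://$VIP/" 30 1
```

A short gap while the service stops on one node and starts on the other is to be expected, which is why the sketch retries rather than failing on the first miss.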

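The web HA cluster is now complete.

IV. RHCS command-line configuration tools

RHCS provides not only a GUI for configuring the cluster but also command-line tools. Now clear out all of the configuration and build the cluster again from the command line.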

    [root@test1 ~]# ha ssh test$i ;done
    rgmanaget: unrecognized service
    rgmanaget: unrecognized service
    rgmanaget: unrecognized service
    [root@test1 ~]# ha ssh test$i ;done
    Shutting down Cluster Service Manager...
    Services are stopped.
    Cluster Service Manager stopped.
    Shutting down Cluster Service Manager...
    Waiting for services to stop: [ OK ]
    Cluster Service Manager stopped.
    Shutting down Cluster Service Manager...
    Waiting for services to stop: [ OK ]
    Cluster Service Manager stopped.
    [root@test1 ~]# ha ssh test$i ;done
    Stopping cluster:
    Stopping fencing... done
    Stopping cman... done
    Stopping ccsd... done
    Unmounting configfs... done
    [ OK ]
    Stopping cluster:
    Stopping fencing... done
    Stopping cman... done
    Stopping ccsd... done
    Unmounting configfs... done
    [ OK ]
    Stopping cluster:
    Stopping fencing... done
    Stopping cman... done
    Stopping ccsd... done
    Unmounting configfs... done
    [ OK ]
    [root@test1 ~]# ha ssh test$i ;done

⒈ First, set the cluster name

    [root@test1 ~]# ccs_tool create tcluster
    [root@test1 ~]# cat /etc/cluster/cluster.conf
    <?xml version=?>
    <cluster name= config_version=>
        <clusternodes/>
        <fencedevices/>
        <rm>
            <failoverdomains/>
            <resources/>
        </rm>
    </cluster>

⒉ Configure the fence device

    [root@test1 ~]# fence
    fence_ack_manual   fence_cisco_mds  fence_ifmib    fence_mcdata  fence_sanbox2    fence_vmware
    fence_apc          fence_cisco_ucs  fence_ilo      fence_node    fence_scsi       fence_vmware_helper
    fence_apc_snmp     fenced           fence_ilo_mp   fence_rhevm   fence_scsi_test  fence_vmware_soap
    fence_bladecenter  fence_drac       fence_ipmilan  fence_rps10   fence_tool       fence_wti
    fence_brocade      fence_drac5      fence_lpar     fence_rsa     fence_virsh      fence_xvm
    fence_bullpap      fence_egenera    fence_manual   fence_rsb     fence_vixel      fence_xvmd
    [root@test1 ~]# ccs_tool addfence
    Usage: ccs_tool addfence [options] <name> <agent> [param=]
    -c --configfile Name of configuration file (/etc/cluster/cluster.conf)
    -o --outputfile Name of output file (defaults to same --configfile)
    -C --no_ccs Don't tell CCSD about change: run file updated place)
    -F --force_ccs Force even input & output files differ
    -h --help Display help text
    [root@test1 ~]# ccs_tool addfence metaware fence_manual
    running ccs_tool update...
    [root@test1 ~]# ccs_tool lsfence
    Name Agent
    metaware fence_manual

⒊ Add the node information

    [root@test1 ~]# ccs_tool addnode
    Usage: ccs_tool addnode [options] <nodename> [<fencearg>=<>]...
    -n --nodeid Nodeid (required)
    -v --votes Number of votes node ( 1)
    -a --altname Alternative name/ multihomed hosts
    -f --fence_type Type of fencing to use
    -c --configfile Name of configuration file (/etc/cluster/cluster.conf)
    -o --outputfile Name of output file (defaults to same --configfile)
    -C --no_ccs Don't tell CCSD about change: run file updated place)
    -F --force_ccs Force even input & output files differ
    -h --help Display help text
    Examples:
    Add a node to configuration file:
    ccs_tool addnode -n 1 -f manual ipaddr=newnode
    Add a node and dump config file to stdout rather than save it
    ccs_tool addnode -n 2 -f apc -o- newnode.temp.net port=1
    [root@test1 ~]# ccs_tool addnode -n 1 -v 1 -f metaware test1
    running ccs_tool update...
    Segmentation fault
    [root@test1 ~]# ccs_tool addnode -n 2 -v 1 -f metaware test2
    running ccs_tool update...
    Segmentation fault
    [root@test1 ~]# ccs_tool addnode -n 3 -v 1 -f metaware test3
    running ccs_tool update...
    Segmentation fault
    [root@test1 ~]# cat /etc/cluster/cluster.conf
    <?xml version=?>
    <cluster name= config_version=>
        <clusternodes>
            <clusternode name= votes= nodeid=>
                <fence>
                    <method name=>
                        <device name=/>
                    </method>
                </fence>
            </clusternode>
            <clusternode name= votes= nodeid=>
                <fence>
                    <method name=>
                        <device name=/>
                    </method>
                </fence>
            </clusternode>
            <clusternode name= votes= nodeid=>
                <fence>
                    <method name=>
                        <device name=/>
                    </method>
                </fence>
            </clusternode>
        </clusternodes>
        <fencedevices>
            <fencedevice name= agent=/>
        </fencedevices>
        <rm>
            <failoverdomains/>
            <resources/>
        </rm>
    </cluster>
    [root@test1 ~]# ccs_tool lsnode
    Cluster name: tcluster, config_version: 5
    Nodename Votes Nodeid Fencetype
    test1 1 1 metaware
    test2 1 2 metaware
    test3 1 3 metaware

⒋ Start the cluster

    [root@test3 ~]# service cman start
    Starting cluster:
    Loading modules... done
    Mounting configfs... done
    Starting ccsd... done
    Starting cman... done
    Starting daemons... done
    Starting fencing... done
    [ OK ]

⒌ Start rgmanager

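    [root@test1 ~]# service rgmanager start
    Starting Cluster Service Manager: [ OK ]

⒍ Once the cluster is up, resources can be configured

/etc/cluster/cluster.conf is an XML file. RHCS does not ship a command-line tool for adding resources to it, but because it is plain XML it can be edited directly with vim to add them. RHCS also supports another graphical configuration method, luci/ricci, which I will look into later.

This article comes from the "小风" blog; please keep this attribution: http://renfeng.blog.51cto.com/7280443/1426725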
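One detail worth sketching for the hand-editing route: as far as I know, the `config_version` attribute has to be raised after a manual edit before the other nodes accept the new file. The helper below does only that bump, on a scratch copy; the name `bump_config_version` is hypothetical, and pushing the result out (for example with the `ccs_tool update` step that appears in the transcripts above) is left as a manual action.

```shell
# Increase the config_version attribute of a cluster.conf-style file by one.
bump_config_version() {   # usage: bump_config_version <file>
    local file=$1
    local cur
    cur=$(sed -n 's/.*config_version="\([0-9][0-9]*\)".*/\1/p' "$file" | head -n 1)
    [ -n "$cur" ] || return 1    # no numeric config_version found
    sed "s/config_version=\"$cur\"/config_version=\"$((cur + 1))\"/" "$file" > "$file.new" &&
        mv "$file.new" "$file"
}

# Demo on a scratch file, never on the live /etc/cluster/cluster.conf.
tmp=$(mktemp)
echo '<cluster name="tcluster" config_version="5">' > "$tmp"
bump_config_version "$tmp" && cat "$tmp"
rm -f "$tmp"
```

Working on a copy and only then replacing the real file keeps a broken edit from reaching the running cluster.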
