Operating Systems: Process Management

2014-06-08 17:01

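Classification of systems:

1 Batch processing systems

A job may occupy the CPU for a long time. Jobs are grouped into batches according to their characteristics and submitted to the computer batch by batch; the computer runs each batch automatically and then outputs the results, which reduces the time lost to setting up and tearing down individual jobs.

Single-programmed batch systems: only one job is held in memory, namely the one currently running, and jobs execute in first-in, first-out order.

Advantage: less manual intervention when switching between jobs, and therefore less CPU idle time.

Disadvantage: a job enters memory alone and monopolizes the system's resources; the next job cannot enter memory until it finishes. While the job performs I/O the CPU can only wait, so CPU utilization is low.

Multiprogrammed batch systems (multiprogramming): jobs are given the CPU according to a job scheduling algorithm; while one job waits for I/O, the CPU is assigned to another job.

Advantage: jobs take turns on the CPU, which raises the utilization of the CPU and other system resources and improves the efficiency of the system as a whole.

Disadvantage: the turnaround time of individual jobs grows; users cannot intervene directly, so there is no interactivity, which makes program development and debugging inconvenient.

2 Time-sharing systems

A job may use the CPU only for one time slice (typically about 100 ms) at a time.

Variants: single-programmed time-sharing systems, multiprogrammed time-sharing systems, and time-sharing systems with foreground and background jobs.

Common general-purpose operating systems combine time sharing with batch processing. The rule is that time-sharing work takes priority and batch work comes second. The "foreground" serves jobs that need frequent interaction, such as requests from terminals; the "background" handles jobs without tight timing requirements.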

Definition of a process: a program in execution

Process – a program in execution; process execution must progress in sequential fashion

Components of a process: program counter (the current instruction), stack, data section

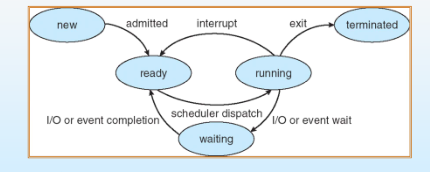

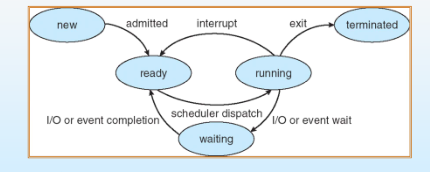

Process state transitions:

Process Control Block (PCB)

1 Process state

2 Program counter

3 CPU registers

4 CPU scheduling information

5 Memory-management information

6 Accounting information

7 I/O status information
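As a rough illustration of how the seven PCB fields might be held in one record, here is a minimal Python sketch. The field names, the `pid` field, the five state names (the usual new/ready/running/waiting/terminated model), and the `context_switch` helper are assumptions made for this example, not the layout of any real kernel.

```python
from dataclasses import dataclass, field
from enum import Enum


class ProcessState(Enum):
    # Assumed five-state model; the names are illustrative.
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


@dataclass
class PCB:
    pid: int                                         # hypothetical identifier
    state: ProcessState = ProcessState.NEW           # 1 process state
    program_counter: int = 0                         # 2 next instruction address
    registers: dict = field(default_factory=dict)    # 3 saved CPU registers
    priority: int = 0                                # 4 CPU scheduling information
    memory_limits: tuple = (0, 0)                    # 5 memory management (base, limit)
    cpu_time_used: float = 0.0                       # 6 accounting information
    open_files: list = field(default_factory=list)   # 7 I/O status information


def context_switch(old: PCB, new: PCB, cpu_pc: int, cpu_regs: dict) -> tuple:
    """Save the CPU state into `old` and return the state to load for `new`."""
    old.program_counter = cpu_pc
    old.registers = dict(cpu_regs)
    old.state = ProcessState.READY
    new.state = ProcessState.RUNNING
    return new.program_counter, dict(new.registers)
```

The point of the sketch is that a context switch only needs the PCB: everything required to resume a process later is stored there.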

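Process scheduling:

Processes are managed with queues

Job queue – set of all processes in the system (a process joins the job queue once it is created)

Ready queue – set of all processes residing in main memory, ready and waiting to execute (processes here are waiting to be given the CPU)

Device queues – set of processes waiting for an I/O device (a running process that requests I/O moves to the queue of that device)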

Types of schedulers:

Long-term scheduler (or job scheduler) – selects which processes should be brought into the ready queue (allocates resources)

The long-term scheduler controls the degree of multiprogramming

Short-term scheduler (or CPU scheduler) – selects which process should be executed next and allocates the CPU

Medium-term scheduler – removes processes from memory, which reduces the contention for the CPU. A removed process can later be brought back into memory and resume execution where it left off.

Processes can be described as either (process types):

1 I/O-bound process – spends more time doing I/O than computations, many short CPU bursts

2 CPU-bound process – spends more time doing computations; few very long CPU bursts

Process synchronization and communication

1 Producer-Consumer Problem

Source of the problem: the buffer has a limited size, and the producer and consumer run at different rates

1 unbounded-buffer places no practical limit on the size of the buffer

2 bounded-buffer assumes that there is a fixed buffer size

Solution:

Mechanism for processes to communicate and to synchronize their actions

Message system – processes communicate with each other without resorting to shared variables

Approach 1: Direct Communication

If P and Q wish to communicate, they need to:

1 establish a communication link between them

2 exchange messages via send/receive

send(P, message) – send a message to process P

receive(Q, message) – receive a message from process Q

Approach 2: Indirect Communication (messages are sent to an intermediate mailbox)

Messages are directed and received from mailboxes (also referred to as ports)

Each mailbox has a unique id

Processes can communicate only if they share a mailbox

send(A, message) – send a message to mailbox A

receive(A, message) – receive a message from mailbox A

Synchronous vs. asynchronous communication:

Message passing may be either blocking or non-blocking

1 Blocking is considered synchronous

Blocking send has the sender block until the message is received

Blocking receive has the receiver block until a message is available

2 Non-blocking is considered asynchronous

Non-blocking send has the sender send the message and continue

Non-blocking receive has the receiver receive a valid message or null

Buffer capacity:

1. Zero capacity – 0 messages

Sender must wait for receiver (rendezvous)

2. Bounded capacity – finite length of n messages

Sender must wait if link full

3. Unbounded capacity – infinite length

Sender never waits
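The bounded-capacity case and the non-blocking send/receive variants can be tried out with Python's standard `queue.Queue`. This is only a sketch: the capacity of 2, the message names, and the `try_receive` helper are made up for the example.

```python
import queue

mailbox = queue.Queue(maxsize=2)   # bounded capacity: n = 2 messages

# Non-blocking send: succeeds while the link has room...
mailbox.put_nowait("m1")
mailbox.put_nowait("m2")

# ...and reports a full link instead of making the sender wait.
try:
    mailbox.put_nowait("m3")
    link_full = False
except queue.Full:
    link_full = True


def try_receive(box):
    """Non-blocking receive: a valid message, or None if nothing is available."""
    try:
        return box.get_nowait()
    except queue.Empty:
        return None


received = [try_receive(mailbox) for _ in range(3)]
print(link_full, received)   # True ['m1', 'm2', None]
```

The blocking counterparts are `put()` and `get()`, which suspend the caller until space or a message is available, and `queue.Queue(maxsize=0)` behaves like the unbounded case.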

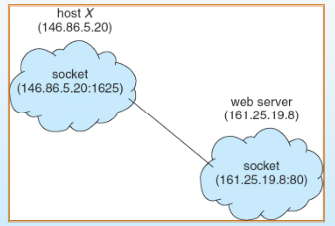

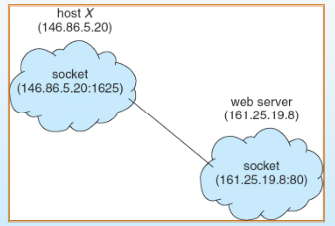

Client-server communication mechanisms:

Sockets

Remote Procedure Calls

Remote Method Invocation (Java)

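Differences between processes and threads

Process: Ownership of memory, files, other resources

A process is an executing program entity that owns its own memory, files, and other system resources.

Thread: Unit of execution we use to dispatch

A thread is an entity within a process: the basic unit that can run independently, smaller than a process. It does not own system resources of its own.

Advantages of multithreading:

1 Responsiveness

2 Resource Sharing

3 Economy

4 Utilization of MP Architectures (multithreading is well suited to multiprocessor architectures)

User-level threads and kernel-level threads

User threads - Thread management done by user-level threads library.

Kernel threads - Threads directly supported by the kernel

Mapping between user-level threads and kernel-level threads: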

1 Many-to-One

2 One-to-One

3 Many-to-Many

Threading issues:

Semantics of fork() and exec() system calls

Thread cancellation

Signal handling

Thread pools

Thread specific data

Scheduler activations

Purpose of thread pools:

Create a number of threads in a pool where they await work

Advantages:

1 Usually slightly faster to service a request with an existing thread than create a new thread

2 Allows the number of threads in the application(s) to be bound to the size of the pool
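Both advantages can be observed with Python's standard `concurrent.futures.ThreadPoolExecutor`. In this sketch the `handle_request` function, the pool size of 4, and the 20 requests are placeholder choices, not part of the original notes.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


def handle_request(n: int) -> int:
    """Hypothetical request handler: squares its argument."""
    return n * n


worker_names = set()


def traced(n: int) -> int:
    # Record which pool thread served the request.
    worker_names.add(threading.current_thread().name)
    return handle_request(n)


# At most 4 worker threads exist, no matter how many requests are submitted.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(traced, range(20)))

print(results[:5])               # [0, 1, 4, 9, 16]
print(len(worker_names) <= 4)    # True
```

Twenty requests are served by no more than four threads, which are reused instead of being created per request.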

CPU Scheduling

CPU scheduling decisions may take place when a process:

1. Switches from running to waiting state

2. Switches from running to ready state

3. Switches from waiting to ready

4. Terminates

Dispatcher: the dispatcher module gives control of the CPU to the process selected by the short-term scheduler; this involves:

1 switching context

2 switching to user mode

3 jumping to the proper location in the user program to restart that program

Scheduling criteria:

1 CPU utilization – keep the CPU as busy as possible

2 Throughput – # of processes that complete their execution per time unit

3 Turnaround time – amount of time to execute a particular process

4 Waiting time – amount of time a process has been waiting in the ready queue

5 Response time – amount of time it takes from when a request was submitted until the first response is produced, not output (for time-sharing environment)

Optimization goals:

1 Max CPU utilization

2 Max throughput

3 Min turnaround time

4 Min waiting time

5 Min response time

Scheduling algorithms:

1 First-Come, First-Served (FCFS) Scheduling

2 Shortest-Job-First (SJF) Scheduling

Nonpreemptive SJF:

Nonpreemptive – once the CPU is given to a process, it cannot be preempted until it completes its CPU burst

Preemptive SJF:

Preemptive – if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF)
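To see why the order of jobs matters, the following sketch compares the average waiting time under FCFS and nonpreemptive SJF. It assumes that all jobs arrive at time 0 and that burst lengths are known in advance; the burst values 24, 3, 3 are illustrative.

```python
def average_waiting_time(bursts):
    """Average waiting time when jobs run back to back in the given order."""
    waited, clock = 0, 0
    for burst in bursts:
        waited += clock      # this job waited for everything scheduled before it
        clock += burst
    return waited / len(bursts)


bursts = [24, 3, 3]                           # assumed CPU bursts, all arriving at t = 0
fcfs = average_waiting_time(bursts)           # run in arrival order
sjf = average_waiting_time(sorted(bursts))    # run the shortest job first
print(fcfs, sjf)                              # 17.0 3.0
```

Under FCFS the two short jobs wait behind the long one (waits 0, 24, 27); under SJF the long job goes last (waits 0, 3, 6), so the average drops from 17 to 3 time units.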

3 Priority Scheduling

The CPU is allocated to the process with the highest priority (smallest integer = highest priority)

1 Preemptive

2 Nonpreemptive

4 Round Robin (RR)

Performance:

q large: RR behaves like FIFO

q small: q must still be large with respect to the context-switch time, otherwise overhead is too high
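The effect of the quantum q can be checked with a small simulation. This is a sketch under simplifying assumptions: all jobs arrive at time 0, a dispatch itself costs no time, and the function only counts how often the CPU is handed to a job.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Return (finish_times, dispatches) for jobs that all arrive at t = 0."""
    remaining = dict(enumerate(bursts))
    ready = deque(remaining)             # job ids in arrival order
    clock, dispatches, finish = 0, 0, {}
    while ready:
        pid = ready.popleft()
        run = min(quantum, remaining[pid])
        clock += run
        remaining[pid] -= run
        dispatches += 1
        if remaining[pid] == 0:
            finish[pid] = clock          # job completed
        else:
            ready.append(pid)            # quantum expired: back of the queue
    return finish, dispatches


print(round_robin([24, 3, 3], 4))    # ({1: 7, 2: 10, 0: 30}, 8)
print(round_robin([24, 3, 3], 30))   # ({0: 24, 1: 27, 2: 30}, 3)
print(round_robin([24, 3, 3], 1)[1]) # 30
```

With q = 30 the schedule is identical to FCFS and needs only 3 dispatches; with q = 1 the same work needs 30 dispatches, each of which would cost a context switch on a real system.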

5 Multilevel Queue

Each queue has its own scheduling algorithm

foreground – RR

background – FCFS

Scheduling must be done between the queues

Fixed priority scheduling; (i.e., serve all from foreground then from background). Possibility of starvation.

Time slice – each queue gets a certain amount of CPU time which it can schedule amongst its processes; e.g., 80% to foreground in RR, 20% to background in FCFS

6 Multilevel Feedback Queue

Three queues:

1 Q0 – RR with time quantum 8 milliseconds

2 Q1 – RR with time quantum 16 milliseconds

3 Q2 – FCFS

Scheduling

1 A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, the job is moved to queue Q1.

2 At Q1 the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.

Real-Time Scheduling

1 Hard real-time systems – required to complete a critical task within a guaranteed amount of time

2 Soft real-time computing – requires that critical processes receive priority over less fortunate ones

Thread Scheduling

1 Local Scheduling – How the threads library decides which thread to put onto an available LWP

2 Global Scheduling – How the kernel decides which kernel thread to run next

Choosing a scheduling algorithm means weighing CPU utilization, user experience (waiting time and response time), context-switch cost, and the risk of starvation.

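Process synchronization:

Concurrent access to shared data may result in data inconsistency

Solution: process synchronization

1 Critical sections

1 Mutual exclusion must be enforced: among all processes that have critical sections for the same resource or shared object, only one process at a time is allowed into its critical section;

2 A process that is executing outside its critical section must not interfere with other processes;

3 A process that needs to enter its critical section must not be delayed indefinitely;

4 When no process is in a critical section, any process that requests entry must be allowed in without delay;

5 No assumptions are made about the relative speeds of the processes or the number of processors;

6 A process remains inside its critical section for a finite time only;

Summary:

enter when the critical section is free;

wait when it is occupied;

admit only one process at a time;

the algorithm must be feasible;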

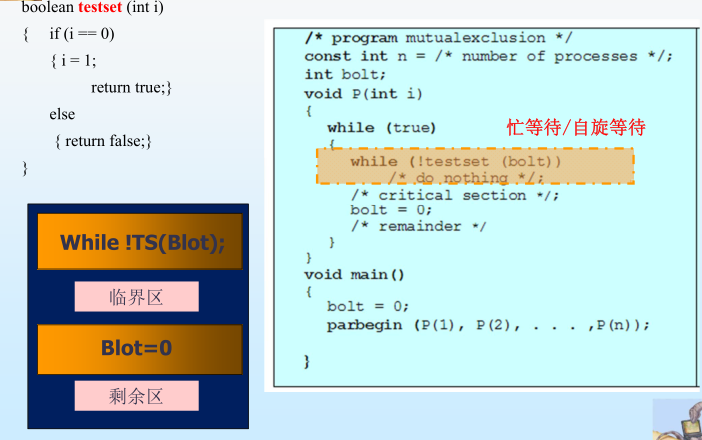

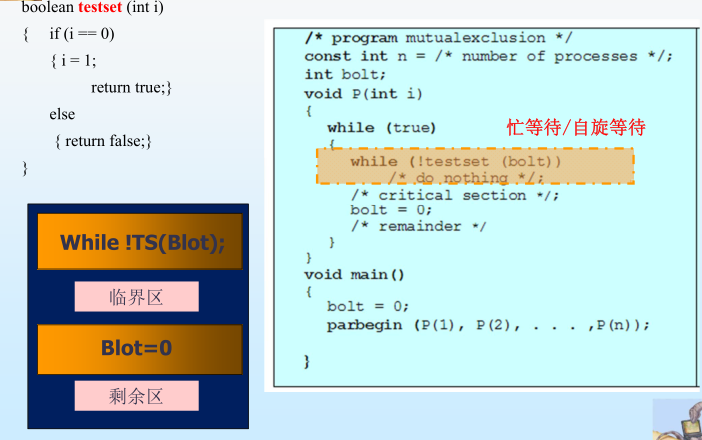

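Example:

If the critical section is free, enter it; otherwise poll its state in a loop until it becomes free

Problems with this approach:

1) A process that cannot enter its critical section busy-waits, which wastes CPU time.

2) Leaving the entry test to the competing processes themselves weakens system reliability and adds to the programmer's burden.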

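2 Semaphores

A semaphore consists of a variable that records the state of the available resources and a waiting queue

The atomic operations on a semaphore are P (wait) and V (signal)

Implementation of wait:

    wait (S) {
        S.value--;
        if (S.value < 0) {
            add this process to the waiting queue
            block();
        }
    }

If a resource is available, the process keeps running; otherwise the process is blocked and placed on the waiting queue

Implementation of signal:

    signal (S) {
        S.value++;
        if (S.value <= 0) {
            remove a process P from the waiting queue
            wakeup(P);
        }
    }

If any process is waiting (the value is still <= 0 after the increment), one waiting process is woken up; otherwise no process changes state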

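If the semaphore's s.count is negative, its absolute value equals the number of processes waiting in the queue s.queue, that is, exactly the number of processes that were blocked by a semWait operation on s and placed in s.queue.

Semaphore s.count:

>= 0: the number of instances of the critical resource that are available;

< 0: the absolute value is the number of processes suspended in s.queue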
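The relationship between the count and the queue can be traced with a single-threaded model of the wait and signal operations. The class below is a teaching sketch with assumed names (`SimSemaphore`, process ids "A" to "D"); it only records which process would block or wake up and performs no real blocking.

```python
from collections import deque


class SimSemaphore:
    """Single-threaded model of a counting semaphore (no real blocking)."""

    def __init__(self, count):
        self.count = count
        self.queue = deque()

    def wait(self, pid):
        """P operation: True if `pid` may proceed, False if it is blocked."""
        self.count -= 1
        if self.count < 0:
            self.queue.append(pid)       # blocked: joins the waiting queue
            return False
        return True

    def signal(self):
        """V operation: the pid that is woken up, or None."""
        self.count += 1
        if self.count <= 0:
            return self.queue.popleft()  # someone was waiting: wake the oldest
        return None


s = SimSemaphore(2)                        # two instances of the resource
outcomes = [s.wait(p) for p in "ABCD"]     # A and B proceed; C and D block
print(outcomes, s.count, list(s.queue))    # [True, True, False, False] -2 ['C', 'D']
print(s.signal(), s.count)                 # C -1
```

After four waits on a semaphore initialised to 2, the count is -2 and exactly two processes are queued, which matches the rule stated above.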

Deadlock – two or more processes are waiting indefinitely for an event that can be caused by only one of the waiting processes

Starvation – indefinite blocking. A process may never be removed from the semaphore queue in which it is suspended.

Classic synchronization problems: worked uses of semaphores

1 Bounded-buffer problem (producer-consumer problem)

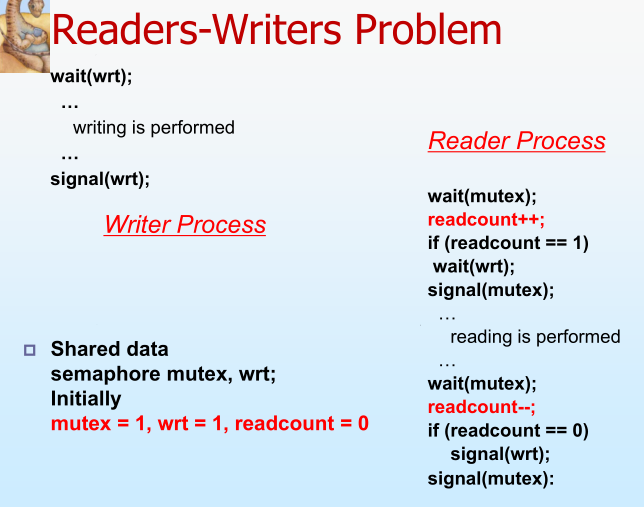

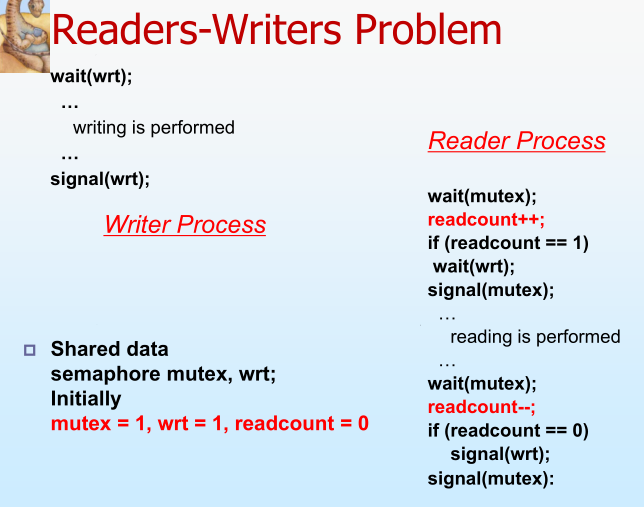
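A common way to write the bounded-buffer solution uses three semaphores: `mutex` for the buffer itself, `empty` for free slots, and `full` for filled slots. The sketch below uses Python's `threading.Semaphore`; the capacity of 3, the ten integer items, and the single producer and consumer are assumptions for the example and may differ from the figures above.

```python
import threading
from collections import deque

N = 3                                   # buffer capacity (assumed for the example)
buffer = deque()
mutex = threading.Semaphore(1)          # mutual exclusion on the buffer
empty = threading.Semaphore(N)          # counts free slots
full = threading.Semaphore(0)           # counts filled slots
consumed = []


def producer(items):
    for item in items:
        empty.acquire()                 # wait(empty): block if the buffer is full
        mutex.acquire()                 # wait(mutex)
        buffer.append(item)
        mutex.release()                 # signal(mutex)
        full.release()                  # signal(full): one more item available


def consumer(count):
    for _ in range(count):
        full.acquire()                  # wait(full): block if the buffer is empty
        mutex.acquire()
        item = buffer.popleft()
        mutex.release()
        empty.release()                 # signal(empty): one more free slot
        consumed.append(item)


p = threading.Thread(target=producer, args=(range(10),))
c = threading.Thread(target=consumer, args=(10,))
p.start(); c.start()
p.join(); c.join()
print(consumed)                         # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The order of the two waits matters: taking `mutex` before `empty` (or before `full`) could leave a process holding the buffer lock while it is blocked, which would deadlock the pair.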

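2 Readers-Writers Problem

Problem: when several concurrent processes access a shared file, the system must distinguish reads from writes.

1. Several processes may read the file at the same time (read-read is allowed);

2. While a process is reading the file no other process may write it, and while a process is writing the file no other process may read it (read-write mutual exclusion);

3. Several writers may never write the same file at the same time (write-write mutual exclusion). The relationships are therefore: read-write exclusion, write-write exclusion, and read-read sharing.

Reader priority: while readers are present, writes are delayed, and as long as at least one reader is active every newly arriving reader is admitted. Writers may therefore wait a long time and can even starve.

Practical systems typically use writer priority: when a process is reading the file and another process requests to write, new readers are refused; once the current readers finish, the writer runs immediately, and readers are admitted only when no writer is active.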

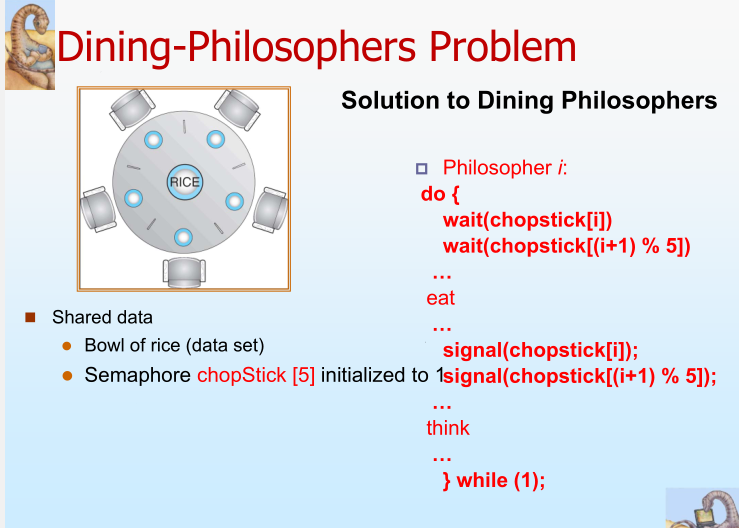

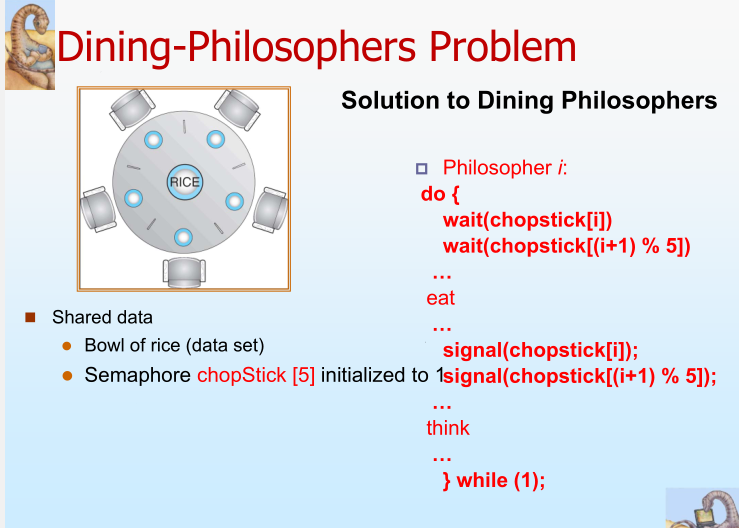
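The reader-priority variant is often implemented with a reader counter protected by one lock and a second lock that excludes writers. The class below is a minimal sketch of that idea; the class and method names are invented for the example, and it deliberately shows the reader-priority behaviour in which writers can starve.

```python
import threading


class ReaderPreferenceLock:
    def __init__(self):
        self.read_count = 0
        self.mutex = threading.Lock()   # protects read_count
        self.wrt = threading.Lock()     # held by one writer, or by the readers as a group

    def acquire_read(self):
        with self.mutex:
            self.read_count += 1
            if self.read_count == 1:    # first reader locks out writers
                self.wrt.acquire()

    def release_read(self):
        with self.mutex:
            self.read_count -= 1
            if self.read_count == 0:    # last reader lets writers in again
                self.wrt.release()

    def acquire_write(self):
        self.wrt.acquire()              # exclusive: no readers, no other writer

    def release_write(self):
        self.wrt.release()


rw = ReaderPreferenceLock()
rw.acquire_read()
rw.acquire_read()                            # read-read is allowed
print(rw.read_count)                         # 2
print(rw.wrt.acquire(blocking=False))        # False: a writer would have to wait
rw.release_read()
rw.release_read()
```

Because later readers only increment the counter, a steady stream of readers keeps `wrt` held and a waiting writer never gets in; a writer-priority version needs additional bookkeeping to turn away new readers once a writer is waiting.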

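3 Dining philosophers problem

Solution: monitors

Each shared resource is given a "secretary" that manages access to it. Every visitor must go through the secretary, and the secretary admits only one visitor (process) to the shared resource at a time. This makes shared resources easier for the system to manage and also guarantees mutually exclusive access and synchronization between processes.

A monitor defines a data structure together with a set of operations that concurrent processes can execute; these operations synchronize the processes and modify the data inside the monitor.

1 Local data variables are accessible only through the monitor's procedures; no external procedure can access them;

2 A process enters the monitor by calling one of its procedures;

3 At any time only one process may be executing in the monitor; any other process that calls the monitor is suspended until the monitor becomes available;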

Deadlock

Necessary conditions for deadlock:

1 Mutual exclusion

2 Hold and wait

3 No preemption

4 Circular wait

Basic concepts:

Resource types: CPU cycles, memory space, I/O devices

How a process uses a resource: request, use, release

Methods for Handling Deadlocks:

1. Ensure that the system will never enter a deadlock state.

Deadlock prevention: break one of the necessary conditions for deadlock

1 Mutual Exclusion – not required for sharable resources; must hold for nonsharable resources.

2 Hold and Wait – must guarantee that whenever a process requests a resource, it does not hold any other resources.

Require process to request and be allocated all its resources before it begins execution, or allow process to request resources only when the process has none.

Low resource utilization; starvation possible.

3 No Preemption –

If a process that is holding some resources requests another resource that cannot be immediately allocated to it, then all resources currently being held are released.

Preempted resources are added to the list of resources for which the process is waiting.

Process will be restarted only when it can regain its old resources, as well as the new ones that it is requesting.

4 Circular Wait – impose a total ordering of all resource types, and require that each process requests resources in an increasing order of enumeration.
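The circular-wait rule can be illustrated with locks that carry a fixed rank and are always acquired in increasing rank. In this sketch the `OrderedLock` class, the ranks 1 and 2, and the two worker threads are assumptions for the example; the workers name the locks in opposite orders, which would risk deadlock without the ordering.

```python
import threading


class OrderedLock:
    """A lock with a fixed rank; ranks define the global acquisition order."""

    def __init__(self, rank):
        self.rank = rank
        self.lock = threading.Lock()


def acquire_in_order(*locks):
    """Acquire locks by increasing rank, regardless of argument order."""
    ordered = sorted(locks, key=lambda item: item.rank)
    for item in ordered:
        item.lock.acquire()
    return ordered


def release_all(ordered):
    for item in reversed(ordered):
        item.lock.release()


a, b = OrderedLock(1), OrderedLock(2)
counter = [0]                            # shared data protected by both locks


def worker(first, second):
    for _ in range(1000):
        held = acquire_in_order(first, second)
        counter[0] += 1
        release_all(held)


t1 = threading.Thread(target=worker, args=(a, b))
t2 = threading.Thread(target=worker, args=(b, a))   # opposite order on purpose
t1.start(); t2.start()
t1.join(); t2.join()
print(counter[0])                        # 2000
```

Since both threads end up taking lock 1 before lock 2, neither can hold lock 2 while waiting for lock 1, so no cycle of waiting processes can form.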

2. Allow the system to enter a deadlock state and then recover.

Deadlock avoidance:

Safe and unsafe states:

If a system is in a safe state, there are no deadlocks.

If a system is in an unsafe state, deadlock is possible.

Avoidance: ensure that a system will never enter an unsafe state.

Deadlock avoidance algorithms:

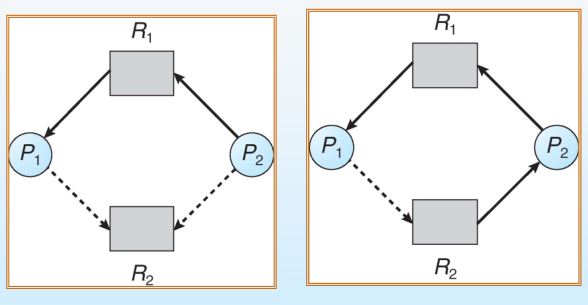

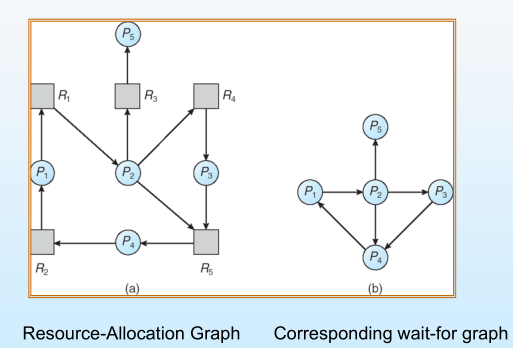

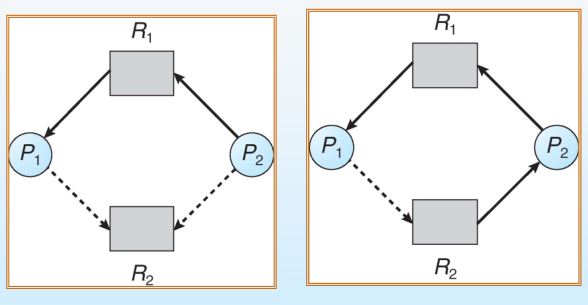

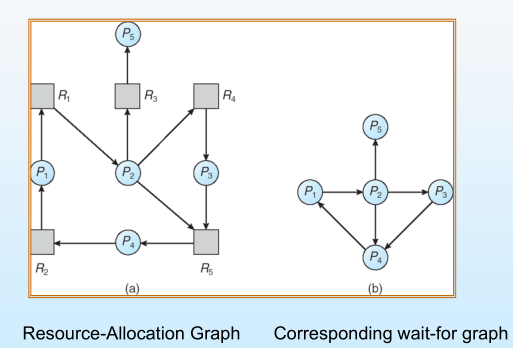

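Single instance of a resource type: use a resource-allocation graph

Assignment edge (solid line, R→P); claim edge (dashed line, P→R); request edge (solid line, P→R)

A request is granted only if converting the request edge into an assignment edge does not create a cycle in the resource-allocation graph. Safety is checked with a cycle-detection algorithm.

Multiple instances of a resource type: use the banker's algorithm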

Data Structures for the Banker's Algorithm

Here n is the number of processes and m is the number of resource types.

Available: vector of length m. If Available[j] = k, there are k instances of resource type R_j available.

Max: n x m matrix. If Max[i,j] = k, then process P_i may request at most k instances of resource type R_j.

Allocation: n x m matrix. If Allocation[i,j] = k, then P_i is currently allocated k instances of R_j.

Need: n x m matrix. If Need[i,j] = k, then P_i may need k more instances of R_j to complete its task.

Need[i,j] = Max[i,j] - Allocation[i,j].

Steps of the algorithm:

Resource-Request Algorithm for Process P_i

Request_i = request vector for process P_i. If Request_i[j] = k, then process P_i wants k instances of resource type R_j.

1. If Request_i ≤ Need_i, go to step 2. Otherwise, raise an error condition, since the process has exceeded its maximum claim.

2. If Request_i ≤ Available, go to step 3. Otherwise P_i must wait, since the resources are not available.

3. Pretend to allocate the requested resources to P_i by modifying the state as follows:

Available = Available - Request_i;

Allocation_i = Allocation_i + Request_i;

Need_i = Need_i - Request_i;

If the resulting state is safe, the resources are allocated to P_i.

If it is unsafe, P_i must wait, and the old resource-allocation state is restored.
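The resource-request steps above, together with the safety check they rely on, can be sketched in Python as follows. The function names, the "granted"/"wait" return values, and the five-process, three-resource sample state are assumptions chosen for the example; the safety check simply looks for some order in which every process can obtain its remaining need and finish.

```python
def is_safe(available, allocation, need):
    """Safety check: return one safe sequence of process indices, or None."""
    work = list(available)
    finish = [False] * len(allocation)
    sequence = []
    progressed = True
    while progressed:
        progressed = False
        for i, (alloc, nd) in enumerate(zip(allocation, need)):
            if not finish[i] and all(n <= w for n, w in zip(nd, work)):
                # P_i can run to completion and then releases what it holds.
                work = [w + a for w, a in zip(work, alloc)]
                finish[i] = True
                sequence.append(i)
                progressed = True
    return sequence if all(finish) else None


def request_resources(i, request, available, allocation, need):
    """Apply the resource-request algorithm; returns 'granted' or 'wait'."""
    if any(r > n for r, n in zip(request, need[i])):           # step 1
        raise ValueError("process exceeded its maximum claim")
    if any(r > a for r, a in zip(request, available)):         # step 2
        return "wait"
    # Step 3: pretend to allocate, working on copies of the state.
    new_available = [a - r for a, r in zip(available, request)]
    new_allocation = [row[:] for row in allocation]
    new_need = [row[:] for row in need]
    new_allocation[i] = [a + r for a, r in zip(allocation[i], request)]
    new_need[i] = [n - r for n, r in zip(need[i], request)]
    if is_safe(new_available, new_allocation, new_need) is None:
        return "wait"                    # unsafe: the old state is left untouched
    available[:] = new_available         # safe: commit the allocation
    allocation[i] = new_allocation[i]
    need[i] = new_need[i]
    return "granted"


available = [3, 3, 2]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
maximum = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
need = [[m - a for m, a in zip(mx, al)] for mx, al in zip(maximum, allocation)]

print(is_safe(available, allocation, need))                              # [1, 3, 4, 0, 2]
print(request_resources(1, [1, 0, 2], available, allocation, need))      # granted
print(request_resources(4, [3, 3, 0], available, allocation, need))      # wait
print(request_resources(0, [0, 2, 0], available, allocation, need))      # wait
```

The second request waits because the resources are not available; the third waits because granting it would leave an unsafe state, even though the resources are currently free.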

3. Ignore the problem and pretend that deadlocks never occur in the system; used by most operating systems, including UNIX.

Deadlock detection:

Single Instance of Each Resource Type

Detection algorithm (single instance of each resource type): periodically invoke an algorithm that searches for a cycle in the graph. If there is a cycle, there exists a deadlock.
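For the single-instance case, the cycle search can be a depth-first traversal of a wait-for graph. The sketch below assumes the graph is given as a dictionary that maps each process to the processes it is waiting on; the process names and the `find_cycle` function are illustrative.

```python
def find_cycle(wait_for):
    """Return one cycle in a wait-for graph as a list of nodes, or None."""
    WHITE, GREY, BLACK = 0, 1, 2         # unvisited, on the current path, finished
    colour = {}
    path = []

    def visit(node):
        colour[node] = GREY
        path.append(node)
        for nxt in wait_for.get(node, ()):
            state = colour.get(nxt, WHITE)
            if state == GREY:            # edge back into the current path: a cycle
                return path[path.index(nxt):]
            if state == WHITE:
                cycle = visit(nxt)
                if cycle:
                    return cycle
        path.pop()
        colour[node] = BLACK
        return None

    for node in wait_for:
        if colour.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


print(find_cycle({"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}))   # ['P1', 'P2', 'P3']
print(find_cycle({"P1": ["P2"], "P2": ["P3"], "P3": []}))       # None
```

The returned list names the processes involved in the deadlock, which is the information a recovery step needs.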

Detection Algorithm

1. Let Work and Finish be vectors of length m and n, respectively. Initialize:

(a) Work = Available

(b) For i = 1, 2, …, n, if Allocation_i ≠ 0, then Finish[i] = false; otherwise, Finish[i] = true.

2. Find an index i such that both:

(a) Finish[i] == false

(b) Request_i ≤ Work

If no such i exists, go to step 4.

3. Work = Work + Allocation_i

Finish[i] = true

go to step 2.

4. If Finish[i] == false for some i, 1 ≤ i ≤ n, then the system is in a deadlock state. Moreover, if Finish[i] == false, then P_i is deadlocked.
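The four steps translate almost line for line into code. In the sketch below the function name and the sample state (five processes, three resource types, nothing currently available) are assumptions for the example.

```python
def deadlocked_processes(available, allocation, request):
    """Return the indices of deadlocked processes (empty list if none)."""
    work = list(available)                              # step 1(a)
    # Step 1(b): a process that holds nothing cannot be part of a deadlock.
    finish = [not any(row) for row in allocation]
    progressed = True
    while progressed:                                   # steps 2 and 3
        progressed = False
        for i, (alloc, req) in enumerate(zip(allocation, request)):
            if not finish[i] and all(r <= w for r, w in zip(req, work)):
                work = [w + a for w, a in zip(work, alloc)]
                finish[i] = True
                progressed = True
    return [i for i, done in enumerate(finish) if not done]   # step 4


available = [0, 0, 0]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 3], [2, 1, 1], [0, 0, 2]]
request = [[0, 0, 0], [2, 0, 2], [0, 0, 0], [1, 0, 0], [0, 0, 2]]
print(deadlocked_processes(available, allocation, request))   # []

request[2] = [0, 0, 1]            # P2 now asks for one more instance
print(deadlocked_processes(available, allocation, request))   # [1, 2, 3, 4]
```

In the first state P0 and P2 can finish and release enough resources for everyone else. Once P2 also has an outstanding request, only P0 can finish, and what it releases is not enough for any of the others.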

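With multiple instances of a resource type, deadlock detection uses the Work/Finish algorithm above, which follows the same pattern as the banker's algorithm.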

Deadlock recovery:

Recovery from Deadlock: Process Termination

1 Abort all deadlocked processes.

2 Abort one process at a time until the deadlock cycle is eliminated.

In which order should we choose to abort?

Priority of the process.

How long the process has computed, and how much longer to completion.

Resources the process has used.

Resources the process needs to complete.

How many processes will need to be terminated.

Is the process interactive or batch?

3 Selecting a victim – minimize cost.

4 Rollback – return to some safe state, restart the process from that state.

Drawback: Starvation – the same process may always be picked as victim; include the number of rollbacks in the cost factor.
