3D Shader: Shadow Mapping

2014-05-06 19:07


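I haven't written a shader in a long time. The lab kept me busy with Java development, I spent a month preparing for IELTS, and recently my advisor sent me to Dongguan to do Hadoop development. Graduation is coming up, and it suddenly struck me that life is short, so while I still can I want to do more of what I enjoy. That is why I've picked shaders up again, to continue my great voyage. From now on I plan to write one shader a week, no matter what happens.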

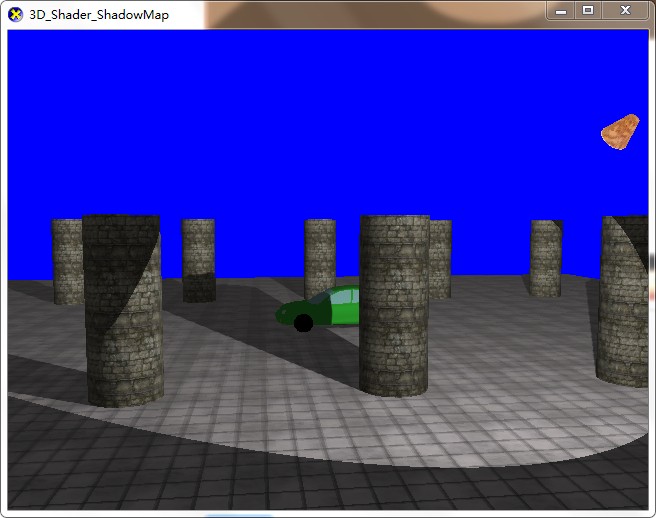

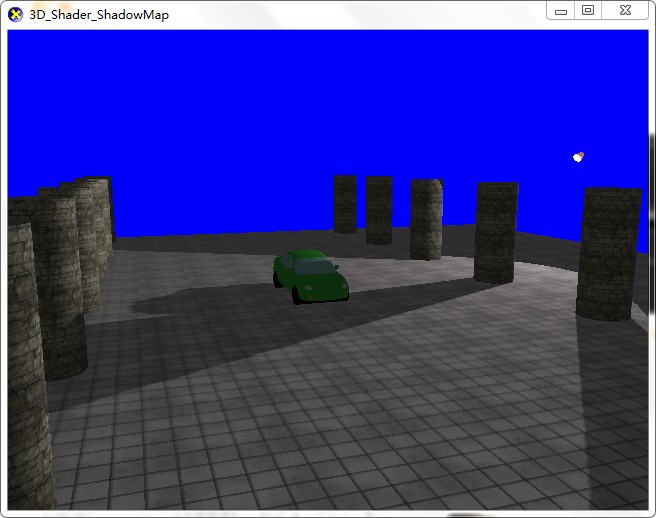

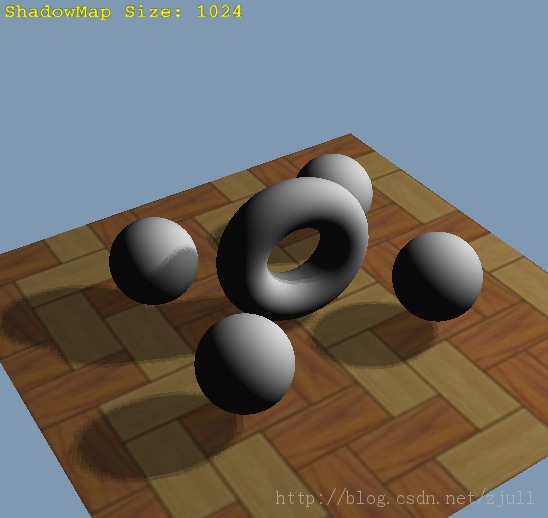

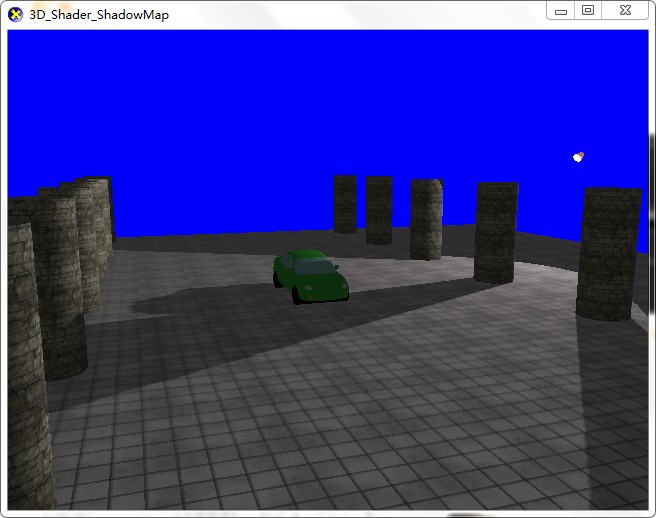

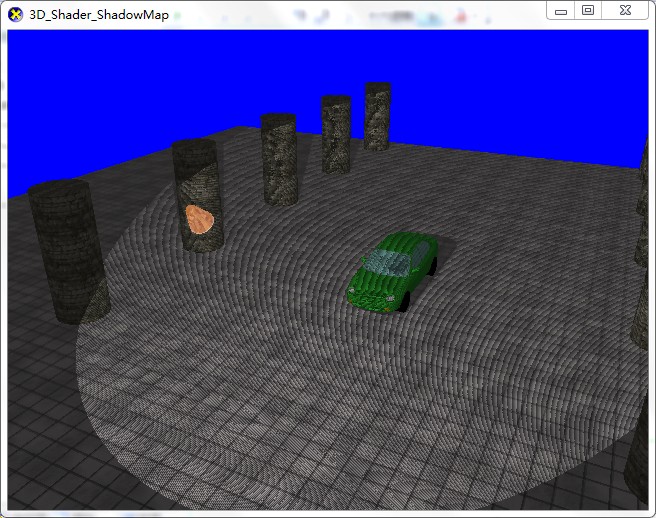

今天的Shader讲解下Shadow Mapping.国际惯例,上图先:

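Now for the principle.

First, render the scene from the light's point of view into a texture, the shadow map. For each texel, it stores the depth of the closest surface as seen from the light.

Then render the scene from the camera. For each pixel, transform its position from the camera's view space into the light's projection (clip) space. Dividing by w gives normalized device coordinates. After x and y are mapped from [-1, 1] to the [0, 1] texture range, they can be used to sample the shadow map. If the pixel's depth from the light is greater than the sampled value, something lies between it and the light, so the pixel is in shadow. Otherwise it is lit.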

There are also a few smaller issues worth explaining.

First, here is a post by another author: http://blog.csdn.net/zjull/article/details/11740505

================== Quoted post begins ==================

0. Introduction

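Shadow Mapping is an image-space shadowing technique.

- **Strengths:** it is simple to implement and suits large dynamic scenes.
- **Weakness:** the shadow map's limited resolution makes shadow edges prone to aliasing.

Research on SM is very active, mostly around shadow anti-aliasing, and has produced many variants such as PSM, LPSM and VSM. Links to many of them are at http://en.wikipedia.org/wiki/Shadow_mapping.

SM is implemented in two passes:

1. The first pass renders the scene from the shadow-casting light and produces a depth texture, called the Shadow Map.
2. The second pass renders the scene from the camera. The pixel shader computes each pixel's depth in the light's coordinate system and compares it with the corresponding depth in the Shadow Map to decide whether the pixel is in shadow.

After these two passes, the scene has shadows. This post summarizes the problems I ran into while implementing basic SM and how I solved them. Let's get started.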

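1. Generating the Shadow Map

To render the Shadow Map from the light, two questions need answering: which objects to render, and how to set up the light's camera.

For the first question, there is clearly no need to render every object in the scene. Rendering only the objects inside the current camera's view frustum is not enough either, because objects outside the frustum can cast shadows onto objects inside it. They must be included when rendering the Shadow Map; otherwise shadows may pop in and out as the camera moves. In short, we need to render two groups of objects, which together form the shadow caster set:

- every object inside the current view frustum;
- every object outside it that casts a shadow onto an object inside it.

To determine this set, compute a convex hull from the light position and the current view frustum. Only objects inside (or intersecting) this hull need to be rendered, as shown in Figure 1.

For the second question, the light camera's frustum should contain every object in the caster set. The objects should also fill the viewport as much as possible, to make the best use of the Shadow Map's resolution. For directional lights and spot lights, set the camera's look vector to the light's direction and its position to the light's position. To contain every caster, compute the caster set's axis-aligned bounding box in light view space. Then set the orthographic projection parameters from the face of the box that faces the light. This guarantees that every caster lies inside the light's frustum and exactly fills the viewport, as shown in Figure 2. See Section 10.2 of Mathematics for 3D Game Programming and Computer Graphics, Third Edition, for more details.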

Figure 1: The light position and the view frustum form a convex hull; objects that intersect this hull make up the shadow caster set
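Once the hull in Figure 1 is available as a set of planes, deciding whether an object belongs to the caster set is a plane test. This is a minimal sketch under two assumptions:

- The planes (unit normals pointing into the hull) have already been built from the light position and the camera frustum.
- Each object is approximated by a bounding sphere.

`Plane`, `Sphere` and `isShadowCaster` are hypothetical names.

```cpp
#include <vector>

struct Vec3 { float x, y, z; };

// Plane n.p + d = 0 with a unit normal n pointing into the hull.
struct Plane { Vec3 n; float d; };

struct Sphere { Vec3 center; float radius; };

inline float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Conservative sphere-vs-convex-hull test: reject the object only if its
// bounding sphere lies entirely on the outer side of one of the hull's planes.
bool isShadowCaster(const Sphere& s, const std::vector<Plane>& hull)
{
    for (const Plane& p : hull)
    {
        float signedDist = dot(p.n, s.center) + p.d;
        if (signedDist < -s.radius)
            return false;  // completely outside this plane, so outside the hull
    }
    return true;  // may intersect the hull: render it into the shadow map
}
```

Like ordinary frustum culling, the test is conservative. Near the hull's edges it can keep a sphere that actually misses the hull, but it never drops one that intersects it, so no shadow goes missing.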

Figure 2: Computing the orthographic projection parameters from the caster set's bounding box in light view space

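2. Rendering the scene with shadows

Once the Shadow Map exists, the shadowed scene can be rendered from the camera using the light's view and projection matrices. The relevant shader code follows.

Vertex shader:

Pixel shader (version 1):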

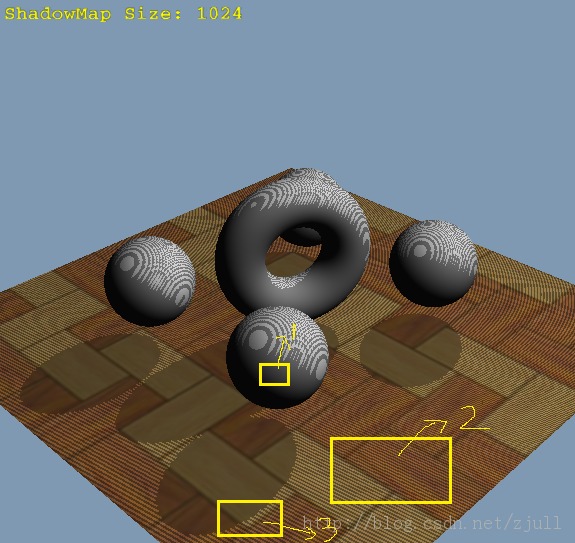

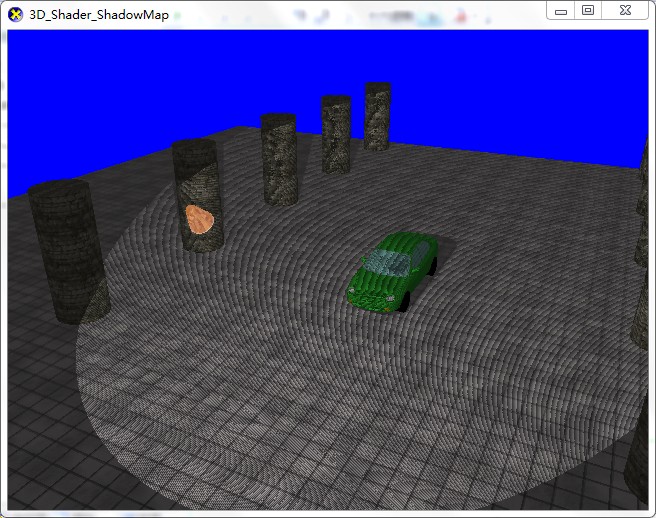

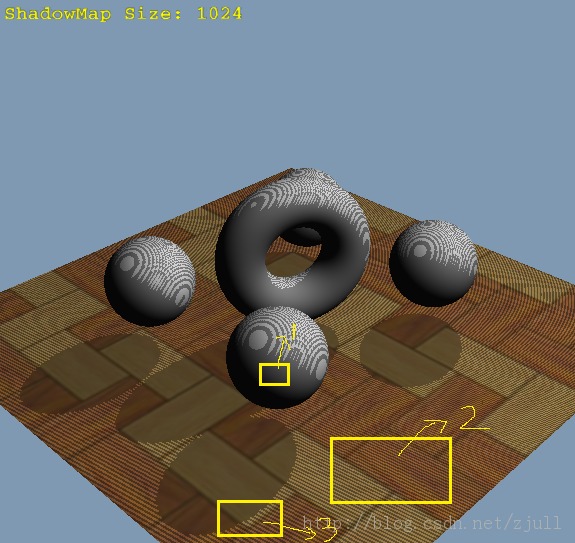

That is the most basic form of Shadow Mapping: each pixel samples only one depth texel. Here is the result.

Figure 3: Basic Shadow Mapping

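The result is not satisfactory. There are three main problems, marked in the figure above:

1. The sides of objects facing away from the light are treated as shadowed.
2. Some pixels that directly face the light are also classified as shadowed (self-shadowing).
3. Shadow edges show strong aliasing.

For problem 1, check whether the current pixel faces away from the light. Get the pixel's position and normal in light view space, then take the dot product of the normal with the direction to the light. If it is not positive, the pixel faces away from the light and needs no shadow test.

Problem 2 is caused by limited depth precision, and the error grows as the surface becomes more oblique in light view space. There are several fixes:

- Subtract a threshold from the depth before the comparison. The threshold is hard to choose and does not fully eliminate self-shadowing.
- Draw only back faces when generating the shadow map, i.e. reverse the face culling. This works well when every object in the scene is a closed 2-manifold mesh.
- Use OpenGL's polygon offset, which adds a value based on the polygon's depth slope to every depth written while generating the shadow map. This works well in the vast majority of cases and is the one used here, as the following code shows: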

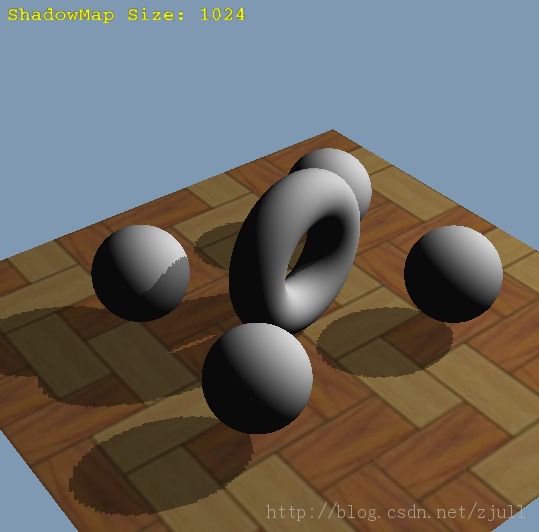

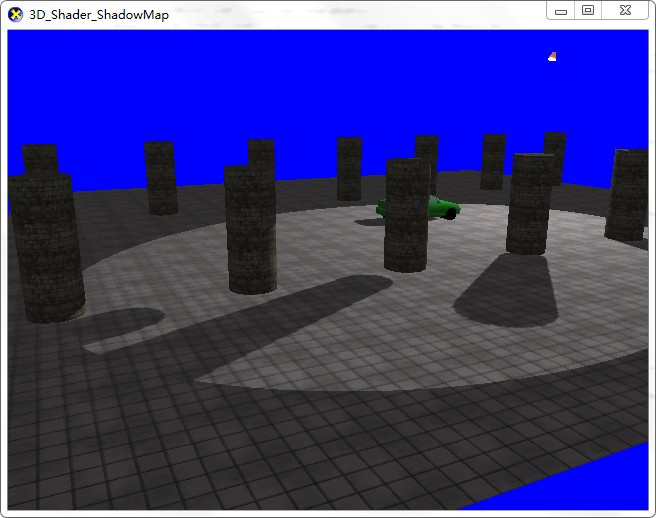

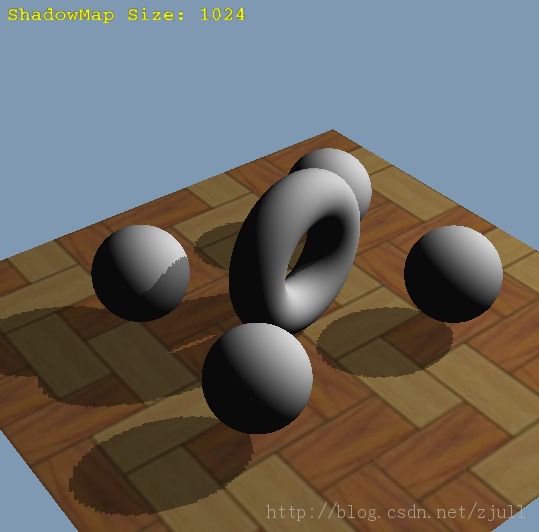
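Both fixes boil down to small formulas:

- **Problem 1:** the dot-product test for surfaces facing away from the light.
- **Problem 2:** the depth offset that glPolygonOffset(factor, units) applies, o = m * factor + r * units, as given in the OpenGL specification.

The value of r is implementation-dependent. The 2^-24 used below is an assumption for a 24-bit depth buffer, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Problem 1: a surface whose normal points away from the light is unlit anyway,
// so it can skip the shadow-map test. toLight = lightPos - surfacePos.
bool facesAwayFromLight(const Vec3& normal, const Vec3& toLight)
{
    return dot(normal, toLight) <= 0.0f;
}

// Problem 2: offset added by glPolygonOffset(factor, units), o = m * factor + r * units.
// m is the polygon's maximum window-space depth slope; the spec allows
// max(|dz/dx|, |dz/dy|) as an approximation, which is used here.
float polygonOffset(float dzdx, float dzdy, float factor, float units)
{
    const float r = 1.0f / 16777216.0f;  // assumption: 2^-24 for a 24-bit depth buffer
    float m = std::max(std::fabs(dzdx), std::fabs(dzdy));
    return m * factor + r * units;
}
```

Because m grows with the surface's slope, oblique surfaces, where self-shadowing is worst, receive the larger offset. That is why this method holds up better than a single fixed threshold.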

After fixing the first and second problems, the result looks like Figure 4:

Figure 4: The result after fixing back-face shadowing and self-shadowing

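One problem remains: as the figure shows, shadow edges are still badly aliased. There are two common fixes.

The first is PCF (Percentage Closer Filtering). For each pixel, sample several neighbouring values from the shadow map and compare each with the pixel's depth. Record 0 if the comparison says the pixel is in shadow and 1 otherwise. Summing the results and dividing by the number of samples gives a fraction p, the proportion of samples in which the pixel is lit. p = 0 means the pixel is fully in shadow, and p = 1 means it is fully lit. The blend factor is then set from p.

The second method computes only shadows, not lighting, in the shadow pass. This produces a black-and-white image in which black marks shadow and white marks non-shadow. Blurring this image with a Gaussian filter smooths the shadow edges and reduces aliasing. Finally, the lighting pass multiplies each pixel's lit color by the corresponding value of the blurred image to produce soft shadows.

In theory both methods produce soft shadows. This post uses PCF. I will try the second method later, compare the two in quality and speed, and post the results here when the tests are done. Here is the pixel shader with PCF added:

Also note that the shadow map texture needs the following texture parameters: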

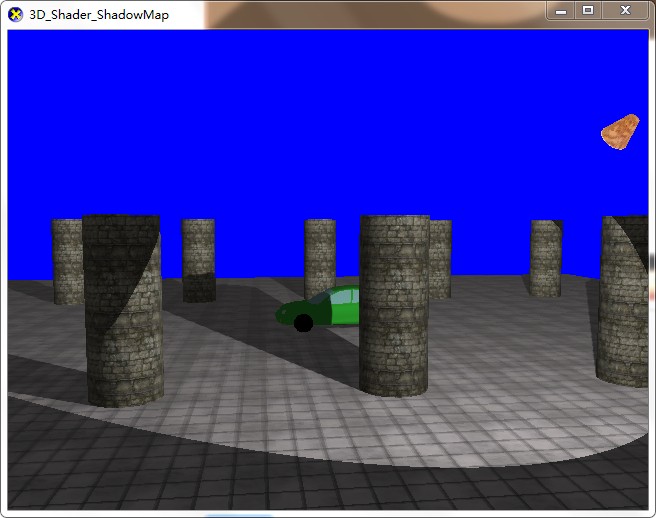

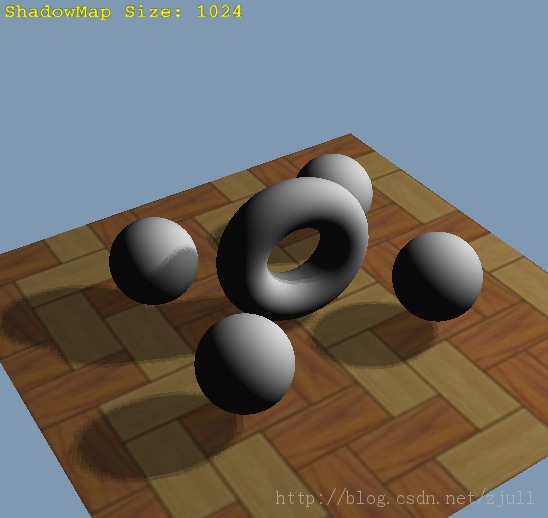

The PCF result is shown below; the shadow edges are now softer:

Figure 5: Soft shadow edges (PCF sampling pattern: top-left, bottom-left, bottom-right, top-right)

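3. Conclusion

There are many Shadow Map variants, each offering anti-aliasing solutions for different situations and scenes. This post implements only basic SM; next I plan to implement one of the variants to further improve shadow quality.

================== Quoted post ends ==================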

I'll post the source code first, then focus on the second problem:

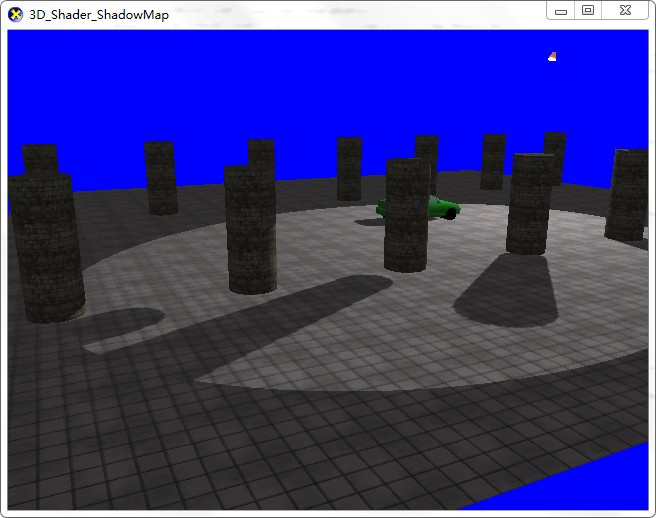

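Note: the first and third problems are already solved here, so let's focus on the second one. Setting SHADOW_EPSILON to 0.00000f gives the following result:

This is self-shadowing caused by the limited precision of the Shadow Map. As the author above suggested, SHADOW_EPSILON can be raised to 0.00005f, but that introduces another problem:

When the light is far from an object, the shadow tends to detach from the object.

The depth bias offered by the D3D API only changes an object's z in normalized device coordinates, i.e. the value that goes into the depth buffer. It does not change the value recorded in the shadow map that the shader reads (the shadow map here is an R32F color render target, not a depth buffer). So a proper solution still needs further work, and I'll add to this post if I make progress.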

The executable and related source code can be downloaded here.


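Vertex shader:

```glsl
#version 130
// GLSL 1.30 is needed for texture()/textureProjOffset() on shadow samplers;
// the built-in gl_ModelViewProjectionMatrix is still available at this version.

// for projective texturing
uniform mat4 worldMatrix;
uniform mat4 lightViewMatrix;
uniform mat4 lightProjMatrix;

attribute vec4 pos;          // object-space vertex position (declaration assumed; not in the original snippet)
varying vec4 projTexCoord;

void main()
{
    // for projective texture mapping: position in the light's clip space
    projTexCoord = lightProjMatrix * lightViewMatrix * worldMatrix * pos;

    // map x, y, z from [-w, w] to [0, w]; after the divide by w they lie in [0, 1]
    projTexCoord.x = (projTexCoord.x + projTexCoord.w) * 0.5;
    projTexCoord.y = (projTexCoord.y + projTexCoord.w) * 0.5;
    projTexCoord.z = (projTexCoord.z + projTexCoord.w) * 0.5;

    gl_Position = gl_ModelViewProjectionMatrix * pos;
}
```

Pixel shader (version 1):

```glsl
#version 130

// The shadow map (depth texture with GL_TEXTURE_COMPARE_MODE enabled)
uniform sampler2DShadow shadowDepthMap;

varying vec4 projTexCoord;

void main()
{
    // light computing (Lighting(...) is a placeholder for the scene's lighting code)
    vec4 lightColor = Lighting(...);

    // perspective divide: x, y become shadow map coordinates, z the depth from the light
    vec3 texcoord = projTexCoord.xyz / projTexCoord.w;

    // depth comparison: with a shadow sampler, texture() compares texcoord.z with the
    // stored depth (GL_LEQUAL) and returns 1.0 if lit, 0.0 if shadowed
    float lit = texture(shadowDepthMap, texcoord);

    vec4 color = vec4(1.0, 1.0, 1.0, 1.0);
    if (lit < 0.5)
    {
        // this pixel is in shadow area
        color = vec4(0.6, 0.6, 0.6, 1.0);
    }

    gl_FragColor = lightColor * color;
}
```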


Depth offset while rendering the shadow map:

```c
// handle depth precision problem
glEnable(GL_POLYGON_OFFSET_FILL);
glPolygonOffset(1.0f, 1.0f);
// draw the objects in the shadow caster set here
glDisable(GL_POLYGON_OFFSET_FILL);
```


Pixel shader with PCF:

```glsl
#version 130

// The shadow map
uniform sampler2DShadow shadowDepthMap;

varying vec4 projTexCoord;

void main()
{
    // light computing (Lighting(...) is a placeholder for the scene's lighting code)
    vec4 lightColor = Lighting(...);

    // textureProjOffset divides by projTexCoord.w, compares the resulting z with the
    // stored depth at each offset texel and returns the comparison result (1.0 = lit)
    float shadeFactor = 0.0;
    shadeFactor += textureProjOffset(shadowDepthMap, projTexCoord, ivec2(-1, -1));
    shadeFactor += textureProjOffset(shadowDepthMap, projTexCoord, ivec2(-1,  1));
    shadeFactor += textureProjOffset(shadowDepthMap, projTexCoord, ivec2( 1, -1));
    shadeFactor += textureProjOffset(shadowDepthMap, projTexCoord, ivec2( 1,  1));
    shadeFactor *= 0.25;

    // map from [0.0,1.0] to [0.6,1.0]
    shadeFactor = shadeFactor * 0.4 + 0.6;

    gl_FragColor = lightColor * shadeFactor;
}
```

The shadow map texture also needs these parameters:

```c
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_COMPARE_MODE, GL_COMPARE_REF_TO_TEXTURE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_COMPARE_FUNC, GL_LEQUAL);
```


/*------------------------------------------------------------

ShadowMap.cpp -- achieve shadow map

(c) Seamanj.2014/4/28

------------------------------------------------------------*/

// phase1 : add view camera & light camera

// phase2 : add objects

// phase3 : add light

// phase4 : add shadow map effect

// phase5 : render the shadow map

// phase6 : render the scene

#include "DXUT.h"

#include "resource.h"

#define phase1 1

#define phase2 1

#define phase3 1

#define phase4 1

#define phase5 1

#define phase6 1

#if phase1

#include "DXUTcamera.h"

CFirstPersonCamera g_VCamera;

CFirstPersonCamera g_LCamera;

bool g_bRightMouseDown = false;// Indicates whether right mouse button is held

#endif

#if phase2

#include "SDKmesh.h"

#include "SDKmisc.h"

LPCWSTR g_aszMeshFile[] =

{

L"BasicColumnScene.x",

L"car.x"

};

#define NUM_OBJ (sizeof(g_aszMeshFile)/sizeof(g_aszMeshFile[0]))

D3DXMATRIXA16 g_amInitObjWorld[NUM_OBJ] =

{

D3DXMATRIXA16( 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f,

1.0f ),

D3DXMATRIXA16( 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 2.35f, 0.0f,

1.0f )

};

D3DVERTEXELEMENT9 g_aVertDecl[] =

{

{ 0, 0, D3DDECLTYPE_FLOAT3, D3DDECLMETHOD_DEFAULT, D3DDECLUSAGE_POSITION, 0 },

{ 0, 12, D3DDECLTYPE_FLOAT3, D3DDECLMETHOD_DEFAULT, D3DDECLUSAGE_NORMAL, 0 },

{ 0, 24, D3DDECLTYPE_FLOAT2, D3DDECLMETHOD_DEFAULT, D3DDECLUSAGE_TEXCOORD, 0 },

D3DDECL_END()

};

//-----------------------------------------------------------------------------

// Name: class CObj

// Desc: Encapsulates a mesh object in the scene by grouping its world matrix

// with the mesh.

//-----------------------------------------------------------------------------

#pragma warning( disable : 4324 )

struct CObj

{

CDXUTXFileMesh m_Mesh;

D3DXMATRIXA16 m_mWorld;

};

CObj g_Obj[NUM_OBJ]; // Scene object meshes

#endif

#if phase3

D3DLIGHT9 g_Light; // The spot light in the scene

CDXUTXFileMesh g_LightMesh;

float g_fLightFov; // FOV of the spot light (in radian)

#endif

#if phase4

ID3DXEffect* g_pEffect = NULL; // D3DX effect interface

#endif

#if phase5

#define ShadowMap_SIZE 512

LPDIRECT3DTEXTURE9 g_pTexDef = NULL; // Default texture for objects

LPDIRECT3DTEXTURE9 g_pShadowMap = NULL; // Texture to which the shadow map is rendered

LPDIRECT3DSURFACE9 g_pDSShadow = NULL; // Depth-stencil buffer for rendering to shadow map

D3DXMATRIXA16 g_mShadowProj; // Projection matrix for shadow map

#endif

//--------------------------------------------------------------------------------------

// Rejects any D3D9 devices that aren't acceptable to the app by returning false

//--------------------------------------------------------------------------------------

bool CALLBACK IsD3D9DeviceAcceptable( D3DCAPS9* pCaps, D3DFORMAT AdapterFormat, D3DFORMAT BackBufferFormat,

bool bWindowed, void* pUserContext )

{

// Typically want to skip back buffer formats that don't support alpha blending

IDirect3D9* pD3D = DXUTGetD3D9Object();

if( FAILED( pD3D->CheckDeviceFormat( pCaps->AdapterOrdinal, pCaps->DeviceType,

AdapterFormat, D3DUSAGE_QUERY_POSTPIXELSHADER_BLENDING,

D3DRTYPE_TEXTURE, BackBufferFormat ) ) )

return false;

// Must support pixel shader 2.0

if( pCaps->PixelShaderVersion < D3DPS_VERSION( 2, 0 ) )

return false;

// need to support D3DFMT_R32F render target

if( FAILED( pD3D->CheckDeviceFormat( pCaps->AdapterOrdinal, pCaps->DeviceType,

AdapterFormat, D3DUSAGE_RENDERTARGET,

D3DRTYPE_TEXTURE, D3DFMT_R32F ) ) ) // the shadow map is a 2D texture (CreateTexture)

return false;

// need to support D3DFMT_A8R8G8B8 render target

if( FAILED( pD3D->CheckDeviceFormat( pCaps->AdapterOrdinal, pCaps->DeviceType,

AdapterFormat, D3DUSAGE_RENDERTARGET,

D3DRTYPE_TEXTURE, D3DFMT_A8R8G8B8 ) ) )

return false;

return true;

}

//--------------------------------------------------------------------------------------

// Before a device is created, modify the device settings as needed

//--------------------------------------------------------------------------------------

bool CALLBACK ModifyDeviceSettings( DXUTDeviceSettings* pDeviceSettings, void* pUserContext )

{

assert( DXUT_D3D9_DEVICE == pDeviceSettings->ver );

HRESULT hr;

IDirect3D9* pD3D = DXUTGetD3D9Object();

D3DCAPS9 caps;

V( pD3D->GetDeviceCaps( pDeviceSettings->d3d9.AdapterOrdinal,

pDeviceSettings->d3d9.DeviceType,

&caps ) );

// Turn vsync off

pDeviceSettings->d3d9.pp.PresentationInterval = D3DPRESENT_INTERVAL_IMMEDIATE;

// If device doesn't support HW T&L or doesn't support 1.1 vertex shaders in HW

// then switch to SWVP.

if( ( caps.DevCaps & D3DDEVCAPS_HWTRANSFORMANDLIGHT ) == 0 ||

caps.VertexShaderVersion < D3DVS_VERSION( 1, 1 ) )

{

pDeviceSettings->d3d9.BehaviorFlags = D3DCREATE_SOFTWARE_VERTEXPROCESSING;

}

// Debugging vertex shaders requires either REF or software vertex processing

// and debugging pixel shaders requires REF.

#ifdef DEBUG_VS

if( pDeviceSettings->d3d9.DeviceType != D3DDEVTYPE_REF )

{

pDeviceSettings->d3d9.BehaviorFlags &= ~D3DCREATE_HARDWARE_VERTEXPROCESSING;

pDeviceSettings->d3d9.BehaviorFlags &= ~D3DCREATE_PUREDEVICE;

pDeviceSettings->d3d9.BehaviorFlags |= D3DCREATE_SOFTWARE_VERTEXPROCESSING;

}

#endif

#ifdef DEBUG_PS

pDeviceSettings->d3d9.DeviceType = D3DDEVTYPE_REF;

#endif

// For the first device created if its a REF device, optionally display a warning dialog box

static bool s_bFirstTime = true;

if( s_bFirstTime )

{

s_bFirstTime = false;

if( pDeviceSettings->d3d9.DeviceType == D3DDEVTYPE_REF )

DXUTDisplaySwitchingToREFWarning( pDeviceSettings->ver );

}

return true;

}

//--------------------------------------------------------------------------------------

// Create any D3D9 resources that will live through a device reset (D3DPOOL_MANAGED)

// and aren't tied to the back buffer size

//--------------------------------------------------------------------------------------

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

#if phase2

WCHAR str[MAX_PATH];

// Initialize the meshes

for( int i = 0; i < NUM_OBJ; ++i )

{

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH, g_aszMeshFile[i] ) );

if( FAILED( g_Obj[i].m_Mesh.Create( pd3dDevice, str ) ) )

return DXUTERR_MEDIANOTFOUND;

V_RETURN( g_Obj[i].m_Mesh.SetVertexDecl( pd3dDevice, g_aVertDecl ) );

g_Obj[i].m_mWorld = g_amInitObjWorld[i];

}

#endif

#if phase3

// Initialize the light mesh

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH, L"spotlight.x" ) );

if( FAILED( g_LightMesh.Create( pd3dDevice, str ) ) )

return DXUTERR_MEDIANOTFOUND;

V_RETURN( g_LightMesh.SetVertexDecl( pd3dDevice, g_aVertDecl ) );

#endif

#if phase4

// Read the D3DX effect file

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH, L"ShadowMap.fx" ) );

// Create the effect

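LPD3DXBUFFER pErrorBuff = NULL;

hr = D3DXCreateEffectFromFile(

pd3dDevice, // associated device

str, // effect filename

NULL, // no preprocessor definitions

NULL, // no ID3DXInclude interface

D3DXSHADER_DEBUG, // compile flags

NULL, // don't share parameters

&g_pEffect, // return effect

&pErrorBuff // return error messages

);

// Print compiler messages (if any) and release the buffer before checking hr

if( pErrorBuff )

{

OutputDebugStringA( ( char* )pErrorBuff->GetBufferPointer() );

pErrorBuff->Release();

}

V_RETURN( hr );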

#endif

return S_OK;

}

//--------------------------------------------------------------------------------------

// Create any D3D9 resources that won't live through a device reset (D3DPOOL_DEFAULT)

// or that are tied to the back buffer size

//--------------------------------------------------------------------------------------

HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

#if phase1

// Setup the camera's projection parameters

float fAspectRatio = pBackBufferSurfaceDesc->Width / ( FLOAT )pBackBufferSurfaceDesc->Height;

g_VCamera.SetProjParams( D3DX_PI / 4, fAspectRatio, 0.1f, 500.0f );

g_LCamera.SetProjParams( D3DX_PI / 4, fAspectRatio, 0.1f, 500.0f );

#endif

#if phase2

// Restore the scene objects

for( int i = 0; i < NUM_OBJ; ++i )

V_RETURN( g_Obj[i].m_Mesh.RestoreDeviceObjects( pd3dDevice ) );

#endif

#if phase3

V_RETURN( g_LightMesh.RestoreDeviceObjects( pd3dDevice ) );

#endif

#if phase4

if( g_pEffect )

V_RETURN( g_pEffect->OnResetDevice() );

#endif

#if phase5

// Create the default texture (used when a triangle does not use a texture)

V_RETURN( pd3dDevice->CreateTexture( 1, 1, 1, D3DUSAGE_DYNAMIC, D3DFMT_A8R8G8B8, D3DPOOL_DEFAULT, &g_pTexDef,

NULL ) );

D3DLOCKED_RECT lr;

V_RETURN( g_pTexDef->LockRect( 0, &lr, NULL, 0 ) );

*( LPDWORD )lr.pBits = D3DCOLOR_RGBA( 255, 255, 255, 255 );

V_RETURN( g_pTexDef->UnlockRect( 0 ) );

// Create the shadow map texture

V_RETURN( pd3dDevice->CreateTexture( ShadowMap_SIZE, ShadowMap_SIZE,

1, D3DUSAGE_RENDERTARGET,

D3DFMT_R32F,

D3DPOOL_DEFAULT,

&g_pShadowMap,

NULL ) );

// Create the depth-stencil buffer to be used with the shadow map

// We do this to ensure that the depth-stencil buffer is large

// enough and has correct multisample type/quality when rendering

// the shadow map. The default depth-stencil buffer created during

// device creation will not be large enough if the user resizes the

// window to a very small size. Furthermore, if the device is created

// with multisampling, the default depth-stencil buffer will not

// work with the shadow map texture because texture render targets

// do not support multisample.

DXUTDeviceSettings d3dSettings = DXUTGetDeviceSettings();

V_RETURN( pd3dDevice->CreateDepthStencilSurface( ShadowMap_SIZE,

ShadowMap_SIZE,

d3dSettings.d3d9.pp.AutoDepthStencilFormat,

D3DMULTISAMPLE_NONE,

0,

TRUE,

&g_pDSShadow,

NULL ) );

// Initialize the shadow projection matrix

D3DXMatrixPerspectiveFovLH( &g_mShadowProj, g_fLightFov, 1, 0.01f, 100.0f );

#endif

#if phase6

// Restore the effect variables

V_RETURN( g_pEffect->SetVector( "g_vLightDiffuse", ( D3DXVECTOR4* )&g_Light.Diffuse ) );

V_RETURN( g_pEffect->SetFloat( "g_fCosTheta", cosf( g_Light.Theta ) ) );

#endif

return S_OK;

}

//--------------------------------------------------------------------------------------

// Handle updates to the scene. This is called regardless of which D3D API is used

//--------------------------------------------------------------------------------------

void CALLBACK OnFrameMove( double fTime, float fElapsedTime, void* pUserContext )

{

#if phase1

// Update the camera's position based on user input

g_VCamera.FrameMove( fElapsedTime );

g_LCamera.FrameMove( fElapsedTime );

#endif

}

#if phase5

//--------------------------------------------------------------------------------------

// Renders the scene onto the current render target using the current

// technique in the effect.

//--------------------------------------------------------------------------------------

void RenderScene( IDirect3DDevice9* pd3dDevice, bool bRenderShadow, float fElapsedTime, const D3DXMATRIX* pmView,

const D3DXMATRIX* pmProj )

{

HRESULT hr;

// Set the projection matrix

V( g_pEffect->SetMatrix( "g_mProj", pmProj ) );

// Freely moveable light. Get light parameter

// from the light camera.

D3DXVECTOR3 v = *g_LCamera.GetEyePt();

D3DXVECTOR4 v4;//(0,0,0,1);

D3DXVec3Transform( &v4, &v, pmView );

V( g_pEffect->SetVector( "g_vLightPos", &v4 ) );

*( D3DXVECTOR3* )&v4 = *g_LCamera.GetWorldAhead();

v4.w = 0.0f; // Set w 0 so that the translation part doesn't come to play

D3DXVec4Transform( &v4, &v4, pmView ); // Direction in view space

D3DXVec3Normalize( ( D3DXVECTOR3* )&v4, ( D3DXVECTOR3* )&v4 );

V( g_pEffect->SetVector( "g_vLightDir", &v4 ) );

// Clear the render buffers

V( pd3dDevice->Clear( 0L, NULL, D3DCLEAR_TARGET | D3DCLEAR_ZBUFFER,

0x000000ff, 1.0f, 0L ) );

if( bRenderShadow )

V( g_pEffect->SetTechnique( "RenderShadow" ) );

// Begin the scene

if( SUCCEEDED( pd3dDevice->BeginScene() ) )

{

if( !bRenderShadow )

V( g_pEffect->SetTechnique( "RenderScene" ) );

// Render the objects

for( int obj = 0; obj < NUM_OBJ; ++obj )

{

D3DXMATRIXA16 mWorldView = g_Obj[obj].m_mWorld;

D3DXMatrixMultiply( &mWorldView, &mWorldView, pmView );

V( g_pEffect->SetMatrix( "g_mWorldView", &mWorldView ) );

LPD3DXMESH pMesh = g_Obj[obj].m_Mesh.GetMesh();

UINT cPass;

V( g_pEffect->Begin( &cPass, 0 ) );

for( UINT p = 0; p < cPass; ++p )

{

V( g_pEffect->BeginPass( p ) );

for( DWORD i = 0; i < g_Obj[obj].m_Mesh.m_dwNumMaterials; ++i )

{

D3DXVECTOR4 vDif( g_Obj[obj].m_Mesh.m_pMaterials[i].Diffuse.r,

g_Obj[obj].m_Mesh.m_pMaterials[i].Diffuse.g,

g_Obj[obj].m_Mesh.m_pMaterials[i].Diffuse.b,

g_Obj[obj].m_Mesh.m_pMaterials[i].Diffuse.a );

V( g_pEffect->SetVector( "g_vMaterial", &vDif ) );

if( g_Obj[obj].m_Mesh.m_pTextures[i] )

V( g_pEffect->SetTexture( "g_txScene", g_Obj[obj].m_Mesh.m_pTextures[i] ) )

else

V( g_pEffect->SetTexture( "g_txScene", g_pTexDef ) )

V( g_pEffect->CommitChanges() );

V( pMesh->DrawSubset( i ) );

}

V( g_pEffect->EndPass() );

}

V( g_pEffect->End() );

}

#if phase6

// Render light

if( !bRenderShadow )

V( g_pEffect->SetTechnique( "RenderLight" ) );

D3DXMATRIXA16 mWorldView = *g_LCamera.GetWorldMatrix();

D3DXMatrixMultiply( &mWorldView, &mWorldView, pmView );

V( g_pEffect->SetMatrix( "g_mWorldView", &mWorldView ) );

UINT cPass;

LPD3DXMESH pMesh = g_LightMesh.GetMesh();

V( g_pEffect->Begin( &cPass, 0 ) );

for( UINT p = 0; p < cPass; ++p )

{

V( g_pEffect->BeginPass( p ) );

for( DWORD i = 0; i < g_LightMesh.m_dwNumMaterials; ++i )

{

D3DXVECTOR4 vDif( g_LightMesh.m_pMaterials[i].Diffuse.r,

g_LightMesh.m_pMaterials[i].Diffuse.g,

g_LightMesh.m_pMaterials[i].Diffuse.b,

g_LightMesh.m_pMaterials[i].Diffuse.a );

V( g_pEffect->SetVector( "g_vMaterial", &vDif ) );

V( g_pEffect->SetTexture( "g_txScene", g_LightMesh.m_pTextures[i] ) );

V( g_pEffect->CommitChanges() );

V( pMesh->DrawSubset( i ) );

}

V( g_pEffect->EndPass() );

}

V( g_pEffect->End() );

#endif

V( pd3dDevice->EndScene() );

}

}

#endif

//--------------------------------------------------------------------------------------

// Render the scene using the D3D9 device

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )

{

HRESULT hr;

#if phase2 && !phase4

// Clear the render target and the zbuffer

V( pd3dDevice->Clear( 0, NULL, D3DCLEAR_TARGET | D3DCLEAR_ZBUFFER, D3DCOLOR_ARGB( 0, 45, 50, 170 ), 1.0f, 0 ) );

// Render the scene

if( SUCCEEDED( pd3dDevice->BeginScene() ) )

{

// Set world matrix

D3DXMATRIX M;

D3DXMatrixIdentity( &M ); // M = identity matrix

pd3dDevice->SetTransform(D3DTS_WORLD, &M) ;

// Set view matrix

D3DXMATRIX view = *g_VCamera.GetViewMatrix() ;

pd3dDevice->SetTransform(D3DTS_VIEW, &view) ;

// Set projection matrix

D3DXMATRIX proj = *g_VCamera.GetProjMatrix() ;

pd3dDevice->SetTransform(D3DTS_PROJECTION, &proj) ;

// Render the objects

LPD3DXMESH pMeshObj;

for(int obj = 0; obj < NUM_OBJ; ++obj)

{

pMeshObj = g_Obj[obj].m_Mesh.GetMesh();

for( DWORD m = 0; m < g_Obj[obj].m_Mesh.m_dwNumMaterials; ++m )

{

V( pd3dDevice->SetTexture(0,g_Obj[obj].m_Mesh.m_pTextures[m]));

V( pMeshObj->DrawSubset( m ) );

}

}

V( pd3dDevice->EndScene() );

}

#endif

#if phase5

D3DXMATRIXA16 mLightView;

mLightView = *g_LCamera.GetViewMatrix();

//

// Render the shadow map

//

LPDIRECT3DSURFACE9 pOldRT = NULL;

V( pd3dDevice->GetRenderTarget( 0, &pOldRT ) );

LPDIRECT3DSURFACE9 pShadowSurf;

if( SUCCEEDED( g_pShadowMap->GetSurfaceLevel( 0, &pShadowSurf ) ) )

{

pd3dDevice->SetRenderTarget( 0, pShadowSurf );

SAFE_RELEASE( pShadowSurf );

}

LPDIRECT3DSURFACE9 pOldDS = NULL;

if( SUCCEEDED( pd3dDevice->GetDepthStencilSurface( &pOldDS ) ) )

pd3dDevice->SetDepthStencilSurface( g_pDSShadow );

{

CDXUTPerfEventGenerator g( DXUT_PERFEVENTCOLOR, L"Shadow Map" );

RenderScene( pd3dDevice, true, fElapsedTime, &mLightView, &g_mShadowProj );

}

if( pOldDS )

{

pd3dDevice->SetDepthStencilSurface( pOldDS );

pOldDS->Release();

}

pd3dDevice->SetRenderTarget( 0, pOldRT );

SAFE_RELEASE( pOldRT );

#endif

#if phase6

//

// Now that we have the shadow map, render the scene.

//

const D3DXMATRIX* pmView = g_VCamera.GetViewMatrix();

// Initialize required parameter

V( g_pEffect->SetTexture( "g_txShadow", g_pShadowMap ) );

// Compute the matrix to transform from view space to

// light projection space. This consists of

// the inverse of view matrix * view matrix of light * light projection matrix

D3DXMATRIXA16 mViewToLightProj;

mViewToLightProj = *pmView;

D3DXMatrixInverse( &mViewToLightProj, NULL, &mViewToLightProj );

D3DXMatrixMultiply( &mViewToLightProj, &mViewToLightProj, &mLightView );

D3DXMatrixMultiply( &mViewToLightProj, &mViewToLightProj, &g_mShadowProj );

V( g_pEffect->SetMatrix( "g_mViewToLightProj", &mViewToLightProj ) );

{

CDXUTPerfEventGenerator g( DXUT_PERFEVENTCOLOR, L"Scene" );

RenderScene( pd3dDevice, false, fElapsedTime, pmView, g_VCamera.GetProjMatrix() );

}

g_pEffect->SetTexture( "g_txShadow", NULL );

#endif

}

#if phase1

void CALLBACK MouseProc( bool bLeftButtonDown, bool bRightButtonDown, bool bMiddleButtonDown, bool bSideButton1Down,

bool bSideButton2Down, int nMouseWheelDelta, int xPos, int yPos, void* pUserContext )

{

g_bRightMouseDown = bRightButtonDown;

}

#endif

//--------------------------------------------------------------------------------------

// Handle messages to the application

//--------------------------------------------------------------------------------------

LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam,

bool* pbNoFurtherProcessing, void* pUserContext )

{

#if phase1

// Pass all windows messages to camera and dialogs so they can respond to user input

if( WM_KEYDOWN != uMsg || g_bRightMouseDown )

g_LCamera.HandleMessages( hWnd, uMsg, wParam, lParam );

if( WM_KEYDOWN != uMsg || !g_bRightMouseDown )

{

g_VCamera.HandleMessages( hWnd, uMsg, wParam, lParam );

}

#endif

return 0;

}

//--------------------------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9ResetDevice callback

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9LostDevice( void* pUserContext )

{

#if phase2

for( int i = 0; i < NUM_OBJ; ++i )

g_Obj[i].m_Mesh.InvalidateDeviceObjects();

#endif

#if phase3

g_LightMesh.InvalidateDeviceObjects();

#endif

#if phase4

if( g_pEffect )

g_pEffect->OnLostDevice();

#endif

#if phase5

SAFE_RELEASE( g_pDSShadow );

SAFE_RELEASE( g_pShadowMap );

SAFE_RELEASE( g_pTexDef );

#endif

}

//--------------------------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9CreateDevice callback

//--------------------------------------------------------------------------------------

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )

{

#if phase2

for( int i = 0; i < NUM_OBJ; ++i )

g_Obj[i].m_Mesh.Destroy();

#endif

#if phase3

g_LightMesh.Destroy();

#endif

#if phase4

SAFE_RELEASE(g_pEffect);

#endif

}

//--------------------------------------------------------------------------------------

// Initialize everything and go into a render loop

//--------------------------------------------------------------------------------------

INT WINAPI wWinMain( HINSTANCE, HINSTANCE, LPWSTR, int )

{

// Enable run-time memory check for debug builds.

#if defined(DEBUG) | defined(_DEBUG)

_CrtSetDbgFlag( _CRTDBG_ALLOC_MEM_DF | _CRTDBG_LEAK_CHECK_DF );

#endif

#if phase1

// Initialize the camera

g_VCamera.SetScalers( 0.01f, 15.0f );

g_LCamera.SetScalers( 0.01f, 8.0f );

g_VCamera.SetRotateButtons( true, false, false );

g_LCamera.SetRotateButtons( false, false, true );

// Set up the view parameters for the camera

D3DXVECTOR3 vFromPt = D3DXVECTOR3( 0.0f, 5.0f, -18.0f );

D3DXVECTOR3 vLookatPt = D3DXVECTOR3( 0.0f, -1.0f, 0.0f );

g_VCamera.SetViewParams( &vFromPt, &vLookatPt );

vFromPt = D3DXVECTOR3( 0.0f, 0.0f, -12.0f );

vLookatPt = D3DXVECTOR3( 0.0f, -2.0f, 1.0f );

g_LCamera.SetViewParams( &vFromPt, &vLookatPt );

#endif

#if phase3

// Initialize the spot light

g_fLightFov = D3DX_PI / 2.0f;

g_Light.Diffuse.r = 1.0f;

g_Light.Diffuse.g = 1.0f;

g_Light.Diffuse.b = 1.0f;

g_Light.Diffuse.a = 1.0f;

g_Light.Position = D3DXVECTOR3( -8.0f, -8.0f, 0.0f );

g_Light.Direction = D3DXVECTOR3( 1.0f, -1.0f, 0.0f );

D3DXVec3Normalize( ( D3DXVECTOR3* )&g_Light.Direction, ( D3DXVECTOR3* )&g_Light.Direction );

g_Light.Range = 10.0f;

g_Light.Theta = g_fLightFov / 2.0f;

g_Light.Phi = g_fLightFov / 2.0f;

#endif

// Set the callback functions

DXUTSetCallbackD3D9DeviceAcceptable( IsD3D9DeviceAcceptable );

DXUTSetCallbackD3D9DeviceCreated( OnD3D9CreateDevice );

DXUTSetCallbackD3D9DeviceReset( OnD3D9ResetDevice );

DXUTSetCallbackD3D9FrameRender( OnD3D9FrameRender );

DXUTSetCallbackD3D9DeviceLost( OnD3D9LostDevice );

DXUTSetCallbackD3D9DeviceDestroyed( OnD3D9DestroyDevice );

DXUTSetCallbackDeviceChanging( ModifyDeviceSettings );

DXUTSetCallbackMsgProc( MsgProc );

DXUTSetCallbackFrameMove( OnFrameMove );

#if phase1

DXUTSetCallbackMouse( MouseProc );

#endif

// TODO: Perform any application-level initialization here

// Initialize DXUT and create the desired Win32 window and Direct3D device for the application

DXUTInit( true, true ); // Parse the command line and show msgboxes

DXUTSetHotkeyHandling( true, true, true ); // handle the default hotkeys

DXUTSetCursorSettings( true, true ); // Show the cursor and clip it when in full screen

DXUTCreateWindow( L"3D_Shader_ShadowMap" );

DXUTCreateDevice( true, 640, 480 );

// Start the render loop

DXUTMainLoop();

// TODO: Perform any application-level cleanup here

return DXUTGetExitCode();

}

/*------------------------------------------------------------

ShadowMap.fx -- achieve shadow map

(c) Seamanj.2014/4/28

------------------------------------------------------------*/

#define SMAP_SIZE 512

#define SHADOW_EPSILON 0.00005f

float4x4 g_mWorldView;

float4x4 g_mProj;

float4x4 g_mViewToLightProj; // Transform from view space to light projection space

float4 g_vMaterial;

texture g_txScene;

texture g_txShadow;

float3 g_vLightPos; // Light position in view space

float3 g_vLightDir; // Light direction in view space

float4 g_vLightDiffuse = float4( 1.0f, 1.0f, 1.0f, 1.0f ); // Light diffuse color

float4 g_vLightAmbient = float4( 0.3f, 0.3f, 0.3f, 1.0f ); // Use an ambient light of 0.3

float g_fCosTheta; // Cosine of theta of the spot light

sampler2D g_samScene =

sampler_state

{

Texture = <g_txScene>;

MinFilter = Point;

MagFilter = Linear;

MipFilter = Linear;

};

sampler2D g_samShadow =

sampler_state

{

Texture = <g_txShadow>;

MinFilter = Point;

MagFilter = Point;

MipFilter = Point;

AddressU = Clamp;

AddressV = Clamp;

};

//-----------------------------------------------------------------------------
// Vertex Shader: VertScene
// Desc: Process vertex for scene
//-----------------------------------------------------------------------------
void VertScene( float4 iPos : POSITION,
                float3 iNormal : NORMAL,
                float2 iTex : TEXCOORD0,
                out float4 oPos : POSITION,
                out float2 Tex : TEXCOORD0,
                out float4 vPos : TEXCOORD1,
                out float3 vNormal : TEXCOORD2,
                out float4 vPosLight : TEXCOORD3 )
{
    //
    // Transform position to view space
    //
    vPos = mul( iPos, g_mWorldView );

    //
    // Transform to screen coord
    //
    oPos = mul( vPos, g_mProj );

    //
    // Compute view space normal
    //
    vNormal = mul( iNormal, (float3x3)g_mWorldView );

    //
    // Propagate texture coord
    //
    Tex = iTex;

    //
    // Transform the position to light projection space, or the
    // projection space as if the camera is looking out from
    // the spotlight.
    //
    vPosLight = mul( vPos, g_mViewToLightProj );
}

//-----------------------------------------------------------------------------
// Pixel Shader: PixScene
// Desc: Process pixel (do per-pixel lighting) for enabled scene
//-----------------------------------------------------------------------------
float4 PixScene( float2 Tex : TEXCOORD0,
                 float4 vPos : TEXCOORD1,
                 float3 vNormal : TEXCOORD2,
                 float4 vPosLight : TEXCOORD3 ) : COLOR
{
    float4 Diffuse;

    // vLight is the unit vector from the light to this pixel
    float3 vLight = normalize( vPos.xyz - g_vLightPos );

    // Compute diffuse from the light
    if( dot( vLight, g_vLightDir ) > g_fCosTheta ) // Light must face the pixel (within Theta)
    {
        // Pixel is in lit area. Find out if it's
        // in shadow using 2x2 percentage-closer filtering

        // Transform from RT (render target) space to texture space.
        float2 ShadowTexC = 0.5 * vPosLight.xy / vPosLight.w + float2( 0.5, 0.5 );
        ShadowTexC.y = 1.0f - ShadowTexC.y;

        // Depth of this pixel as seen from the light (the same z / w that PixShadow stores)
        float fDepth = vPosLight.z / vPosLight.w;

        // Transform to texel space
        float2 texelpos = SMAP_SIZE * ShadowTexC;

        // Determine the lerp amounts
        float2 lerps = frac( texelpos );

        // Read in the bilerp stamp, doing the shadow checks
        // (.r: the shadow map holds the depth in its first channel)
        float sourcevals[4];
        sourcevals[0] = (tex2D( g_samShadow, ShadowTexC ).r + SHADOW_EPSILON < fDepth)? 0.0f: 1.0f;
        sourcevals[1] = (tex2D( g_samShadow, ShadowTexC + float2(1.0/SMAP_SIZE, 0) ).r + SHADOW_EPSILON < fDepth)? 0.0f: 1.0f;
        sourcevals[2] = (tex2D( g_samShadow, ShadowTexC + float2(0, 1.0/SMAP_SIZE) ).r + SHADOW_EPSILON < fDepth)? 0.0f: 1.0f;
        sourcevals[3] = (tex2D( g_samShadow, ShadowTexC + float2(1.0/SMAP_SIZE, 1.0/SMAP_SIZE) ).r + SHADOW_EPSILON < fDepth)? 0.0f: 1.0f;

        // Lerp between the shadow values to calculate our light amount
        float LightAmount = lerp( lerp( sourcevals[0], sourcevals[1], lerps.x ),
                                  lerp( sourcevals[2], sourcevals[3], lerps.x ),
                                  lerps.y );

        // Light it
        Diffuse = ( saturate( dot( -vLight, normalize( vNormal ) ) ) * LightAmount * ( 1 - g_vLightAmbient ) + g_vLightAmbient )
                  * g_vMaterial;
    }
    else
    {
        Diffuse = g_vLightAmbient * g_vMaterial;
    }

    return tex2D( g_samScene, Tex ) * Diffuse;
}

//-----------------------------------------------------------------------------
// Vertex Shader: VertLight
// Desc: Process vertex for the light object
//-----------------------------------------------------------------------------
void VertLight( float4 iPos : POSITION,
                float3 iNormal : NORMAL,
                float2 iTex : TEXCOORD0,
                out float4 oPos : POSITION,
                out float2 Tex : TEXCOORD0 )
{
    //
    // Transform position to view space
    //
    oPos = mul( iPos, g_mWorldView );

    //
    // Transform to screen coord
    //
    oPos = mul( oPos, g_mProj );

    //
    // Propagate texture coord
    //
    Tex = iTex;
}

//-----------------------------------------------------------------------------
// Pixel Shader: PixLight
// Desc: Process pixel for the light object
//-----------------------------------------------------------------------------
float4 PixLight( float2 Tex : TEXCOORD0,
                 float4 vPos : TEXCOORD1 ) : COLOR
{
    return tex2D( g_samScene, Tex );
}

//-----------------------------------------------------------------------------
// Vertex Shader: VertShadow
// Desc: Process vertex for the shadow map
//-----------------------------------------------------------------------------
void VertShadow( float4 Pos : POSITION,
                 float3 Normal : NORMAL,
                 out float4 oPos : POSITION,
                 out float2 Depth : TEXCOORD0 )
{
    //
    // Compute the projected coordinates
    //
    oPos = mul( Pos, g_mWorldView );
    oPos = mul( oPos, g_mProj );

    //
    // Store z and w in our spare texcoord
    //
    Depth.xy = oPos.zw;
}

//-----------------------------------------------------------------------------
// Pixel Shader: PixShadow
// Desc: Process pixel for the shadow map
//-----------------------------------------------------------------------------
void PixShadow( float2 Depth : TEXCOORD0,
                out float4 Color : COLOR )
{
    //
    // Depth is z / w
    //
    Color = Depth.x / Depth.y; // Don't be misled by the output looking white: the result is valid (z / w is close to 1 over most of the depth range)

    // Alternatives for visualizing the depth (stretch [0.99, 1] to [0, 1]):
    //float a = Depth.x / Depth.y;
    //Color = a * 100 - 99;
    //Color = (1, 1, 1, a * 100 - 9); // This form (comma operator) assigns the last value to every component of Color
    //Color = float4(a * 100 - 99, a * 100 - 99, a * 100 - 99, a * 100 - 99);
}

//-----------------------------------------------------------------------------
// Technique: RenderScene
// Desc: Renders scene objects
//-----------------------------------------------------------------------------
technique RenderScene
{
    pass p0
    {
        VertexShader = compile vs_2_0 VertScene();
        PixelShader = compile ps_2_0 PixScene();
    }
}

//-----------------------------------------------------------------------------
// Technique: RenderLight
// Desc: Renders the light object
//-----------------------------------------------------------------------------
technique RenderLight
{
    pass p0
    {
        VertexShader = compile vs_2_0 VertLight();
        PixelShader = compile ps_2_0 PixLight();
    }
}

//-----------------------------------------------------------------------------
// Technique: RenderShadow
// Desc: Renders the shadow map
//-----------------------------------------------------------------------------
technique RenderShadow
{
    pass p0
    {
        VertexShader = compile vs_2_0 VertShadow();
        PixelShader = compile ps_2_0 PixShadow();
    }
}

Note: the first and third problems are both solved here, so let's focus on the second one. If SHADOW_EPSILON is changed to 0.00000f, the overall result is as follows:

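This is self-shadowing (often called shadow acne) caused by the limited precision of the shadow map. The fix, as the author quoted above suggested, is to set SHADOW_EPSILON to 0.00005f, but that introduces another problem: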

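When the light is far from the object, the shadow tends to become detached from the object (an artifact often called peter-panning).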
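Both artifacts can be reproduced with a small CPU-side model. This is a simplified 1D sketch and is not part of the original program. It makes the following assumptions:

- The receiver is a flat surface whose light-space depth rises linearly across the map. The slope `kSlope` is a made-up value.
- The shadow map is point-sampled, has SMAP_SIZE texels, and stores the receiver's own depth at each texel center.
- The shadow test is the same `stored + epsilon < depth` comparison used in `PixScene`, with a single tap and no PCF.

`WorldSpaceBias` estimates how large a fixed z / w epsilon is in world units. It assumes the light uses a D3D-style perspective projection with hypothetical near/far planes `n` and `f`.

```cpp
#include <cassert>

// Simplified 1D model; kSlope is a hypothetical value, not taken from the original program.
constexpr int kMapSize = 512;    // matches SMAP_SIZE in ShadowMap.fx
constexpr double kSlope = 0.01;  // assumed depth change of the receiver across the whole map

// Light-space depth (z / w) of a flat, tilted receiver at texture coordinate u in [0, 1).
double SurfaceDepth(double u) { return 0.5 + kSlope * u; }

// Point-sampled shadow map: each texel stores the receiver's own depth at the texel center.
double ShadowMapLookup(double u) {
    int texel = static_cast<int>(u * kMapSize);
    double center = (texel + 0.5) / kMapSize;
    return SurfaceDepth(center);
}

// Same comparison as PixScene: 0 = shadowed, 1 = lit.
double LightAmount(double u, double epsilon) {
    return (ShadowMapLookup(u) + epsilon < SurfaceDepth(u)) ? 0.0 : 1.0;
}

// Counts how many evenly spaced screen samples wrongly shadow themselves.
int CountSelfShadowed(int samples, double epsilon) {
    assert(samples > 0);
    int shadowed = 0;
    for (int i = 0; i < samples; ++i) {
        double u = (i + 0.5) / samples;
        if (LightAmount(u, epsilon) == 0.0) ++shadowed;
    }
    return shadowed;
}

// World-space distance covered by a z / w bias of `epsilon` at view-space depth z,
// assuming z / w = f / (f - n) - f * n / ((f - n) * z) (D3D-style perspective projection).
// Since d(z / w) / dz = f * n / ((f - n) * z^2), the equivalent offset grows with z^2.
double WorldSpaceBias(double epsilon, double z, double n, double f) {
    assert(n > 0.0 && f > n && z >= n);
    return epsilon * (f - n) * z * z / (f * n);
}
```

With 4096 samples (8 per texel) and an epsilon of 0, half of the samples (2048) end up shadowing themselves. This is the striped acne pattern. An epsilon of 0.00005 clears all of them, because it exceeds the largest in-texel depth error in this model, which is kSlope / (2 * 512), about 1e-5.

With the hypothetical planes n = 1 and f = 100, the same 0.00005 in z / w corresponds to about 0.005 world units at z = 10, but about 0.12 at z = 50. This is one plausible reason the shadow detaches as the light moves away.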

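The depth bias offered by the DX API only changes the object's Z value in normalized device coordinates. It does not change the value the shader writes into the shadow map. A proper solution therefore still needs further investigation, and I will add to this post once I make progress.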
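Two common directions for that investigation work entirely inside the shader, so they do not depend on the API depth bias. Neither is used in the original program. The sketch below only shows the arithmetic on the CPU, and the names and constants (`kFar`, `LinearDepth`, `SlopeScaledBias`, the bias values) are hypothetical.

- **Linear depth.** Store linear depth (view-space z divided by the light's far plane) instead of z / w. A fixed epsilon then covers the same world distance at every depth.
- **Slope-scaled bias.** Make the bias grow with the angle between the surface normal and the light direction. Surfaces facing the light get a small bias and grazing surfaces get a larger one.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

constexpr double kFar = 100.0;  // hypothetical far plane of the light's projection

// Linear depth in [0, 1]: what a PixShadow variant could write instead of z / w.
double LinearDepth(double viewZ) { return viewZ / kFar; }

// World-space distance covered by a bias in linear-depth units: constant, independent of z.
double LinearWorldBias(double epsilon) { return epsilon * kFar; }

// Slope-scaled bias: constantBias + slopeScale * tan(theta), where cos(theta) = dot(N, L).
// Clamped to maxBias because tan(theta) grows without bound at grazing angles.
double SlopeScaledBias(double nDotL, double constantBias, double slopeScale, double maxBias) {
    assert(nDotL > 0.0 && nDotL <= 1.0);  // back-facing pixels are not lit, so no bias is needed
    double sinTheta = std::sqrt(std::max(0.0, 1.0 - nDotL * nDotL));
    double tanTheta = sinTheta / nDotL;
    return std::min(constantBias + slopeScale * tanTheta, maxBias);
}
```

In a pixel shader, the computed bias would take the place of SHADOW_EPSILON in the `stored + bias < depth` test. With linear depth, both passes must compute depth the same way: the value written by the shadow pass and the value compared in `PixScene`. The slope scale and the clamp still need tuning per scene.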

