HTML5 MSE (Media Source Extensions)

2014-04-04 11:17


Reposted from: https://dvcs.w3.org/hg/html-media/raw-file/tip/media-source/media-source.html

If you wish to make comments or file bugs regarding this document in a manner that is tracked by the W3C, please submit them via our

public bug database.

report can be found in the W3C technical reports index at http://www.w3.org/TR/.

The working groups maintains a list of all bug reports that the editors have not yet tried to address. This draft highlights some of the pending issues that are still to be discussed in the working group.

No decision has been taken on the outcome of these issues including whether they are valid.

Implementors should be aware that this specification is not stable. Implementors who are not taking part in the discussions are likely to find the specification changing out from under them in incompatible ways. Vendors interested in implementing

this specification before it eventually reaches the Candidate Recommendation stage should join the mailing list mentioned below and take part in the discussions.

The following features are at risk and may be removed due to lack of implementation.

This document was published by the HTML Working Group as an Editor's Draft. If you wish to make comments regarding this document, please send them to public-html-media@w3.org(subscribe, archives).

All comments are welcome.

Publication as an Editor's Draft does not imply endorsement by the W3C Membership. This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite

this document as other than work in progress.

This document was produced by a group operating under the 5 February 2004 W3C Patent

Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group;

that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must

disclose the information in accordance with section 6 of the W3C Patent Policy.

1.1 Goals

1.2 Definitions

2. MediaSource Object

2.1 Attributes

2.2 Methods

2.3 Event Summary

2.4 Algorithms

2.4.1 Attaching to

a media element

2.4.2 Detaching from

a media element

2.4.3 Seeking

2.4.4 SourceBuffer

Monitoring

2.4.5 Changes

to selected/enabled track state

2.4.6 Duration

change

2.4.7 End of

stream algorithm

3. SourceBuffer Object

3.1 Attributes

3.2 Methods

3.3 Track Buffers

3.4 Event Summary

3.5 Algorithms

3.5.1 Segment

Parser Loop

3.5.2 Reset

Parser State

3.5.3 Append

Error Algorithm

3.5.4 Prepare

Append Algorithm

3.5.5 Buffer

Append Algorithm

3.5.6 Stream

Append Loop

3.5.7 Initialization

Segment Received

3.5.8 Coded

Frame Processing

3.5.9 Coded

Frame Removal Algorithm

3.5.10 Coded

Frame Eviction Algorithm

3.5.11 Audio

Splice Frame Algorithm

3.5.12 Audio

Splice Rendering Algorithm

3.5.13 Text

Splice Frame Algorithm

4. SourceBufferList

Object

4.1 Attributes

4.2 Methods

4.3 Event Summary

5. VideoPlaybackQuality

Object

5.1 Attributes

6. URL Object Extensions

6.1 Methods

7. HTMLMediaElement

Extensions

8. HTMLVideoElement

Extensions

8.1 Methods

9. AudioTrack

Extensions

9.1 Attributes

10. VideoTrack

Extensions

10.1 Attributes

11. TextTrack

Extensions

11.1 Attributes

12. Byte Stream

Formats

13. Examples

14. Acknowledgments

15. Revision History

A. References

A.1 Normative references

A buffering model is also included to describe how the user agent acts when different media segments are appended at different times. Byte stream specifications used with these extensions are available in the byte

stream format registry.

Allow JavaScript to construct media streams independent of how the media is fetched.

Define a splicing and buffering model that facilitates use cases like adaptive streaming, ad-insertion, time-shifting, and video editing.

Minimize the need for media parsing in JavaScript.

Leverage the browser cache as much as possible.

Provide requirements for byte stream format specifications.

Not require support for any particular media format or codec.

This specification defines:

Normative behavior for user agents to enable interoperability between user agents and web applications when processing media data.

Normative requirements to enable other specifications to define media formats to be used within this specification.

The track buffers that provide coded

frames for the

the

and the

All these tracks are associated with

in the

Append Window

A presentation timestamp range used to filter out coded

frames while appending. The append window represents a single continuous time range with a single start time and end time. Coded frames with presentation

timestamp within this range are allowed to be appended to the

coded frames outside this range are filtered out. The append window start and end times are controlled by the

respectively.

Coded Frame

A unit of media data that has a presentation timestamp, a decode

timestamp, and a coded frame duration.

Coded Frame Duration

The duration of a coded frame. For video and text, the duration indicates how long the video frame or text should be displayed. For audio,

the duration represents the sum of all the samples contained within the coded frame. For example, if an audio frame contained 441 samples @44100Hz the frame duration would be 10 milliseconds.

Coded Frame End Timestamp

The sum of a coded frame presentation

timestamp and its coded frame duration. It represents the presentation

timestamp that immediately follows the coded frame.

Coded Frame Group

A group of coded frames that are adjacent and have monotonically increasing decode

timestamps without any gaps. Discontinuities detected by the coded frame processing algorithm and

trigger the start of a new coded frame group.

Decode Timestamp

The decode timestamp indicates the latest time at which the frame needs to be decoded assuming instantaneous decoding and rendering of this and any dependant frames (this is equal to the presentation

timestamp of the earliest frame, in presentation order, that is dependant on this frame). If frames can be decoded out of presentation

order, then the decode timestamp must be present in or derivable from the byte stream. The user agent must run the end of stream

algorithm with the error parameter set to

not the case. If frames cannot be decoded out of presentation order and a decode timestamp is not present in the byte stream, then

the decode timestamp is equal to the presentation timestamp.

Displayed Frame Delay

The delay between a frame's presentation time and the actual time it was displayed, in a double-precision value in seconds & rounded to the nearest display refresh interval. This delay is always greater than or equal to zero since frames must never be displayed

before their presentation time. Non-zero delays are a sign of playback jitter and possible loss of A/V sync.

Initialization Segment

A sequence of bytes that contain all of the initialization information required to decode a sequence of media segments. This includes

codec initialization data, Track IDmappings for multiplexed segments, and timestamp offsets (e.g. edit lists).

NOTE

The byte stream format specifications in the byte

stream format registry contain format specific examples.

Media Segment

A sequence of bytes that contain packetized & timestamped media data for a portion of the media timeline. Media segments are always associated with the most recently

appended initialization segment.

NOTE

The byte stream format specifications in the byte

stream format registry contain format specific examples.

MediaSource object URL

A MediaSource object URL is a unique Blob URI [FILE-API]

created by

a

These URLs are the same as a Blob URI, except that anything in the definition of that feature that refers to File and Blob objects

is hereby extended to also apply to

The origin of the MediaSource object URL is the effective script origin of the

document that called

NOTE

For example, the origin of the MediaSource object URL affects the way that the media element is consumed

by canvas.

Parent Media Source

The parent media source of a

is the

created it.

Presentation Start Time

The presentation start time is the earliest time point in the presentation and specifies the initial playback position and earliest

possible position. All presentations created using this specification have a presentation start time of 0.

Presentation Interval

The presentation interval of a coded frame is the time interval from its presentation

timestamp to the presentation timestamp plus the coded

frame's duration. For example, if a coded frame has a presentation timestamp of 10 seconds and a coded frame duration of 100

milliseconds, then the presentation interval would be [10-10.1). Note that the start of the range is inclusive, but the end of the range is exclusive.

Presentation Order

The order that coded frames are rendered in the presentation. The presentation order is achieved by ordering coded

frames in monotonically increasing order by theirpresentation timestamps.

Presentation Timestamp

A reference to a specific time in the presentation. The presentation timestamp in a coded frame indicates when the frame must be rendered.

Random Access Point

A position in a media segment where decoding and continuous playback can begin without relying on any previous data in the segment.

For video this tends to be the location of I-frames. In the case of audio, most audio frames can be treated as a random access point. Since video tracks tend to have a more sparse distribution of random access points, the location of these points are usually

considered the random access points for multiplexed streams.

SourceBuffer byte stream format specification

The specific byte stream format specification that describes the format of the byte stream accepted by a

The byte stream format specification, for a

is selected based on the type passed to the

that created the object.

Track Description

A byte stream format specific structure that provides the Track ID, codec configuration, and other metadata for a single track. Each track

description inside a singleinitialization segment has a unique Track

ID. The user agent must run the end of stream algorithm with the error parameter set to

the Track ID is not unique within the initialization

segment .

Track ID

A Track ID is a byte stream format specific identifier that marks sections of the byte stream as being part of a specific track. The Track ID in a track

descriptionidentifies which sections of a media segment belong to that track.

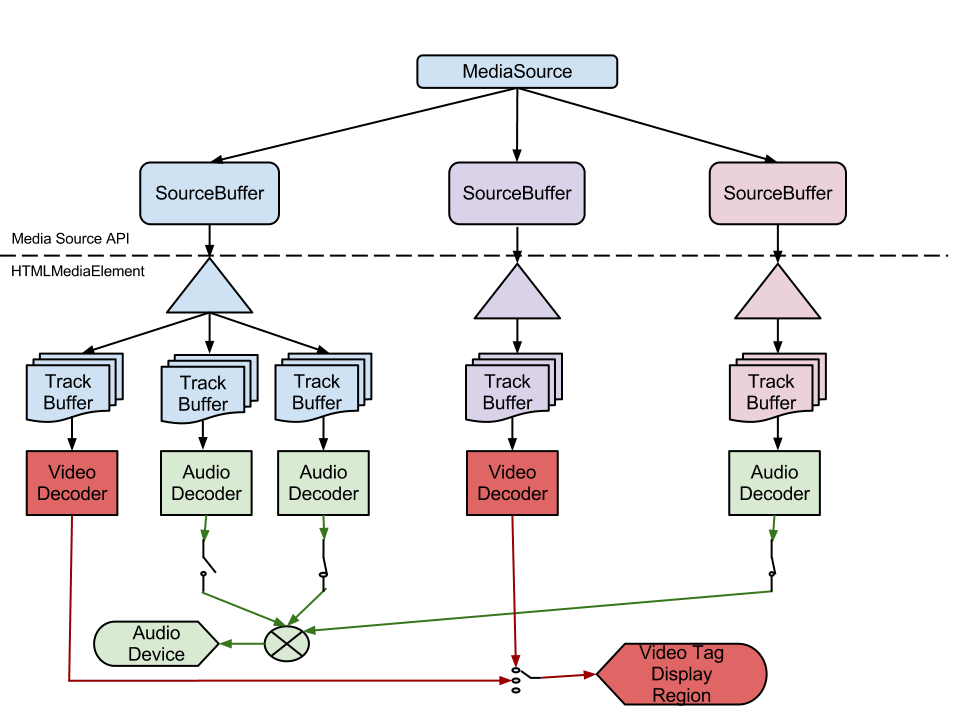

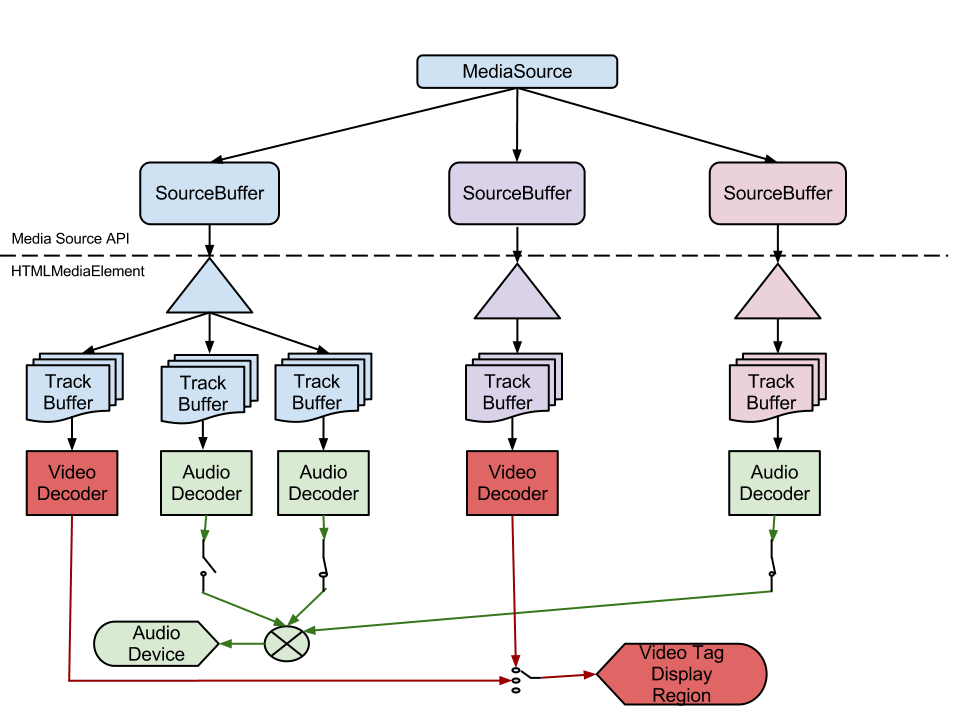

Abstract

This specification extends HTMLMediaElement to allow JavaScript to generate media streams for playback. Allowing JavaScript to generate streams facilitates a variety of use cases like adaptive streaming and time-shifting live streams.

If you wish to make comments or file bugs regarding this document in a manner that is tracked by the W3C, please submit them via our public bug database.

Status of This Document

This section describes the status of this document at the time of its publication. Other documents may supersede this document. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at http://www.w3.org/TR/.

The working group maintains a list of all bug reports that the editors have not yet tried to address. This draft highlights some of the pending issues that are still to be discussed in the working group.

No decision has been taken on the outcome of these issues including whether they are valid.

Implementors should be aware that this specification is not stable. Implementors who are not taking part in the discussions are likely to find the specification changing out from under them in incompatible ways. Vendors interested in implementing this specification before it eventually reaches the Candidate Recommendation stage should join the mailing list mentioned below and take part in the discussions.

The following features are at risk and may be removed due to lack of implementation:

totalFrameDelay

This document was published by the HTML Working Group as an Editor's Draft. If you wish to make comments regarding this document, please send them to public-html-media@w3.org (subscribe, archives).

All comments are welcome.

Publication as an Editor's Draft does not imply endorsement by the W3C Membership. This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

This document was produced by a group operating under the 5 February 2004 W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.

Table of Contents

1. Introduction

1.1 Goals

1.2 Definitions

2. MediaSource Object

2.1 Attributes

2.2 Methods

2.3 Event Summary

2.4 Algorithms

2.4.1 Attaching to a media element

2.4.2 Detaching from a media element

2.4.3 Seeking

2.4.4 SourceBuffer Monitoring

2.4.5 Changes to selected/enabled track state

2.4.6 Duration change

2.4.7 End of stream algorithm

3. SourceBuffer Object

3.1 Attributes

3.2 Methods

3.3 Track Buffers

3.4 Event Summary

3.5 Algorithms

3.5.1 Segment Parser Loop

3.5.2 Reset Parser State

3.5.3 Append Error Algorithm

3.5.4 Prepare Append Algorithm

3.5.5 Buffer Append Algorithm

3.5.6 Stream Append Loop

3.5.7 Initialization Segment Received

3.5.8 Coded Frame Processing

3.5.9 Coded Frame Removal Algorithm

3.5.10 Coded Frame Eviction Algorithm

3.5.11 Audio Splice Frame Algorithm

3.5.12 Audio Splice Rendering Algorithm

3.5.13 Text Splice Frame Algorithm

4. SourceBufferList Object

4.1 Attributes

4.2 Methods

4.3 Event Summary

5. VideoPlaybackQuality Object

5.1 Attributes

6. URL Object Extensions

6.1 Methods

7. HTMLMediaElement Extensions

8. HTMLVideoElement Extensions

8.1 Methods

9. AudioTrack Extensions

9.1 Attributes

10. VideoTrack Extensions

10.1 Attributes

11. TextTrack Extensions

11.1 Attributes

12. Byte Stream Formats

13. Examples

14. Acknowledgments

15. Revision History

A. References

A.1 Normative references

1. Introduction

This specification allows JavaScript to dynamically construct media streams for <audio> and <video>. It defines objects that allow JavaScript to pass media segments to an HTMLMediaElement [HTML5]. A buffering model is also included to describe how the user agent acts when different media segments are appended at different times. Byte stream specifications used with these extensions are available in the byte stream format registry.

1.1 Goals

This specification was designed with the following goals in mind:

- Allow JavaScript to construct media streams independent of how the media is fetched.
- Define a splicing and buffering model that facilitates use cases like adaptive streaming, ad-insertion, time-shifting, and video editing.
- Minimize the need for media parsing in JavaScript.
- Leverage the browser cache as much as possible.
- Provide requirements for byte stream format specifications.
- Not require support for any particular media format or codec.

This specification defines:

- Normative behavior for user agents to enable interoperability between user agents and web applications when processing media data.
- Normative requirements to enable other specifications to define media formats to be used within this specification.

1.2 Definitions

Active Track Buffers

The track buffers that provide coded frames for the enabled audioTracks, the selected videoTracks, and the "showing" or "hidden" textTracks. All these tracks are associated with SourceBuffer objects in the activeSourceBuffers list.
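
As a rough illustration of which tracks contribute active track buffers, the following TypeScript sketch filters a list of track records. The `SketchTrack` shape and the `activeTrackIds` helper are hypothetical simplifications for this example and are not part of the specification's IDL.

```typescript
// Hypothetical, simplified track record. The real AudioTrack, VideoTrack and
// TextTrack interfaces expose these states as `enabled`, `selected` and `mode`.
type TrackKind = "audio" | "video" | "text";
type TextMode = "disabled" | "hidden" | "showing";

interface SketchTrack {
  id: string;
  kind: TrackKind;
  enabled?: boolean;  // meaningful for audio tracks
  selected?: boolean; // meaningful for video tracks
  mode?: TextMode;    // meaningful for text tracks
}

// Returns the IDs of tracks whose track buffers count as active:
// enabled audio, selected video, and "showing" or "hidden" text tracks.
function activeTrackIds(tracks: SketchTrack[]): string[] {
  return tracks
    .filter((t) => {
      if (t.kind === "audio") return t.enabled === true;
      if (t.kind === "video") return t.selected === true;
      return t.mode === "showing" || t.mode === "hidden";
    })
    .map((t) => t.id);
}

const exampleTracks: SketchTrack[] = [
  { id: "a1", kind: "audio", enabled: true },
  { id: "a2", kind: "audio", enabled: false },
  { id: "v1", kind: "video", selected: true },
  { id: "t1", kind: "text", mode: "hidden" },
  { id: "t2", kind: "text", mode: "disabled" },
];
console.log(activeTrackIds(exampleTracks).join(", ")); // a1, v1, t1
```

A "disabled" text track and a disabled audio track are both excluded, which matches the definition above.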

Append Window

A presentation timestamp range used to filter out coded frames while appending. The append window represents a single continuous time range with a single start time and end time. Coded frames with a presentation timestamp within this range are allowed to be appended to the SourceBuffer, while coded frames outside this range are filtered out. The append window start and end times are controlled by the appendWindowStart and appendWindowEnd attributes respectively.
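
A minimal sketch of append-window filtering follows. It assumes the window is half-open, [appendWindowStart, appendWindowEnd), and it models only the presentation-timestamp test described in this definition. The full coded frame processing algorithm applies further rules that are not reproduced here. The `filterByAppendWindow` name is hypothetical.

```typescript
// Minimal frame model for this sketch; times are in seconds.
interface WindowedFrame {
  pts: number; // presentation timestamp
}

// Simplified reading of the definition: keep frames whose presentation
// timestamp lies in [appendWindowStart, appendWindowEnd). The half-open
// bound is an assumption; the full coded frame processing algorithm
// applies further rules that are not modeled here.
function filterByAppendWindow(
  input: WindowedFrame[],
  appendWindowStart: number,
  appendWindowEnd: number
): WindowedFrame[] {
  return input.filter((f) => f.pts >= appendWindowStart && f.pts < appendWindowEnd);
}

const incoming: WindowedFrame[] = [{ pts: 0 }, { pts: 1 }, { pts: 2 }, { pts: 3 }];
// Window [1, 3): only the frames starting at 1 s and 2 s are kept.
console.log(filterByAppendWindow(incoming, 1, 3).map((f) => f.pts).join(",")); // 1,2
```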

Coded Frame

A unit of media data that has a presentation timestamp, a decode timestamp, and a coded frame duration.

Coded Frame Duration

The duration of a coded frame. For video and text, the duration indicates how long the video frame or text should be displayed. For audio, the duration is the total playback time of all the samples contained within the coded frame. For example, an audio frame containing 441 samples at 44100 Hz has a duration of 10 milliseconds.
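
The audio case is simply the sample count divided by the sample rate. The small TypeScript helper below (a hypothetical name, for illustration only) reproduces the 441-sample example.

```typescript
// Duration of an audio coded frame, in milliseconds, from its sample count
// and sample rate. Multiplying before dividing keeps results such as
// 441 * 1000 / 44100 exact in floating point.
function audioFrameDurationMs(sampleCount: number, sampleRateHz: number): number {
  return (sampleCount * 1000) / sampleRateHz;
}

console.log(audioFrameDurationMs(441, 44100)); // 10
console.log(audioFrameDurationMs(960, 48000)); // 20
```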

Coded Frame End Timestamp

The sum of a coded frame's presentation timestamp and its coded frame duration. It represents the presentation timestamp that immediately follows the coded frame.

Coded Frame Group

A group of coded frames that are adjacent and have monotonically increasing decode timestamps without any gaps. Discontinuities detected by the coded frame processing algorithm and abort() calls trigger the start of a new coded frame group.
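
The sketch below shows how a sequence of appended frames might be split into coded frame groups. The discontinuity rule is an assumption: a decode timestamp that goes backwards, or that jumps ahead by more than twice the previous frame's duration, starts a new group. That rule is modeled loosely on the coded frame processing algorithm (section 3.5.8, not reproduced here). `abort()` calls are not modeled.

```typescript
interface DecodeFrame {
  dts: number;      // decode timestamp (seconds)
  duration: number; // coded frame duration (seconds)
}

// Splits frames (in append order) into coded frame groups. A new group starts
// when the decode timestamp goes backwards or jumps ahead by more than twice
// the previous frame's duration (an assumed gap threshold).
function splitIntoGroups(input: DecodeFrame[]): DecodeFrame[][] {
  const result: DecodeFrame[][] = [];
  let current: DecodeFrame[] = [];
  for (const f of input) {
    const prev = current.length > 0 ? current[current.length - 1] : undefined;
    const discontinuity =
      prev !== undefined &&
      (f.dts < prev.dts || f.dts - prev.dts > 2 * prev.duration);
    if (discontinuity) {
      result.push(current);
      current = [];
    }
    current.push(f);
  }
  if (current.length > 0) result.push(current);
  return result;
}

const appended: DecodeFrame[] = [0, 1, 2, 10, 11].map((dts) => ({ dts, duration: 1 }));
const groups = splitIntoGroups(appended);
console.log(groups.map((g) => g.map((f) => f.dts).join(" ")).join(" | ")); // 0 1 2 | 10 11
```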

Decode Timestamp

The decode timestamp indicates the latest time at which the frame needs to be decoded, assuming instantaneous decoding and rendering of this and any dependent frames (this is equal to the presentation timestamp of the earliest frame, in presentation order, that is dependent on this frame). If frames can be decoded out of presentation order, then the decode timestamp must be present in or derivable from the byte stream. The user agent must run the end of stream algorithm with the error parameter set to "decode" if this is not the case. If frames cannot be decoded out of presentation order and a decode timestamp is not present in the byte stream, then the decode timestamp is equal to the presentation timestamp.
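
The rules above can be expressed as a small decision function. In this sketch, the hypothetical `canReorder` flag says whether the format allows out-of-order decoding. Throwing an error stands in for running the end of stream algorithm with "decode". Deriving a decode timestamp from other fields in the stream is not modeled.

```typescript
interface StreamFrame {
  pts: number;  // presentation timestamp (seconds)
  dts?: number; // absent when the byte stream carries no decode timestamp
}

// Hypothetical helper mirroring the rules above. `canReorder` states whether
// frames may be decoded out of presentation order. The thrown error stands in
// for running the end of stream algorithm with error "decode".
function resolveDecodeTimestamp(frame: StreamFrame, canReorder: boolean): number {
  if (frame.dts !== undefined) return frame.dts;
  if (!canReorder) return frame.pts; // no reordering: DTS equals PTS
  throw new Error('end of stream: "decode"');
}

console.log(resolveDecodeTimestamp({ pts: 5 }, false));        // 5
console.log(resolveDecodeTimestamp({ pts: 5, dts: 4 }, true)); // 4
```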

Displayed Frame Delay

The delay between a frame's presentation time and the actual time it was displayed, expressed as a double-precision value in seconds and rounded to the nearest display refresh interval. This delay is always greater than or equal to zero, since frames must never be displayed before their presentation time. Non-zero delays are a sign of playback jitter and possible loss of A/V sync.
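
As a worked example of the rounding, the sketch below computes the delay for a 60 Hz display. The function name and parameters are hypothetical. The clamp at zero is purely defensive, because the definition already rules out early display.

```typescript
// Delay (seconds) between a frame's presentation time and when it was actually
// displayed, rounded to the nearest display refresh interval. The clamp at 0
// is defensive: per the definition, frames are never displayed early.
function displayedFrameDelay(
  presentationTime: number,
  displayedAt: number,
  refreshInterval: number
): number {
  const raw = Math.max(0, displayedAt - presentationTime);
  return Math.round(raw / refreshInterval) * refreshInterval;
}

const refresh60Hz = 1 / 60;
// Shown 20 ms late on a 60 Hz display: rounds to one refresh interval (~16.7 ms).
console.log(displayedFrameDelay(1.0, 1.02, refresh60Hz));
// Shown on time: no delay.
console.log(displayedFrameDelay(2.0, 2.0, refresh60Hz)); // 0
```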

Initialization Segment

A sequence of bytes that contains all of the initialization information required to decode a sequence of media segments. This includes codec initialization data, Track ID mappings for multiplexed segments, and timestamp offsets (e.g. edit lists).

NOTE

The byte stream format specifications in the byte stream format registry contain format-specific examples.

Media Segment

A sequence of bytes that contains packetized and timestamped media data for a portion of the media timeline. Media segments are always associated with the most recently appended initialization segment.

NOTE

The byte stream format specifications in the byte stream format registry contain format-specific examples.
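
To make "most recently appended initialization segment" concrete, the sketch below records which initialization segment each media segment would be associated with. The `SegmentRecord` type and `associateSegments` helper are hypothetical. Treating a media segment that arrives before any initialization segment as an error is an assumption of this sketch.

```typescript
// Hypothetical model of a parser's bookkeeping: each media segment is tagged
// with the most recently appended initialization segment.
type SegmentRecord =
  | { kind: "init"; id: string }
  | { kind: "media"; id: string };

function associateSegments(appendedSegments: SegmentRecord[]): Record<string, string> {
  const owner: Record<string, string> = {};
  let latestInit: string | undefined = undefined;
  for (const s of appendedSegments) {
    if (s.kind === "init") {
      latestInit = s.id;
    } else if (latestInit === undefined) {
      // Assumption: a media segment with no preceding init segment is an error.
      throw new Error(`media segment ${s.id} has no initialization segment`);
    } else {
      owner[s.id] = latestInit;
    }
  }
  return owner;
}

const mapping = associateSegments([
  { kind: "init", id: "initA" },
  { kind: "media", id: "m1" },
  { kind: "init", id: "initB" },
  { kind: "media", id: "m2" },
]);
console.log(mapping["m1"], mapping["m2"]); // initA initB
```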

MediaSource object URL

A MediaSource object URL is a unique Blob URI [FILE-API] created by createObjectURL(). It is used to attach a MediaSource object to an HTMLMediaElement.

These URLs are the same as a Blob URI, except that anything in the definition of that feature that refers to File and Blob objects is hereby extended to also apply to MediaSource objects.

The origin of the MediaSource object URL is the effective script origin of the document that called createObjectURL().

NOTE

For example, the origin of the MediaSource object URL affects the way that the media element is consumed by canvas.

Parent Media Source

The parent media source of a SourceBuffer object is the MediaSource object that created it.

Presentation Start Time

The presentation start time is the earliest time point in the presentation and specifies the initial playback position and earliest possible position. All presentations created using this specification have a presentation start time of 0.

Presentation Interval

The presentation interval of a coded frame is the time interval from its presentation timestamp to the presentation timestamp plus the coded frame's duration. For example, if a coded frame has a presentation timestamp of 10 seconds and a coded frame duration of 100 milliseconds, then the presentation interval would be [10, 10.1). Note that the start of the range is inclusive, but the end of the range is exclusive.
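
The half-open interval can be checked directly. The sketch below uses hypothetical helper names and combines the coded frame end timestamp (presentation timestamp plus duration) with the inclusive-start, exclusive-end test from the 10-second example.

```typescript
interface TimedFrame {
  pts: number;      // presentation timestamp (seconds)
  duration: number; // coded frame duration (seconds)
}

// Coded frame end timestamp: presentation timestamp + coded frame duration.
function endTimestamp(f: TimedFrame): number {
  return f.pts + f.duration;
}

// Presentation interval [pts, end): start inclusive, end exclusive.
function inPresentationInterval(f: TimedFrame, t: number): boolean {
  return t >= f.pts && t < endTimestamp(f);
}

const frame10s: TimedFrame = { pts: 10, duration: 0.1 };
console.log(inPresentationInterval(frame10s, 10));                     // true
console.log(inPresentationInterval(frame10s, 10.05));                  // true
console.log(inPresentationInterval(frame10s, endTimestamp(frame10s))); // false
```

Because the end is exclusive, a following frame that starts exactly at the end timestamp does not overlap this one.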

Presentation Order

The order in which coded frames are rendered in the presentation. The presentation order is achieved by ordering coded frames in monotonically increasing order by their presentation timestamps.
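
Presentation order can differ from decode order when frames are reordered. The sketch below uses illustrative timestamps for an I, P, B, B sequence, chosen so that each decode timestamp is no later than its presentation timestamp. It recovers presentation order by sorting on the presentation timestamp.

```typescript
interface OrderedFrame {
  label: string;
  pts: number; // presentation timestamp
  dts: number; // decode timestamp
}

// Illustrative frames in decode order: the P frame is decoded before the two
// B frames that precede it in presentation order.
const decodeOrder: OrderedFrame[] = [
  { label: "I", pts: 1, dts: 0 },
  { label: "P", pts: 4, dts: 1 },
  { label: "B1", pts: 2, dts: 2 },
  { label: "B2", pts: 3, dts: 3 },
];

// Presentation order: sort a copy by increasing presentation timestamp.
function toPresentationOrder(input: OrderedFrame[]): OrderedFrame[] {
  return input.slice().sort((a, b) => a.pts - b.pts);
}

console.log(toPresentationOrder(decodeOrder).map((f) => f.label).join(" ")); // I B1 B2 P
```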

Presentation Timestamp

A reference to a specific time in the presentation. The presentation timestamp in a coded frame indicates when the frame must be rendered.

Random Access Point

A position in a media segment where decoding and continuous playback can begin without relying on any previous data in the segment. For video, this tends to be the location of I-frames. In the case of audio, most audio frames can be treated as random access points. Since video tracks tend to have a sparser distribution of random access points, the locations of these points are usually considered the random access points for multiplexed streams.
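
A common use of random access points is choosing where decoding must start for a seek. The hypothetical helper below returns the last random access point at or before a target time. It assumes the frames are already sorted by presentation timestamp, and it is not one of the specification's algorithms.

```typescript
interface RapFrame {
  pts: number;                  // presentation timestamp (seconds)
  isRandomAccessPoint: boolean; // e.g. an I-frame for video
}

// Returns the presentation timestamp of the last random access point at or
// before `target`, or undefined if none exists. Assumes `input` is sorted by
// presentation timestamp. Hypothetical helper, not a spec algorithm.
function findRandomAccessPoint(input: RapFrame[], target: number): number | undefined {
  let best: number | undefined = undefined;
  for (const f of input) {
    if (f.pts > target) break;
    if (f.isRandomAccessPoint) best = f.pts;
  }
  return best;
}

// A video track with 0.5 s frames and a random access point every 2 seconds.
const videoFrames: RapFrame[] = [];
for (let t = 0; t < 6; t += 0.5) {
  videoFrames.push({ pts: t, isRandomAccessPoint: t % 2 === 0 });
}
console.log(findRandomAccessPoint(videoFrames, 3.7)); // 2
```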

SourceBuffer byte stream format specification

The specific byte stream format specification that describes the format of the byte stream accepted by a SourceBuffer instance. The byte stream format specification for a SourceBuffer object is selected based on the type passed to the addSourceBuffer() call that created the object.
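
The selection step can be pictured as a lookup on the MIME type with its parameters stripped. The table below is an illustrative, non-exhaustive assumption based on formats listed in the byte stream format registry. The registry itself is authoritative, and `selectByteStreamFormat` is a hypothetical helper.

```typescript
// Hypothetical lookup from the type given to addSourceBuffer() to a byte
// stream format specification. Entries are illustrative, not exhaustive.
const formatByMimeType: Record<string, string> = {
  "audio/webm": "WebM Byte Stream Format",
  "video/webm": "WebM Byte Stream Format",
  "audio/mp4": "ISO BMFF Byte Stream Format",
  "video/mp4": "ISO BMFF Byte Stream Format",
  "video/mp2t": "MPEG-2 Transport Streams Byte Stream Format",
};

function selectByteStreamFormat(type: string): string | undefined {
  // Ignore parameters such as codecs="..." and compare case-insensitively.
  const essence = type.split(";")[0].trim().toLowerCase();
  return Object.prototype.hasOwnProperty.call(formatByMimeType, essence)
    ? formatByMimeType[essence]
    : undefined;
}

console.log(selectByteStreamFormat('video/webm; codecs="vp8, vorbis"')); // WebM Byte Stream Format
```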

Track Description

A byte-stream-format-specific structure that provides the Track ID, codec configuration, and other metadata for a single track. Each track description inside a single initialization segment has a unique Track ID. The user agent must run the end of stream algorithm with the error parameter set to "decode" if the Track ID is not unique within the initialization segment.
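
The uniqueness rule amounts to a duplicate check over the track descriptions in one initialization segment. In this sketch, `TrackDescriptionSketch` and `checkTrackIds` are hypothetical. Returning "decode" stands in for the error value passed to the end of stream algorithm.

```typescript
interface TrackDescriptionSketch {
  trackId: number;
  codec: string; // hypothetical field holding a codecs string
}

// Checks that Track IDs are unique within one initialization segment.
// Returns "decode" (the end of stream error value) on a duplicate, or null.
function checkTrackIds(descriptions: TrackDescriptionSketch[]): "decode" | null {
  const seen: Record<string, boolean> = {};
  for (const d of descriptions) {
    const key = String(d.trackId);
    if (seen[key]) return "decode";
    seen[key] = true;
  }
  return null;
}

console.log(checkTrackIds([{ trackId: 1, codec: "avc1.42E01E" }, { trackId: 2, codec: "mp4a.40.2" }])); // null
console.log(checkTrackIds([{ trackId: 1, codec: "avc1.42E01E" }, { trackId: 1, codec: "mp4a.40.2" }])); // decode
```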

Track ID

A Track ID is a byte-stream-format-specific identifier that marks sections of the byte stream as being part of a specific track. The Track ID in a track description identifies which sections of a media segment belong to that track.
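
Conceptually, Track IDs let a parser demultiplex a media segment into per-track data. The sketch below groups already-parsed samples by their Track ID. The `TaggedSample` shape, which uses a byte count in place of real media data, is a hypothetical simplification.

```typescript
interface TaggedSample {
  trackId: number;
  bytes: number; // payload size; stands in for the actual media data
}

// Groups the samples of a (hypothetical, already parsed) media segment by the
// Track ID that marks them.
function demuxByTrackId(samples: TaggedSample[]): Record<string, TaggedSample[]> {
  const byTrack: Record<string, TaggedSample[]> = {};
  for (const s of samples) {
    const key = String(s.trackId);
    if (!byTrack[key]) byTrack[key] = [];
    byTrack[key].push(s);
  }
  return byTrack;
}

const demuxed = demuxByTrackId([
  { trackId: 1, bytes: 1200 },
  { trackId: 2, bytes: 300 },
  { trackId: 1, bytes: 900 },
]);
console.log(demuxed["1"].length, demuxed["2"].length); // 2 1
```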
