OpenCV (3) ML Library -> Expectation-Maximization Algorithm

2014-04-03 10:25


The expectation-maximization (EM) algorithm is used in statistical computing to find maximum likelihood (ML) or maximum a posteriori (MAP) estimates of the parameters of a probabilistic model that depends on unobservable latent variables. EM is widely used for data clustering in machine learning and computer vision.
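To make the difficulty concrete: given samples $x^{(1)},\dots,x^{(m)}$, each with an unobserved latent variable $z^{(i)}$, and parameters $\theta$, ML estimation maximizes the marginal log-likelihood $\ell(\theta)$ below. The sum over $z^{(i)}$ sits inside the logarithm, which usually rules out a closed-form maximizer. By Jensen's inequality, any distribution $Q_i$ over $z^{(i)}$ gives a lower bound, with equality when $Q_i(z^{(i)}) = p(z^{(i)} \mid x^{(i)};\theta)$; EM alternately tightens this bound (E-step) and maximizes it over $\theta$ (M-step). For MAP estimation a log-prior term $\log p(\theta)$ is added to the objective.

$$
\ell(\theta) = \sum_{i=1}^{m} \log \sum_{z^{(i)}} p\big(x^{(i)}, z^{(i)}; \theta\big)
\;\ge\; \sum_{i=1}^{m} \sum_{z^{(i)}} Q_i\big(z^{(i)}\big) \log \frac{p\big(x^{(i)}, z^{(i)}; \theta\big)}{Q_i\big(z^{(i)}\big)}
$$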

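EM alternates between two steps:

The first is the expectation (E) step: using the current parameter estimates, compute the expected log-likelihood with respect to the latent variables, which amounts to computing the posterior distribution of the latent variables given the data.

The second is the maximization (M) step: compute the parameters that maximize the expected log-likelihood obtained in the E step.

The parameter estimates found in the M step are used in the next E step, and the two steps keep alternating in this way.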

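Overall, EM proceeds as follows:

1. Initialize the distribution parameters.

2. Repeat until convergence:

E-step: given the current parameter estimates, compute the expected values (posterior distribution) of the latent variables.

M-step: given those expected values of the latent variables, re-estimate the distribution parameters so that the likelihood of the data is maximized.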
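The two-step loop above can be sketched for the simplest useful case, a one-dimensional Gaussian mixture. This is a minimal illustration, not OpenCV's implementation: `Gmm1D`, `fitGmm1D` and `predictGmm1D` are hypothetical names, initialization uses caller-supplied means with equal weights and unit variances (an assumption), and the loop stops after `maxIter` iterations or when the log-likelihood changes by less than `eps`, similar in spirit to `CV_TERMCRIT_ITER | CV_TERMCRIT_EPS`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Parameters of a K-component 1-D Gaussian mixture plus fit diagnostics.
struct Gmm1D {
    std::vector<double> weight, mean, var;
    double logLik = 0.0;  // log-likelihood of the parameters entering the last iteration
    int iterations = 0;
};

// Density of N(mu, var) at x.
inline double gaussPdf(double x, double mu, double var) {
    const double kPi = 3.14159265358979323846;
    return std::exp(-(x - mu) * (x - mu) / (2.0 * var)) / std::sqrt(2.0 * kPi * var);
}

// Hypothetical helper (not an OpenCV API).
// Step 1: start from the given means, equal weights and unit variances.
// Step 2: alternate E and M steps until maxIter iterations have run or the
//         log-likelihood changes by less than eps between iterations.
Gmm1D fitGmm1D(const std::vector<double>& x, const std::vector<double>& initMeans,
               int maxIter = 100, double eps = 1e-9) {
    const std::size_t n = x.size(), k = initMeans.size();
    Gmm1D g;
    g.mean = initMeans;
    g.weight.assign(k, 1.0 / k);
    g.var.assign(k, 1.0);
    std::vector<double> resp(n * k);  // resp[i*k + j] = P(z_i = j | x_i; theta)
    double prevLL = -INFINITY;
    for (int it = 0; it < maxIter; ++it) {
        // E-step: posterior responsibilities under the current parameters.
        double ll = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            double total = 0.0;
            for (std::size_t j = 0; j < k; ++j) {
                resp[i * k + j] = g.weight[j] * gaussPdf(x[i], g.mean[j], g.var[j]);
                total += resp[i * k + j];
            }
            assert(total > 0.0);  // every component underflowed for this point
            for (std::size_t j = 0; j < k; ++j) resp[i * k + j] /= total;
            ll += std::log(total);
        }
        // M-step: weights, means and variances maximizing the expected log-likelihood.
        for (std::size_t j = 0; j < k; ++j) {
            double nj = 0.0, sx = 0.0, sv = 0.0;
            for (std::size_t i = 0; i < n; ++i) {
                nj += resp[i * k + j];
                sx += resp[i * k + j] * x[i];
            }
            g.weight[j] = nj / n;
            g.mean[j] = sx / nj;
            for (std::size_t i = 0; i < n; ++i) {
                const double d = x[i] - g.mean[j];
                sv += resp[i * k + j] * d * d;
            }
            g.var[j] = sv / nj + 1e-6;  // small floor keeps the variance positive
        }
        g.logLik = ll;
        g.iterations = it + 1;
        if (std::fabs(ll - prevLL) < eps) break;  // converged
        prevLL = ll;
    }
    return g;
}

// Index of the component with the largest posterior for a new point.
int predictGmm1D(const Gmm1D& g, double x) {
    int best = 0;
    double bestScore = -1.0;
    for (std::size_t j = 0; j < g.mean.size(); ++j) {
        const double s = g.weight[j] * gaussPdf(x, g.mean[j], g.var[j]);
        if (s > bestScore) { bestScore = s; best = (int)j; }
    }
    return best;
}
```

For example, fitting `{-5.3, -5.1, -5.0, -4.9, -4.7, 4.7, 4.9, 5.0, 5.1, 5.3}` from initial means `{-1.0, 1.0}` should settle on means near -5 and 5 with equal weights. The sketch takes initial means as an argument; the `CvEM` class declares `init_auto` and `kmeans` helpers, which suggests its automatic start uses k-means instead.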

循环重复直到收敛 {

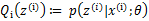

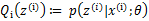

(E步)对于每一个i,计算

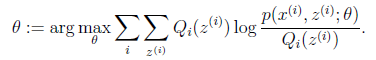

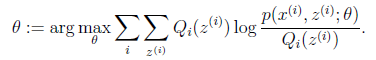

(M步)计算
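In the notation of Andrew Ng's CS229 lecture notes (assumed here: $x^{(i)}$ is the $i$-th sample, $z^{(i)}$ its latent variable, $\theta$ the parameters, and $Q_i$ a distribution over $z^{(i)}$), this loop is commonly written as:

$$
\begin{aligned}
&\text{Repeat until convergence } \{\\
&\quad \text{(E-step) for each } i:\quad Q_i\big(z^{(i)}\big) := p\big(z^{(i)} \mid x^{(i)}; \theta\big)\\
&\quad \text{(M-step)}\quad \theta := \arg\max_{\theta} \sum_i \sum_{z^{(i)}} Q_i\big(z^{(i)}\big) \log \frac{p\big(x^{(i)}, z^{(i)}; \theta\big)}{Q_i\big(z^{(i)}\big)}\\
&\}
\end{aligned}
$$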

References:

http://www.cnblogs.com/jerrylead/archive/2011/04/06/2006936.html

http://zhidao.baidu.com/link?url=12xrCFpWm1U-bYb4V8uxf3uu2ZDTFlwpDzbWe7HjOrNWXdsCQTlA466N78ZUDWP-jFAcVsTQo9JyKW28o86ng_

http://www.360doc.com/content/13/0624/13/10942270_295158557.shtml

http://fuliang.iteye.com/blog/1621633

http://wiki.opencv.org.cn/index.php/%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0%E4%B8%AD%E6%96%87%E5%8F%82%E8%80%83%E6%89%8B%E5%86%8C#CvEMParams

http://www.seas.upenn.edu/~bensapp/opencvdocs/ref/opencvref_ml.htm#ch_em

http://hi.baidu.com/darkhorse/item/cc58043eb19800159dc65e70


The EM declarations from OpenCV's ML module header:

```cpp
/****************************************************************************************\
*                              Expectation - Maximization                                *
\****************************************************************************************/

// Parameters the EM algorithm needs to estimate a Gaussian mixture model
struct CV_EXPORTS CvEMParams
{
    CvEMParams() : nclusters(10), cov_mat_type(1/*CvEM::COV_MAT_DIAGONAL*/),
        start_step(0/*CvEM::START_AUTO_STEP*/), probs(0), weights(0), means(0), covs(0)
    {
        term_crit=cvTermCriteria( CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 100, FLT_EPSILON );
    }

    CvEMParams( int _nclusters, int _cov_mat_type=1/*CvEM::COV_MAT_DIAGONAL*/,
                int _start_step=0/*CvEM::START_AUTO_STEP*/,
                CvTermCriteria _term_crit=cvTermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 100, FLT_EPSILON),
                const CvMat* _probs=0, const CvMat* _weights=0, const CvMat* _means=0, const CvMat** _covs=0 ) :
                nclusters(_nclusters), cov_mat_type(_cov_mat_type), start_step(_start_step),
                probs(_probs), weights(_weights), means(_means), covs(_covs), term_crit(_term_crit)
    {}

    int nclusters;          // number of mixture components
    int cov_mat_type;       // covariance matrix type, one of CvEM::COV_MAT_*
    int start_step;         // which step to start with, one of CvEM::START_*
    const CvMat* probs;     // initial posterior probabilities
    const CvMat* weights;   // initial mixture weights (prior probability of each component)
    const CvMat* means;     // initial means
    const CvMat** covs;     // initial covariance matrices
    // Termination criterion for the E/M iterations: EM stops after term_crit.max_iter
    // iterations, or when the model parameters change by less than term_crit.epsilon
    // between two iterations.
    CvTermCriteria term_crit;
};

// The EM model
class CV_EXPORTS CvEM : public CvStatModel
{
public:
    // Type of covariance matrices
    enum { COV_MAT_SPHERICAL=0, COV_MAT_DIAGONAL=1, COV_MAT_GENERIC=2 };

    // The initial step
    enum { START_E_STEP=1, START_M_STEP=2, START_AUTO_STEP=0 };

    CvEM();
    CvEM( const CvMat* samples, const CvMat* sample_idx=0,
          CvEMParams params=CvEMParams(), CvMat* labels=0 );
    //CvEM (CvEMParams params, CvMat * means, CvMat ** covs, CvMat * weights, CvMat * probs, CvMat * log_weight_div_det, CvMat * inv_eigen_values, CvMat** cov_rotate_mats);

    virtual ~CvEM();

    virtual bool train( const CvMat* samples, const CvMat* sample_idx=0,
                        CvEMParams params=CvEMParams(), CvMat* labels=0 );

    virtual float predict( const CvMat* sample, CvMat* probs ) const;

#ifndef SWIG
    CvEM( const cv::Mat& samples, const cv::Mat& sample_idx=cv::Mat(),
          CvEMParams params=CvEMParams(), cv::Mat* labels=0 );

    virtual bool train( const cv::Mat& samples, const cv::Mat& sample_idx=cv::Mat(),
                        CvEMParams params=CvEMParams(), cv::Mat* labels=0 );

    virtual float predict( const cv::Mat& sample, cv::Mat* probs ) const;
#endif

    virtual void clear();

    int get_nclusters() const;
    const CvMat* get_means() const;
    const CvMat** get_covs() const;
    const CvMat* get_weights() const;
    const CvMat* get_probs() const;

    inline double get_log_likelihood () const { return log_likelihood; };
    // inline const CvMat * get_log_weight_div_det () const { return log_weight_div_det; };
    // inline const CvMat * get_inv_eigen_values () const { return inv_eigen_values; };
    // inline const CvMat ** get_cov_rotate_mats () const { return cov_rotate_mats; };

protected:
    virtual void set_params( const CvEMParams& params,
                             const CvVectors& train_data );
    virtual void init_em( const CvVectors& train_data );
    virtual double run_em( const CvVectors& train_data );
    virtual void init_auto( const CvVectors& samples );
    virtual void kmeans( const CvVectors& train_data, int nclusters,
                         CvMat* labels, CvTermCriteria criteria,
                         const CvMat* means );

    CvEMParams params;
    double log_likelihood;
    CvMat* means;
    CvMat** covs;
    CvMat* weights;
    CvMat* probs;
    CvMat* log_weight_div_det;
    CvMat* inv_eigen_values;
    CvMat** cov_rotate_mats;
};
```
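For a Gaussian mixture with $K$ = `nclusters` components and samples $x_1,\dots,x_n \in \mathbb{R}^d$, the fields of `CvEMParams` map onto the standard GMM quantities: `weights` are the mixing weights $\pi_k$, `means` the $\mu_k$, `covs` the $\Sigma_k$, and `probs` the posteriors $\gamma_{ik}$ of sample $i$ belonging to component $k$. This also explains `start_step`: an E-step needs parameters ($\mu_k$, optionally $\pi_k$ and $\Sigma_k$) to start from, while an M-step needs posteriors $\gamma_{ik}$. The textbook updates are sketched below; they are not taken from OpenCV's source, and `cov_mat_type` is assumed to constrain $\Sigma_k$ to a full matrix (`COV_MAT_GENERIC`), its diagonal (`COV_MAT_DIAGONAL`), or $\sigma_k^2 I$ (`COV_MAT_SPHERICAL`), with $x_{il}$ denoting the $l$-th coordinate of $x_i$.

$$
\begin{aligned}
\text{E-step:}\quad & \gamma_{ik} = \frac{\pi_k\,\mathcal{N}(x_i \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{K} \pi_j\,\mathcal{N}(x_i \mid \mu_j, \Sigma_j)}\\[4pt]
\text{M-step:}\quad & N_k = \sum_{i=1}^{n} \gamma_{ik},\qquad \pi_k = \frac{N_k}{n},\qquad \mu_k = \frac{1}{N_k}\sum_{i=1}^{n}\gamma_{ik}\,x_i\\
\text{generic:}\quad & \Sigma_k = \frac{1}{N_k}\sum_{i=1}^{n}\gamma_{ik}\,(x_i-\mu_k)(x_i-\mu_k)^{\top}\\
\text{diagonal:}\quad & (\Sigma_k)_{ll} = \frac{1}{N_k}\sum_{i=1}^{n}\gamma_{ik}\,(x_{il}-\mu_{kl})^2\\
\text{spherical:}\quad & \Sigma_k = \sigma_k^2 I,\qquad \sigma_k^2 = \frac{1}{d\,N_k}\sum_{i=1}^{n}\gamma_{ik}\,\lVert x_i-\mu_k\rVert^2
\end{aligned}
$$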

The sample program below generates four 2-D Gaussian clusters, fits a spherical-covariance mixture with `CvEM`, and colors every image pixel by its predicted cluster:

```cpp
#include <cmath>
#include <iostream>
// Legacy OpenCV 2.x headers
#include <cv.h>
#include <cxcore.h>
#include <highgui.h>
#include <ml.h>

using namespace cv;
using namespace std;

int main( int argc, char** argv )
{
    const int N = 4;                        // number of clusters
    const int N1 = (int)sqrt((double)N);    // clusters are laid out on an N1 x N1 grid
    const CvScalar colors[] = {{{0,0,255}},{{0,255,0}},{{0,255,255}},{{255,255,0}}};
    int i, j;
    int nsamples = 100;
    CvRNG rng_state = cvRNG(-1);
    CvMat* samples = cvCreateMat( nsamples, 2, CV_32FC1 );
    CvMat* labels = cvCreateMat( nsamples, 1, CV_32SC1 );
    IplImage* img = cvCreateImage( cvSize( 500, 500 ), 8, 3 );
    float _sample[2];
    CvMat sample = cvMat( 1, 2, CV_32FC1, _sample );

    // EM model and its parameters
    CvEM em_model;
    CvEMParams params;
    CvMat samples_part;

    // form the training samples: view the nsamples x 2 matrix as nsamples x 1 with
    // 2 channels, then fill each block of nsamples/N rows with one 2-D Gaussian blob
    cvReshape( samples, samples, 2, 0 );
    for( i = 0; i < N; i++ )
    {
        CvScalar mean, sigma;
        cvGetRows( samples, &samples_part, i*nsamples/N, (i+1)*nsamples/N );
        mean = cvScalar(((i%N1)+1.)*img->width/(N1+1), ((i/N1)+1.)*img->height/(N1+1));
        sigma = cvScalar(30,30);
        cvRandArr( &rng_state, &samples_part, CV_RAND_NORMAL, mean, sigma );
    }
    cvReshape( samples, samples, 1, 0 );

    // initialize model's parameters
    params.covs = NULL;
    params.means = NULL;
    params.weights = NULL;
    params.probs = NULL;
    params.nclusters = N;
    params.cov_mat_type = CvEM::COV_MAT_SPHERICAL;
    params.start_step = CvEM::START_AUTO_STEP;
    params.term_crit.max_iter = 10;
    params.term_crit.epsilon = 0.1;
    params.term_crit.type = CV_TERMCRIT_ITER|CV_TERMCRIT_EPS;

    // cluster the data
    em_model.train( samples, 0, params, labels );

#if 0
    // This code shows how to refine the model with less-constrained parameters
    // (COV_MAT_DIAGONAL instead of COV_MAT_SPHERICAL), using the output of the
    // first stage as the input of the second.
    CvEM em_model2;
    params.cov_mat_type = CvEM::COV_MAT_DIAGONAL;
    params.start_step = CvEM::START_E_STEP;
    params.means = em_model.get_means();
    params.covs = (const CvMat**)em_model.get_covs();
    params.weights = em_model.get_weights();
    em_model2.train( samples, 0, params, labels );
    // to use em_model2, replace em_model.predict() with em_model2.predict() below
#endif

    // classify every image pixel
    cvZero( img );
    for( i = 0; i < img->height; i++ )
    {
        for( j = 0; j < img->width; j++ )
        {
            CvPoint pt = cvPoint(j, i);
            sample.data.fl[0] = (float)j;
            sample.data.fl[1] = (float)i;
            int response = cvRound(em_model.predict( &sample, NULL ));
            CvScalar c = colors[response];
            cvCircle( img, pt, 1, cvScalar(c.val[0]*0.75,c.val[1]*0.75,c.val[2]*0.75), CV_FILLED );
        }
    }

    // draw the clustered samples
    for( i = 0; i < nsamples; i++ )
    {
        CvPoint pt;
        pt.x = cvRound(samples->data.fl[i*2]);
        pt.y = cvRound(samples->data.fl[i*2+1]);
        cvCircle( img, pt, 1, colors[labels->data.i[i]], CV_FILLED );
    }

    cvNamedWindow( "EM-clustering result", 1 );
    cvShowImage( "EM-clustering result", img );
    cvWaitKey(0);

    // release all resources, including the image
    cvDestroyWindow( "EM-clustering result" );
    cvReleaseMat( &samples );
    cvReleaseMat( &labels );
    cvReleaseImage( &img );
    return 0;
}
```
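The sample program places its N Gaussian blobs on an N1 x N1 grid (N1 = sqrt(N)) and gives each blob a consecutive block of nsamples/N rows of `samples`. The hypothetical helper below, `blobLayout`, reproduces that arithmetic without OpenCV so the layout can be checked by hand: for N = 4 on a 500 x 500 image the centers come out at roughly (166.7, 166.7), (333.3, 166.7), (166.7, 333.3) and (333.3, 333.3), and each blob gets 25 rows.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Center of one generated blob and its row range [firstRow, endRow) in `samples`.
struct Blob {
    double cx, cy;
    int firstRow, endRow;
};

// Mirrors the sample's generation loop (hypothetical helper, not part of OpenCV).
std::vector<Blob> blobLayout(int N, int nsamples, int width, int height) {
    assert(N >= 1);  // at least one blob
    const int N1 = (int)std::sqrt((double)N);  // grid side length
    std::vector<Blob> blobs;
    for (int i = 0; i < N; ++i) {
        Blob b;
        // Same expressions as the sample: column i % N1, row i / N1 of the grid.
        b.cx = ((i % N1) + 1.0) * width / (N1 + 1);
        b.cy = ((i / N1) + 1.0) * height / (N1 + 1);
        b.firstRow = i * nsamples / N;
        b.endRow = (i + 1) * nsamples / N;
        blobs.push_back(b);
    }
    return blobs;
}
```

Because the center is computed in floating point while the row bounds use integer division, a value of nsamples that is not a multiple of N simply gives some blobs one row more than others.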

