Building a Fully Distributed Hadoop Environment

2014-03-07 09:18


I. Preparation

实验环境:Vmware虚拟出的3台主机,系统为CentOS_6.4_i386

用到的软件:hadoop-1.2.1-1.i386.rpm,jdk-7u9-linux-i586.rpm

主机规划:

IP地址 主机名 角色

192.168.2.22 master.flyence.tk NameNode,JobTracker

192.168.2.42 datanode.flyence.tk DataNode,TaskTracker

192.168.2.32 snn.flyence.tk SecondaryNameNode

1. hostname命令修改主机名,并修改/etc/sysconfig/network中的主机

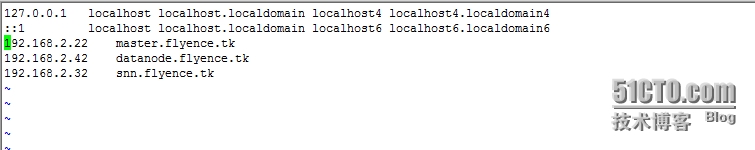

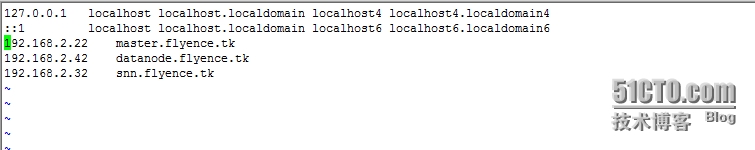

2. 在/etc/hosts中,记录3台主机的IP和主机名

3. 在3台主机上添加hadoop用户,并设定密码

4. master节点的hadoop用户能够以基于密钥的验证方式登录其他节点,以便启动进程并执行监控等额外的管理工作。

二. 安装JDK

3台主机上都要安装,以下步骤要重复三遍

编辑/etc/profile.d/java.sh,在文件中添加如下内容:

切换至hadoop用户,并执行如下命令测试jdk环境配置是否就绪

三. 安装Hadoop

集群中的每个节点都要安装Hadoop。

切换至hadoop用户,验证Hadoop是否安装完成

四. 配置Hadoop

3台主机上的配置均相同,Hadoop在启动时会根据配置文件判定当前节点的角色,并启动其相应的服务。因此以下的修改在每个节点上都要进行。

1. 修改/etc/hadoop/core-site.xml,内容如下

2. 修改/etc/hadoop/mapred-site.xml

3. 修改/etc/hadoop/hdfs-site.xml

4. 修改/etc/hadoop/masters

5. 修改/etc/hadoop/slaves

6. 在master上初始化数据节点

五. 启动Hadoop

1. 首先为执行脚本增加执行权限

2. 启动Hadoop

注意:很奇怪,用rpm格式的hadoop包,安装后的执行脚本竟然是在/usr/sbin下的,而且没有执行权限。而在启动Hadoop时,一般都是用的非系统用户,不明白为什么,用源码包安装的时候,不会有这个问题。

如果要停止Hadoop的各进程,则使用stop-all.sh脚本即可,当然也要赋予执行权限。

六. 测试Hadoop

Hadoop提供了MapReduce编程框架,其并行处理能力的发挥需要通过开发Map及Reduce程序实现。为了便于系统测试,Hadoop提供了一个单词统计的应用程序算法样例,其位于Hadoop安装目录下名称类似hadoop-examples-*.jar的文件中。除了单词统计,这个jar文件还包含了分布式运行的grep等功能的实现,这可以通过如下命令查看。

注:rpm包安装后,其算法样例位于/usr/share/hadoop/hadoop-examples-1.2.1.jar

首先创建in文件夹,put两个文件进去,然后进行测试

测试:

七. 各种错误的总结

1. map 100%,reduce 0%

一般是由于主机名和IP地址不对应造成的,仔细检查3个节点的/etc/hosts文件

2. Error: Java heap space

分配的堆内存不够,在mapred-site.xml中,将mapred.child.java.opts的值改大,改为1024试试看

3. namenode无法启动

请修改默认的临时目录,在上面的文章中有提到

4. Name node is in safe mode,或者JobTracker is in safe mode

Namenode并不会持久存储数据块与其存储位置的对应信息,因为这些信息是在HDFS集群启动由Namenode根据各Datanode发来的信息进行重建而来。这个重建过程被称为HDFS的安全模式。

这种情况下只需等待会就好

本文出自 “footage of linux” 博客,请务必保留此出处http://evanlinux.blog.51cto.com/7247558/1369613

Lab environment: three hosts virtualized with VMware, running CentOS_6.4_i386

Software used: hadoop-1.2.1-1.i386.rpm, jdk-7u9-linux-i586.rpm

Host plan:

| IP address | Hostname | Role |
|---|---|---|
| 192.168.2.22 | master.flyence.tk | NameNode, JobTracker |
| 192.168.2.42 | datanode.flyence.tk | DataNode, TaskTracker |
| 192.168.2.32 | snn.flyence.tk | SecondaryNameNode |

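1. Change the hostname with the hostname command, and also set it in /etc/sysconfig/network so that it persists across reboots.

The master node is used as the example here (log out afterwards so the shell prompt picks up the new name):

```
[root@localhost ~]# hostname master.flyence.tk
[root@localhost ~]# vim /etc/sysconfig/network
[root@localhost ~]# logout
```

Contents of /etc/sysconfig/network:

```
NETWORKING=yes
HOSTNAME=master.flyence.tk
```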

2. Record the IP addresses and hostnames of all three hosts in /etc/hosts.
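A minimal sketch of this step, assuming the IPs and hostnames from the host plan above. The helper name `write_hosts` is hypothetical; it takes the target file as an argument so it can be tried on a scratch copy first, and it skips names that already have an entry so that re-running it does not add duplicates.

```shell
# Hypothetical helper: add the three cluster hosts to a hosts file.
# Usage (as root, on each of the 3 hosts): write_hosts /etc/hosts
write_hosts() {
    target="$1"
    while read -r ip name; do
        # Skip names that already have an entry, so re-running does not add duplicates.
        if grep -q "[[:space:]]$name\$" "$target" 2>/dev/null; then
            continue
        fi
        printf '%s\t%s\n' "$ip" "$name" >> "$target"
    done <<'EOF'
192.168.2.22 master.flyence.tk
192.168.2.42 datanode.flyence.tk
192.168.2.32 snn.flyence.tk
EOF
}
```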

3. Add a hadoop user on all three hosts and set its password.

```
# useradd hadoop
# echo "hadoop" | passwd --stdin hadoop
```

4. Set up key-based SSH login from the hadoop user on master to the other nodes, so that master can start the daemons there and perform other management tasks such as monitoring.

```
[root@master ~]# su - hadoop
[hadoop@master ~]$ ssh-keygen -t rsa -P ''
[hadoop@master ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode.flyence.tk
[hadoop@master ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@snn.flyence.tk
```
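To confirm the key-based login works before running any start scripts, something like the following can be run as hadoop on master. `check_ssh` is a hypothetical helper; `-o BatchMode=yes` makes ssh fail instead of falling back to a password prompt, so a host whose key was not copied is reported as FAILED rather than hanging.

```shell
# Hypothetical helper: check passwordless SSH from this account to each given host.
# Usage (as hadoop on master): check_ssh datanode.flyence.tk snn.flyence.tk
check_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes: never prompt; fail if key-based login does not work.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$host" true; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}
```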

II. Install the JDK

Install it on all three hosts; repeat the following steps on each one.

```
[root@master ~]# rpm -ivh jdk-7u9-linux-i586.rpm
```

Edit /etc/profile.d/java.sh and add the following line:

```
export PATH=/usr/java/latest/bin:$PATH
```
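The same file can be written non-interactively. This is a sketch; `write_java_profile` is a hypothetical helper name. The quoted heredoc delimiter keeps `$PATH` literal in the file, so it is expanded at login time rather than when the file is written.

```shell
# Hypothetical helper: write the java.sh profile snippet to the given path.
write_java_profile() {
    # The quoted 'EOF' keeps $PATH literal in the file; it is expanded at login time.
    cat > "$1" <<'EOF'
export PATH=/usr/java/latest/bin:$PATH
EOF
}
# Usage, as root on each host:
#   write_java_profile /etc/profile.d/java.sh
#   . /etc/profile.d/java.sh    # load it into the current shell without logging in again
```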

Switch to the hadoop user and run the following command to check that the JDK environment is ready:

```
[hadoop@master ~]$ java -version
java version "1.7.0_09"
Java(TM) SE Runtime Environment (build 1.7.0_09-b05)
Java HotSpot(TM) Client VM (build 23.5-b02, mixed mode, sharing)
```

III. Install Hadoop

Hadoop must be installed on every node in the cluster.

```
[root@master ~]# rpm -ivh hadoop-1.2.1-1.i386.rpm
```

Switch to the hadoop user and verify that Hadoop is installed:

```
[hadoop@master ~]$ hadoop version
Hadoop 1.2.1
Subversion https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152
Compiled by mattf on Mon Jul 22 15:17:22 PDT 2013
From source with checksum 6923c86528809c4e7e6f493b6b413a9a
This command was run using /usr/share/hadoop/hadoop-core-1.2.1.jar
```

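IV. Configure Hadoop

The configuration is identical on all three hosts: at startup, Hadoop determines each node's role from the configuration files and starts the corresponding services. The following changes must therefore be made on every node.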
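Since the files must be identical everywhere, one option is to edit them once on master and copy them out. A minimal sketch, assuming root can scp to the other nodes (the key-based login set up earlier is for the hadoop user only, so scp will ask for root's password unless keys are set up for root as well). `push_conf` is a hypothetical helper, and the file list is the five files edited in this section.

```shell
# Hypothetical helper: copy the edited Hadoop config from master to the other nodes.
# Usage (as root on master): push_conf datanode.flyence.tk snn.flyence.tk
push_conf() {
    conf_dir=/etc/hadoop
    for host in "$@"; do
        # One scp per node; the trailing slash copies the files into the remote directory.
        scp "$conf_dir/core-site.xml" "$conf_dir/mapred-site.xml" \
            "$conf_dir/hdfs-site.xml" "$conf_dir/masters" "$conf_dir/slaves" \
            "root@$host:$conf_dir/" || return 1
    done
}
```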

1. Edit /etc/hadoop/core-site.xml as follows:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master.flyence.tk:8020</value>
    <final>true</final>
    <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation.</description>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/hadoop/temp</value>
    <description>A base for other temporary directories.</description>
  </property>
</configuration>
```

Note: because this changes Hadoop's temporary directory, a /hadoop directory must be created on all three nodes and the hadoop user given rwx permission on it, for example with setfacl.
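The directory preparation from the note can be sketched as follows, run as root on each node. `prepare_tmp_base` is a hypothetical helper; `setfacl -m u:<user>:rwx` adds a named-user ACL entry, which assumes the file system has ACL support. `chown hadoop /hadoop` would be a simpler alternative if ACLs are unavailable.

```shell
# Hypothetical helper: create the base directory for hadoop.tmp.dir and grant a user rwx via an ACL.
# Usage (as root, on each of the 3 nodes): prepare_tmp_base /hadoop hadoop
prepare_tmp_base() {
    base="$1"   # e.g. /hadoop (hadoop.tmp.dir above is /hadoop/temp)
    user="$2"   # e.g. hadoop
    mkdir -p "$base" || return 1
    # Add a named-user ACL entry; check afterwards with: getfacl /hadoop
    setfacl -m "u:$user:rwx" "$base"
}
```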

2. Edit /etc/hadoop/mapred-site.xml:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>master.flyence.tk:8021</value>
    <final>true</final>
    <description>The host and port that the MapReduce JobTracker runs at.</description>
  </property>
  <property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx512m</value>
  </property>
</configuration>
```

3. Edit /etc/hadoop/hdfs-site.xml. The replication factor is set to 1 because this cluster has only one DataNode:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>The actual number of replications can be specified when the file is created.</description>
  </property>
</configuration>
```

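4. Edit /etc/hadoop/masters. Despite its name, in Hadoop 1.x this file does not name the NameNode; it lists the host(s) on which start-dfs.sh starts the SecondaryNameNode:

```
snn.flyence.tk
```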

5. Edit /etc/hadoop/slaves, which lists the hosts that run a DataNode and a TaskTracker:

```
datanode.flyence.tk
```
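Both role files are plain host lists, one host per line, so they can also be written from a script. A sketch; `write_role_files` is a hypothetical helper that takes the configuration directory as an optional argument (defaulting to /etc/hadoop), which also makes it easy to try on a scratch directory.

```shell
# Hypothetical helper: write the masters and slaves files for this 3-node layout.
# Usage (as root on each node): write_role_files            # uses /etc/hadoop
write_role_files() {
    conf_dir="${1:-/etc/hadoop}"
    # masters: SecondaryNameNode host(s)
    echo "snn.flyence.tk" > "$conf_dir/masters"
    # slaves: DataNode + TaskTracker host(s)
    echo "datanode.flyence.tk" > "$conf_dir/slaves"
}
```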

6. Format the NameNode (initialize HDFS) on master:

```
[root@master ~]# hadoop namenode -format
```
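Formatting should be done only once. Re-formatting gives the NameNode a new namespace ID, and DataNodes that still hold blocks from the previous format then refuse to start (Hadoop 1.x reports incompatible namespaceIDs in the DataNode log). The hypothetical guard below formats only when no existing name directory is found; it assumes dfs.name.dir is left at its Hadoop 1.x default of ${hadoop.tmp.dir}/dfs/name, i.e. /hadoop/temp/dfs/name with the core-site.xml above.

```shell
# Hypothetical guard: run "hadoop namenode -format" only if HDFS has not been formatted yet.
# Assumes dfs.name.dir is at its Hadoop 1.x default, ${hadoop.tmp.dir}/dfs/name.
format_once() {
    name_dir="${1:-/hadoop/temp/dfs/name}"
    # A formatted name directory contains a current/ subdirectory.
    if [ -d "$name_dir/current" ]; then
        echo "already formatted: $name_dir, not formatting again" >&2
        return 1
    fi
    hadoop namenode -format
}
```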

V. Start Hadoop

1. First, add execute permission to the control scripts:

```
[root@master ~]# chmod +x /usr/sbin/start-all.sh
[root@master ~]# chmod +x /usr/sbin/start-dfs.sh
[root@master ~]# chmod +x /usr/sbin/start-mapred.sh
[root@master ~]# chmod +x /usr/sbin/slaves.sh
```

2. Start Hadoop:

```
[hadoop@master ~]$ start-all.sh
starting namenode, logging to /var/log/hadoop/hadoop/hadoop-hadoop-namenode-master.flyence.tk.out
datanode.flyence.tk: starting datanode, logging to /var/log/hadoop/hadoop/hadoop-hadoop-datanode-snn.flyence.tk.out
snn.flyence.tk: starting secondarynamenode, logging to /var/log/hadoop/hadoop/hadoop-hadoop-secondarynamenode-datanode.flyence.tk.out
starting jobtracker, logging to /var/log/hadoop/hadoop/hadoop-hadoop-jobtracker-master.flyence.tk.out
datanode.flyence.tk: starting tasktracker, logging to /var/log/hadoop/hadoop/hadoop-hadoop-tasktracker-snn.flyence.tk.out
```
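`start-all.sh` only reports that it launched each daemon; whether the daemons stayed up is easier to see with `jps`, which ships with the JDK installed in section II. A sketch of a checker, where `check_daemons` is a hypothetical helper reading `jps` output on stdin and the expected daemon names per host follow the host plan. Over ssh, jps is called by its full path here because a non-interactive ssh command may not read /etc/profile.d/java.sh.

```shell
# Hypothetical helper: verify that jps output (on stdin) lists every given daemon class.
check_daemons() {
    jps_out=$(cat)
    missing=0
    for d in "$@"; do
        # jps prints "<pid> <MainClass>"; require an exact match on the class-name column.
        if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
# On master:
#   jps | check_daemons NameNode JobTracker
# From master, for the other nodes:
#   ssh hadoop@datanode.flyence.tk /usr/java/latest/bin/jps | check_daemons DataNode TaskTracker
#   ssh hadoop@snn.flyence.tk /usr/java/latest/bin/jps | check_daemons SecondaryNameNode
```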

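Note: oddly, with the rpm package of Hadoop the control scripts are installed in /usr/sbin and lack execute permission, even though Hadoop is normally started by an unprivileged user. I am not sure why; this problem does not occur when installing from the source package.

To stop the Hadoop daemons, run the stop-all.sh script; it also needs execute permission first.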
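The chmod commands above can be collapsed into a loop that also covers the stop scripts. A sketch; `make_scripts_executable` is a hypothetical helper. Including stop-dfs.sh and stop-mapred.sh is my assumption (stop-all.sh calls them the same way start-all.sh calls the start scripts); names that are not present are skipped.

```shell
# Hypothetical helper: add the execute bit to the Hadoop control scripts shipped in /usr/sbin.
# Usage (as root on master): make_scripts_executable
make_scripts_executable() {
    sbin="${1:-/usr/sbin}"
    for s in start-all.sh start-dfs.sh start-mapred.sh \
             stop-all.sh stop-dfs.sh stop-mapred.sh slaves.sh; do
        # Skip names this package version does not ship.
        [ -f "$sbin/$s" ] && chmod +x "$sbin/$s"
    done
    return 0
}
```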

VI. Test Hadoop

Hadoop provides the MapReduce programming framework, and its parallel processing power is put to use by writing Map and Reduce programs. To make testing easier, Hadoop ships a sample word-count application in a file named something like hadoop-examples-*.jar under the Hadoop installation directory. Besides word counting, this jar also contains distributed implementations of grep and other programs, which can be listed with the following command.

Note: with the rpm package, the examples jar is located at /usr/share/hadoop/hadoop-examples-1.2.1.jar

```
[hadoop@master ~]$ hadoop jar /usr/share/hadoop/hadoop-examples-1.2.1.jar
An example program must be given as the first argument.
Valid program names are:
  aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.
  aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.
  dbcount: An example job that count the pageview counts from a database.
  grep: A map/reduce program that counts the matches of a regex in the input.
  join: A job that effects a join over sorted, equally partitioned datasets
  multifilewc: A job that counts words from several files.
  pentomino: A map/reduce tile laying program to find solutions to pentomino problems.
  pi: A map/reduce program that estimates Pi using monte-carlo method.
  randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.
  randomwriter: A map/reduce program that writes 10GB of random data per node.
  secondarysort: An example defining a secondary sort to the reduce.
  sleep: A job that sleeps at each map and reduce task.
  sort: A map/reduce program that sorts the data written by the random writer.
  sudoku: A sudoku solver.
  teragen: Generate data for the terasort
  terasort: Run the terasort
  teravalidate: Checking results of terasort
  wordcount: A map/reduce program that counts the words in the input files.
```

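First create an in directory in HDFS and put two local files (/etc/fstab and /etc/profile) into it, then run the test:

```
[hadoop@master ~]$ hadoop fs -mkdir in
[hadoop@master ~]$ hadoop fs -put /etc/fstab /etc/profile in
```

Run the wordcount job: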

```
[hadoop@master ~]$ hadoop jar /usr/share/hadoop/hadoop-examples-1.2.1.jar wordcount in out
14/03/06 11:26:42 INFO input.FileInputFormat: Total input paths to process : 2
14/03/06 11:26:42 INFO util.NativeCodeLoader: Loaded the native-hadoop library
14/03/06 11:26:42 WARN snappy.LoadSnappy: Snappy native library not loaded
14/03/06 11:26:43 INFO mapred.JobClient: Running job: job_201403061123_0001
14/03/06 11:26:44 INFO mapred.JobClient: map 0% reduce 0%
14/03/06 11:26:50 INFO mapred.JobClient: map 100% reduce 0%
14/03/06 11:26:57 INFO mapred.JobClient: map 100% reduce 33%
14/03/06 11:26:58 INFO mapred.JobClient: map 100% reduce 100%
14/03/06 11:26:59 INFO mapred.JobClient: Job complete: job_201403061123_0001
14/03/06 11:26:59 INFO mapred.JobClient: Counters: 29
14/03/06 11:26:59 INFO mapred.JobClient: Job Counters
14/03/06 11:26:59 INFO mapred.JobClient: Launched reduce tasks=1
14/03/06 11:26:59 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=7329
14/03/06 11:26:59 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0
14/03/06 11:26:59 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0
14/03/06 11:26:59 INFO mapred.JobClient: Launched map tasks=2
14/03/06 11:26:59 INFO mapred.JobClient: Data-local map tasks=2
14/03/06 11:26:59 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=8587
14/03/06 11:26:59 INFO mapred.JobClient: File Output Format Counters
14/03/06 11:26:59 INFO mapred.JobClient: Bytes Written=2076
14/03/06 11:26:59 INFO mapred.JobClient: FileSystemCounters
14/03/06 11:26:59 INFO mapred.JobClient: FILE_BYTES_READ=2948
14/03/06 11:26:59 INFO mapred.JobClient: HDFS_BYTES_READ=3139
14/03/06 11:26:59 INFO mapred.JobClient: FILE_BYTES_WRITTEN=167810
14/03/06 11:26:59 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=2076
14/03/06 11:26:59 INFO mapred.JobClient: File Input Format Counters
14/03/06 11:26:59 INFO mapred.JobClient: Bytes Read=2901
14/03/06 11:26:59 INFO mapred.JobClient: Map-Reduce Framework
14/03/06 11:26:59 INFO mapred.JobClient: Map output materialized bytes=2954
14/03/06 11:26:59 INFO mapred.JobClient: Map input records=97
14/03/06 11:26:59 INFO mapred.JobClient: Reduce shuffle bytes=2954
14/03/06 11:26:59 INFO mapred.JobClient: Spilled Records=426
14/03/06 11:26:59 INFO mapred.JobClient: Map output bytes=3717
14/03/06 11:26:59 INFO mapred.JobClient: Total committed heap usage (bytes)=336994304
14/03/06 11:26:59 INFO mapred.JobClient: CPU time spent (ms)=2090
14/03/06 11:26:59 INFO mapred.JobClient: Combine input records=360
14/03/06 11:26:59 INFO mapred.JobClient: SPLIT_RAW_BYTES=238
14/03/06 11:26:59 INFO mapred.JobClient: Reduce input records=213
14/03/06 11:26:59 INFO mapred.JobClient: Reduce input groups=210
14/03/06 11:26:59 INFO mapred.JobClient: Combine output records=213
14/03/06 11:26:59 INFO mapred.JobClient: Physical memory (bytes) snapshot=331116544
14/03/06 11:26:59 INFO mapred.JobClient: Reduce output records=210
14/03/06 11:26:59 INFO mapred.JobClient: Virtual memory (bytes) snapshot=3730141184
14/03/06 11:26:59 INFO mapred.JobClient: Map output records=360
```

Note: the out directory must not exist before the job runs; if it does, the job is rejected at submission.
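To sanity-check the job, the same count can be computed locally and compared with the HDFS output. This is a sketch under assumptions: `local_wordcount` is a hypothetical helper, it approximates the WordCount example's tokenization (java.util.StringTokenizer's default delimiters: space, tab, CR, LF, form feed), and `LC_ALL=C sort` is used to mimic the byte-order key sorting of the reduce output. The output file name pattern `part-*` is also an assumption about how the results are laid out in out/.

```shell
# Hypothetical helper: count whitespace-separated words in local files, printing "word<TAB>count".
local_wordcount() {
    # Split on space, tab, CR and form feed (plus the existing newlines), one token per line.
    cat "$@" |
        tr -s ' \t\r\f' '\n\n\n\n' |
        grep -v '^$' |
        LC_ALL=C sort |
        LC_ALL=C uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}
# Usage, as hadoop on master:
#   local_wordcount /etc/fstab /etc/profile > local.txt
#   hadoop fs -cat 'out/part-*' > hdfs.txt
#   diff local.txt hdfs.txt && echo "results match"
```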

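VII. Summary of Common Errors

1. map 100%, reduce 0%

This is usually caused by hostnames not matching their IP addresses; carefully check the /etc/hosts file on all three nodes.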
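A sketch of such a check, assuming the IPs from the host plan. `check_hosts` is a hypothetical helper that reports, for each cluster name, the address of the first hosts-file line naming it (the resolver also uses the first match). It flags the case where a name also appears on an earlier line such as `127.0.0.1 localhost master.flyence.tk`, a common variant of this mismatch.

```shell
# Hypothetical helper: verify that a hosts file maps each cluster name to the planned IP.
# Usage (on each node): check_hosts /etc/hosts; also run `hostname` to confirm the node's own name.
check_hosts() {
    hosts_file="${1:-/etc/hosts}"
    rc=0
    while read -r ip name; do
        # Address of the first non-comment line that lists $name among its names.
        found=$(awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$hosts_file")
        if [ "$found" != "$ip" ]; then
            echo "mismatch: $name -> '${found:-none}' (expected $ip)"
            rc=1
        fi
    done <<'EOF'
192.168.2.22 master.flyence.tk
192.168.2.42 datanode.flyence.tk
192.168.2.32 snn.flyence.tk
EOF
    return "$rc"
}
```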

2. Error: Java heap space

The task JVMs ran out of heap. In mapred-site.xml, raise the value of mapred.child.java.opts, for example from -Xmx512m to -Xmx1024m, and try again.
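The edit can be made with sed. A sketch; `set_child_heap` is a hypothetical helper, and it assumes, as in the mapred-site.xml above, that mapred.child.java.opts holds the only `-Xmx` setting in the file. A backup copy is kept as mapred-site.xml.bak, and the change should be applied on all three nodes so the configuration stays identical.

```shell
# Hypothetical helper: replace the -Xmx value in a Hadoop config file, keeping a .bak copy.
# Usage (as root): set_child_heap /etc/hadoop/mapred-site.xml 1024m
set_child_heap() {
    file="$1"
    new="$2"
    # Rewrite the first -Xmx<digits><unit> token on each line.
    sed -i.bak "s/-Xmx[0-9][0-9]*[kKmMgG]/-Xmx$new/" "$file"
}
```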

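3. The NameNode fails to start

Change the default temporary directory by setting hadoop.tmp.dir in core-site.xml, as described above. In Hadoop 1.x it defaults to /tmp/hadoop-${user.name}, and by default the NameNode keeps its metadata under it, so cleaning /tmp can wipe that metadata.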

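4. Name node is in safe mode, or JobTracker is in safe mode

The NameNode does not persistently store the mapping from data blocks to their storage locations; it rebuilds this information when the HDFS cluster starts, from the block reports sent by each DataNode. While this rebuild is in progress, the NameNode is in what HDFS calls safe mode, during which the file system is read-only.

In this case, simply wait a while; the NameNode leaves safe mode on its own once enough blocks have been reported.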
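Instead of guessing how long to wait, the NameNode's state can be queried and waited on with the `-safemode` option of `hadoop dfsadmin` (`get` and `wait` are both part of the Hadoop 1.x command). A sketch as a hypothetical helper; `-safemode leave` would force it off, but that skips the block-report check described above, so waiting is the safer choice.

```shell
# Hypothetical helper: show the safe mode state, then block until the NameNode leaves safe mode.
# Usage (as hadoop on master): wait_for_hdfs
wait_for_hdfs() {
    hadoop dfsadmin -safemode get
    hadoop dfsadmin -safemode wait
}
```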

This article is from the “footage of linux” blog; please retain this attribution: http://evanlinux.blog.51cto.com/7247558/1369613
