UFLDL Tutorial_Working with Large Images

2013-12-12 20:36


Feature extraction using convolution


Overview

In the previous exercises, you worked through problems which involved images that were relatively low in resolution, such as small image patches and small images of hand-written digits. In this section, we will develop methods which will allow us to scale up these methods to more realistic datasets that have larger images.

Fully Connected Networks

In the sparse autoencoder, one design choice that we had made was to "fully connect" all the hidden units to all the input units. On the relatively small images that we were working with (e.g., 8x8 patches for the sparse autoencoder assignment, 28x28 images for the MNIST dataset), it was computationally feasible to learn features on the entire image. However, with larger images (e.g., 96x96 images) learning features that span the entire image (fully connected networks) is very computationally expensive: you would have about $10^4$ input units, and assuming you want to learn 100 features, you would have on the order of $10^6$ parameters to learn. The feedforward and backpropagation computations would also be about $10^2$ times slower, compared to 28x28 images.

Locally Connected Networks

One simple solution to this problem is to restrict the connections between the hidden units and the input units, allowing each hidden unit to connect to only a small subset of the input units. Specifically, each hidden unit will connect to only a small contiguous region of pixels in the input. (For input modalities other than images, there is often also a natural way to select "contiguous groups" of input units to connect to a single hidden unit; for example, for audio, a hidden unit might be connected to only the input units corresponding to a certain time span of the input audio clip.)

This idea of having locally connected networks also draws inspiration from how the early visual system is wired up in biology. Specifically, neurons in the visual cortex have localized receptive fields (i.e., they respond only to stimuli in a certain location).

Convolutions

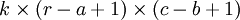

Natural images have the property of being "stationary", meaning that the statistics of one part of the image are the same as any other part. This suggests that the features that we learn at one part of the image can also be applied to other parts of the image, and we can use the same features at all locations.

More precisely, having learned features over small (say 8x8) patches sampled randomly from the larger image, we can then apply this learned 8x8 feature detector anywhere in the image. Specifically, we can take the learned 8x8 features and convolve them with the larger image, thus obtaining a different feature activation value at each location in the image.

To give a concrete example, suppose you have learned features on 8x8 patches sampled from a 96x96 image. Suppose further this was done with an autoencoder that has 100 hidden units. To get the convolved features, for every 8x8 region of the 96x96 image, that is, the 8x8 regions starting at $(1, 1), (1, 2), \ldots, (89, 89)$, you would extract the 8x8 patch and run it through your trained sparse autoencoder to get the feature activations. This would result in 100 sets of 89x89 convolved features.
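To make the 96x96 / 8x8 example concrete, here is a minimal pure-Python sketch (not the exercise's MATLAB code) that evaluates a patch-level feature $f = \sigma(Wx + b)$ at every valid top-left corner. The helper names, the list-of-lists image layout and the row-major patch flattening are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def extract_patch(image, r, c, patch_dim):
    """Flatten the patch_dim x patch_dim region with top-left corner (r, c).

    Row-major order is an arbitrary choice here; it only has to match the
    order that was used when the weights W were learned.
    """
    return [image[r + i][c + j] for i in range(patch_dim) for j in range(patch_dim)]


def convolve_features(image, patch_dim, W, b):
    """Evaluate f = sigmoid(W x + b) on every valid patch of a square image.

    image: n x n list of lists; W: k rows of length patch_dim**2; b: k biases.
    Returns out[f][r][c] of shape k x (n - patch_dim + 1) x (n - patch_dim + 1).
    """
    m = len(image) - patch_dim + 1  # valid top-left positions per axis
    out = [[[0.0] * m for _ in range(m)] for _ in range(len(W))]
    for r in range(m):
        for c in range(m):
            x = extract_patch(image, r, c, patch_dim)
            for f, (w_row, b_f) in enumerate(zip(W, b)):
                out[f][r][c] = sigmoid(sum(w * xi for w, xi in zip(w_row, x)) + b_f)
    return out


# 96x96 image, 8x8 patches; 2 features here (100 in the text) to keep the loop fast.
image = [[0.0] * 96 for _ in range(96)]
maps = convolve_features(image, 8, [[0.0] * 64 for _ in range(2)], [0.0] * 2)
print(len(maps), len(maps[0]), len(maps[0][0]))  # 2 89 89
```

Each feature yields one 89x89 map, because an 8x8 window fits at $96 - 8 + 1 = 89$ positions along each axis.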

Formally, given some large $r \times c$ images $x_{large}$, we first train a sparse autoencoder on small $a \times b$ patches $x_{small}$ sampled from these images, learning $k$ features $f = \sigma(W^{(1)} x_{small} + b^{(1)})$ (where $\sigma$ is the sigmoid function), given by the weights $W^{(1)}$ and biases $b^{(1)}$ from the visible units to the hidden units. For every $a \times b$ patch $x_s$ in the large image, we compute $f_s = \sigma(W^{(1)} x_s + b^{(1)})$, giving us $f_{convolved}$, a $k \times (r - a + 1) \times (c - b + 1)$ array of convolved features.

In the next section, we further describe how to "pool" these features together to get even better features for classification.

Pooling

Pooling: Overview

After obtaining features using convolution, we would next like to use them for classification. In theory, one could use all the extracted features with a classifier such as a softmax classifier, but this can be computationally challenging. Consider for instance images of size 96x96 pixels, and suppose we have learned 400 features over 8x8 inputs. Each convolution results in an output of size $(96 - 8 + 1) \times (96 - 8 + 1) = 7921$, and since we have 400 features, this results in a vector of $89^2 \times 400 = 3{,}168{,}400$ features per example. Learning a classifier with inputs having 3+ million features can be unwieldy, and can also be prone to over-fitting.

To address this, first recall that we decided to obtain convolved features because images have the "stationarity" property, which implies that features that are useful in one region are also likely to be useful for other regions. Thus, to describe a large image, one natural approach is to aggregate statistics of these features at various locations. For example, one could compute the mean (or max) value of a particular feature over a region of the image. These summary statistics are much lower in dimension (compared to using all of the extracted features) and can also improve results (less over-fitting). This aggregation operation is called pooling, or sometimes mean pooling or max pooling (depending on the pooling operation applied).

The following image shows how pooling is done over 4 non-overlapping regions of the image.
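A minimal pure-Python sketch of the two pooling operations named above, applied to one square feature map over disjoint regions. The function name `pool2d` and the choice to ignore leftover rows and columns that do not fill a whole region are assumptions of this sketch (the exercise's cnnPool further down also uses floor for the output size).

```python
def pool2d(fmap, pool_dim, op="mean"):
    """Pool a square feature map over disjoint pool_dim x pool_dim regions.

    op is "mean" (mean pooling) or "max" (max pooling). Rows/columns that do
    not fill a complete region are ignored, so the output is
    floor(n / pool_dim) on each side.
    """
    reducers = {"mean": lambda v: sum(v) / len(v), "max": max}
    reduce_fn = reducers[op]
    out_dim = len(fmap) // pool_dim
    pooled = []
    for pr in range(out_dim):
        row = []
        for pc in range(out_dim):
            region = [fmap[pr * pool_dim + i][pc * pool_dim + j]
                      for i in range(pool_dim) for j in range(pool_dim)]
            row.append(reduce_fn(region))
        pooled.append(row)
    return pooled


fmap = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
print(pool2d(fmap, 2, "mean"))  # [[3.5, 5.5], [11.5, 13.5]]
print(pool2d(fmap, 2, "max"))   # [[6, 8], [14, 16]]
```

Either way, the 4x4 map is summarized by four numbers, one per non-overlapping 2x2 region.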

Pooling for Invariance

If one chooses the pooling regions to be contiguous areas in the image and only pools features generated from the same (replicated) hidden units, then these pooling units will be translation invariant. This means that the same (pooled) feature will be active even when the image undergoes (small) translations. Translation-invariant features are often desirable; in many tasks (e.g., object detection, audio recognition), the label of the example (image) is the same even when the image is translated. For example, if you were to take an MNIST digit and translate it left or right, you would want your classifier to still accurately classify it as the same digit regardless of its final position.

Formal description

Formally, after obtaining our convolved features as described earlier, we decide the size of the region, say $m \times n$, to pool our convolved features over. Then, we divide our convolved features into disjoint $m \times n$ regions, and take the mean (or maximum) feature activation over these regions to obtain the pooled convolved features. These pooled features can then be used for classification.

Exercise: Convolution and Pooling


Convolution and Pooling

In this exercise you will use the features you learned on 8x8 patches sampled from images from the STL-10 dataset in the earlier exercise on linear decoders to classify images from a reduced STL-10 dataset by applying convolution and pooling. The reduced STL-10 dataset comprises 64x64 images from 4 classes (airplane, car, cat, dog).

In the file cnn_exercise.zip we have provided some starter code. You should write your code at the places indicated "YOUR CODE HERE" in the files.

For this exercise, you will need to modify cnnConvolve.m and cnnPool.m.

Dependencies

The following additional files are required for this exercise:

- A subset of the STL10 Dataset (stlSubset.zip)
- Starter Code (cnn_exercise.zip)

You will also need:

- sparseAutoencoderLinear.m or your saved features from Exercise:Learning color features with Sparse Autoencoders
- feedForwardAutoencoder.m (and related functions) from Exercise:Self-Taught Learning
- softmaxTrain.m (and related functions) from Exercise:Softmax Regression

If you have not completed the exercises listed above, we strongly suggest you complete them first.

Step 1: Load learned features

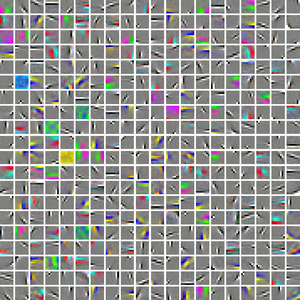

In this step, you will use the features from Exercise:Learningcolor features with Sparse Autoencoders. If you have completed that exercise, you can load the color features that were previously saved. To verify that the features are good, the visualized features should look like the following:

Step 2: Implement and test convolution and pooling

In this step, you will implement convolution and pooling, and test them on a small part of the data set to ensure that you have implemented these two functions correctly. In the next step, you will actually convolve and pool the features with the STL-10 images.

Step 2a: Implement convolution

Implement convolution, as described in feature extraction using convolution, in the function cnnConvolve in cnnConvolve.m. Implementing convolution is somewhat involved, so we will guide you through the process below.

First, we want to compute $\sigma(W x_{(r,c)} + b)$ for all valid $(r, c)$ (valid meaning that the entire 8x8 patch is contained within the image; this is as opposed to a full convolution, which allows the patch to extend outside the image, with the area outside the image assumed to be 0), where $W$ and $b$ are the learned weights and biases from the input layer to the hidden layer, and $x_{(r,c)}$ is the 8x8 patch with the upper left corner at $(r, c)$. To accomplish this, one naive method is to loop over all such patches and compute $\sigma(W x_{(r,c)} + b)$ for each of them; while this is fine in theory, it can be very slow. Hence, we usually use MATLAB's built-in convolution functions, which are well optimized.

Observe that the convolution above can be broken down into the following three small steps. First, compute $W x_{(r,c)}$ for all $(r, c)$. Next, add $b$ to all the computed values. Finally, apply the sigmoid function to the resulting values. This doesn't seem to buy you anything, since the first step still requires a loop. However, you can replace the loop in the first step with one of MATLAB's optimized convolution functions, conv2, speeding up the process significantly.

However, there are two important points to note in using conv2.

First, conv2 performs a 2-D convolution, but you have 5 "dimensions" (image number, feature number, row of image, column of image, and (color) channel of image) that you want to convolve over. Because of this, you will have to convolve each feature and image channel separately for each image, using the row and column of the image as the 2 dimensions you convolve over. This means that you will need three outer loops over the image number imageNum, feature number featureNum, and the channel number of the image channel.
Inside the three nested for-loops, you will perform a conv2 2-D convolution, using the weight matrix for the featureNum-th feature and channel-th channel, and the image matrix for the imageNum-th image.

Second, because of the mathematical definition of convolution, the feature matrix must be "flipped" before passing it to conv2. The following implementation tip explains the "flipping" of feature matrices when using MATLAB's convolution functions:

Implementation tip: Using conv2 and convn

Because the mathematical definition of convolution involves "flipping" the matrix to convolve with (reversing its rows and its columns), to use MATLAB's convolution functions, you must first "flip" the weight matrix so that when MATLAB "flips" it according to the mathematical definition the entries will be at the correct place. For example, suppose you wanted to convolve two matrices image (a large image) and W (the feature) using conv2(image, W), and W is a 3x3 matrix as below:

$$W = \begin{pmatrix} w_{11} & w_{12} & w_{13} \\ w_{21} & w_{22} & w_{23} \\ w_{31} & w_{32} & w_{33} \end{pmatrix}$$

If you use conv2(image, W), MATLAB will first "flip" W, reversing its rows and columns, before convolving W with image, as below:

$$\begin{pmatrix} w_{33} & w_{32} & w_{31} \\ w_{23} & w_{22} & w_{21} \\ w_{13} & w_{12} & w_{11} \end{pmatrix}$$

If the original layout of W was correct, after flipping, it would be incorrect. For the layout to be correct after flipping, you will have to flip W before passing it into conv2, so that after MATLAB flips W in conv2, the layout will be correct. For conv2, this means reversing the rows and columns, which can be done with flipud and fliplr, as shown below:

```matlab
% Flip W for use in conv2
W = flipud(fliplr(W));
```

Next, to each of the convolvedFeatures, you should then add b, the corresponding bias for the featureNum-th feature.

However, there is one additional complication. If we had not done any preprocessing of the input patches, you could just follow the procedure as described above, and apply the sigmoid function to obtain the convolved features, and we'd be done. However, because you preprocessed the patches before learning features on them, you must also apply the same preprocessing steps to the convolved patches to get the correct feature activations.

In particular, you did the following to the patches:

- subtract the mean patch, meanPatch, to zero the mean of the patches
- ZCA whiten using the whitening matrix ZCAWhite.

These same two steps must also be applied to the input image patches.

Taking the preprocessing steps into account, the feature activations that you should compute are $\sigma(W(T(x - \bar{x})) + b)$, where $T$ is the whitening matrix and $\bar{x}$ is the mean patch. Expanding this, you obtain $\sigma(WTx - WT\bar{x} + b)$, which suggests that you should convolve the images with $WT$ rather than $W$ as earlier, and you should add $(b - WT\bar{x})$, rather than just $b$, to convolvedFeatures, before finally applying the sigmoid function.

Step 2b: Check your convolution

We have provided some code for you to check that you have done the convolution correctly. The code randomly checks the convolved values for a number of (feature, row, column) tuples by computing the feature activations for the selected features and patches directly with the sparse autoencoder, using feedForwardAutoencoder.

Step 2c: Pooling

Implement pooling in the function cnnPool in cnnPool.m. You should implement mean pooling (i.e., averaging over feature responses) for this part.

Step 2d: Check your pooling

We have provided some code for you to check that you have done the pooling correctly. The code runs cnnPool against a test matrix to see if it produces the expected result.

Step 3: Convolve and pool with the dataset

In this step, you will convolve each of the features you learned with the full 64x64 images from the STL-10 dataset to obtain the convolved features for both the training and test sets. You will then pool the convolved features to obtain the pooled features for both training and test sets. The pooled features for the training set will be used to train your classifier, which you can then test on the test set.

Because the convolved features matrix is very large, the code provided does the convolution and pooling 50 features at a time to avoid running out of memory.

Step 4: Use pooled features for classification

In this step, you will use the pooled features to train a softmax classifier to map the pooled features to the class labels. The code in this section uses softmaxTrain from the softmax exercise to train a softmax classifier on the pooled features for 500 iterations, which should take a few minutes.

Step 5: Test classifier

Now that you have a trained softmax classifier, you can see how well it performs on the test set. These pooled features for the test set will be run through the softmax classifier, and the accuracy of the predictions will be computed. You should expect to get an accuracy of around 80%.

CS294A/CS294W Convolutional Neural Networks Exercise

```matlab
%  Instructions
%  ------------
%
%  This file contains code that helps you get started on the
%  convolutional neural networks exercise. In this exercise, you will only
%  need to modify cnnConvolve.m and cnnPool.m. You will not need to modify
%  this file.

%%======================================================================
```

STEP 0: Initialization

Here we initialize some parameters used for the exercise.

```matlab
imageDim = 64;          % image dimension
imageChannels = 3;      % number of channels (rgb, so 3)

patchDim = 8;           % patch dimension
numPatches = 50000;     % number of patches
numPatches = 1000;      % overrides the line above

visibleSize = patchDim * patchDim * imageChannels;  % number of input units, 8*8*3 = 192
outputSize = visibleSize;   % number of output units
hiddenSize = 400;           % number of hidden units

epsilon = 0.1;          % epsilon for ZCA whitening

poolDim = 19;           % dimension of pooling region

%%======================================================================
```
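As a sanity check on these settings, the sizes they imply further down can be worked out by hand. The short Python sketch below only does that arithmetic; the snake_case names simply mirror the MATLAB variables.

```python
image_dim, image_channels, patch_dim = 64, 3, 8
hidden_size, pool_dim = 400, 19

visible_size = patch_dim * patch_dim * image_channels        # 8*8*3 = 192 input units
conv_dim = image_dim - patch_dim + 1                         # 57 valid positions per axis
pooled_dim = conv_dim // pool_dim                            # floor(57 / 19) = 3
softmax_input_size = hidden_size * pooled_dim * pooled_dim   # 400*3*3 = 3600 per image

print(visible_size, conv_dim, pooled_dim, softmax_input_size)  # 192 57 3 3600
```

Since 57 = 3 × 19, the 19x19 pooling regions tile each 57x57 convolved map exactly, so the floor in the pooled size discards nothing with these values.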

STEP 1: Train a sparse autoencoder (with a linear decoder) to learn features from color patches

If you have completed the linear decoder exercise, use the features that you have obtained from that exercise, loading them into optTheta. Recall that we have to keep around the parameters used in whitening (i.e., the ZCA whitening matrix and the meanPatch).

```matlab
% --------------------------- YOUR CODE HERE --------------------------
% Train the sparse autoencoder and fill the following variables with
% the optimal parameters:

optTheta = zeros(2*hiddenSize*visibleSize+hiddenSize+visibleSize, 1);  % total number of parameters of the patch-level network
ZCAWhite = zeros(visibleSize, visibleSize);
meanPatch = zeros(visibleSize, 1);
load STL10Features.mat;

% --------------------------------------------------------------------

% Display and check to see that the features look good
W = reshape(optTheta(1:visibleSize * hiddenSize), hiddenSize, visibleSize);
b = optTheta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);

displayColorNetwork( (W*ZCAWhite)');  % explained in an earlier post

%%======================================================================
```
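The indexing above depends on how optTheta is laid out. Judging from its total length (2*hiddenSize*visibleSize + hiddenSize + visibleSize) and the two slices taken, it appears to be [W1(:); W2(:); b1; b2]; reading the middle and last blocks as the decoder's W2 and b2 is an assumption. A small Python sketch of the resulting 1-based MATLAB ranges, plus a stand-in for MATLAB's column-major reshape (both helper names are hypothetical):

```python
def theta_layout(hidden_size, visible_size):
    """1-based, inclusive (start, end) ranges of each block in
    theta = [W1(:); W2(:); b1; b2] (assumed layout, see above)."""
    hv = hidden_size * visible_size
    return {
        "W1": (1, hv),
        "W2": (hv + 1, 2 * hv),
        "b1": (2 * hv + 1, 2 * hv + hidden_size),
        "b2": (2 * hv + hidden_size + 1, 2 * hv + hidden_size + visible_size),
    }


def reshape_column_major(flat, rows, cols):
    """Like MATLAB's reshape(flat, rows, cols): fill the matrix column by column."""
    return [[flat[c * rows + r] for c in range(cols)] for r in range(rows)]


layout = theta_layout(400, 192)
for name, (start, end) in layout.items():
    print(name, start, end)
# W1 1 76800 / W2 76801 153600 / b1 153601 154000 / b2 154001 154192

print(reshape_column_major([1, 2, 3, 4, 5, 6], 2, 3))  # [[1, 3, 5], [2, 4, 6]]
```

The column-major fill is why W can be recovered with a plain reshape to hiddenSize x visibleSize: consecutive entries of theta run down a column of W.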

STEP 2: Implement and test convolution and pooling

In this step, you will implement convolution and pooling, and test them on a small part of the data set to ensure that you have implemented these two functions correctly. In the next step, you will actually convolve and pool the features with the STL10 images.

STEP 2a: Implement convolution

Implement convolution in the function cnnConvolve in cnnConvolve.m

```matlab
% Note that we have to preprocess the images in the exact same way
% we preprocessed the patches before we can obtain the feature activations.

load stlTrainSubset.mat % loads numTrainImages, trainImages, trainLabels
```

Use only the first 8 images for testing

```matlab
convImages = trainImages(:, :, :, 1:8);

% NOTE: Implement cnnConvolve in cnnConvolve.m first!
% (W and b are already in matrix/vector form at this point.)
convolvedFeatures = cnnConvolve(patchDim, hiddenSize, convImages, W, b, ZCAWhite, meanPatch);
```
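Before debugging cnnConvolve it may help to check the Step 2a algebra numerically: preprocessing a patch and then applying $(W, b)$ gives the same activations as applying $WT$ and $b - WT\bar{x}$ to the raw patch. Below is a small pure-Python check with made-up values (2 features, 3-dimensional patches); the matrices only stand in for W, ZCAWhite and meanPatch and are not real learned parameters.

```python
import math


def matvec(A, v):
    """Matrix-vector product for a list-of-lists matrix A."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def matmul(A, B):
    """Matrix product A B for list-of-lists matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Made-up toy values: 2 features, 3-dimensional patches.
W = [[0.2, -0.5, 0.1], [0.4, 0.3, -0.2]]
T = [[1.0, 0.1, 0.0], [0.1, 2.0, 0.3], [0.0, 0.3, 0.5]]  # plays the role of ZCAWhite
b = [0.05, -0.1]
x_bar = [0.3, 0.2, 0.1]                                   # plays the role of meanPatch
x = [1.0, -2.0, 0.5]                                      # a raw image patch

# Route 1: preprocess the patch (subtract the mean, whiten), then apply the feature.
white = matvec(T, [xi - mi for xi, mi in zip(x, x_bar)])
direct = [sigmoid(z + bi) for z, bi in zip(matvec(W, white), b)]

# Route 2 (as in cnnConvolve): fold the preprocessing into WT and b_mean.
WT = matmul(W, T)
b_mean = [bi - z for bi, z in zip(b, matvec(WT, x_bar))]
folded = [sigmoid(z + bm) for z, bm in zip(matvec(WT, x), b_mean)]

print(direct)
print(folded)  # equal to `direct` up to floating-point rounding
```

Route 2 is what makes conv2 usable here: the preprocessing disappears into a fixed weight matrix and bias, so only the raw image has to be convolved.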

STEP 2b: Checking your convolution

To ensure that you have convolved the features correctly, we have provided some code to compare the results of your convolution with activations from the sparse autoencoder.

```matlab
% For 1000 random points
for i = 1:1000
    featureNum = randi([1, hiddenSize]);             % pick a random feature
    imageNum = randi([1, 8]);                        % pick one of the 8 test images
    imageRow = randi([1, imageDim - patchDim + 1]);  % pick a random top-left corner
    imageCol = randi([1, imageDim - patchDim + 1]);

    % From the chosen image, extract the patch whose top-left corner is (imageRow, imageCol)
    patch = convImages(imageRow:imageRow + patchDim - 1, imageCol:imageCol + patchDim - 1, :, imageNum);
    patch = patch(:);              % flattened in column-major order
    patch = patch - meanPatch;
    patch = ZCAWhite * patch;      % whiten the patch with the same parameters as in training
    features = feedForwardAutoencoder(optTheta, hiddenSize, visibleSize, patch);  % hidden activations for this patch

    if abs(features(featureNum, 1) - convolvedFeatures(featureNum, imageNum, imageRow, imageCol)) > 1e-9
        fprintf('Convolved feature does not match activation from autoencoder\n');
        fprintf('Feature Number : %d\n', featureNum);
        fprintf('Image Number : %d\n', imageNum);
        fprintf('Image Row : %d\n', imageRow);
        fprintf('Image Column : %d\n', imageCol);
        fprintf('Convolved feature : %0.5f\n', convolvedFeatures(featureNum, imageNum, imageRow, imageCol));
        fprintf('Sparse AE feature : %0.5f\n', features(featureNum, 1));
        error('Convolved feature does not match activation from autoencoder');
    end
end

disp('Congratulations! Your convolution code passed the test.');
```

```
Congratulations! Your convolution code passed the test.
```

STEP 2c: Implement pooling

Implement pooling in the function cnnPool in cnnPool.m

```matlab
% NOTE: Implement cnnPool in cnnPool.m first!
pooledFeatures = cnnPool(poolDim, convolvedFeatures);
```

STEP 2d: Checking your pooling

To ensure that you have implemented pooling, we will use your pooling function to pool over a test matrix and check the results.

```matlab
testMatrix = reshape(1:64, 8, 8);  % 8x8 matrix of 1..64, increasing down each column

% Compute the expected mean-pooled values directly
expectedMatrix = [mean(mean(testMatrix(1:4, 1:4))) mean(mean(testMatrix(1:4, 5:8))); ...
                  mean(mean(testMatrix(5:8, 1:4))) mean(mean(testMatrix(5:8, 5:8))); ];

testMatrix = reshape(testMatrix, 1, 1, 8, 8);

% squeeze removes the singleton dimensions
pooledFeatures = squeeze(cnnPool(4, testMatrix));  % poolDim = 4: pool over 4x4 regions

if ~isequal(pooledFeatures, expectedMatrix)
    disp('Pooling incorrect');
    disp('Expected');
    disp(expectedMatrix);
    disp('Got');
    disp(pooledFeatures);
else
    disp('Congratulations! Your pooling code passed the test.');
end

%%======================================================================
```

```
Congratulations! Your pooling code passed the test.
```
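Why the expected values come out as they do: reshape(1:64, 8, 8) fills the matrix column by column, so each 4x4 block mean is easy to predict. A small Python sketch reproducing expectedMatrix with 0-based indices (`block_mean` is a hypothetical helper):

```python
# MATLAB's reshape(1:64, 8, 8) fills column by column, so the entry at
# 0-based row r, column c is r + 8*c + 1.
test_matrix = [[r + 8 * c + 1 for c in range(8)] for r in range(8)]


def block_mean(m, r0, c0, size):
    """Mean of the size x size block whose top-left corner is (r0, c0)."""
    vals = [m[r0 + i][c0 + j] for i in range(size) for j in range(size)]
    return sum(vals) / len(vals)


expected = [[block_mean(test_matrix, r0, c0, 4) for c0 in (0, 4)] for r0 in (0, 4)]
print(expected)  # [[14.5, 46.5], [18.5, 50.5]]
```

For the top-left block, for instance, the row offsets 1 to 4 average 2.5 and the column offsets 0, 8, 16, 24 average 12, giving 14.5.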

STEP 3: Convolve and pool with the dataset

In this step, you will convolve each of the features you learned with the full large images to obtain the convolved features. You will then pool the convolved features to obtain the pooled features for classification.

Because the convolved features matrix is very large, we will do the convolution and pooling 50 features at a time to avoid running out of memory. Reduce this number if necessary.

```matlab
stepSize = 50;
assert(mod(hiddenSize, stepSize) == 0, 'stepSize should divide hiddenSize');  % hiddenSize/stepSize must be an integer; here 8 passes

load stlTrainSubset.mat % loads numTrainImages, trainImages, trainLabels
trainImages = trainImages(:,:,:,1:100);   % only the first 100 images are used in this run
trainLabels = trainLabels(1:100,:);
numTrainImages = 100;

load stlTestSubset.mat  % loads numTestImages, testImages, testLabels
testImages = testImages(:,:,:,1:100);
testLabels = testLabels(1:100,:);
numTestImages = 100;

% imageDim is the size of the large images (64 here); poolDim is the side of
% each pooling region (19 here, i.e. pool over 19x19 regions).
% pooledFeaturesTrain ends up hiddenSize x numTrainImages x 3 x 3
% (400x2000x3x3 on the full 2000-image training subset).
pooledFeaturesTrain = zeros(hiddenSize, numTrainImages, ...
    floor((imageDim - patchDim + 1) / poolDim), ...
    floor((imageDim - patchDim + 1) / poolDim) );
% pooledFeaturesTest: 400x3200x3x3 on the full 3200-image test subset
pooledFeaturesTest = zeros(hiddenSize, numTestImages, ...
    floor((imageDim - patchDim + 1) / poolDim), ...
    floor((imageDim - patchDim + 1) / poolDim) );

tic();

% Extract features in batches: stepSize hidden units (features) per pass
for convPart = 1:(hiddenSize / stepSize)

    featureStart = (convPart - 1) * stepSize + 1;  % first feature in this batch
    featureEnd = convPart * stepSize;              % last feature in this batch

    fprintf('Step %d: features %d to %d\n', convPart, featureStart, featureEnd);
    Wt = W(featureStart:featureEnd, :);
    bt = b(featureStart:featureEnd);

    fprintf('Convolving and pooling train images\n');
    % 2nd argument: number of hidden units (features) in this batch
    convolvedFeaturesThis = cnnConvolve(patchDim, stepSize, ...
        trainImages, Wt, bt, ZCAWhite, meanPatch);
    pooledFeaturesThis = cnnPool(poolDim, convolvedFeaturesThis);
    pooledFeaturesTrain(featureStart:featureEnd, :, :, :) = pooledFeaturesThis;
    toc();
    clear convolvedFeaturesThis pooledFeaturesThis;  % free these large arrays before processing the test images

    fprintf('Convolving and pooling test images\n');
    convolvedFeaturesThis = cnnConvolve(patchDim, stepSize, ...
        testImages, Wt, bt, ZCAWhite, meanPatch);
    pooledFeaturesThis = cnnPool(poolDim, convolvedFeaturesThis);
    pooledFeaturesTest(featureStart:featureEnd, :, :, :) = pooledFeaturesThis;
    toc();

    clear convolvedFeaturesThis pooledFeaturesThis;

end

% You might want to save the pooled features since convolution and pooling takes a long time
save('cnnPooledFeatures.mat', 'pooledFeaturesTrain', 'pooledFeaturesTest');
toc();

%%======================================================================
```

```
Step 1: features 1 to 50
Convolving and pooling train images
Elapsed time is 6.838325 seconds.
Convolving and pooling test images
Elapsed time is 13.707608 seconds.
Step 2: features 51 to 100
Convolving and pooling train images
Elapsed time is 20.541126 seconds.
Convolving and pooling test images
Elapsed time is 27.396527 seconds.
Step 3: features 101 to 150
Convolving and pooling train images
Elapsed time is 34.230506 seconds.
Convolving and pooling test images
Elapsed time is 41.067128 seconds.
Step 4: features 151 to 200
Convolving and pooling train images
Elapsed time is 47.919055 seconds.
Convolving and pooling test images
Elapsed time is 54.761084 seconds.
Step 5: features 201 to 250
Convolving and pooling train images
Elapsed time is 61.612447 seconds.
Convolving and pooling test images
Elapsed time is 68.444096 seconds.
Step 6: features 251 to 300
Convolving and pooling train images
Elapsed time is 75.310140 seconds.
Convolving and pooling test images
Elapsed time is 82.159562 seconds.
Step 7: features 301 to 350
Convolving and pooling train images
Elapsed time is 89.018126 seconds.
Convolving and pooling test images
Elapsed time is 95.882877 seconds.
Step 8: features 351 to 400
Convolving and pooling train images
Elapsed time is 102.730060 seconds.
Convolving and pooling test images
Elapsed time is 109.564732 seconds.
Elapsed time is 109.898775 seconds.
```
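The reason for processing 50 features at a time is memory. A back-of-the-envelope Python sketch, assuming 8-byte doubles (MATLAB's default numeric type), 57x57 convolved maps, and a 2000-image training subset:

```python
BYTES_PER_DOUBLE = 8  # MATLAB arrays are double precision by default


def convolved_bytes(num_features, num_images, conv_dim):
    """Size of a numFeatures x numImages x convDim x convDim double array."""
    return num_features * num_images * conv_dim * conv_dim * BYTES_PER_DOUBLE


conv_dim = 64 - 8 + 1  # 57
all_at_once = convolved_bytes(400, 2000, conv_dim)
one_batch = convolved_bytes(50, 2000, conv_dim)
print(f"all 400 features: {all_at_once / 1e9:.1f} GB, 50 features: {one_batch / 1e9:.1f} GB")

# The (featureStart, featureEnd) pairs visited by the batching loop, 1-based.
step_size = 50
batches = [((p - 1) * step_size + 1, p * step_size) for p in range(1, 400 // step_size + 1)]
print(batches[0], batches[-1], len(batches))  # (1, 50) (351, 400) 8
```

Roughly 20.8 GB versus 2.6 GB of convolved features; with the 100-image subsets used in the run above, both figures shrink by a factor of 20.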

STEP 4: Use pooled features for classification

Now, you will use your pooled features to train a softmax classifier, using softmaxTrain from the softmax exercise. Training the softmax classifier for 1000 iterations should take less than 10 minutes.

```matlab
% Add the path to your softmax solution, if necessary
% addpath /path/to/solution/

% Setup parameters for softmax
softmaxLambda = 1e-4;   % weight decay (penalty) coefficient
numClasses = 4;
% Reshape the pooledFeatures to form an input vector for softmax.
% permute reorders the dimensions so that the image index comes last;
% numel(pooledFeaturesTrain) / numTrainImages is the length of each image's feature vector.
softmaxX = permute(pooledFeaturesTrain, [1 3 4 2]);
softmaxX = reshape(softmaxX, numel(pooledFeaturesTrain) / numTrainImages, ...
    numTrainImages);
softmaxY = trainLabels;

options = struct;
options.maxIter = 200;
% The first argument is inputSize
softmaxModel = softmaxTrain(numel(pooledFeaturesTrain) / numTrainImages, ...
    numClasses, softmaxLambda, softmaxX, softmaxY, options);

%%======================================================================
```
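What permute(pooledFeaturesTrain, [1 3 4 2]) followed by reshape does, sketched in Python on nested lists (`softmax_inputs` is a hypothetical helper, and square pooled maps are assumed): each image becomes one vector of length features × pooled rows × pooled columns (400 × 3 × 3 = 3600 here), with the feature index varying fastest because MATLAB reshapes in column-major order.

```python
def softmax_inputs(pooled):
    """pooled[f][n][pr][pc] -> one flat feature vector per image n.

    Mirrors permute(pooled, [1 3 4 2]) then a column-major reshape to
    (F*P*P) x N, assuming square P x P pooled maps: inside each vector the
    feature index f varies fastest, then the pooled row pr, then the pooled
    column pc.
    """
    F, N, P = len(pooled), len(pooled[0]), len(pooled[0][0])
    return [[pooled[f][n][pr][pc] for pc in range(P) for pr in range(P) for f in range(F)]
            for n in range(N)]


# Tiny example: 2 features, 3 images, 2x2 pooled maps; each entry records its own indices.
pooled = [[[[(f, n, pr, pc) for pc in range(2)] for pr in range(2)] for n in range(3)]
          for f in range(2)]
vectors = softmax_inputs(pooled)
print(len(vectors), len(vectors[0]))  # 3 8
print(vectors[1][:3])                 # [(0, 1, 0, 0), (1, 1, 0, 0), (0, 1, 1, 0)]
```

The particular ordering does not matter to the softmax classifier as long as training and test features are flattened the same way, which the code ensures by using the same permute/reshape again in STEP 5.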

STEP 5: Test classifier

Now you will test your trained classifier against the test images

```matlab
softmaxX = permute(pooledFeaturesTest, [1 3 4 2]);
softmaxX = reshape(softmaxX, numel(pooledFeaturesTest) / numTestImages, numTestImages);
softmaxY = testLabels;

[pred] = softmaxPredict(softmaxModel, softmaxX);
acc = (pred(:) == softmaxY(:));
acc = sum(acc) / size(acc, 1);
fprintf('Accuracy: %2.3f%%\n', acc * 100);  % prediction accuracy

% You should expect to get an accuracy of around 80% on the test images.
```

```matlab
function convolvedFeatures = cnnConvolve(patchDim, numFeatures, images, W, b, ZCAWhite, meanPatch)
%cnnConvolve Returns the convolution of the features given by W and b with
%the given images
%
% Parameters:
%  patchDim - patch (feature) dimension
%  numFeatures - number of features
%  images - large images to convolve with, matrix in the form
%           images(r, c, channel, image number)
%  W, b - W, b for features from the sparse autoencoder
%  ZCAWhite, meanPatch - ZCAWhitening and meanPatch matrices used for
%                        preprocessing
%
% Returns:
%  convolvedFeatures - matrix of convolved features in the form
%                      convolvedFeatures(featureNum, imageNum, imageRow, imageCol)

patchSize = patchDim*patchDim;
assert(numFeatures == size(W,1), 'W should have numFeatures rows');
numImages = size(images, 4);      % size of dimension 4: number of images
imageDim = size(images, 1);       % size of dimension 1: number of image rows
imageChannels = size(images, 3);  % size of dimension 3: number of channels
assert(patchSize*imageChannels == size(W,2), 'W should have patchSize*imageChannels cols');

% Instructions:
%   Convolve every feature with every large image here to produce the
%   numFeatures x numImages x (imageDim - patchDim + 1) x (imageDim - patchDim + 1)
%   matrix convolvedFeatures, such that
%   convolvedFeatures(featureNum, imageNum, imageRow, imageCol) is the
%   value of the convolved featureNum feature for the imageNum image over
%   the region (imageRow, imageCol) to (imageRow + patchDim - 1, imageCol + patchDim - 1)
%
% Expected running times:
%   Convolving with 100 images should take less than 3 minutes
%   Convolving with 5000 images should take around an hour
%   (So to save time when testing, you should convolve with fewer images, as
%   described earlier)

% -------------------- YOUR CODE HERE --------------------
% Precompute the matrices that will be used during the convolution. Recall
% that you need to take into account the whitening and mean subtraction
% steps

WT = W*ZCAWhite;            % effective weights applied to the raw (unwhitened) input
b_mean = b - WT*meanPatch;  % bias correction because the raw input is not mean-subtracted

% --------------------------------------------------------

convolvedFeatures = zeros(numFeatures, numImages, imageDim - patchDim + 1, imageDim - patchDim + 1);
for imageNum = 1:numImages
  for featureNum = 1:numFeatures

    % convolution of image with feature matrix for each channel
    convolvedImage = zeros(imageDim - patchDim + 1, imageDim - patchDim + 1);
    for channel = 1:imageChannels

      % Obtain the feature (patchDim x patchDim) needed during the convolution
      % ---- YOUR CODE HERE ----
      offset = (channel-1)*patchSize;
      feature = reshape(WT(featureNum,offset+1:offset+patchSize), patchDim, patchDim);  % this channel's weight patch

      % Flip the feature matrix because of the definition of convolution, as explained later
      % feature = flipud(fliplr(squeeze(feature)));
      feature = flipud(fliplr(feature));

      % Obtain the image
      %im = squeeze(images(:, :, channel, imageNum));
      im = images(:, :, channel, imageNum);  % one channel of one image

      % Convolve "feature" with "im", adding the result to convolvedImage
      % be sure to do a 'valid' convolution
      % ---- YOUR CODE HERE ----
      convolvedoneChannel = conv2(im, feature, 'valid');
      % Summing over channels is valid: W*x on the stacked 3-channel patch
      % equals the sum of the per-channel dot products.
      convolvedImage = convolvedImage + convolvedoneChannel;
      % ------------------------

    end

    % Subtract the bias unit (correcting for the mean subtraction as well)
    % Then, apply the sigmoid function to get the hidden activation
    % ---- YOUR CODE HERE ----
    convolvedImage = sigmoid(convolvedImage+b_mean(featureNum));
    % ------------------------

    % The convolved feature is the sum of the convolved values for all channels
    convolvedFeatures(featureNum, imageNum, :, :) = convolvedImage;
  end
end

end

function sigm = sigmoid(x)
  sigm = 1./(1+exp(-x));
end
```
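The "flip" in cnnConvolve is easiest to see on a toy example. The pure-Python sketch below (hypothetical helpers `conv2_valid`, `correlate_valid` and `flip`; not MATLAB's implementation) defines a 'valid' 2-D convolution by its mathematical definition and checks that convolving with a pre-flipped kernel reproduces the sliding dot product $W x_{(r,c)}$ that the feature detector is meant to compute.

```python
def conv2_valid(image, kernel):
    """2-D convolution by definition, 'valid' region only: the kernel is
    applied rotated by 180 degrees (rows and columns reversed)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[kh - 1 - a][kw - 1 - b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


def correlate_valid(image, kernel):
    """Sliding dot product of the kernel with each valid patch (no flip)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


def flip(kernel):
    """Equivalent of flipud(fliplr(kernel)): reverse rows and columns."""
    return [row[::-1] for row in kernel[::-1]]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
w = [[1, 0],
     [0, 2]]

print(correlate_valid(image, w))    # [[11, 14], [20, 23]]  what W x should give
print(conv2_valid(image, w))        # [[7, 10], [16, 19]]   unflipped: wrong layout
print(conv2_valid(image, flip(w)))  # [[11, 14], [20, 23]]  pre-flipped: correct
```

The two flips cancel, which is exactly why cnnConvolve applies flipud(fliplr(...)) before calling conv2.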

```matlab
function pooledFeatures = cnnPool(poolDim, convolvedFeatures)
%cnnPool Pools the given convolved features
%
% Parameters:
%  poolDim - dimension of pooling region
%  convolvedFeatures - convolved features to pool (as given by cnnConvolve)
%                      convolvedFeatures(featureNum, imageNum, imageRow, imageCol)
%
% Returns:
%  pooledFeatures - matrix of pooled features in the form
%                   pooledFeatures(featureNum, imageNum, poolRow, poolCol)
%

numImages = size(convolvedFeatures, 2);     % number of images
numFeatures = size(convolvedFeatures, 1);   % number of features
convolvedDim = size(convolvedFeatures, 3);  % number of rows of each convolved map

resultDim = floor(convolvedDim / poolDim);
pooledFeatures = zeros(numFeatures, numImages, resultDim, resultDim);

% -------------------- YOUR CODE HERE --------------------
% Instructions:
%   Now pool the convolved features in regions of poolDim x poolDim,
%   to obtain the
%   numFeatures x numImages x (convolvedDim/poolDim) x (convolvedDim/poolDim)
%   matrix pooledFeatures, such that
%   pooledFeatures(featureNum, imageNum, poolRow, poolCol) is the
%   value of the featureNum feature for the imageNum image pooled over the
%   corresponding (poolRow, poolCol) pooling region
%   (see http://ufldl/wiki/index.php/Pooling )
%
%   Use mean pooling here.
% -------------------- YOUR CODE HERE --------------------

for imageNum = 1:numImages
  for featureNum = 1:numFeatures
    for poolRow = 1:resultDim
      offsetRow = 1+(poolRow-1)*poolDim;
      for poolCol = 1:resultDim
        offsetCol = 1+(poolCol-1)*poolDim;
        patch = convolvedFeatures(featureNum,imageNum,offsetRow:offsetRow+poolDim-1,...
            offsetCol:offsetCol+poolDim-1);  % extract one pooling region
        pooledFeatures(featureNum,imageNum,poolRow,poolCol) = mean(patch(:));  % mean pooling
      end
    end
  end
end

end
```
