Hadoop 1.0.2 Installation and Usage: Single-Node Mode (Hadoop_1)

2012-05-15 19:13


Once HDFS is installed, open its status page in a browser (IE was used here) to check disk usage: http://192.168.33.10:50070/dfshealth.jsp


step 1. Add a user for Hadoop, and remember the password you set

$ sudo addgroup hadoop
$ sudo adduser --ingroup hadoop hduser

step 2. Install and configure ssh

Hadoop communicates over ssh, so first install an SSH server.

$ sudo apt-get install ssh

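Next, set up password-less login. After running the su command, enter the password you set in step 1.

$ su - hduser

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ ssh localhost

These commands generate the key used for SSH. When they finish, log in to confirm that no password is requested. (The first login asks you to confirm the host key; from the second login on you go straight into the system.)

~$ ssh localhost
~$ exit
~$ ssh localhost
~$ exit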
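The `cat ... >> authorized_keys` step above appends the key every time it is run, so repeating the tutorial leaves duplicate entries. A minimal sketch of an idempotent variant follows; the function name `ensure_authorized_key` is hypothetical (it is not part of OpenSSH), and the sketch assumes the public key file holds a single line.

```shell
#!/bin/sh
# Append a public key to an authorized_keys file only if that exact
# line is not already present, then restrict the file to its owner.
# ensure_authorized_key is a hypothetical helper, not an OpenSSH tool.
ensure_authorized_key() {
    pub_file=$1
    auth_file=$2
    if [ ! -r "$pub_file" ]; then
        echo "missing key: $pub_file" >&2
        return 1
    fi
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    key=$(cat "$pub_file")
    # -F fixed string, -x whole-line match, -q no output
    if ! grep -qxF -e "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
    chmod 600 "$auth_file"
}
```

A typical call would be `ensure_authorized_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`, followed by `ssh -o BatchMode=yes localhost true`, which fails instead of prompting if password-less login is not working.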

step 3. Install Java

I used an offline tarball and extracted it to /opt/java/jdk1.7.0.

$ tar zxvf jdk1.7.0.tar.gz
$ sudo mkdir -p /opt/java
$ sudo mv jdk1.7.0 /opt/java/

Configure the environment:

$ sudo gedit /etc/profile

Before the line "umask 022", add the following:

export JAVA_HOME=/opt/java/jdk1.7.0

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$PATH:$JRE_HOME/bin:$JAVA_HOME/bin
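The exports above only help if JAVA_HOME really points at an unpacked JDK. A small sanity check can catch a wrong path before Hadoop does; this is a sketch, and `check_java_home` is a hypothetical helper name introduced for illustration.

```shell
#!/bin/sh
# Return 0 if the given directory looks like a usable Java home,
# i.e. it contains an executable bin/java; otherwise explain why not.
# check_java_home is a hypothetical helper name.
check_java_home() {
    home=$1
    if [ -z "$home" ]; then
        echo "JAVA_HOME is empty" >&2
        return 1
    fi
    if [ ! -x "$home/bin/java" ]; then
        echo "no executable java under $home/bin" >&2
        return 1
    fi
    return 0
}
```

After reloading the profile (for example with `source /etc/profile`), running `check_java_home "$JAVA_HOME" && "$JAVA_HOME/bin/java" -version` should print the JDK version.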

step 4. Download and install Hadoop

• Download Hadoop-1.0.2 and extract the archive under /opt.

$ tar zxvf Hadoop-1.0.2.tar.gz
$ sudo mv Hadoop-1.0.2 /opt/
$ sudo chown -R hduser:hadoop /opt/Hadoop-1.0.2

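step 5. Configure hadoop-env.sh

• Go into the hadoop directory for further configuration. Several files need to be modified. The first is hadoop-env.sh, where three environment variables must be set: JAVA_HOME, HADOOP_HOME, and PATH. The heredoc delimiter is quoted ('EOF') so that $PATH and $HADOOP_HOME are written to the file literally instead of being expanded by the current shell.

/opt$ cd Hadoop-1.0.2/

/opt/Hadoop-1.0.2$ cat >> conf/hadoop-env.sh << 'EOF'

Paste in the following: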

export JAVA_HOME=/opt/java/jdk1.7.0

export HADOOP_HOME=/opt/Hadoop-1.0.2

export PATH=$PATH:$HADOOP_HOME/bin

EOF
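A note on heredocs of this kind: with an unquoted delimiter (`<< EOF`) the shell expands variable references at the moment the text is written, so the file receives whatever values the current shell holds, possibly empty ones. With a quoted delimiter (`<< 'EOF'`) the text is written exactly as typed. The demonstration below uses a scratch file and a made-up variable, `DEMO_HOME`, rather than the real hadoop-env.sh.

```shell
#!/bin/sh
# Compare unquoted and quoted heredoc delimiters using a scratch file.
# DEMO_HOME is a made-up variable that is deliberately left unset.
unset DEMO_HOME
scratch=$(mktemp)

# Unquoted delimiter: $DEMO_HOME is expanded now, to an empty string.
cat > "$scratch" << EOF
export DEMO_PATH=$DEMO_HOME/bin
EOF
expanded=$(cat "$scratch")

# Quoted delimiter: the line is stored exactly as written.
cat > "$scratch" << 'EOF'
export DEMO_PATH=$DEMO_HOME/bin
EOF
literal=$(cat "$scratch")

rm -f "$scratch"
echo "unquoted: $expanded"
echo "quoted:   $literal"
```

The unquoted form stores `export DEMO_PATH=/bin`, while the quoted form keeps `$DEMO_HOME` in the file so it is resolved later, when the file is sourced.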

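One thing I do not understand here: JAVA_HOME is already set in /etc/profile, so why does it have to be set again in this file? (A likely reason: the start scripts launch the daemons over ssh in non-login shells, which do not read /etc/profile, so Hadoop takes JAVA_HOME from hadoop-env.sh.)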

step 6. Edit the Hadoop configuration files

• Edit $HADOOP_HOME/conf/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

</property>

</configuration>

• Edit $HADOOP_HOME/conf/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

• Edit $HADOOP_HOME/conf/mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>

step 7. Format HDFS

• The single-node Hadoop test environment is now configured. Before starting the Hadoop services, format the namenode.

$ cd /opt/Hadoop-1.0.2
$ source /opt/Hadoop-1.0.2/conf/hadoop-env.sh
$ hadoop namenode -format

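Running the command above reports a null pointer error, because hadoop.tmp.dir is set to /tmp/hadoop/hadoop-${user.name}, a directory that has not yet been created with the right ownership.

If you want to change the path, edit core-site.xml:

/opt/hadoop-1.0.2/conf$ sudo gedit core-site.xml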

<!-- In: conf/core-site.xml -->

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

<description>A base for other temporary directories.</description>

</property>

Create this path and set its ownership and permissions:

$ sudo mkdir -p /tmp/hadoop/hadoop-hduser
$ sudo chown hduser:hadoop /tmp/hadoop/hadoop-hduser
# ...and if you want to tighten up security, chmod from 755 to 750...
$ sudo chmod 750 /tmp/hadoop/hadoop-hduser
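Because a missing or unwritable directory surfaces only as an unhelpful error during formatting, it can be worth checking the path first as the user who will run Hadoop. The following is a sketch; `check_tmp_dir` is a hypothetical helper name.

```shell
#!/bin/sh
# Pre-flight check before formatting the namenode: the directory must
# exist and be writable by the current user.
# check_tmp_dir is a hypothetical helper name.
check_tmp_dir() {
    dir=$1
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir" >&2
        return 1
    fi
    if [ ! -w "$dir" ]; then
        echo "not writable by $(id -un): $dir" >&2
        return 1
    fi
    echo "ok: $dir"
}
```

Run as hduser, `check_tmp_dir /tmp/hadoop/hadoop-hduser` should print `ok: /tmp/hadoop/hadoop-hduser` once the commands above have been applied.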

Run the format command again and you will see output like this:

[: 107: namenode: unexpected operator

12/05/07 20:47:40 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = seven7-laptop/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0.2 -r 1304954; compiled by 'hortonfo' on Sat Mar 24 23:58:21 UTC 2012

************************************************************/

12/05/07 20:47:41 INFO util.GSet: VM type = 32-bit

12/05/07 20:47:41 INFO util.GSet: 2% max memory = 17.77875 MB

12/05/07 20:47:41 INFO util.GSet: capacity = 2^22 = 4194304 entries

12/05/07 20:47:41 INFO util.GSet: recommended=4194304, actual=4194304

12/05/07 20:47:41 INFO namenode.FSNamesystem: fsOwner=hduser

12/05/07 20:47:41 INFO namenode.FSNamesystem: supergroup=supergroup

12/05/07 20:47:41 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/05/07 20:47:41 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

12/05/07 20:47:41 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

12/05/07 20:47:41 INFO namenode.NameNode: Caching file names occuring more than 10 times

12/05/07 20:47:42 INFO common.Storage: Image file of size 112 saved in 0 seconds.

12/05/07 20:47:42 INFO common.Storage: Storage directory /tmp/hadoop/hadoop-hduser/dfs/name has been successfully formatted.

12/05/07 20:47:42 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at seven7-laptop/127.0.1.1

************************************************************/

step 8. Start Hadoop

• Next, use start-all.sh to start all services, including the namenode, datanode, secondarynamenode, jobtracker, and tasktracker:

$HADOOP_HOME/bin/start-all.sh

/opt/hadoop-1.0.2/bin$ sh ./start-all.sh

The output looks like this:

starting namenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-namenode-seven7-laptop.out

localhost:

localhost: starting datanode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-datanode-seven7-laptop.out

localhost:

localhost: starting secondarynamenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-secondarynamenode-seven7-laptop.out

starting jobtracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-jobtracker-seven7-laptop.out

localhost:

localhost: starting tasktracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-tasktracker-seven7-laptop.out

step 9. Test the installation

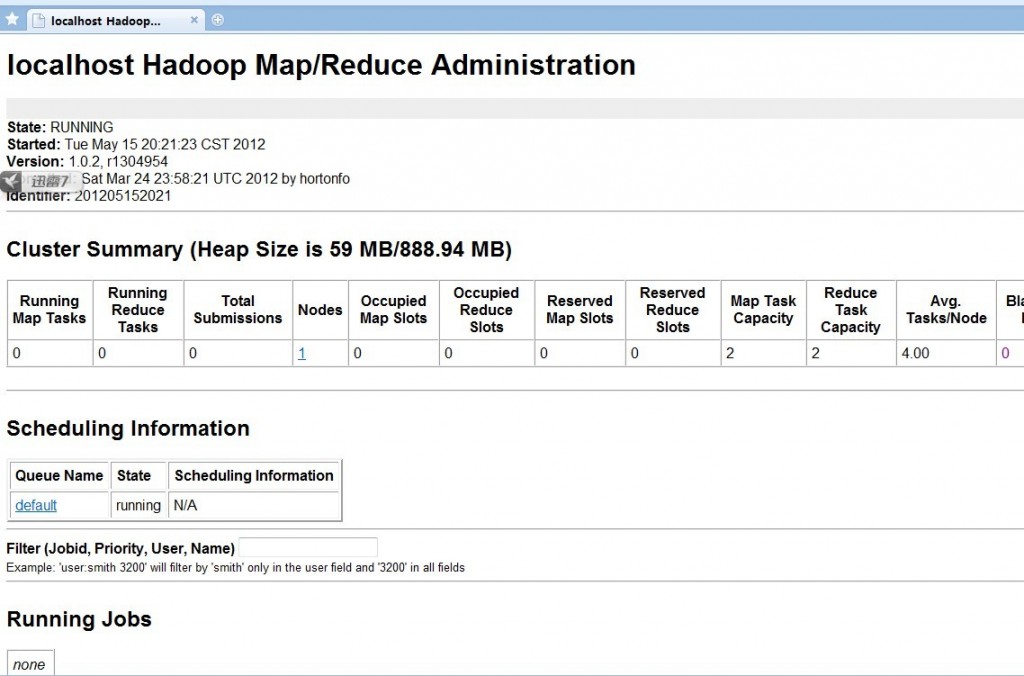

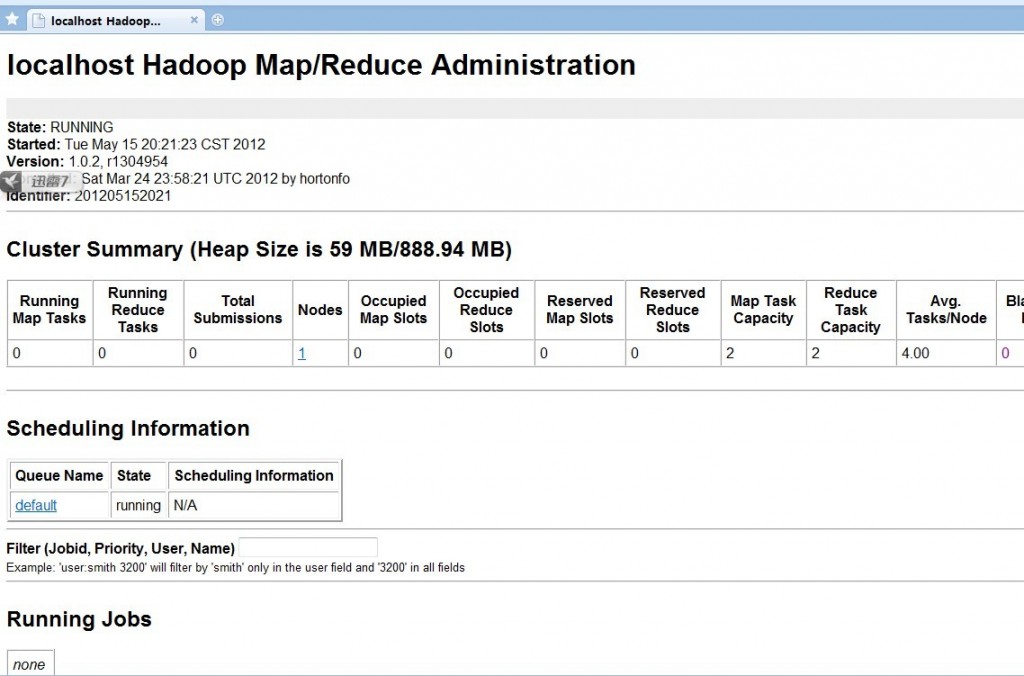

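• After startup, check the following URLs to see whether the services are running normally:

• http://localhost:50030/ - Hadoop admin interface (JobTracker)

• http://localhost:50060/ - Hadoop TaskTracker status

• http://localhost:50070/ - Hadoop DFS status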
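Besides the web pages, the running daemons can be checked from the command line with `jps`, which ships with the JDK and prints one `<pid> <ClassName>` pair per line. The sketch below reads such output and lists any of the five daemons that are missing; `missing_daemons` is a hypothetical helper name, and the sketch assumes `jps` is on the PATH of the user who started Hadoop.

```shell
#!/bin/sh
# Read jps-style output ("<pid> <ClassName>" per line) on stdin and
# print the name of every expected Hadoop 1.x daemon that is absent.
# missing_daemons is a hypothetical helper name.
missing_daemons() {
    running=$(awk '{print $2}')
    for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # -x matches whole lines, so "NameNode" does not match
        # "SecondaryNameNode".
        printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
    done
}
```

Usage would be `jps | missing_daemons`; empty output means all five daemons were found.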

This completes the single-node Hadoop installation. Next, we will run a job on this single-node cluster.
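As a rough sketch of what running a job on this setup can look like, the function below submits the WordCount example from the examples jar that ships in the 1.0.2 tarball. The assumptions are that the `hadoop` command is on the PATH, that the jar sits directly in the Hadoop directory, and that a local directory named `input` holds a few text files. The `run` wrapper and `wordcount_demo` are hypothetical names; setting `DRY_RUN` prints the commands without executing them.

```shell
#!/bin/sh
# Print each command, and execute it only when DRY_RUN is unset or empty.
run() {
    echo "+ $*"
    [ -n "$DRY_RUN" ] || "$@"
}

# Sketch of a WordCount run on a Hadoop 1.0.2 single-node setup.
# Assumptions: hadoop is on PATH, the examples jar is in the Hadoop
# directory, and ./input contains local text files.
wordcount_demo() {
    jar="${HADOOP_HOME:-/opt/hadoop-1.0.2}/hadoop-examples-1.0.2.jar"
    run hadoop fs -put input input &&
    run hadoop jar "$jar" wordcount input output &&
    # The glob is quoted so that hadoop, not the local shell, expands it.
    run hadoop fs -cat 'output/part-*'
}
```

Calling `DRY_RUN=1 wordcount_demo` first is a cheap way to review the three commands before letting them touch HDFS.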

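<1>. Introduction to Hadoop

Hadoop is an open-source Apache project whose main goal is to build reliable, scalable, distributed systems. Hadoop is made up of a series of subprojects, including:

1. Hadoop Common: provides the infrastructure for the other projects

2. HDFS: a distributed file system

3. MapReduce: a software framework for distributed processing of large data sets on compute clusters; in other words, a framework that simplifies distributed programming.

4. Other projects, including Avro (a serialization system) and Cassandra (a database project)

Source: 从此学习网 http://www.congci.com/item/596hadoop

What follows simulates distributed Hadoop operation on a single machine, with several local processes standing in for the nodes. The main goal is to run the WordCount example that ships with Hadoop; the procedure is described in detail below.

(PS: I am a beginner who started with Hadoop only recently and ran into many problems while learning it. I have written down my own steps in great detail simply to give as much information as possible to others who are interested in Hadoop.)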

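Simulated Linux environment

Cygwin is used to simulate a Linux environment. After installing Cygwin, configure OpenSSH before continuing with the steps below.

Hadoop configuration

First, configure Hadoop:

1. In conf/hadoop-env.sh, the minimum requirement is to set JAVA_HOME. For example, mine is as follows:

If the path contains spaces, wrap it in double quotes.

2. Only conf/hadoop-site.xml needs to be modified. By default hadoop-site.xml is not configured, and when running on a single machine, jobs use the basic options from hadoop-default.xml.

Change hadoop-site.xml to the following: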
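As a rough sketch only, a single-machine hadoop-site.xml for releases of that era commonly set the three properties below. The host, the ports, and the helper name `write_hadoop_site` are assumptions made for illustration (the values mirror those used for Hadoop 1.0.2 earlier in this document) and are not the author's original file; check the hadoop-default.xml shipped with your release for the exact value formats it accepts.

```shell
#!/bin/sh
# Write a minimal single-machine hadoop-site.xml into the given conf
# directory. write_hadoop_site is a hypothetical helper, and the values
# are assumptions, not the original author's settings.
write_hadoop_site() {
    conf_dir=$1
    mkdir -p "$conf_dir"
    cat > "$conf_dir/hadoop-site.xml" << 'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>localhost:9000</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:9001</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```

On Hadoop 1.0.2 this single file is deprecated: as the format log later in this document shows, the settings belong in core-site.xml, hdfs-site.xml, and mapred-site.xml instead.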

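Procedure

1. Authentication setup

Start Cygwin, and start ssh with the following command:

As shown in the figure:

Next, configure key-based authentication. This step is critical: in a distributed setup, the Datanode nodes must report task execution information to the Namenode, and entering a password on every access to the Namenode would be tedious. What is described here is password-less authentication; see my other articles for reference.

The command to generate the RSA key pair is as follows:

The generation process is shown in the figure:

When execution reaches the following step, a setting is required:

Just press Enter. With the default option, the generated RSA key is saved in /home/SHIYANJUN/.ssh/id_rsa and is used for authentication between nodes.

Continuing, you are prompted to enter a passphrase, here:

Press Enter again, and be sure to do so: with an empty passphrase, later communication between nodes skips the passphrase prompt and authenticates directly with the RSA key. (In fact, DSA authentication is used here, although RSA also works; read on.)

The RSA key pair is used to authenticate communication between nodes. If the generation process looks like the figure above, the RSA key pair was generated correctly.

Next, generate the DSA key pair with the following command:

The process is similar to the RSA one, as shown in the figure:

Then add the DSA public key to the authorized-keys file, authorized_keys, with the following command:

As shown in the figure, there is no output:

At this point, Hadoop is ready to run.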

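2. Preparing the data files for Hadoop

I used hadoop-0.16.4, copied directly into the root of G:\, and my Cygwin is installed in G:\Cygwin.

In G:\hadoop-0.16.4, create an input directory and put a few TXT files in it. I prepared seven. Each file contains English words separated by spaces, because the example being run is WordCount; how much content I stored can be seen later.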

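3. Running

Next, change to the G:\hadoop-0.16.4 directory.

The cygdrive directory (located in the Cygwin root) maps directly to the Windows logical drives.

Jobs use HDFS, Hadoop's distributed file system, so the first thing to do is format this file system. The following command does it:

The formatting process is shown in the figure:

Now start the Namenode, Datanode, SecondaryNamenode, and JobTracker with this command:

The startup process is shown in the figure:

If you did not configure password-less ssh authentication earlier, or configured it but entered a passphrase, you will be prompted for a password each time a process starts. With a large number of processes, that quickly becomes unbearable.

Then copy the files prepared in the local input directory into the input directory in HDFS, so that the distributed file system manages the data files to be processed. Use the following command:

If the command produces no output, the copy succeeded.

Only now can Hadoop's bundled WordCount example be run. Submit the job with the following command:

The last two arguments are the input data directory and the output directory for the processed data. Note that a directory named output must not already exist in your G:\hadoop-0.16.4 directory, otherwise an error is reported.

The run is shown in the figure:

The figure shows, in considerable detail, the progress of the WordCount tool while the job runs.

Finally, view the result of the processed data with the following command line:

The output is shown in the figure:

Finally, stop the Hadoop processes with the following command:

As shown in the figure:

That is the whole process.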
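To sanity-check a WordCount result on small inputs, the same counts can be computed locally with standard tools. The pipeline below is only an analogy for the job, not Hadoop code: splitting text into words plays the role of the map phase, sorting brings equal words together much as the shuffle does, and `uniq -c` counts each group like the reduce phase. `local_wordcount` is a hypothetical helper name, and the tab-separated `word<TAB>count` layout is chosen to resemble the job's text output.

```shell
#!/bin/sh
# Count word occurrences in whitespace-separated text read from stdin.
# Output: "<word><TAB><count>" per line, sorted by word in byte order.
# local_wordcount is a hypothetical helper name.
local_wordcount() {
    # Turn spaces and tabs into newlines (squeezing repeats), drop empty
    # lines, sort so equal words are adjacent, then count each run.
    tr -s ' \t' '\n\n' | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{printf "%s\t%s\n", $2, $1}'
}
```

For example, `cat input/*.txt | local_wordcount` gives counts that can be compared with the job output for plain ASCII text.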

http://hi.baidu.com/shirdrn/blog/item/33c762fecf9811375c600892.html

[Hadoop] Error: JAVA_HOME is not set

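Saturday, January 22, 2011, 19:40

With Hadoop 1.0.0, typing the hadoop command after installation always shows this warning:

Warning: $HADOOP_HOME is deprecated.

After checking the hadoop-1.0.0/bin/hadoop and hadoop-config.sh scripts, I found that they test the HADOOP_HOME environment variable, and that my environment does not need HADOOP_HOME to be set at all.

Reference:

HADOOP-7398

Solution 1: edit .bash_profile in your home directory, remove the HADOOP_HOME setting, and run the hadoop fs command again; the warning disappears.

Solution 2: edit .bash_profile in your home directory and add the following environment variable; the warning then disappears: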

export HADOOP_HOME_WARN_SUPPRESS=1
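Both solutions work because the warning is understood to depend on two variables at once. The function below is a paraphrase written from memory of the kind of test hadoop-config.sh performs, not a copy of the shipped script; `hadoop_home_warning` is a hypothetical name used only for illustration.

```shell
#!/bin/sh
# Paraphrase (an assumption, not the shipped script) of the check behind
# the deprecation warning: warn only when HADOOP_HOME is set and
# HADOOP_HOME_WARN_SUPPRESS is empty.
# hadoop_home_warning is a hypothetical name.
hadoop_home_warning() {
    if [ -z "$HADOOP_HOME_WARN_SUPPRESS" ] && [ -n "$HADOOP_HOME" ]; then
        echo 'Warning: $HADOOP_HOME is deprecated.'
    fi
}
```

Under this reading, solution 1 makes the second condition false by unsetting HADOOP_HOME, and solution 2 makes the first condition false by setting the suppress variable.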

login as: root

root@192.168.33.10's password:

Access denied

root@192.168.33.10's password:

Last login: Tue May 15 17:33:30 2012 from yunwei2.uid5a.cn

[root@yunwei2 ~]# ls

============== jdk-6u31-linux-amd64.rpm

1 jdk-6u31-linux-x64-rpm.bin

102 linux.x64_11gR2_database_1of2.zip

141 linux.x64_11gR2_database_2of2.zip

160 ll.txt

25 ***Load

36 packet

81 ReadPacket

90 Remote

anaconda-ks.cfg root@192.168.33.9

compat-libstdc++-33-3.2.3-61.i386.rpm sql

compat-libstdc++-33-3.2.3-61.x86_64.rpm squid-2.6.STABLE23

Desktop squid-2.6.STABLE23.tar.gz

End squid-3.1.19

esx-3.5.0-64607 squid-3.1.19.tar.gz

esx-3.5.0-64607.zip subversion-1.7.4

========findAllBarcode subversion-1.7.4.tar.gz

getCitySql Templates

getProvince UID

index.html Unexpected

install.log webbench-1.5

install.log.syslog webbench-1.5.tar.gz

[root@yunwei2 ~]# java -version

java version "1.6.0_31"

Java(TM) SE Runtime Environment (build 1.6.0_31-b04)

Java HotSpot(TM) 64-Bit Server VM (build 20.6-b01, mixed mode)

[root@yunwei2 ~]# cd /opt

[root@yunwei2 opt]# ls

apache-tomcat-6.0.35 jk.log.3987.lock jk.log.6205.lock

apache-tomcat-6.0.35.tar.gz jk.log.4398 jprofiler6

apr-0.9.20.tar.gz jk.log.4398.lock jprofiler_linux_6_0_2.tar.gz

apr-1.4.6 jk.log.5969 oracle

apr-1.4.6.tar.gz jk.log.5969.lock oraInventory

apr-iconv-1.2.1 jk.log.5972 sqlite-amalgamation-3071100

apr-iconv-1.2.1.tar.gz jk.log.5972.lock sqlite-amalgamation-3071100.zip

apr-util-1.4.1 jk.log.5977 subversion-1.7.4

apr-util-1.4.1.tar.gz jk.log.5977.lock subversion-1.7.4.tar.gz

hadoop-1.0.2 jk.log.5981 sun

hadoop-1.0.2-bin.tar.gz jk.log.5981.lock tomcatSS

jk.log.3864 jk.log.5991 webbench

jk.log.3864.lock jk.log.5991.lock

jk.log.3987 jk.log.6205

[root@yunwei2 opt]# rm -rf hadoop-1.0.2

[root@yunwei2 opt]# tar -zxvf hadoop-1.0.2-bin.tar.gz

hadoop-1.0.2/

hadoop-1.0.2/bin/

hadoop-1.0.2/c++/

hadoop-1.0.2/c++/Linux-amd64-64/

hadoop-1.0.2/c++/Linux-amd64-64/include/

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/

hadoop-1.0.2/c++/Linux-amd64-64/lib/

hadoop-1.0.2/c++/Linux-i386-32/

hadoop-1.0.2/c++/Linux-i386-32/include/

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/

hadoop-1.0.2/c++/Linux-i386-32/lib/

hadoop-1.0.2/conf/

hadoop-1.0.2/contrib/

hadoop-1.0.2/contrib/datajoin/

hadoop-1.0.2/contrib/failmon/

hadoop-1.0.2/contrib/gridmix/

hadoop-1.0.2/contrib/hdfsproxy/

hadoop-1.0.2/contrib/hdfsproxy/bin/

hadoop-1.0.2/contrib/hdfsproxy/conf/

hadoop-1.0.2/contrib/hdfsproxy/logs/

hadoop-1.0.2/contrib/hod/

hadoop-1.0.2/contrib/hod/bin/

hadoop-1.0.2/contrib/hod/conf/

hadoop-1.0.2/contrib/hod/hodlib/

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/

hadoop-1.0.2/contrib/hod/hodlib/Common/

hadoop-1.0.2/contrib/hod/hodlib/GridServices/

hadoop-1.0.2/contrib/hod/hodlib/Hod/

hadoop-1.0.2/contrib/hod/hodlib/HodRing/

hadoop-1.0.2/contrib/hod/hodlib/NodePools/

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/

hadoop-1.0.2/contrib/hod/ivy/

hadoop-1.0.2/contrib/hod/support/

hadoop-1.0.2/contrib/hod/testing/

hadoop-1.0.2/contrib/index/

hadoop-1.0.2/contrib/streaming/

hadoop-1.0.2/contrib/vaidya/

hadoop-1.0.2/contrib/vaidya/bin/

hadoop-1.0.2/contrib/vaidya/conf/

hadoop-1.0.2/ivy/

hadoop-1.0.2/lib/

hadoop-1.0.2/lib/jdiff/

hadoop-1.0.2/lib/jsp-2.1/

hadoop-1.0.2/lib/native/

hadoop-1.0.2/lib/native/Linux-amd64-64/

hadoop-1.0.2/lib/native/Linux-i386-32/

hadoop-1.0.2/libexec/

hadoop-1.0.2/sbin/

hadoop-1.0.2/share/

hadoop-1.0.2/share/hadoop/

hadoop-1.0.2/share/hadoop/templates/

hadoop-1.0.2/share/hadoop/templates/conf/

hadoop-1.0.2/webapps/

hadoop-1.0.2/webapps/datanode/

hadoop-1.0.2/webapps/datanode/WEB-INF/

hadoop-1.0.2/webapps/hdfs/

hadoop-1.0.2/webapps/hdfs/WEB-INF/

hadoop-1.0.2/webapps/history/

hadoop-1.0.2/webapps/history/WEB-INF/

hadoop-1.0.2/webapps/job/

hadoop-1.0.2/webapps/job/WEB-INF/

hadoop-1.0.2/webapps/secondary/

hadoop-1.0.2/webapps/secondary/WEB-INF/

hadoop-1.0.2/webapps/static/

hadoop-1.0.2/webapps/task/

hadoop-1.0.2/webapps/task/WEB-INF/

hadoop-1.0.2/CHANGES.txt

hadoop-1.0.2/LICENSE.txt

hadoop-1.0.2/NOTICE.txt

hadoop-1.0.2/README.txt

hadoop-1.0.2/build.xml

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/Pipes.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/SerialUtils.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/StringUtils.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/TemplateFactory.hh

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhadooppipes.a

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhadooputils.a

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.la

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so.0

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so.0.0.0

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/Pipes.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/SerialUtils.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/StringUtils.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/TemplateFactory.hh

hadoop-1.0.2/c++/Linux-i386-32/lib/libhadooppipes.a

hadoop-1.0.2/c++/Linux-i386-32/lib/libhadooputils.a

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.la

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so.0

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so.0.0.0

hadoop-1.0.2/conf/capacity-scheduler.xml

hadoop-1.0.2/conf/configuration.xsl

hadoop-1.0.2/conf/core-site.xml

hadoop-1.0.2/conf/fair-scheduler.xml

hadoop-1.0.2/conf/hadoop-env.sh

hadoop-1.0.2/conf/hadoop-metrics2.properties

hadoop-1.0.2/conf/hadoop-policy.xml

hadoop-1.0.2/conf/hdfs-site.xml

hadoop-1.0.2/conf/log4j.properties

hadoop-1.0.2/conf/mapred-queue-acls.xml

hadoop-1.0.2/conf/mapred-site.xml

hadoop-1.0.2/conf/masters

hadoop-1.0.2/conf/slaves

hadoop-1.0.2/conf/ssl-client.xml.example

hadoop-1.0.2/conf/ssl-server.xml.example

hadoop-1.0.2/conf/taskcontroller.cfg

hadoop-1.0.2/contrib/datajoin/hadoop-datajoin-1.0.2.jar

hadoop-1.0.2/contrib/failmon/hadoop-failmon-1.0.2.jar

hadoop-1.0.2/contrib/gridmix/hadoop-gridmix-1.0.2.jar

hadoop-1.0.2/contrib/hdfsproxy/README

hadoop-1.0.2/contrib/hdfsproxy/build.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/configuration.xsl

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-default.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-env.sh

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-env.sh.template

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-hosts

hadoop-1.0.2/contrib/hdfsproxy/conf/log4j.properties

hadoop-1.0.2/contrib/hdfsproxy/conf/ssl-server.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/tomcat-forward-web.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/tomcat-web.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/user-certs.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/user-permissions.xml

hadoop-1.0.2/contrib/hdfsproxy/hdfsproxy-2.0.jar

hadoop-1.0.2/contrib/hod/CHANGES.txt

hadoop-1.0.2/contrib/hod/README

hadoop-1.0.2/contrib/hod/build.xml

hadoop-1.0.2/contrib/hod/conf/hodrc

hadoop-1.0.2/contrib/hod/config.txt

hadoop-1.0.2/contrib/hod/getting_started.txt

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/goldAllocationManager.py

hadoop-1.0.2/contrib/hod/hodlib/Common/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Common/allocationManagerUtil.py

hadoop-1.0.2/contrib/hod/hodlib/Common/desc.py

hadoop-1.0.2/contrib/hod/hodlib/Common/descGenerator.py

hadoop-1.0.2/contrib/hod/hodlib/Common/hodsvc.py

hadoop-1.0.2/contrib/hod/hodlib/Common/logger.py

hadoop-1.0.2/contrib/hod/hodlib/Common/miniHTMLParser.py

hadoop-1.0.2/contrib/hod/hodlib/Common/nodepoolutil.py

hadoop-1.0.2/contrib/hod/hodlib/Common/setup.py

hadoop-1.0.2/contrib/hod/hodlib/Common/socketServers.py

hadoop-1.0.2/contrib/hod/hodlib/Common/tcp.py

hadoop-1.0.2/contrib/hod/hodlib/Common/threads.py

hadoop-1.0.2/contrib/hod/hodlib/Common/types.py

hadoop-1.0.2/contrib/hod/hodlib/Common/util.py

hadoop-1.0.2/contrib/hod/hodlib/Common/xmlrpc.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/hdfs.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/mapred.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/service.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/hadoop.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/hod.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/nodePool.py

hadoop-1.0.2/contrib/hod/hodlib/HodRing/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/HodRing/hodRing.py

hadoop-1.0.2/contrib/hod/hodlib/NodePools/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/NodePools/torque.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/idleJobTracker.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/ringMaster.py

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/torque.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/serviceProxy.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/serviceRegistry.py

hadoop-1.0.2/contrib/hod/hodlib/__init__.py

hadoop-1.0.2/contrib/hod/ivy.xml

hadoop-1.0.2/contrib/hod/ivy/libraries.properties

hadoop-1.0.2/contrib/hod/support/checklimits.sh

hadoop-1.0.2/contrib/hod/support/logcondense.py

hadoop-1.0.2/contrib/hod/testing/__init__.py

hadoop-1.0.2/contrib/hod/testing/helper.py

hadoop-1.0.2/contrib/hod/testing/lib.py

hadoop-1.0.2/contrib/hod/testing/main.py

hadoop-1.0.2/contrib/hod/testing/testHadoop.py

hadoop-1.0.2/contrib/hod/testing/testHod.py

hadoop-1.0.2/contrib/hod/testing/testHodCleanup.py

hadoop-1.0.2/contrib/hod/testing/testHodRing.py

hadoop-1.0.2/contrib/hod/testing/testModule.py

hadoop-1.0.2/contrib/hod/testing/testRingmasterRPCs.py

hadoop-1.0.2/contrib/hod/testing/testThreads.py

hadoop-1.0.2/contrib/hod/testing/testTypes.py

hadoop-1.0.2/contrib/hod/testing/testUtil.py

hadoop-1.0.2/contrib/hod/testing/testXmlrpc.py

hadoop-1.0.2/contrib/index/hadoop-index-1.0.2.jar

hadoop-1.0.2/contrib/streaming/hadoop-streaming-1.0.2.jar

hadoop-1.0.2/contrib/vaidya/conf/postex_diagnosis_tests.xml

hadoop-1.0.2/contrib/vaidya/hadoop-vaidya-1.0.2.jar

hadoop-1.0.2/hadoop-ant-1.0.2.jar

hadoop-1.0.2/hadoop-client-1.0.2.jar

hadoop-1.0.2/hadoop-core-1.0.2.jar

hadoop-1.0.2/hadoop-examples-1.0.2.jar

hadoop-1.0.2/hadoop-minicluster-1.0.2.jar

hadoop-1.0.2/hadoop-test-1.0.2.jar

hadoop-1.0.2/hadoop-tools-1.0.2.jar

hadoop-1.0.2/ivy.xml

hadoop-1.0.2/ivy/hadoop-client-pom-template.xml

hadoop-1.0.2/ivy/hadoop-core-pom-template.xml

hadoop-1.0.2/ivy/hadoop-core.pom

hadoop-1.0.2/ivy/hadoop-examples-pom-template.xml

hadoop-1.0.2/ivy/hadoop-minicluster-pom-template.xml

hadoop-1.0.2/ivy/hadoop-streaming-pom-template.xml

hadoop-1.0.2/ivy/hadoop-test-pom-template.xml

hadoop-1.0.2/ivy/hadoop-tools-pom-template.xml

hadoop-1.0.2/ivy/ivy-2.1.0.jar

hadoop-1.0.2/ivy/ivysettings.xml

hadoop-1.0.2/ivy/libraries.properties

hadoop-1.0.2/lib/asm-3.2.jar

hadoop-1.0.2/lib/aspectjrt-1.6.5.jar

hadoop-1.0.2/lib/aspectjtools-1.6.5.jar

hadoop-1.0.2/lib/commons-beanutils-1.7.0.jar

hadoop-1.0.2/lib/commons-beanutils-core-1.8.0.jar

hadoop-1.0.2/lib/commons-cli-1.2.jar

hadoop-1.0.2/lib/commons-codec-1.4.jar

hadoop-1.0.2/lib/commons-collections-3.2.1.jar

hadoop-1.0.2/lib/commons-configuration-1.6.jar

hadoop-1.0.2/lib/commons-daemon-1.0.1.jar

hadoop-1.0.2/lib/commons-digester-1.8.jar

hadoop-1.0.2/lib/commons-el-1.0.jar

hadoop-1.0.2/lib/commons-httpclient-3.0.1.jar

hadoop-1.0.2/lib/commons-lang-2.4.jar

hadoop-1.0.2/lib/commons-logging-1.1.1.jar

hadoop-1.0.2/lib/commons-logging-api-1.0.4.jar

hadoop-1.0.2/lib/commons-math-2.1.jar

hadoop-1.0.2/lib/commons-net-1.4.1.jar

hadoop-1.0.2/lib/core-3.1.1.jar

hadoop-1.0.2/lib/hadoop-capacity-scheduler-1.0.2.jar

hadoop-1.0.2/lib/hadoop-fairscheduler-1.0.2.jar

hadoop-1.0.2/lib/hadoop-thriftfs-1.0.2.jar

hadoop-1.0.2/lib/hsqldb-1.8.0.10.LICENSE.txt

hadoop-1.0.2/lib/hsqldb-1.8.0.10.jar

hadoop-1.0.2/lib/jackson-core-asl-1.8.8.jar

hadoop-1.0.2/lib/jackson-mapper-asl-1.8.8.jar

hadoop-1.0.2/lib/jasper-compiler-5.5.12.jar

hadoop-1.0.2/lib/jasper-runtime-5.5.12.jar

hadoop-1.0.2/lib/jdeb-0.8.jar

hadoop-1.0.2/lib/jdiff/hadoop_0.17.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.2.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.3.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.2.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.20.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.20.205.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.2.xml

hadoop-1.0.2/lib/jersey-core-1.8.jar

hadoop-1.0.2/lib/jersey-json-1.8.jar

hadoop-1.0.2/lib/jersey-server-1.8.jar

hadoop-1.0.2/lib/jets3t-0.6.1.jar

hadoop-1.0.2/lib/jetty-6.1.26.jar

hadoop-1.0.2/lib/jetty-util-6.1.26.jar

hadoop-1.0.2/lib/jsch-0.1.42.jar

hadoop-1.0.2/lib/jsp-2.1/jsp-2.1.jar

hadoop-1.0.2/lib/jsp-2.1/jsp-api-2.1.jar

hadoop-1.0.2/lib/junit-4.5.jar

hadoop-1.0.2/lib/kfs-0.2.2.jar

hadoop-1.0.2/lib/kfs-0.2.LICENSE.txt

hadoop-1.0.2/lib/log4j-1.2.15.jar

hadoop-1.0.2/lib/mockito-all-1.8.5.jar

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.a

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.la

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so.1

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so.1.0.0

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.a

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.la

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so.1

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so.1.0.0

hadoop-1.0.2/lib/oro-2.0.8.jar

hadoop-1.0.2/lib/servlet-api-2.5-20081211.jar

hadoop-1.0.2/lib/slf4j-api-1.4.3.jar

hadoop-1.0.2/lib/slf4j-log4j12-1.4.3.jar

hadoop-1.0.2/lib/xmlenc-0.52.jar

hadoop-1.0.2/share/hadoop/templates/conf/capacity-scheduler.xml

hadoop-1.0.2/share/hadoop/templates/conf/commons-logging.properties

hadoop-1.0.2/share/hadoop/templates/conf/core-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-env.sh

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-metrics2.properties

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-policy.xml

hadoop-1.0.2/share/hadoop/templates/conf/hdfs-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/log4j.properties

hadoop-1.0.2/share/hadoop/templates/conf/mapred-queue-acls.xml

hadoop-1.0.2/share/hadoop/templates/conf/mapred-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/taskcontroller.cfg

hadoop-1.0.2/webapps/datanode/WEB-INF/web.xml

hadoop-1.0.2/webapps/hdfs/WEB-INF/web.xml

hadoop-1.0.2/webapps/hdfs/index.html

hadoop-1.0.2/webapps/history/WEB-INF/web.xml

hadoop-1.0.2/webapps/job/WEB-INF/web.xml

hadoop-1.0.2/webapps/job/analysejobhistory.jsp

hadoop-1.0.2/webapps/job/gethistory.jsp

hadoop-1.0.2/webapps/job/index.html

hadoop-1.0.2/webapps/job/job_authorization_error.jsp

hadoop-1.0.2/webapps/job/jobblacklistedtrackers.jsp

hadoop-1.0.2/webapps/job/jobconf.jsp

hadoop-1.0.2/webapps/job/jobconf_history.jsp

hadoop-1.0.2/webapps/job/jobdetails.jsp

hadoop-1.0.2/webapps/job/jobdetailshistory.jsp

hadoop-1.0.2/webapps/job/jobfailures.jsp

hadoop-1.0.2/webapps/job/jobhistory.jsp

hadoop-1.0.2/webapps/job/jobhistoryhome.jsp

hadoop-1.0.2/webapps/job/jobqueue_details.jsp

hadoop-1.0.2/webapps/job/jobtasks.jsp

hadoop-1.0.2/webapps/job/jobtaskshistory.jsp

hadoop-1.0.2/webapps/job/jobtracker.jsp

hadoop-1.0.2/webapps/job/legacyjobhistory.jsp

hadoop-1.0.2/webapps/job/loadhistory.jsp

hadoop-1.0.2/webapps/job/machines.jsp

hadoop-1.0.2/webapps/job/taskdetails.jsp

hadoop-1.0.2/webapps/job/taskdetailshistory.jsp

hadoop-1.0.2/webapps/job/taskstats.jsp

hadoop-1.0.2/webapps/job/taskstatshistory.jsp

hadoop-1.0.2/webapps/static/hadoop-logo.jpg

hadoop-1.0.2/webapps/static/hadoop.css

hadoop-1.0.2/webapps/static/jobconf.xsl

hadoop-1.0.2/webapps/static/jobtracker.js

hadoop-1.0.2/webapps/static/sorttable.js

hadoop-1.0.2/webapps/task/WEB-INF/web.xml

hadoop-1.0.2/webapps/task/index.html

hadoop-1.0.2/src/contrib/ec2/bin/image/

hadoop-1.0.2/bin/hadoop

hadoop-1.0.2/bin/hadoop-config.sh

hadoop-1.0.2/bin/hadoop-daemon.sh

hadoop-1.0.2/bin/hadoop-daemons.sh

hadoop-1.0.2/bin/rcc

hadoop-1.0.2/bin/slaves.sh

hadoop-1.0.2/bin/start-all.sh

hadoop-1.0.2/bin/start-balancer.sh

hadoop-1.0.2/bin/start-dfs.sh

hadoop-1.0.2/bin/start-jobhistoryserver.sh

hadoop-1.0.2/bin/start-mapred.sh

hadoop-1.0.2/bin/stop-all.sh

hadoop-1.0.2/bin/stop-balancer.sh

hadoop-1.0.2/bin/stop-dfs.sh

hadoop-1.0.2/bin/stop-jobhistoryserver.sh

hadoop-1.0.2/bin/stop-mapred.sh

hadoop-1.0.2/bin/task-controller

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-config.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-daemon.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-daemons.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-slaves.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/start-hdfsproxy.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/stop-hdfsproxy.sh

hadoop-1.0.2/contrib/hod/bin/VERSION

hadoop-1.0.2/contrib/hod/bin/checknodes

hadoop-1.0.2/contrib/hod/bin/hod

hadoop-1.0.2/contrib/hod/bin/hodcleanup

hadoop-1.0.2/contrib/hod/bin/hodring

hadoop-1.0.2/contrib/hod/bin/ringmaster

hadoop-1.0.2/contrib/hod/bin/verify-account

hadoop-1.0.2/contrib/vaidya/bin/vaidya.sh

hadoop-1.0.2/libexec/hadoop-config.sh

hadoop-1.0.2/libexec/jsvc.amd64

hadoop-1.0.2/sbin/hadoop-create-user.sh

hadoop-1.0.2/sbin/hadoop-setup-applications.sh

hadoop-1.0.2/sbin/hadoop-setup-conf.sh

hadoop-1.0.2/sbin/hadoop-setup-hdfs.sh

hadoop-1.0.2/sbin/hadoop-setup-single-node.sh

hadoop-1.0.2/sbin/hadoop-validate-setup.sh

hadoop-1.0.2/sbin/update-hadoop-env.sh

hadoop-1.0.2/src/contrib/ec2/bin/cmd-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/create-hadoop-image

hadoop-1.0.2/src/contrib/ec2/bin/delete-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2-env.sh

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2-init-remote.sh

hadoop-1.0.2/src/contrib/ec2/bin/image/create-hadoop-image-remote

hadoop-1.0.2/src/contrib/ec2/bin/image/ec2-run-user-data

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-master

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-slaves

hadoop-1.0.2/src/contrib/ec2/bin/list-hadoop-clusters

hadoop-1.0.2/src/contrib/ec2/bin/terminate-hadoop-cluster

[root@yunwei2 opt]# cd hadoop-1.0.2

[root@yunwei2 hadoop-1.0.2]# ls

bin hadoop-ant-1.0.2.jar hadoop-tools-1.0.2.jar NOTICE.txt

build.xml hadoop-client-1.0.2.jar ivy README.txt

c++ hadoop-core-1.0.2.jar ivy.xml sbin

CHANGES.txt hadoop-examples-1.0.2.jar lib share

conf hadoop-minicluster-1.0.2.jar libexec src

contrib hadoop-test-1.0.2.jar LICENSE.txt webapps

[root@yunwei2 hadoop-1.0.2]# cd conf

[root@yunwei2 conf]# vi hadoop-env.sh

[root@yunwei2 conf]# echo $JAVA_HOME

/usr/java/jdk1.6.0_31/

[root@yunwei2 conf]# /usr/java/jdk1.6.0_31/

-bash: /usr/java/jdk1.6.0_31/: is a directory

[root@yunwei2 conf]# vi hadoop-env.sh

[root@yunwei2 conf]# vi hadoop-site.xml

[root@yunwei2 conf]# ssh localhost

Last login: Tue May 15 19:35:45 2012 from 192.168.33.110

[root@yunwei2 ~]# cd /opt/hadoop-1.0.2

[root@yunwei2 hadoop-1.0.2]# ls

bin hadoop-ant-1.0.2.jar hadoop-tools-1.0.2.jar NOTICE.txt

build.xml hadoop-client-1.0.2.jar ivy README.txt

c++ hadoop-core-1.0.2.jar ivy.xml sbin

CHANGES.txt hadoop-examples-1.0.2.jar lib share

conf hadoop-minicluster-1.0.2.jar libexec src

contrib hadoop-test-1.0.2.jar LICENSE.txt webapps

[root@yunwei2 hadoop-1.0.2]# cd bin

[root@yunwei2 bin]# ./hadoop namenode -format

12/05/15 20:04:14 WARN conf.Configuration: DEPRECATED: hadoop-site.xml found in the classpath. Usage of hadoop-site.xml is deprecated. Instead use core-site.xml, mapred-site.xml and hdfs-site.xml to override properties of core-default.xml, mapred-default.xml

and hdfs-default.xml respectively

12/05/15 20:04:14 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = yunwei2.uid5a.cn/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0.2 -r 1304954; compiled by 'hortonfo' on Sat Mar 24 23:58:21 UTC 2012

************************************************************/

12/05/15 20:04:15 INFO util.GSet: VM type = 64-bit

12/05/15 20:04:15 INFO util.GSet: 2% max memory = 17.77875 MB

12/05/15 20:04:15 INFO util.GSet: capacity = 2^21 = 2097152 entries

12/05/15 20:04:15 INFO util.GSet: recommended=2097152, actual=2097152

12/05/15 20:04:15 INFO namenode.FSNamesystem: fsOwner=root

12/05/15 20:04:15 INFO namenode.FSNamesystem: supergroup=supergroup

12/05/15 20:04:15 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/05/15 20:04:15 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

12/05/15 20:04:15 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

12/05/15 20:04:15 INFO namenode.NameNode: Caching file names occuring more than 10 times

12/05/15 20:04:15 INFO common.Storage: Image file of size 110 saved in 0 seconds.

12/05/15 20:04:15 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.

12/05/15 20:04:15 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at yunwei2.uid5a.cn/127.0.0.1

************************************************************/

[root@yunwei2 bin]# ./start-all.sh

namenode running as process 6993. Stop it first.

localhost: datanode running as process 7129. Stop it first.

localhost: secondarynamenode running as process 7302. Stop it first.

jobtracker running as process 6666. Stop it first.

localhost: tasktracker running as process 6802. Stop it first.

[root@yunwei2 bin]# ./stop-all.sh

stopping jobtracker

localhost: stopping tasktracker

stopping namenode

localhost: stopping datanode

localhost: stopping secondarynamenode

[root@yunwei2 bin]# ./start-all.sh

starting namenode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-namenode-yunwei2.uid5a.cn.out

localhost: starting datanode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-datanode-yunwei2.uid5a.cn.out

localhost: starting secondarynamenode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-secondarynamenode-yunwei2.uid5a.cn.out

starting jobtracker, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-jobtracker-yunwei2.uid5a.cn.out

localhost: starting tasktracker, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-tasktracker-yunwei2.uid5a.cn.out

[root@yunwei2 bin]# ha

hald hal-find-by-property halt

hal-device hal-get-property hash

hal-device-manager hal-set-property

hal-find-by-capability hal-setup-keymap

[root@yunwei2 bin]# ls

hadoop start-all.sh stop-balancer.sh

hadoop-config.sh start-balancer.sh stop-dfs.sh

hadoop-daemon.sh start-dfs.sh stop-jobhistoryserver.sh

hadoop-daemons.sh start-jobhistoryserver.sh stop-mapred.sh

rcc start-mapred.sh task-controller

slaves.sh stop-all.sh

[root@yunwei2 bin]# pwd

/opt/hadoop-1.0.2/bin

[root@yunwei2 bin]# cd ..

[root@yunwei2 hadoop-1.0.2]# find -name hadoop

./bin/hadoop

./c++/Linux-i386-32/include/hadoop

./c++/Linux-amd64-64/include/hadoop

./share/hadoop

[root@yunwei2 hadoop-1.0.2]# cd bin

[root@yunwei2 bin]# ./hadoop dfs -put ./input input

12/05/15 20:06:46 WARN conf.Configuration: DEPRECATED: hadoop-site.xml found in the classpath. Usage of hadoop-site.xml is deprecated. Instead use core-site.xml, mapred-site.xml and hdfs-site.xml to override properties of core-default.xml, mapred-default.xml

and hdfs-default.xml respectively

12/05/15 20:06:46 WARN fs.FileSystem: "localhost:9000" is a deprecated filesystem name. Use "hdfs://localhost:9000/" instead.

12/05/15 20:06:47 WARN fs.FileSystem: "localhost:9000" is a deprecated filesystem name. Use "hdfs://localhost:9000/" instead.

12/05/15 20:06:47 WARN fs.FileSystem: "localhost:9000" is a deprecated filesystem name. Use "hdfs://localhost:9000/" instead.

12/05/15 20:06:47 WARN fs.FileSystem: "localhost:9000" is a deprecated filesystem name. Use "hdfs://localhost:9000/" instead.

put: File input does not exist.

[root@yunwei2 bin]# hdfs://localhost:9000/ WARN conf.Configuration: DEPRECATED: hadoop-site.xml found in the classpath. Usage of hadoop-site.xml is deprecated.

-bash: hdfs://localhost:9000/: No such file or directory

[root@yunwei2 bin]# cd ..

[root@yunwei2 hadoop-1.0.2]# cd conf

[root@yunwei2 conf]# vi hadoop-site.xml

[root@yunwei2 conf]# vi core-site.xml

[root@yunwei2 conf]# vi mapred-site.xml

[root@yunwei2 conf]# vi hdfs-site.xml

[root@yunwei2 conf]# pwd

/opt/hadoop-1.0.2/conf

[root@yunwei2 conf]# /hadoop-1.0.2

-bash: /hadoop-1.0.2: No such file or directory

[root@yunwei2 conf]# vi core-site.xml

[root@yunwei2 conf]# vi hdfs-site.xml

[root@yunwei2 conf]# vi mapred-

[root@yunwei2 conf]# vi mapred-site.xml

[root@yunwei2 conf]# vi hadoop-site.xml

[root@yunwei2 conf]# vi core-site.xml

[root@yunwei2 conf]# vi core-site.xml

[root@yunwei2 conf]# mkdir -p /tmp/hadoop

[root@yunwei2 conf]# mkdir -p /tmp/hadoop/hadoop-hduser

[root@yunwei2 conf]# chown hduser:hdoop /tmp/hadoop/hadoop-hduser

chown: "hduser:hdoop": invalid user

[root@yunwei2 conf]# chmod 750 /tmp/hadoop/hadoop-hduser/

[root@yunwei2 conf]# cd ..

[root@yunwei2 hadoop-1.0.2]# cd bin

[root@yunwei2 bin]# ./hadoop namenode -format

12/05/15 20:19:41 WARN conf.Configuration: DEPRECATED: hadoop-site.xml found in the classpath. Usage of hadoop-site.xml is deprecated. Instead use core-site.xml, mapred-site.xml and hdfs-site.xml to override properties of core-default.xml, mapred-default.xml

and hdfs-default.xml respectively

12/05/15 20:19:41 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = yunwei2.uid5a.cn/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0.2 -r 1304954; compiled by 'hortonfo' on Sat Mar 24 23:58:21 UTC 2012

************************************************************/

12/05/15 20:19:42 INFO util.GSet: VM type = 64-bit

12/05/15 20:19:42 INFO util.GSet: 2% max memory = 17.77875 MB

12/05/15 20:19:42 INFO util.GSet: capacity = 2^21 = 2097152 entries

12/05/15 20:19:42 INFO util.GSet: recommended=2097152, actual=2097152

12/05/15 20:19:42 INFO namenode.FSNamesystem: fsOwner=root

12/05/15 20:19:42 INFO namenode.FSNamesystem: supergroup=supergroup

12/05/15 20:19:42 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/05/15 20:19:42 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

12/05/15 20:19:42 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

12/05/15 20:19:42 INFO namenode.NameNode: Caching file names occuring more than 10 times

12/05/15 20:19:42 INFO common.Storage: Image file of size 110 saved in 0 seconds.

12/05/15 20:19:42 INFO common.Storage: Storage directory /tmp/hadoop/hadoop-root/dfs/name has been successfully formatted.

12/05/15 20:19:42 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at yunwei2.uid5a.cn/127.0.0.1

************************************************************/

[root@yunwei2 bin]# cd ..

[root@yunwei2 hadoop-1.0.2]# cd conf

[root@yunwei2 conf]# vi core-site.xml

[root@yunwei2 conf]# cd ..

[root@yunwei2 hadoop-1.0.2]# cd bin

[root@yunwei2 bin]# sta

stardict start_udev startx stat states

[root@yunwei2 bin]# ./st

start-all.sh stop-all.sh

start-balancer.sh stop-balancer.sh

start-dfs.sh stop-dfs.sh

start-jobhistoryserver.sh stop-jobhistoryserver.sh

start-mapred.sh stop-mapred.sh

[root@yunwei2 bin]# ./start-all.sh

namenode running as process 13484. Stop it first.

localhost: datanode running as process 13614. Stop it first.

localhost: secondarynamenode running as process 13771. Stop it first.

jobtracker running as process 13873. Stop it first.

localhost: tasktracker running as process 14016. Stop it first.

[root@yunwei2 bin]# ./stop-all.sh

stopping jobtracker

localhost: stopping tasktracker

stopping namenode

localhost: stopping datanode

localhost: stopping secondarynamenode

[root@yunwei2 bin]# ./stop-all.sh

no jobtracker to stop

localhost: no tasktracker to stop

no namenode to stop

localhost: no datanode to stop

localhost: no secondarynamenode to stop

[root@yunwei2 bin]# ./stop-all.sh

no jobtracker to stop

localhost: no tasktracker to stop

no namenode to stop

localhost: no datanode to stop

localhost: no secondarynamenode to stop

[root@yunwei2 bin]# ./start-all.sh

starting namenode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-namenode-yunwei2.uid5a.cn.out

localhost: starting datanode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-datanode-yunwei2.uid5a.cn.out

localhost: starting secondarynamenode, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-secondarynamenode-yunwei2.uid5a.cn.out

starting jobtracker, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-jobtracker-yunwei2.uid5a.cn.out

localhost: starting tasktracker, logging to /opt/hadoop-1.0.2/libexec/../logs/hadoop-root-tasktracker-yunwei2.uid5a.cn.out

[root@yunwei2 bin]#

step 1. Add a user for Hadoop, and remember the password you set

$ sudo addgroup hadoop

$ sudo adduser --ingroup hadoop hduser

step 2. Install and configure ssh

Hadoop communicates over ssh, so first install an SSH server.

$ sudo apt-get install ssh

Next, set up password-free login. After running the su command, enter the password you set in step 1.

$ su - hduser

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ ssh localhost

This generates the key used for SSH. When it is done, log in to confirm that no password is asked for. (The first login requires pressing Enter to confirm; the second time you are logged straight into the system.)

~$ ssh localhost

~$ exit

~$ ssh localhost

~$ exit
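If `ssh localhost` still asks for a password after the steps above, overly open permissions on `~/.ssh` are a common cause. The following is a minimal sketch, not part of the original procedure: `fix_ssh_perms` and `can_ssh_without_password` are hypothetical helper names, and the second one assumes the host key for localhost has already been accepted once.

```shell
# Hypothetical helper: tighten permissions on an ssh directory so that sshd
# will accept the authorized_keys file stored inside it.
fix_ssh_perms() {
    ssh_dir="$1"
    mkdir -p "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Hypothetical helper: succeeds only if key-based login to localhost works
# without any prompt. BatchMode makes ssh fail instead of asking for a password.
can_ssh_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true
}
```

As hduser, one might run `fix_ssh_perms ~/.ssh && can_ssh_without_password && echo "passwordless ssh OK"`.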

step 3. Install Java

I used the offline tar archive and extracted it to /opt/java/jdk1.7.0.

$ tar zxvf jdk1.7.0.tar.gz

$ sudo mkdir -p /opt/java

$ sudo mv jdk1.7.0 /opt/java/

Configure the environment:

$ sudo gedit /etc/profile

Before the line "umask 022", add the following:

export JAVA_HOME=/opt/java/jdk1.7.0

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$PATH:$JRE_HOME/bin:$JAVA_HOME/bin
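Before moving on, it can help to confirm that JAVA_HOME really points at a JDK. This is only a sketch: `check_java_home` is a hypothetical helper, not something shipped with Java or Hadoop.

```shell
# Hypothetical helper: report whether a directory looks like a usable JDK home,
# judged only by the presence of an executable bin/java inside it.
check_java_home() {
    if [ -x "$1/bin/java" ]; then
        echo "ok: $1/bin/java"
    else
        echo "missing: $1/bin/java"
        return 1
    fi
}
```

After reloading the profile (for example with `. /etc/profile`), `check_java_home "$JAVA_HOME"` should print the `ok:` line.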

step 4. Download and install Hadoop

Download hadoop-1.0.2 and extract the archive under /opt.

$ tar zxvf hadoop-1.0.2.tar.gz

$ sudo mv hadoop-1.0.2 /opt/

$ sudo chown -R hduser:hadoop /opt/hadoop-1.0.2

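step 5. Configure hadoop-env.sh

Go into the hadoop directory for further configuration. We need to modify two files. The first is hadoop-env.sh, where three environment variables must be set: JAVA_HOME, HADOOP_HOME and PATH. Append the following to it:

/opt$ cd hadoop-1.0.2/

/opt/hadoop-1.0.2$ cat >> conf/hadoop-env.sh << 'EOF'

export JAVA_HOME=/opt/java/jdk1.7.0

export HADOOP_HOME=/opt/hadoop-1.0.2

export PATH=$PATH:$HADOOP_HOME/bin

EOF

One thing I do not understand here: /etc/profile already sets JAVA_HOME, so why does it have to be set again? One likely explanation is that start-all.sh launches the daemons over ssh, and those non-login shells do not necessarily read /etc/profile, whereas the Hadoop scripts always source conf/hadoop-env.sh.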
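When a file is appended with a heredoc, quoting the delimiter decides whether `$PATH` and `$HADOOP_HOME` are expanded at write time or stored literally. The sketch below shows the difference on a scratch file; `DEMO_HOME` and `DEMO_BIN` are made-up names used only for this demonstration.

```shell
# Scratch file for the demonstration (not a real Hadoop file).
demo_file=$(mktemp)
DEMO_HOME=/opt/example    # hypothetical value

# Unquoted delimiter: the shell expands $DEMO_HOME before writing.
cat > "$demo_file" << EOF
export DEMO_BIN=$DEMO_HOME/bin
EOF

# Quoted delimiter: the text is written exactly as typed.
cat >> "$demo_file" << 'EOF'
export DEMO_BIN=$DEMO_HOME/bin
EOF

cat "$demo_file"
# line 1: export DEMO_BIN=/opt/example/bin
# line 2: export DEMO_BIN=$DEMO_HOME/bin
```

For hadoop-env.sh the literal form is usually what is wanted, so that PATH is evaluated each time the file is sourced.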

step 6. Set up the Hadoop configuration files

• Edit $HADOOP_HOME/conf/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

</property>

</configuration>

• Edit $HADOOP_HOME/conf/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

• Edit $HADOOP_HOME/conf/mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>
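To double-check what ended up in the three files, a property value can be read back from the shell. This is a rough sketch under stated assumptions: `get_conf_value` is a hypothetical helper, and it only handles the one-tag-per-line layout used above, not arbitrary XML.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop-style configuration file.
# $1 = config file, $2 = property name
get_conf_value() {
    awk -v want="$2" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$1"
}
```

For example, `get_conf_value "$HADOOP_HOME/conf/core-site.xml" fs.default.name` should print `hdfs://localhost:9000` with the configuration shown in this step.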

step 7. Format HDFS

With the above, the single-machine Hadoop test environment is configured. Before starting the Hadoop services, format the HDFS namenode.

$ cd /opt/hadoop-1.0.2

$ source /opt/hadoop-1.0.2/conf/hadoop-env.sh

$ hadoop namenode -format

Running the commands above reports a null pointer error, because hadoop.tmp.dir is /tmp/hadoop/hadoop-${user.name}. (The stock default is /tmp/hadoop-${user.name}; the value here is the one set in core-site.xml.)

If you want to change it, edit core-site.xml:

/opt/hadoop-1.0.2/conf$ sudo gedit core-site.xml

<!-- In: conf/core-site.xml -->

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

<description>A base for other temporary directories.</description>

</property>

Set the permissions on this path:

$ sudo mkdir -p /tmp/hadoop/hadoop-hduser

$ sudo chown hduser:hadoop /tmp/hadoop/hadoop-hduser

# ...and if you want to tighten up security, chmod from 755 to 750...

$ sudo chmod 750 /tmp/hadoop/hadoop-hduser

Running the format again now produces output like this:

[: 107: namenode: unexpected operator

12/05/07 20:47:40 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = seven7-laptop/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0.2 -r 1304954; compiled by 'hortonfo' on Sat Mar 24 23:58:21 UTC 2012

************************************************************/

12/05/07 20:47:41 INFO util.GSet: VM type = 32-bit

12/05/07 20:47:41 INFO util.GSet: 2% max memory = 17.77875 MB

12/05/07 20:47:41 INFO util.GSet: capacity = 2^22 = 4194304 entries

12/05/07 20:47:41 INFO util.GSet: recommended=4194304, actual=4194304

12/05/07 20:47:41 INFO namenode.FSNamesystem: fsOwner=hduser

12/05/07 20:47:41 INFO namenode.FSNamesystem: supergroup=supergroup

12/05/07 20:47:41 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/05/07 20:47:41 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

12/05/07 20:47:41 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

12/05/07 20:47:41 INFO namenode.NameNode: Caching file names occuring more than 10 times

12/05/07 20:47:42 INFO common.Storage: Image file of size 112 saved in 0 seconds.

12/05/07 20:47:42 INFO common.Storage: Storage directory /tmp/hadoop/hadoop-hduser/dfs/name has been successfully formatted.

12/05/07 20:47:42 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at seven7-laptop/127.0.1.1

************************************************************/
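Formatting replaces the namenode metadata, so it can be worth checking whether an image already exists before formatting again. A minimal sketch, assuming dfs.name.dir is left at its default of ${hadoop.tmp.dir}/dfs/name (as in the log above); `namenode_is_formatted` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds if a formatted namenode image is present under
# the given hadoop.tmp.dir. Hadoop 1.x writes dfs/name/current/VERSION there
# when the format completes.
# $1 = value of hadoop.tmp.dir for the current user
namenode_is_formatted() {
    [ -f "$1/dfs/name/current/VERSION" ]
}
```

A guarded format could then look like `namenode_is_formatted /tmp/hadoop/hadoop-hduser || hadoop namenode -format`.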

step 8. Start Hadoop

Next, use start-all.sh to start all the services, including the namenode and datanode:

$HADOOP_HOME/bin/start-all.sh

/opt/hadoop-1.0.2/bin$ sh ./start-all.sh

The output looks like this:

starting namenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-namenode-seven7-laptop.out

localhost:

localhost: starting datanode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-datanode-seven7-laptop.out

localhost:

localhost: starting secondarynamenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-secondarynamenode-seven7-laptop.out

starting jobtracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-jobtracker-seven7-laptop.out

localhost:

localhost: starting tasktracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-tasktracker-seven7-laptop.out

step 9. Test the installation

After startup, you can open the following address to check whether the services are running normally (Hadoop management interface, Hadoop Task Tracker status, Hadoop DFS status):

• http://localhost:50030/ - Hadoop management interface

At this point the Hadoop single-node installation is complete. Jobs will be run on this single-node cluster next.
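The web interfaces can also be probed from the command line. The sketch below assumes the Hadoop 1.x default ports (50030 for the JobTracker, 50060 for the TaskTracker, 50070 for the NameNode) and that curl is installed; both function names are hypothetical.

```shell
# Print the web UI addresses for a host, assuming Hadoop 1.x default ports.
# $1 = host name (defaults to localhost)
hadoop_ui_urls() {
    host="${1:-localhost}"
    for port in 50030 50060 50070; do
        echo "http://$host:$port/"
    done
}

# Probe each address once. Needs curl and a running cluster.
check_hadoop_uis() {
    for url in $(hadoop_ui_urls "$1"); do
        if curl -s -o /dev/null --max-time 5 "$url"; then
            echo "up   $url"
        else
            echo "down $url"
        fi
    done
}
```

Running `check_hadoop_uis localhost` after start-all.sh should report all three addresses as up if the ports were not changed in the configuration.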

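<1>. Introduction to Hadoop

Hadoop is an open-source Apache project whose main goal is to build reliable, scalable, distributed systems. Hadoop is the sum of a series of subprojects, including:

1. Hadoop Common: provides the infrastructure for the other projects

2. HDFS: a distributed file system

3. MapReduce: a software framework for distributed processing of large data sets on compute clusters; in other words, a framework that simplifies distributed programming.

4. Other projects, including Avro (a serialization system) and Cassandra (a database project)

From 从此学习网: http://www.congci.com/item/596hadoop

Here a single machine simulates Hadoop running in distributed mode, ultimately by creating multiple threads on the local machine. The main goal is to run the WordCount example that ships with Hadoop; the process is described in detail below.

(PS: I am a beginner who started with Hadoop only recently and ran into many problems while learning it, so I have written down my own practice in great detail to give as much information as possible to others interested in Hadoop. Nothing more.)

Setting up the simulated Linux environment

cygwin is used to simulate a Linux runtime environment. After installing cygwin, OpenSSH must be configured before the steps below can be carried out.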

Hadoop configuration

First, configure Hadoop:

1. In conf/hadoop-env.sh, the minimum requirement is to specify JAVA_HOME. Mine, for example, is:

export JAVA_HOME="D:\Program Files\Java\jdk1.6.0_07"

2. Only conf/hadoop-site.xml needs to be modified. By default hadoop-site.xml is not configured, and a single-machine run then executes tasks with the basic options from hadoop-default.xml.

Change hadoop-site.xml to the following:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
<property>
<name>fs.default.name</name>
<value>localhost:9000</value>
</property>
<property>
<name>mapred.job.tracker</name>
<value>localhost:9001</value>
</property>
<property>
<name>dfs.replication</name>
<value>1</value>
</property>
</configuration>

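1. Authentication configuration

Start cygwin, and start ssh with the following command:

$ net start sshd

Next, configure encrypted identity authentication. This part is critical: in a distributed setup the Datanode nodes have to report task execution information to the Namenode, and it would be a nuisance if every access to the Namenode required password verification. What I describe here is a configuration based on password-free authentication; see my other articles for reference.

The command to generate the RSA public key is:

$ ssh-keygen

When execution reaches the following step, a setting is required:

Enter file in which to save the key (/home/SHIYANJUN/.ssh/id_rsa):

Continuing, you are prompted for a passphrase, here (leave it empty, otherwise starting each daemon later will prompt for it):

Enter passphrase (empty for no passphrase):

The RSA public key is mainly used to encrypt communication between nodes. If RSA key generation proceeds as in the figure above, the key was generated correctly.

Next, generate the DSA public key with the following command:

$ ssh-keygen -t dsa

Then add the DSA public key to the authorization file authorized_keys with the following command:

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

At this point Hadoop can be run.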

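2. Preparing the data files for Hadoop to process

I am using hadoop-0.16.4, copied directly to the root of G:\, and my cygwin is installed in G:\Cygwin.

In the directory G:\hadoop-0.16.4, create an input directory and put a few TXT files in it. I prepared 7. Each file contains English words separated by spaces, since the example being run is WordCount; how much content I stored can be seen later.

3. Running

Now switch to the G:\hadoop-0.16.4 directory:

$ cd ../../cygdrive/g/hadoop-0.16.4

The job uses HDFS, Hadoop's distributed file system, so the first thing to do is format this file system with the following command:

$ bin/hadoop namenode -format

Now start the Namenode, Datanode, SecondaryNamenode and JobTracker with this command:

$ bin/start-all.sh

If you did not configure password-free ssh authentication earlier, or configured it but entered a passphrase, you will be prompted for a password each time a process starts. Imagine how painful that would be with a large number of processes.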
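To confirm that every daemon actually came up, the output of the JDK's `jps` tool can be checked for the expected process names. A minimal sketch: `missing_daemons` is a hypothetical helper that reads `jps` output on standard input and prints the names that are absent (TaskTracker is included because start-all.sh starts it as well).

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon that is not listed. Prints nothing when all five are running.
missing_daemons() {
    jps_out=$(cat)
    for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # -w matches whole words only, so SecondaryNameNode does not count
        # as NameNode.
        printf '%s\n' "$jps_out" | grep -qw "$d" || echo "$d"
    done
}
```

With a JDK on the PATH, `jps | missing_daemons` prints nothing when all daemons are up.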

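Next, copy the files prepared in the local input directory to the input directory in HDFS, so that the distributed file system manages the data files to be processed:

$ bin/hadoop dfs -put ./input input

Only now can the WordCount example that ships with Hadoop be run. Submit the job with the following command:

$ bin/hadoop jar hadoop-0.16.4-examples.jar wordcount input output

The run is shown in the figure:

The figure above shows the progress of the WordCount tool while the Job runs, in great detail.

Finally, view the processed results with this command:

$ bin/hadoop dfs -cat output/part-00000

Last, stop the Hadoop processes with this command:

$ bin/stop-all.sh

That is the whole process.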
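For a small input, the result in output/part-00000 can be cross-checked against a count computed locally. The sketch below is not Hadoop's implementation, only a shell approximation: it splits on whitespace and prints `word<TAB>count` lines in byte order, which is roughly the shape WordCount produces. `local_wordcount` is a hypothetical helper name.

```shell
# Hypothetical helper: count whitespace-separated words across all files in a
# directory and print "word<TAB>count", sorted by byte order.
# $1 = local input directory
local_wordcount() {
    cat "$1"/* |
        awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
             END { for (w in count) printf "%s\t%d\n", w, count[w] }' |
        LC_ALL=C sort
}
```

One way to compare is `local_wordcount ./input > local.txt`, then `bin/hadoop dfs -cat output/part-00000 > hdfs.txt` and `diff local.txt hdfs.txt`.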

http://hi.baidu.com/shirdrn/blog/item/33c762fecf9811375c600892.html

[Hadoop] Error: JAVA_HOME is not set

Saturday, January 22, 2011, 19:40

When the namenode ran the startup script %HADOOP_HOME%/bin/start-dfs.sh, the datanode reported an error:

Error: JAVA_HOME is not set

The cause is that %HADOOP_HOME%/conf/hadoop-env.sh lacks a JAVA_HOME definition. Just add the following to hadoop-env.sh:

JAVA_HOME=/your/jdk/root/path

Warning: $HADOOP_HOME is deprecated.

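Looking at the hadoop-1.0.0/bin/hadoop script and the hadoop-config.sh script, I found that the scripts test whether the HADOOP_HOME environment variable is set. My environment does not need HADOOP_HOME set at all.

Reference:

HADOOP-7398

Solution 1: edit .bash_profile in the HOME directory and remove the HADOOP_HOME setting. Run the hadoop fs command again and the warning is gone.

Solution 2: edit .bash_profile in the HOME directory and add the following environment variable, after which the warning disappears: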

export HADOOP_HOME_WARN_SUPPRESS=1
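When adding such a line to .bash_profile from a script, it is easy to append it twice. A minimal sketch of an idempotent append follows; `append_once` is a hypothetical helper, not part of Hadoop.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not
# already present.
# $1 = file, $2 = line to add
append_once() {
    file="$1"
    line="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example: `append_once ~/.bash_profile 'export HADOOP_HOME_WARN_SUPPRESS=1'`. Running it a second time leaves the file unchanged.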

login as: root

root@192.168.33.10's password:

Access denied

root@192.168.33.10's password:

Last login: Tue May 15 17:33:30 2012 from yunwei2.uid5a.cn

[root@yunwei2 ~]# ls

============== jdk-6u31-linux-amd64.rpm

1 jdk-6u31-linux-x64-rpm.bin

102 linux.x64_11gR2_database_1of2.zip

141 linux.x64_11gR2_database_2of2.zip

160 ll.txt

25 ***Load

36 packet

81 ReadPacket

90 Remote

anaconda-ks.cfg root@192.168.33.9

compat-libstdc++-33-3.2.3-61.i386.rpm sql

compat-libstdc++-33-3.2.3-61.x86_64.rpm squid-2.6.STABLE23

Desktop squid-2.6.STABLE23.tar.gz

End squid-3.1.19

esx-3.5.0-64607 squid-3.1.19.tar.gz

esx-3.5.0-64607.zip subversion-1.7.4

========findAllBarcode subversion-1.7.4.tar.gz

getCitySql Templates

getProvince UID

index.html Unexpected

install.log webbench-1.5

install.log.syslog webbench-1.5.tar.gz

[root@yunwei2 ~]# java -version

java version "1.6.0_31"

Java(TM) SE Runtime Environment (build 1.6.0_31-b04)

Java HotSpot(TM) 64-Bit Server VM (build 20.6-b01, mixed mode)

[root@yunwei2 ~]# cd /opt

[root@yunwei2 opt]# ls

apache-tomcat-6.0.35 jk.log.3987.lock jk.log.6205.lock

apache-tomcat-6.0.35.tar.gz jk.log.4398 jprofiler6

apr-0.9.20.tar.gz jk.log.4398.lock jprofiler_linux_6_0_2.tar.gz

apr-1.4.6 jk.log.5969 oracle

apr-1.4.6.tar.gz jk.log.5969.lock oraInventory

apr-iconv-1.2.1 jk.log.5972 sqlite-amalgamation-3071100

apr-iconv-1.2.1.tar.gz jk.log.5972.lock sqlite-amalgamation-3071100.zip

apr-util-1.4.1 jk.log.5977 subversion-1.7.4

apr-util-1.4.1.tar.gz jk.log.5977.lock subversion-1.7.4.tar.gz

hadoop-1.0.2 jk.log.5981 sun

hadoop-1.0.2-bin.tar.gz jk.log.5981.lock tomcatSS

jk.log.3864 jk.log.5991 webbench

jk.log.3864.lock jk.log.5991.lock

jk.log.3987 jk.log.6205

[root@yunwei2 opt]# rm -rf hadoop-1.0.2

[root@yunwei2 opt]# tar -zxvf hadoop-1.0.2-bin.tar.gz

hadoop-1.0.2/

hadoop-1.0.2/bin/

hadoop-1.0.2/c++/

hadoop-1.0.2/c++/Linux-amd64-64/

hadoop-1.0.2/c++/Linux-amd64-64/include/

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/

hadoop-1.0.2/c++/Linux-amd64-64/lib/

hadoop-1.0.2/c++/Linux-i386-32/

hadoop-1.0.2/c++/Linux-i386-32/include/

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/

hadoop-1.0.2/c++/Linux-i386-32/lib/

hadoop-1.0.2/conf/

hadoop-1.0.2/contrib/

hadoop-1.0.2/contrib/datajoin/

hadoop-1.0.2/contrib/failmon/

hadoop-1.0.2/contrib/gridmix/

hadoop-1.0.2/contrib/hdfsproxy/

hadoop-1.0.2/contrib/hdfsproxy/bin/

hadoop-1.0.2/contrib/hdfsproxy/conf/

hadoop-1.0.2/contrib/hdfsproxy/logs/

hadoop-1.0.2/contrib/hod/

hadoop-1.0.2/contrib/hod/bin/

hadoop-1.0.2/contrib/hod/conf/

hadoop-1.0.2/contrib/hod/hodlib/

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/

hadoop-1.0.2/contrib/hod/hodlib/Common/

hadoop-1.0.2/contrib/hod/hodlib/GridServices/

hadoop-1.0.2/contrib/hod/hodlib/Hod/

hadoop-1.0.2/contrib/hod/hodlib/HodRing/

hadoop-1.0.2/contrib/hod/hodlib/NodePools/

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/

hadoop-1.0.2/contrib/hod/ivy/

hadoop-1.0.2/contrib/hod/support/

hadoop-1.0.2/contrib/hod/testing/

hadoop-1.0.2/contrib/index/

hadoop-1.0.2/contrib/streaming/

hadoop-1.0.2/contrib/vaidya/

hadoop-1.0.2/contrib/vaidya/bin/

hadoop-1.0.2/contrib/vaidya/conf/

hadoop-1.0.2/ivy/

hadoop-1.0.2/lib/

hadoop-1.0.2/lib/jdiff/

hadoop-1.0.2/lib/jsp-2.1/

hadoop-1.0.2/lib/native/

hadoop-1.0.2/lib/native/Linux-amd64-64/

hadoop-1.0.2/lib/native/Linux-i386-32/

hadoop-1.0.2/libexec/

hadoop-1.0.2/sbin/

hadoop-1.0.2/share/

hadoop-1.0.2/share/hadoop/

hadoop-1.0.2/share/hadoop/templates/

hadoop-1.0.2/share/hadoop/templates/conf/

hadoop-1.0.2/webapps/

hadoop-1.0.2/webapps/datanode/

hadoop-1.0.2/webapps/datanode/WEB-INF/

hadoop-1.0.2/webapps/hdfs/

hadoop-1.0.2/webapps/hdfs/WEB-INF/

hadoop-1.0.2/webapps/history/

hadoop-1.0.2/webapps/history/WEB-INF/

hadoop-1.0.2/webapps/job/

hadoop-1.0.2/webapps/job/WEB-INF/

hadoop-1.0.2/webapps/secondary/

hadoop-1.0.2/webapps/secondary/WEB-INF/

hadoop-1.0.2/webapps/static/

hadoop-1.0.2/webapps/task/

hadoop-1.0.2/webapps/task/WEB-INF/

hadoop-1.0.2/CHANGES.txt

hadoop-1.0.2/LICENSE.txt

hadoop-1.0.2/NOTICE.txt

hadoop-1.0.2/README.txt

hadoop-1.0.2/build.xml

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/Pipes.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/SerialUtils.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/StringUtils.hh

hadoop-1.0.2/c++/Linux-amd64-64/include/hadoop/TemplateFactory.hh

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhadooppipes.a

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhadooputils.a

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.la

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so.0

hadoop-1.0.2/c++/Linux-amd64-64/lib/libhdfs.so.0.0.0

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/Pipes.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/SerialUtils.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/StringUtils.hh

hadoop-1.0.2/c++/Linux-i386-32/include/hadoop/TemplateFactory.hh

hadoop-1.0.2/c++/Linux-i386-32/lib/libhadooppipes.a

hadoop-1.0.2/c++/Linux-i386-32/lib/libhadooputils.a

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.la

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so.0

hadoop-1.0.2/c++/Linux-i386-32/lib/libhdfs.so.0.0.0

hadoop-1.0.2/conf/capacity-scheduler.xml

hadoop-1.0.2/conf/configuration.xsl

hadoop-1.0.2/conf/core-site.xml

hadoop-1.0.2/conf/fair-scheduler.xml

hadoop-1.0.2/conf/hadoop-env.sh

hadoop-1.0.2/conf/hadoop-metrics2.properties

hadoop-1.0.2/conf/hadoop-policy.xml

hadoop-1.0.2/conf/hdfs-site.xml

hadoop-1.0.2/conf/log4j.properties

hadoop-1.0.2/conf/mapred-queue-acls.xml

hadoop-1.0.2/conf/mapred-site.xml

hadoop-1.0.2/conf/masters

hadoop-1.0.2/conf/slaves

hadoop-1.0.2/conf/ssl-client.xml.example

hadoop-1.0.2/conf/ssl-server.xml.example

hadoop-1.0.2/conf/taskcontroller.cfg

hadoop-1.0.2/contrib/datajoin/hadoop-datajoin-1.0.2.jar

hadoop-1.0.2/contrib/failmon/hadoop-failmon-1.0.2.jar

hadoop-1.0.2/contrib/gridmix/hadoop-gridmix-1.0.2.jar

hadoop-1.0.2/contrib/hdfsproxy/README

hadoop-1.0.2/contrib/hdfsproxy/build.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/configuration.xsl

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-default.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-env.sh

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-env.sh.template

hadoop-1.0.2/contrib/hdfsproxy/conf/hdfsproxy-hosts

hadoop-1.0.2/contrib/hdfsproxy/conf/log4j.properties

hadoop-1.0.2/contrib/hdfsproxy/conf/ssl-server.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/tomcat-forward-web.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/tomcat-web.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/user-certs.xml

hadoop-1.0.2/contrib/hdfsproxy/conf/user-permissions.xml

hadoop-1.0.2/contrib/hdfsproxy/hdfsproxy-2.0.jar

hadoop-1.0.2/contrib/hod/CHANGES.txt

hadoop-1.0.2/contrib/hod/README

hadoop-1.0.2/contrib/hod/build.xml

hadoop-1.0.2/contrib/hod/conf/hodrc

hadoop-1.0.2/contrib/hod/config.txt

hadoop-1.0.2/contrib/hod/getting_started.txt

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/AllocationManagers/goldAllocationManager.py

hadoop-1.0.2/contrib/hod/hodlib/Common/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Common/allocationManagerUtil.py

hadoop-1.0.2/contrib/hod/hodlib/Common/desc.py

hadoop-1.0.2/contrib/hod/hodlib/Common/descGenerator.py

hadoop-1.0.2/contrib/hod/hodlib/Common/hodsvc.py

hadoop-1.0.2/contrib/hod/hodlib/Common/logger.py

hadoop-1.0.2/contrib/hod/hodlib/Common/miniHTMLParser.py

hadoop-1.0.2/contrib/hod/hodlib/Common/nodepoolutil.py

hadoop-1.0.2/contrib/hod/hodlib/Common/setup.py

hadoop-1.0.2/contrib/hod/hodlib/Common/socketServers.py

hadoop-1.0.2/contrib/hod/hodlib/Common/tcp.py

hadoop-1.0.2/contrib/hod/hodlib/Common/threads.py

hadoop-1.0.2/contrib/hod/hodlib/Common/types.py

hadoop-1.0.2/contrib/hod/hodlib/Common/util.py

hadoop-1.0.2/contrib/hod/hodlib/Common/xmlrpc.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/hdfs.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/mapred.py

hadoop-1.0.2/contrib/hod/hodlib/GridServices/service.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/hadoop.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/hod.py

hadoop-1.0.2/contrib/hod/hodlib/Hod/nodePool.py

hadoop-1.0.2/contrib/hod/hodlib/HodRing/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/HodRing/hodRing.py

hadoop-1.0.2/contrib/hod/hodlib/NodePools/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/NodePools/torque.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/idleJobTracker.py

hadoop-1.0.2/contrib/hod/hodlib/RingMaster/ringMaster.py

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/Schedulers/torque.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceProxy/serviceProxy.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/__init__.py

hadoop-1.0.2/contrib/hod/hodlib/ServiceRegistry/serviceRegistry.py

hadoop-1.0.2/contrib/hod/hodlib/__init__.py

hadoop-1.0.2/contrib/hod/ivy.xml

hadoop-1.0.2/contrib/hod/ivy/libraries.properties

hadoop-1.0.2/contrib/hod/support/checklimits.sh

hadoop-1.0.2/contrib/hod/support/logcondense.py

hadoop-1.0.2/contrib/hod/testing/__init__.py

hadoop-1.0.2/contrib/hod/testing/helper.py

hadoop-1.0.2/contrib/hod/testing/lib.py

hadoop-1.0.2/contrib/hod/testing/main.py

hadoop-1.0.2/contrib/hod/testing/testHadoop.py

hadoop-1.0.2/contrib/hod/testing/testHod.py

hadoop-1.0.2/contrib/hod/testing/testHodCleanup.py

hadoop-1.0.2/contrib/hod/testing/testHodRing.py

hadoop-1.0.2/contrib/hod/testing/testModule.py

hadoop-1.0.2/contrib/hod/testing/testRingmasterRPCs.py

hadoop-1.0.2/contrib/hod/testing/testThreads.py

hadoop-1.0.2/contrib/hod/testing/testTypes.py

hadoop-1.0.2/contrib/hod/testing/testUtil.py

hadoop-1.0.2/contrib/hod/testing/testXmlrpc.py

hadoop-1.0.2/contrib/index/hadoop-index-1.0.2.jar

hadoop-1.0.2/contrib/streaming/hadoop-streaming-1.0.2.jar

hadoop-1.0.2/contrib/vaidya/conf/postex_diagnosis_tests.xml

hadoop-1.0.2/contrib/vaidya/hadoop-vaidya-1.0.2.jar

hadoop-1.0.2/hadoop-ant-1.0.2.jar

hadoop-1.0.2/hadoop-client-1.0.2.jar

hadoop-1.0.2/hadoop-core-1.0.2.jar

hadoop-1.0.2/hadoop-examples-1.0.2.jar

hadoop-1.0.2/hadoop-minicluster-1.0.2.jar

hadoop-1.0.2/hadoop-test-1.0.2.jar

hadoop-1.0.2/hadoop-tools-1.0.2.jar

hadoop-1.0.2/ivy.xml

hadoop-1.0.2/ivy/hadoop-client-pom-template.xml

hadoop-1.0.2/ivy/hadoop-core-pom-template.xml

hadoop-1.0.2/ivy/hadoop-core.pom

hadoop-1.0.2/ivy/hadoop-examples-pom-template.xml

hadoop-1.0.2/ivy/hadoop-minicluster-pom-template.xml

hadoop-1.0.2/ivy/hadoop-streaming-pom-template.xml

hadoop-1.0.2/ivy/hadoop-test-pom-template.xml

hadoop-1.0.2/ivy/hadoop-tools-pom-template.xml

hadoop-1.0.2/ivy/ivy-2.1.0.jar

hadoop-1.0.2/ivy/ivysettings.xml

hadoop-1.0.2/ivy/libraries.properties

hadoop-1.0.2/lib/asm-3.2.jar

hadoop-1.0.2/lib/aspectjrt-1.6.5.jar

hadoop-1.0.2/lib/aspectjtools-1.6.5.jar

hadoop-1.0.2/lib/commons-beanutils-1.7.0.jar

hadoop-1.0.2/lib/commons-beanutils-core-1.8.0.jar

hadoop-1.0.2/lib/commons-cli-1.2.jar

hadoop-1.0.2/lib/commons-codec-1.4.jar

hadoop-1.0.2/lib/commons-collections-3.2.1.jar

hadoop-1.0.2/lib/commons-configuration-1.6.jar

hadoop-1.0.2/lib/commons-daemon-1.0.1.jar

hadoop-1.0.2/lib/commons-digester-1.8.jar

hadoop-1.0.2/lib/commons-el-1.0.jar

hadoop-1.0.2/lib/commons-httpclient-3.0.1.jar

hadoop-1.0.2/lib/commons-lang-2.4.jar

hadoop-1.0.2/lib/commons-logging-1.1.1.jar

hadoop-1.0.2/lib/commons-logging-api-1.0.4.jar

hadoop-1.0.2/lib/commons-math-2.1.jar

hadoop-1.0.2/lib/commons-net-1.4.1.jar

hadoop-1.0.2/lib/core-3.1.1.jar

hadoop-1.0.2/lib/hadoop-capacity-scheduler-1.0.2.jar

hadoop-1.0.2/lib/hadoop-fairscheduler-1.0.2.jar

hadoop-1.0.2/lib/hadoop-thriftfs-1.0.2.jar

hadoop-1.0.2/lib/hsqldb-1.8.0.10.LICENSE.txt

hadoop-1.0.2/lib/hsqldb-1.8.0.10.jar

hadoop-1.0.2/lib/jackson-core-asl-1.8.8.jar

hadoop-1.0.2/lib/jackson-mapper-asl-1.8.8.jar

hadoop-1.0.2/lib/jasper-compiler-5.5.12.jar

hadoop-1.0.2/lib/jasper-runtime-5.5.12.jar

hadoop-1.0.2/lib/jdeb-0.8.jar

hadoop-1.0.2/lib/jdiff/hadoop_0.17.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.2.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.18.3.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.19.2.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.20.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_0.20.205.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.0.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.1.xml

hadoop-1.0.2/lib/jdiff/hadoop_1.0.2.xml

hadoop-1.0.2/lib/jersey-core-1.8.jar

hadoop-1.0.2/lib/jersey-json-1.8.jar

hadoop-1.0.2/lib/jersey-server-1.8.jar

hadoop-1.0.2/lib/jets3t-0.6.1.jar

hadoop-1.0.2/lib/jetty-6.1.26.jar

hadoop-1.0.2/lib/jetty-util-6.1.26.jar

hadoop-1.0.2/lib/jsch-0.1.42.jar

hadoop-1.0.2/lib/jsp-2.1/jsp-2.1.jar

hadoop-1.0.2/lib/jsp-2.1/jsp-api-2.1.jar

hadoop-1.0.2/lib/junit-4.5.jar

hadoop-1.0.2/lib/kfs-0.2.2.jar

hadoop-1.0.2/lib/kfs-0.2.LICENSE.txt

hadoop-1.0.2/lib/log4j-1.2.15.jar

hadoop-1.0.2/lib/mockito-all-1.8.5.jar

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.a

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.la

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so.1

hadoop-1.0.2/lib/native/Linux-amd64-64/libhadoop.so.1.0.0

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.a

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.la

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so.1

hadoop-1.0.2/lib/native/Linux-i386-32/libhadoop.so.1.0.0

hadoop-1.0.2/lib/oro-2.0.8.jar

hadoop-1.0.2/lib/servlet-api-2.5-20081211.jar

hadoop-1.0.2/lib/slf4j-api-1.4.3.jar

hadoop-1.0.2/lib/slf4j-log4j12-1.4.3.jar

hadoop-1.0.2/lib/xmlenc-0.52.jar

hadoop-1.0.2/share/hadoop/templates/conf/capacity-scheduler.xml

hadoop-1.0.2/share/hadoop/templates/conf/commons-logging.properties

hadoop-1.0.2/share/hadoop/templates/conf/core-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-env.sh

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-metrics2.properties

hadoop-1.0.2/share/hadoop/templates/conf/hadoop-policy.xml

hadoop-1.0.2/share/hadoop/templates/conf/hdfs-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/log4j.properties

hadoop-1.0.2/share/hadoop/templates/conf/mapred-queue-acls.xml

hadoop-1.0.2/share/hadoop/templates/conf/mapred-site.xml

hadoop-1.0.2/share/hadoop/templates/conf/taskcontroller.cfg

hadoop-1.0.2/webapps/datanode/WEB-INF/web.xml

hadoop-1.0.2/webapps/hdfs/WEB-INF/web.xml

hadoop-1.0.2/webapps/hdfs/index.html

hadoop-1.0.2/webapps/history/WEB-INF/web.xml

hadoop-1.0.2/webapps/job/WEB-INF/web.xml

hadoop-1.0.2/webapps/job/analysejobhistory.jsp

hadoop-1.0.2/webapps/job/gethistory.jsp

hadoop-1.0.2/webapps/job/index.html

hadoop-1.0.2/webapps/job/job_authorization_error.jsp

hadoop-1.0.2/webapps/job/jobblacklistedtrackers.jsp

hadoop-1.0.2/webapps/job/jobconf.jsp

hadoop-1.0.2/webapps/job/jobconf_history.jsp

hadoop-1.0.2/webapps/job/jobdetails.jsp

hadoop-1.0.2/webapps/job/jobdetailshistory.jsp

hadoop-1.0.2/webapps/job/jobfailures.jsp

hadoop-1.0.2/webapps/job/jobhistory.jsp

hadoop-1.0.2/webapps/job/jobhistoryhome.jsp

hadoop-1.0.2/webapps/job/jobqueue_details.jsp

hadoop-1.0.2/webapps/job/jobtasks.jsp

hadoop-1.0.2/webapps/job/jobtaskshistory.jsp

hadoop-1.0.2/webapps/job/jobtracker.jsp

hadoop-1.0.2/webapps/job/legacyjobhistory.jsp

hadoop-1.0.2/webapps/job/loadhistory.jsp

hadoop-1.0.2/webapps/job/machines.jsp

hadoop-1.0.2/webapps/job/taskdetails.jsp

hadoop-1.0.2/webapps/job/taskdetailshistory.jsp

hadoop-1.0.2/webapps/job/taskstats.jsp

hadoop-1.0.2/webapps/job/taskstatshistory.jsp

hadoop-1.0.2/webapps/static/hadoop-logo.jpg

hadoop-1.0.2/webapps/static/hadoop.css

hadoop-1.0.2/webapps/static/jobconf.xsl

hadoop-1.0.2/webapps/static/jobtracker.js

hadoop-1.0.2/webapps/static/sorttable.js

hadoop-1.0.2/webapps/task/WEB-INF/web.xml

hadoop-1.0.2/webapps/task/index.html

hadoop-1.0.2/src/contrib/ec2/bin/image/

hadoop-1.0.2/bin/hadoop

hadoop-1.0.2/bin/hadoop-config.sh

hadoop-1.0.2/bin/hadoop-daemon.sh

hadoop-1.0.2/bin/hadoop-daemons.sh

hadoop-1.0.2/bin/rcc

hadoop-1.0.2/bin/slaves.sh

hadoop-1.0.2/bin/start-all.sh

hadoop-1.0.2/bin/start-balancer.sh

hadoop-1.0.2/bin/start-dfs.sh

hadoop-1.0.2/bin/start-jobhistoryserver.sh

hadoop-1.0.2/bin/start-mapred.sh

hadoop-1.0.2/bin/stop-all.sh

hadoop-1.0.2/bin/stop-balancer.sh

hadoop-1.0.2/bin/stop-dfs.sh

hadoop-1.0.2/bin/stop-jobhistoryserver.sh

hadoop-1.0.2/bin/stop-mapred.sh

hadoop-1.0.2/bin/task-controller

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-config.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-daemon.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-daemons.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/hdfsproxy-slaves.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/start-hdfsproxy.sh

hadoop-1.0.2/contrib/hdfsproxy/bin/stop-hdfsproxy.sh

hadoop-1.0.2/contrib/hod/bin/VERSION

hadoop-1.0.2/contrib/hod/bin/checknodes

hadoop-1.0.2/contrib/hod/bin/hod

hadoop-1.0.2/contrib/hod/bin/hodcleanup

hadoop-1.0.2/contrib/hod/bin/hodring

hadoop-1.0.2/contrib/hod/bin/ringmaster

hadoop-1.0.2/contrib/hod/bin/verify-account

hadoop-1.0.2/contrib/vaidya/bin/vaidya.sh

hadoop-1.0.2/libexec/hadoop-config.sh

hadoop-1.0.2/libexec/jsvc.amd64

hadoop-1.0.2/sbin/hadoop-create-user.sh

hadoop-1.0.2/sbin/hadoop-setup-applications.sh

hadoop-1.0.2/sbin/hadoop-setup-conf.sh

hadoop-1.0.2/sbin/hadoop-setup-hdfs.sh

hadoop-1.0.2/sbin/hadoop-setup-single-node.sh

hadoop-1.0.2/sbin/hadoop-validate-setup.sh

hadoop-1.0.2/sbin/update-hadoop-env.sh

hadoop-1.0.2/src/contrib/ec2/bin/cmd-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/create-hadoop-image

hadoop-1.0.2/src/contrib/ec2/bin/delete-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2-env.sh

hadoop-1.0.2/src/contrib/ec2/bin/hadoop-ec2-init-remote.sh

hadoop-1.0.2/src/contrib/ec2/bin/image/create-hadoop-image-remote

hadoop-1.0.2/src/contrib/ec2/bin/image/ec2-run-user-data

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-cluster

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-master

hadoop-1.0.2/src/contrib/ec2/bin/launch-hadoop-slaves

hadoop-1.0.2/src/contrib/ec2/bin/list-hadoop-clusters

hadoop-1.0.2/src/contrib/ec2/bin/terminate-hadoop-cluster