Several Methods for Background Removal in OpenCV

2012-03-29 18:59

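1. Skin color detection

Skin color extraction is a common technique in human-computer interaction. Skin color is a distinctive feature of the human body, so skin regions can be separated quickly from a complex background. Two common ways of extracting skin color are described below:

(1) Skin color extraction in HSV space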

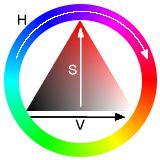

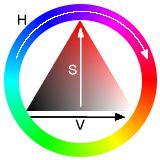

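The HSV color space is a cone-shaped model, as shown in the figures above.

Hue (H) is the basic attribute of a color, i.e. what we normally call the color name, such as red or yellow. It is read from the position on the standard color wheel as an angle from 0 to 360 degrees (some conventions express it as 0 to 100% instead). Saturation (S) is the purity of the color: the higher the value, the purer the color, and the lower the value, the grayer it becomes. It ranges from 0 to 100%. Value (V), also called brightness, ranges from 0 to 100%.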
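When these definitions are applied to 8-bit images, OpenCV's `CV_BGR2HSV` conversion stores H as half the angle (0 to 179) and scales S and V to 0 to 255, so thresholds must be chosen on that scale. The sketch below works through the conversion for a single pixel in plain C++, without OpenCV. `Hsv8` and `bgrToHsv8` are hypothetical names introduced for illustration, and the rounding is an assumption that may differ from OpenCV's by one level.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper type: one pixel on the 8-bit HSV scale.
struct Hsv8 {
    int h;  // 0..179 (degrees / 2)
    int s;  // 0..255
    int v;  // 0..255
};

// Convert one 8-bit BGR pixel to HSV with the textbook hexcone formula,
// then rescale: H is halved, S is scaled to 0..255, V is the maximum channel.
Hsv8 bgrToHsv8(int b, int g, int r) {
    assert(b >= 0 && b <= 255 && g >= 0 && g <= 255 && r >= 0 && r <= 255);
    const int maxc = std::max(b, std::max(g, r));
    const int minc = std::min(b, std::min(g, r));
    const int delta = maxc - minc;

    double hueDeg = 0.0;  // hue is undefined for gray pixels; 0 is used here
    if (delta != 0) {
        if (maxc == r)      hueDeg = 60.0 * (g - b) / delta;
        else if (maxc == g) hueDeg = 120.0 + 60.0 * (b - r) / delta;
        else                hueDeg = 240.0 + 60.0 * (r - g) / delta;
        if (hueDeg < 0.0) hueDeg += 360.0;
    }
    const double sat = (maxc == 0) ? 0.0 : 255.0 * delta / maxc;

    Hsv8 out;
    out.h = static_cast<int>(std::lround(hueDeg / 2.0)) % 180;
    out.s = static_cast<int>(std::lround(sat));
    out.v = maxc;
    return out;
}
```

For example, a light orange pixel with B=120, G=160, R=220 has a hue of 24 degrees, which becomes H=12 on the 8-bit scale, with S=116 and V=220.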

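Given the range that skin color occupies in the HSV components, the skin-colored parts of an image can be detected quite simply. The source code of the skin detection function, which thresholds the H and S channels, follows: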

    // Detects skin-colored pixels by thresholding the H and S channels.
    // pImage:  8-bit, 3-channel BGR input image.
    // process: 8-bit, 1-channel output mask of the same size.
    // lower is the (exclusive) upper bound for H, upper the (exclusive) upper bound for S.
    void skinDetectionHSV(IplImage* pImage, int lower, int upper, IplImage* process)
    {
        CvSize imgSize = cvGetSize(pImage);

        // Allocate the working images
        IplImage* pImageHSV = cvCreateImage(imgSize, IPL_DEPTH_8U, 3);
        IplImage* pImageH = cvCreateImage(imgSize, IPL_DEPTH_8U, 1);
        IplImage* pImageS = cvCreateImage(imgSize, IPL_DEPTH_8U, 1);
        IplImage* tmpS = cvCreateImage(imgSize, IPL_DEPTH_8U, 1);
        IplImage* tmpH = cvCreateImage(imgSize, IPL_DEPTH_8U, 1);
        IplImage* pImageProcessed = cvCreateImage(imgSize, IPL_DEPTH_8U, 1);
        IplImage* pyrImage = cvCreateImage(cvSize(pImage->width / 2, pImage->height / 2), IPL_DEPTH_8U, 1);

        // Convert the BGR image to HSV
        cvCvtColor(pImage, pImageHSV, CV_BGR2HSV);

        // Split out the H and S channels (V is not used)
        cvCvtPixToPlane(pImageHSV, pImageH, pImageS, NULL, NULL);

        // Keep pixels with H in [0, lower) and S in [26, upper), then AND the two masks
        cvInRangeS(pImageH, cvScalar(0.0, 0.0, 0, 0), cvScalar(lower, 0.0, 0, 0), tmpH);
        cvInRangeS(pImageS, cvScalar(26, 0.0, 0, 0), cvScalar(upper, 0.0, 0, 0), tmpS);
        cvAnd(tmpH, tmpS, pImageProcessed, 0);

        // Optional pyramid smoothing (disabled)
        //cvPyrDown(pImageProcessed,pyrImage,CV_GAUSSIAN_5x5);
        //cvPyrUp(pyrImage,pImageProcessed,CV_GAUSSIAN_5x5);

        // Erode twice and dilate once to remove small specks
        cvErode(pImageProcessed, pImageProcessed, 0, 2);
        cvDilate(pImageProcessed, pImageProcessed, 0, 1);

        cvCopy(pImageProcessed, process, 0);

        // Clean up; each image is released exactly once
        cvReleaseImage(&pyrImage);
        cvReleaseImage(&pImageHSV);
        cvReleaseImage(&pImageH);
        cvReleaseImage(&pImageS);
        cvReleaseImage(&tmpH);
        cvReleaseImage(&tmpS);
        cvReleaseImage(&pImageProcessed);
    }
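The function above cleans its mask with two erosion passes followed by one dilation. As a rough illustration of what those calls do, the sketch below implements a 3x3 erosion and dilation on a plain row-major mask, without OpenCV. The `Mask` alias and `morph3x3` are hypothetical names, and skipping out-of-image neighbors is an assumption about border handling made for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mask type: row-major pixels holding 0 or 255.
using Mask = std::vector<unsigned char>;

// 3x3 morphology on a binary mask.
// erode == true : each pixel becomes the minimum of its 3x3 neighborhood.
// erode == false: each pixel becomes the maximum (dilation).
// Neighbors outside the image are skipped.
Mask morph3x3(const Mask& src, int width, int height, bool erode) {
    assert(width > 0 && height > 0);
    assert(src.size() == static_cast<std::size_t>(width) * height);
    Mask dst(src.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            unsigned char best = erode ? 255 : 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int ny = y + dy;
                    const int nx = x + dx;
                    if (ny < 0 || ny >= height || nx < 0 || nx >= width) continue;
                    const unsigned char v = src[ny * width + nx];
                    best = erode ? std::min(best, v) : std::max(best, v);
                }
            }
            dst[y * width + x] = best;
        }
    }
    return dst;
}
```

Eroding first removes isolated specks that are smaller than the 3x3 neighborhood; dilating afterwards grows the surviving regions back toward their original size.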

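(2) Skin color extraction in YCrCb space

YCrCb is another color space, closely related to YUV. Y is the luminance component. Skin detection is quite sensitive to brightness, so converting the RGB image captured by the camera to YCrCb and thresholding only a chroma component removes most of the influence of brightness on the result. The source code of a YCrCb-based skin detection function follows: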

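    // Detects skin-colored pixels by thresholding the Cb channel to [lower, upper].
    // imageRGB:     8-bit, 3-channel input image in BGR order.
    // imgProcessed: 8-bit, 1-channel output mask of the same size.
    void skinDetectionYCrCb(IplImage* imageRGB, int lower, int upper, IplImage* imgProcessed)
    {
        assert(imageRGB->nChannels == 3);

        IplImage* imageYCrCb = cvCreateImage(cvGetSize(imageRGB), 8, 3);
        IplImage* imageCb = cvCreateImage(cvGetSize(imageRGB), 8, 1);

        cvCvtColor(imageRGB, imageYCrCb, CV_BGR2YCrCb);
        cvSplit(imageYCrCb, 0, 0, imageCb, 0); // the third channel is Cb

        // Binarize: 255 where Cb lies in [lower, upper], 0 elsewhere
        for (int h = 0; h < imageCb->height; h++)
        {
            for (int w = 0; w < imageCb->width; w++)
            {
                unsigned char* p = (unsigned char*)(imageCb->imageData + h * imageCb->widthStep + w);
                if (*p <= upper && *p >= lower)
                {
                    *p = 255;
                }
                else
                {
                    *p = 0;
                }
            }
        }

        cvCopy(imageCb, imgProcessed, NULL);

        // Release the temporary images
        cvReleaseImage(&imageYCrCb);
        cvReleaseImage(&imageCb);
    }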
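To make the role of the Cb channel concrete, the following sketch computes Cb for a single BGR pixel from the conversion formula documented for OpenCV (Y = 0.299 R + 0.587 G + 0.114 B, Cb = (B - Y) * 0.564 + 128) and applies the same range test as the loop above. `cbOfPixel` and `skinMaskValue` are hypothetical helper names, and the floating-point rounding here may differ by one level from OpenCV's fixed-point implementation.

```cpp
#include <cassert>
#include <cmath>

// Cb of one 8-bit BGR pixel, clamped to 0..255.
int cbOfPixel(int b, int g, int r) {
    assert(b >= 0 && b <= 255 && g >= 0 && g <= 255 && r >= 0 && r <= 255);
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;  // luminance
    const double cb = (b - y) * 0.564 + 128.0;           // blue-difference chroma
    long rounded = std::lround(cb);
    if (rounded < 0) rounded = 0;
    if (rounded > 255) rounded = 255;
    return static_cast<int>(rounded);
}

// Same decision rule as the per-pixel loop: 255 inside [lower, upper], else 0.
unsigned char skinMaskValue(int cb, int lower, int upper) {
    return (cb >= lower && cb <= upper) ? 255 : 0;
}
```

A neutral gray pixel gives Cb = 128 regardless of how bright it is, which is why thresholding chroma is less sensitive to lighting than thresholding RGB values directly. Suitable values for `lower` and `upper` depend on the camera and scene and should be tuned experimentally.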

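2. Background removal based on a Gaussian mixture model

Background removal with a Gaussian mixture model is another commonly used method, applied frequently to detection in video. It is well suited to detecting features in dynamic video because the model keeps foreground and background separate. The criterion for separating them is how quickly each pixel changes: slowly changing pixels are learned as background, while rapidly changing pixels are treated as foreground.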

    #include "stdafx.h"
    #include "cv.h"
    #include "highgui.h"
    #include "cxtypes.h"
    #include "cvaux.h"
    #include <cstdio>
    #include <iostream>

    using namespace std;

    int _tmain(int argc, _TCHAR* argv[])
    {
        //IplImage* pFirstFrame = NULL;
        IplImage* pFrame = NULL;    // current frame, owned by the capture
        IplImage* pFrImg = NULL;    // foreground mask
        IplImage* pBkImg = NULL;    // background image
        IplImage* FirstImg = NULL;
        IplImage* pyrImg = NULL;
        CvCapture* pCapture = NULL;
        int nFrmNum = 0;
        int first = 0, next = 0;
        int thresh = 0;

        cvNamedWindow("video", 0);
        //cvNamedWindow("background",0);
        cvNamedWindow("foreground", 0);
        cvResizeWindow("video", 400, 400);
        cvResizeWindow("foreground", 400, 400);
        //cvCreateTrackbar("thresh","foreground",&thresh,255,NULL);
        //cvMoveWindow("background",360,0);
        //cvMoveWindow("foreground",0,0);

        // Index 1 selects the second camera; use 0 for the default one
        if (!(pCapture = cvCaptureFromCAM(1)))
        {
            printf("Could not initialize camera, please check it!\n");
            return -1;
        }

        CvGaussBGModel* bg_model = NULL;

        while ((pFrame = cvQueryFrame(pCapture)) != NULL)
        {
            nFrmNum++;
            if (nFrmNum == 1)
            {
                // First frame: allocate the images and create the model
                pBkImg = cvCreateImage(cvGetSize(pFrame), IPL_DEPTH_8U, 3);
                pFrImg = cvCreateImage(cvGetSize(pFrame), IPL_DEPTH_8U, 1);
                FirstImg = cvCreateImage(cvGetSize(pFrame), IPL_DEPTH_8U, 1);
                pyrImg = cvCreateImage(cvSize(pFrame->width / 2, pFrame->height / 2), IPL_DEPTH_8U, 1);

                CvGaussBGStatModelParams params;
                params.win_size = 2000;       // learning rate = 1/win_size
                params.bg_threshold = 0.7;    // threshold on the sum of weights for the background test
                params.weight_init = 0.05;
                params.variance_init = 30;
                params.minArea = 15.f;
                params.n_gauss = 5;           // K, the number of Gaussians in the mixture
                params.std_threshold = 2.5;

                //cvCopy(pFrame,pFirstFrame,0);
                bg_model = (CvGaussBGModel*)cvCreateGaussianBGModel(pFrame, &params);
            }
            else
            {
                int totalNum = pFrImg->width * pFrImg->height;

                // Smooth the frame, update the model, and fetch its foreground and background
                cvSmooth(pFrame, pFrame, CV_GAUSSIAN, 3, 0, 0, 0);
                cvUpdateBGStatModel(pFrame, (CvBGStatModel*)bg_model, -0.00001);
                cvCopy(bg_model->foreground, pFrImg, 0);
                cvCopy(bg_model->background, pBkImg, 0);

                //cvShowImage("background",pBkImg);
                //cvSmooth(pFrImg,pFrImg,CV_GAUSSIAN,3,0,0,0);
                //cvPyrDown(pFrImg,pyrImg,CV_GAUSSIAN_5x5);
                //cvPyrUp(pyrImg,pFrImg,CV_GAUSSIAN_5x5);
                //cvSmooth(pFrImg,pFrImg,CV_GAUSSIAN,3,0,0,0);

                // Remove small specks from the foreground mask
                cvErode(pFrImg, pFrImg, 0, 1);
                cvDilate(pFrImg, pFrImg, 0, 3);

                //pBkImg->origin = 1;
                //pFrImg->origin = 1;
                cvShowImage("video", pFrame);
                cvShowImage("foreground", pFrImg);

                //cvReleaseBGStatModel((CvBGStatModel**)&bg_model);
                //bg_model = (CvGaussBGModel*)cvCreateGaussianBGModel(pFrame,0);

                /*
                //catch target frame
                if(nFrmNum>10 &&(double)cvSumImage(pFrImg)>0.3 * totalNum)
                {
                    first = cvSumImage(FirstImg);
                    next = cvSumImage(pFrImg);
                    printf("Next number is :%d \n",next);
                    cvCopy(pFrImg,FirstImg,0);
                }
                cvShowImage("foreground",pFrImg);
                cvCopy(pFrImg,FirstImg,0);
                */

                // Exit on Esc
                if (cvWaitKey(2) == 27)
                {
                    break;
                }
            }
        }

        if (bg_model)
        {
            cvReleaseBGStatModel((CvBGStatModel**)&bg_model);
        }
        cvDestroyAllWindows();
        cvReleaseImage(&pFrImg);
        cvReleaseImage(&FirstImg);
        cvReleaseImage(&pyrImg);
        cvReleaseImage(&pBkImg);
        // pFrame is owned by the capture and must not be released here
        cvReleaseCapture(&pCapture);
        return 0;
    }
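The rule "slow changes are background, fast changes are foreground" can be illustrated for a single pixel. The sketch below is a deliberately simplified stand-in that keeps only one Gaussian per pixel; it is not the mixture that `cvCreateGaussianBGModel` maintains, which tracks several weighted Gaussians so that a persistent change is eventually absorbed into the background. `PixelModel`, `updateAndClassify`, and the variance floor `kMinVar` are hypothetical; `alpha` plays the role of 1/win_size and `k` the role of std_threshold.

```cpp
#include <cassert>

// Hypothetical single-Gaussian background model for one gray pixel.
struct PixelModel {
    double mean;
    double var;
};

// Classify a new sample and update the model.
// Returns true when the sample is foreground, i.e. more than k standard
// deviations away from the mean. Matching samples pull the mean and the
// variance toward themselves at rate alpha; foreground samples leave the
// model unchanged in this simplified version.
bool updateAndClassify(PixelModel& m, double x, double alpha, double k) {
    assert(alpha > 0.0 && alpha <= 1.0 && k > 0.0 && m.var > 0.0);
    const double kMinVar = 4.0;  // assumed floor so the variance never collapses
    const double d = x - m.mean;
    const bool foreground = d * d > k * k * m.var;
    if (!foreground) {
        m.mean += alpha * d;
        m.var += alpha * (d * d - m.var);
        if (m.var < kMinVar) m.var = kMinVar;
    }
    return foreground;
}
```

With a mean of 100 and a variance of 30, a sample of 101 is classified as background and nudges the model, while a sudden jump to 200 is classified as foreground. A value that drifts by one gray level per frame keeps matching the model and stays background.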

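3. Background removal by background subtraction

Background subtraction takes the first frame captured by the camera as the background and subtracts it from every subsequent frame; what remains after the subtraction is the newly appeared object (the object to be detected). The subtraction also alters the gray levels of the object itself, however, so a threshold is normally applied to the difference to decide which pixels belong to it. The code follows: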

    #include "stdafx.h"
    #include "cv.h"
    #include "highgui.h"
    #include <cstdio>

    int _tmain(int argc, _TCHAR* argv[])
    {
        int thresh_low = 30;

        IplImage* pImgFrame = NULL;
        IplImage* pImgProcessed = NULL;
        IplImage* pImgBackground = NULL;
        IplImage* pyrImage = NULL;
        CvMat* pMatFrame = NULL;
        CvMat* pMatProcessed = NULL;
        CvMat* pMatBackground = NULL;
        CvCapture* pCapture = NULL;

        cvNamedWindow("video", 0);
        cvNamedWindow("background", 0);
        cvNamedWindow("processed", 0);

        // Trackbar that controls the binarization threshold
        cvCreateTrackbar("Low", "processed", &thresh_low, 255, NULL);

        cvResizeWindow("video", 400, 400);
        cvResizeWindow("background", 400, 400);
        cvResizeWindow("processed", 400, 400);
        cvMoveWindow("video", 0, 0);
        cvMoveWindow("background", 400, 0);
        cvMoveWindow("processed", 800, 0);

        // Index 1 selects the second camera; use 0 for the default one
        if (!(pCapture = cvCaptureFromCAM(1)))
        {
            fprintf(stderr, "Can not open camera.\n");
            return -2;
        }

        // The first frame becomes the initial background
        pImgFrame = cvQueryFrame(pCapture);
        if (!pImgFrame)
        {
            fprintf(stderr, "Can not read the first frame.\n");
            cvReleaseCapture(&pCapture);
            return -2;
        }

        pImgBackground = cvCreateImage(cvSize(pImgFrame->width, pImgFrame->height), IPL_DEPTH_8U, 1);
        pImgProcessed = cvCreateImage(cvSize(pImgFrame->width, pImgFrame->height), IPL_DEPTH_8U, 1);
        pyrImage = cvCreateImage(cvSize(pImgFrame->width / 2, pImgFrame->height / 2), IPL_DEPTH_8U, 1);
        pMatBackground = cvCreateMat(pImgFrame->height, pImgFrame->width, CV_32FC1);
        pMatProcessed = cvCreateMat(pImgFrame->height, pImgFrame->width, CV_32FC1);
        pMatFrame = cvCreateMat(pImgFrame->height, pImgFrame->width, CV_32FC1);

        cvSmooth(pImgFrame, pImgFrame, CV_GAUSSIAN, 3, 0, 0);
        cvCvtColor(pImgFrame, pImgBackground, CV_BGR2GRAY);
        cvCvtColor(pImgFrame, pImgProcessed, CV_BGR2GRAY);
        cvConvert(pImgProcessed, pMatFrame);
        cvConvert(pImgProcessed, pMatProcessed);
        cvConvert(pImgProcessed, pMatBackground);
        cvSmooth(pMatBackground, pMatBackground, CV_GAUSSIAN, 3, 0, 0);

        while ((pImgFrame = cvQueryFrame(pCapture)) != NULL)
        {
            cvShowImage("video", pImgFrame);

            // Smooth, convert to gray, and convert to float
            cvSmooth(pImgFrame, pImgFrame, CV_GAUSSIAN, 3, 0, 0);
            cvCvtColor(pImgFrame, pImgProcessed, CV_BGR2GRAY);
            cvConvert(pImgProcessed, pMatFrame);
            cvSmooth(pMatFrame, pMatFrame, CV_GAUSSIAN, 3, 0, 0);

            // Absolute difference between the current frame and the background
            cvAbsDiff(pMatFrame, pMatBackground, pMatProcessed);
            //cvConvert(pMatProcessed,pImgProcessed);
            //cvThresholdBidirection(pImgProcessed,thresh_low);

            // Binarize with the threshold selected on the trackbar
            cvThreshold(pMatProcessed, pImgProcessed, thresh_low, 255.0, CV_THRESH_BINARY);

            cvPyrDown(pImgProcessed, pyrImage, CV_GAUSSIAN_5x5);
            cvPyrUp(pyrImage, pImgProcessed, CV_GAUSSIAN_5x5);

            // Erode and dilate
            cvErode(pImgProcessed, pImgProcessed, 0, 1);
            cvDilate(pImgProcessed, pImgProcessed, 0, 1);

            // Update the background slowly with a running average
            cvRunningAvg(pMatFrame, pMatBackground, 0.0003, 0);
            cvConvert(pMatBackground, pImgBackground);

            cvShowImage("background", pImgBackground);
            cvShowImage("processed", pImgProcessed);
            //cvZero(pImgProcessed);

            // Exit on Esc
            if (cvWaitKey(10) == 27)
            {
                break;
            }
        }

        cvDestroyWindow("video");
        cvDestroyWindow("background");
        cvDestroyWindow("processed");
        cvReleaseImage(&pImgProcessed);
        cvReleaseImage(&pImgBackground);
        cvReleaseImage(&pyrImage);
        cvReleaseMat(&pMatFrame);
        cvReleaseMat(&pMatProcessed);
        cvReleaseMat(&pMatBackground);
        cvReleaseCapture(&pCapture);
        return 0;
    }
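The core of the loop above is three per-pixel steps: take the absolute difference from the background, threshold it, and blend the frame into the background with a running average, where the new background equals (1 - alpha) * background + alpha * frame. The sketch below reproduces these steps on plain float buffers without OpenCV. `subtractBackground` is a hypothetical name, and the values in the usage note are made up for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One step of background subtraction on gray-level buffers of equal size.
// Returns a mask that is 255 where |frame - background| > thresh, else 0,
// and then updates the background in place with a running average.
std::vector<unsigned char> subtractBackground(std::vector<float>& background,
                                              const std::vector<float>& frame,
                                              float thresh, float alpha) {
    assert(background.size() == frame.size());
    assert(alpha >= 0.0f && alpha <= 1.0f);
    std::vector<unsigned char> mask(frame.size());
    for (std::size_t i = 0; i < frame.size(); ++i) {
        const float diff = std::fabs(frame[i] - background[i]);
        mask[i] = (diff > thresh) ? 255 : 0;
        background[i] = (1.0f - alpha) * background[i] + alpha * frame[i];
    }
    return mask;
}
```

With a background of {10, 10, 10, 10}, a frame of {10, 50, 10, 12}, a threshold of 30 and alpha = 0.5, only the second pixel is marked as foreground, and the background becomes {10, 30, 10, 11}. The program above uses a much smaller alpha (0.0003), so the background adapts slowly and moving objects are not absorbed into it right away.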
