ETLs: Talend Open Studio vs Pentaho Data Integration (Kettle). A Comparison.

2011-09-27 18:35


In this latest entry of the series on ETL processes, let's try to make as complete a comparison as possible of Talend Open Studio and Pentaho Data Integration (Kettle), the tools we have been using in recent months. To keep this study as comprehensive and rigorous as possible, we will divide the task into five sections:

Using Talend Open Studio in ETL Process Design

Property table.

Examples of Use

Strengths / weaknesses table.

Resource Links (comparative and additional information.)

Final opinion.




Property table.

| Product | TALEND OPEN STUDIO ver. 4.0 | PENTAHO DATA INTEGRATION CE (KETTLE) ver. 3.2 |
|---|---|---|
| Manufacturer | Talend – France | Pentaho – United States |
| Web | www.talend.com | www.pentaho.com |
| License | GNU Lesser General Public License Version 2.1 (LGPLv2.1) | GNU Lesser General Public License Version 2.1 (LGPLv2.1) |
| Development Language | Java | Java |
| Release Year | 2006 | 2000 |
| GUI | Graphical tool based on Eclipse | Design tool (Spoon) based on SWT |
| Runtime Environment | From the design tool, or from the command line as Java or Perl code (independent of the tool) | From the design tool, or from the command line with the Pan and Kitchen utilities |
| Features | With the design tool you build Jobs, using the set of available components. It works with the concept of a project, which is a container for the different Jobs together with their metadata and contexts. Talend is a code generator, so Jobs are translated into the chosen language (Java or Perl, selected when you create a new project), compiled and executed. Components are bound to each other with different types of connections. One type passes information (Row or Iterate, depending on how the data is moved). You can also connect components with trigger connections (Run If, If Component Ok, If Component Error), which let us define the execution sequence and control how it ends. Jobs are exported at operating system level and can run independently of the design tool on any platform that can execute the selected language. In addition, all the generated code is visible and modifiable (although changes to the Jobs themselves have to be made in the tool). | With the Spoon design tool you build transformations (the lowest design level) using steps. At a higher level are the Jobs, which let you run transformations and other components and orchestrate processes. PDI is not a code generator but a transformation engine, where the data and its transformations are kept separate. Transformations and Jobs are stored in XML format, which specifies the actions to take when processing the data. Transformations use steps, which are linked to each other by hops that determine the flow of data between the different components. Jobs have another set of steps, which can perform different actions (or run transformations). In this case the hops determine the execution order or conditional execution. |
| Components | Talend has a large number of components. The approach is to have a separate component for each action, and for access to databases or other systems there are different components depending on the database engine we are going to work with. For example, there is an input table component for each vendor (Oracle, MySQL, Informix, Ingres), and an SCD management component for each RDBMS. You can see the list of available components here. | A smaller set of components, but very much oriented towards data integration. For similar actions (e.g. reading database tables) there is a single step (not one per vendor), and its behavior depends on the database defined by the connection. You can see the available elements for transformations here and for jobs here. |
| Platform | Windows, Unix and Linux. | Windows, Unix and Linux. |
| Repository | Works with the workspace concept, at file system level. This is where all the components of a project are stored (all Jobs, metadata definitions, custom code and contexts). The repository is updated with the dependencies of changed objects (changes are propagated to the whole project). If we change a table definition in the repository, for example, it is updated in all the Jobs where it is used. | Jobs and transformations are stored in XML format. We can choose to store them at file system level or in a database repository (for teamwork). Dependencies are not updated if you change a transformation that is called from another one. They are updated, however, at the level of components within a single transformation or job. |
| Metadata | Full metadata that includes database connections and their objects (tables, views, queries). Metadata is stored centrally in the workspace and does not need to be read again from the source or target system, which streamlines the process. In addition, we can define metadata for file structures (delimited, positional, Excel, XML, etc.), which can then be reused in any component. | Metadata is limited to database connections, which can be shared by different transformations and jobs. Database information (catalog tables/fields) and file specifications (structure) are stored in the steps and cannot be reused. This information is read at design time. |
| Contexts | A set of variables that are configured in the project and can later be used in the Jobs to control their behavior (for example, to define production and development environments). | Variables defined in the tool's parameter file (kettle.properties). Parameters and arguments can also be passed to the process (similar to contexts), in both jobs and transformations. |
| Versions | Allows complete version management of objects (previous versions can be recovered). | Functionality provided in version 4.0. |
| Languages for defining custom components (scripting) | Talend lets us introduce custom code using Java and Groovy. Additionally, custom SQL and Shell. | JavaScript is used for calculations and formulas. Additionally, custom SQL, Java, Shell and OpenOffice formulas. |
| Additional tools | Talend offers additional tools for Data Profiling and Master Data Management (MDM). Open Studio also has a simple modeling tool for drawing logical processes and models. | PDI 4.0 offers Agile functionality for dimensional modeling and for publishing models to Pentaho BI. |
| Plugins | Download new components through Talend Exchange. | Additional plugins available on the web. |
| Support | A complete online community with Talend's wiki, the Talend Forum and a bug tracker for managing incidents and bugs. | Includes the Pentaho forum, Issue Tracking and the Pentaho Community. |
| Documentation | Complete documentation in PDF format that includes: Installation, User Manual and Documentation of components. | Online documentation on the web. Books: Pentaho 3.2 Data Integration: Beginner's Guide (M. C. Roldan), Pentaho Kettle Solutions (M. Casters, R. Bouman, J. van Dongen). |
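To picture what the Contexts row describes, here is a minimal sketch. It is written in Python purely for illustration and is not how either tool implements the feature. It assumes a properties-style file of `KEY=VALUE` lines (as in kettle.properties) and placeholders written as `${NAME}`; the variable names, host names and schema names are hypothetical.

```python
import re


def parse_properties(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def substitute(template, variables):
    """Replace each ${NAME} with its value; unknown names are left untouched."""
    def repl(match):
        return variables.get(match.group(1), match.group(0))
    return re.sub(r"\$\{([^}]+)\}", repl, template)


# Hypothetical variable sets, one per environment (illustration only).
DEV = parse_properties("""
# development environment
DW_HOST = localhost
DW_SCHEMA = dw_dev
""")
PROD = parse_properties("""
# production environment
DW_HOST = dw.example.com
DW_SCHEMA = dw
""")

# The same process definition, resolved differently per environment.
QUERY = "SELECT * FROM ${DW_SCHEMA}.dim_product"

print(substitute(QUERY, DEV))
print(substitute(QUERY, PROD))
```

The point of the design is that the process definition stays the same while only the set of variables changes between development and production.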

Examples of Use (in Spanish)

| EXAMPLE | TALEND OPEN STUDIO | PENTAHO DATA INTEGRATION (KETTLE) |

| Charging time dimension of a DW | ETL process to load the time dimension. Example use of the ETL Talend. | Time Dimension ETL with PDI. |

| Implementation of dynamic sql statements | More examples of Talend.Execution of SQL statements constructed in execution time. | Passing parameters and dynamic operations in a transformation of PDI. |

| Loading a product dimension DW | Product Dimension ETL load.More examples of Talend.Using logs, metrics and statistics. | Product Dimension ETL with PDI (I).Extraccion to Stage Area. ETL Product Dimension with PDI (II). Loading to DW. |

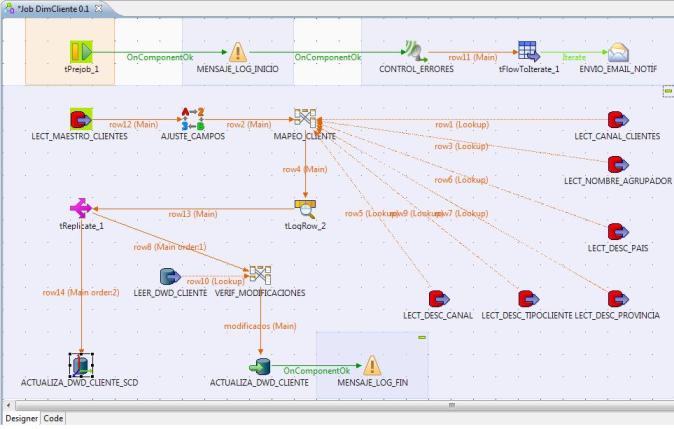

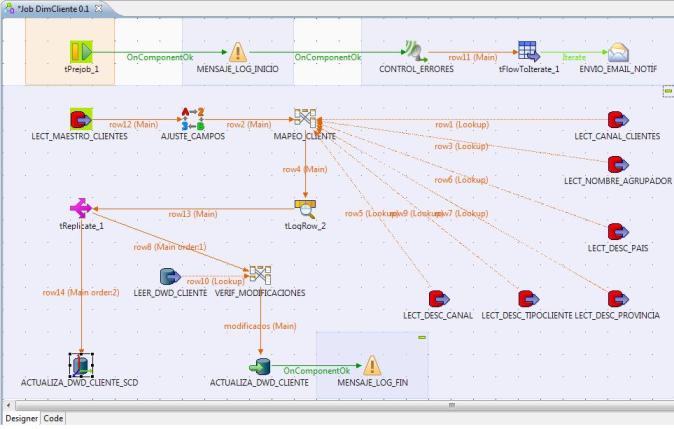

| Charging a customer dimension DW | Talend ETL Mapping Types. Customer Dimension ETL. | Customer Dimension ETL with PDI. |

| Treatment of slowly changing dimensions | Management of SCD (slowly changing dimensions.) | Treatment Slowly Changing Dimensions (SCD) with PDI. |

| Connecting to ERP Sap | Connecting to Sap with Talend | Connecting to Sap with Kettle (plugin ProERPConn) |

| Charging sales fact tables in a DW | Sales Facts table. Talend contexts. | Loading ETL sales made with PDI. ETL load PDI budgeting facts. |

| Exporting Jobs and planning processes | Export Talend.Planification jobs in ETL processes. | |

| Treatment of public data | Data Model and Process Load DW London’s public data. | |

| Understanding the user interface | Building ETL processes using Kettle (Pentaho Data Integration) | |

| Graduation Project (comparative Pentaho / JasperETL). Developed by Rodrigo Almeida, Mariano Heredia. | Thesis which details a Business Intelligence project using the tools of Pentaho and Jasper. In the ETL’s, compared Kettle with JasperETL (based on Talend). | Download the book on the web:http://sites.google.com/site/magm33332/bifloss.It includes a magnificent detail of the features of each tool. |

As further examples, you can also consult:

Tutorial Talend Open Studio 4 by Victor Javier Madrid on the website adictosaltrabajo.com. It includes another example, processing an EDI file with Talend.

White Paper on Open Source ETL tools, written in French by Atol. It includes a very complete series of practical examples.

Using Pentaho Data Integration (Kettle) in ETL Process Design

Table of strengths / weaknesses.

From my point of view, based on my own experience with the two tools and on the information gathered about them and about other users' experience, I can highlight the following aspects:

| TALEND OPEN STUDIO | PENTAHO DATA INTEGRATION (KETTLE) |
|---|---|
| It is a code generator, which implies a heavy dependence on the language chosen for the project (Java in my case). By choosing Java, we get all the advantages and disadvantages of that language. You need a high level of proficiency in the language to get the most out of the application. | It is a transformation engine, and it is clear from the outset that it was designed by people with great experience in data integration who had to meet their own needs in this field. Datatypes are also easier to manage in PDI, since it is not as strict as Java. |
| An unintuitive tool that is difficult to understand at first, but once you overcome this initial difficulty you see the great potential and power of the application. | A very intuitive tool: with a few basic concepts you can make it work. Conceptually very simple and powerful. |
| Unified user interface across all components. It is based on Eclipse, so prior knowledge of that tool helps us use the interface. | The interface design can be a bit poor and there is no unified interface for all components, which is sometimes confusing. |
| Talend is investing significant resources in its development (through capital injections from various funds), which is producing very rapid development of the tool. The product has great potential and is also being supplemented with other tools for MDM and Data Profiling. | Much slower and more uncertain evolution of the tool, because Pentaho tends to move away from its Open Source focus. |
| Greater availability of components for connecting to multiple systems and data sources, and constantly evolving. | Limited availability of components, but more than enough for most ETL or data integration processes. |
| There is no database repository (only in paid versions), but working with the Workspace and the project concept gives us many possibilities. The dependency analysis and the update when elements are modified (which is propagated to all the Jobs of a project) are very useful. | The database repository gives us many possibilities for teamwork. The repository stores the XML containing the actions that Transformations and Jobs perform on the data. |
| A separate component for each database vendor. | A single component per type of database action (the characteristics of the connection used determine its behavior). |
| Help shortcut in the application. Comprehensive online help for the components. When we write our own Java code, we have the language's context assistance provided by Eclipse. | Poor help, almost nonexistent within the application. The online help on the Pentaho website is not particularly complete, and in some parts is very sparse, so the only way to find out how a component works is to test it. |
| Logs: can be configured at project level or in each Job, indicating whether we want to override the project configuration. Logs can be sent to a database, the console or a file. The functionality is very well developed, distinguishing between statistics logs, metrics logs and process logs (to handle errors). | Logs: different log levels (from the most basic to row-level detail of the data flow). Sufficient to analyze the execution of transformations and jobs. Logs can be recorded in a database, but in a very limited way. Log configuration is set at the level of transformations and Jobs. |
| Debug: with the Debug perspective in Eclipse we can trace the execution (seeing the source code) as if we were programming in Eclipse. You can also display statistics, data traces or response times during the execution of jobs using the graphical tool. | Debug: includes a simple, very basic debug tool. |
| Versioning of objects: in the Workspace we can manage complete versioning of the Jobs (with minor and major numbers). This enables us to recover earlier versions if problems occur. There is a tool for changing the version of many objects at once (which can be very useful for versioning distributions). | Object versioning is scheduled to be included in version 4.0. |
| Parallelism: very limited in the Open version. Advanced functionality in the paid versions (Integration Suite). | Parallelism: very easy to set up, using the Distribute Data option in the configuration of the information exchange between steps, but care must be taken to avoid inconsistent results, depending on the type of process. |
| Automatic generation of HTML documentation for Jobs. It includes graphical views of the designs, property tables, and any additional documentation or explanatory text we have entered in the components, etc. You can see an example here. | Unable to generate documentation of transformations and Jobs. Using the graphical tool, we can include notes with comments on the process design. With the kettle-cookbook project you can generate HTML documentation. |
| Simple tool for drawing models. With it we can conceptually sketch our Job designs and processes. | |
| Continuous release of new versions, incorporating improvements and bug fixes. | New versions are not released very often, and we need to build up-to-date versions ourselves from the latest available sources: see the blog entry of Fabin Schladitz. |
| Talend Exchange: a place where the community develops its own components and shares them with other users. | Pentaho also has enthusiasts who develop plugins and release them on its website, although with less activity than Talend. |
| As a negative point, it is sometimes excessively slow, caused by the use of the Java language. | As a downside, some components do not behave as expected when performing complex transformations or when chaining calls between different transformations in Jobs. These problems can be overcome by changing the design of the transformations. |
| It is an advantage to have a local repository where we store information about databases, tables, views, structures and files (text, Excel, XML). Because it is in the repository it can be reused, and the associated components do not need to re-read the metadata from the data source every time (in the case of databases, for example). It has a query assistant (SQL Builder) with many powerful features. | When working with databases with very large catalogs, it is inconvenient to have to retrieve the whole catalog just to build, for example, a SQL statement that reads from a table (when we use the catalog browsing option). |
| Code reuse: we can include our own libraries, which are visible in all the Jobs of a project. This gives us a way to design our own components. | JavaScript code written in step components cannot be reused in other components. Adding new functionality or modifying existing functionality is fairly limited. |
| Process flow control: on the one hand we have the data flow (row, iterate or lookup) and on the other the triggers for execution control and process orchestration. The combination of row and iterate is useful for orchestrating loops for repetitive processing. There is a major inconvenience that can complicate processes: you cannot merge several data streams that come from the same origin (they must have different starting points). | Process flow control: information is passed between components (steps) with hops, in a single way, and the resulting flow varies with the type of control used. This approach has limitations in controlling iterative processes. An interesting feature is the encapsulation of transformations through mappings, which lets us define transformations for repetitive processes (similar to a function in a programming language). |
| Error handling: when errors occur we can manage the log, but we lose control. We cannot reprocess rows. | Error handling: error handling in the steps lets us interact with the errors and fix them without ending the process (not always possible, only in some steps). |
| Execution: either from the tool (which is sometimes very slow, especially if you include statistics and execution traces) or from the command line, for which it is necessary to export the Jobs. The export generates all the objects (jar libraries) needed to run the job, including a .bat or .sh file to execute it. This lets us run the job on any platform where Java or Perl can run, without needing to install Talend. | Execution: either from the tool (with pretty good response times) or from the command line with Pan (for transformations) and Kitchen (for jobs). They are two very simple and functional utilities that let us execute the XML specifications of jobs or transformations (either from a file or from the repository). PDI must always be installed in order to run the process. There is also the Carte tool, a simple web server that lets you execute transformations and jobs remotely. |
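The caution in the Parallelism row can be illustrated with a small sketch. It is plain Python, not PDI code, and it models a much simplified version of the idea: in "distribute" mode each row goes to only one copy of the next step (round-robin here), while in "copy" mode every copy receives every row. The function names and the sample data are hypothetical.

```python
def distribute(rows, copies):
    """Round-robin: each row goes to exactly one of the `copies` targets."""
    targets = [[] for _ in range(copies)]
    for i, row in enumerate(rows):
        targets[i % copies].append(row)
    return targets


def copy_to_all(rows, copies):
    """Copy mode: every target receives every row."""
    return [list(rows) for _ in range(copies)]


def running_total(rows):
    """A step whose result depends on seeing all rows, in order."""
    total, out = 0, []
    for value in rows:
        total += value
        out.append(total)
    return out


rows = [10, 20, 30, 40, 50]
parts = distribute(rows, 2)

print(parts)                              # rows split across two copies
print([running_total(p) for p in parts])  # each copy only sees its share
print(running_total(rows))                # result of a single, serial step
```

A row-by-row calculation gives the same result however the rows are split, but a step like the running total above does not, which is why distributing data needs care depending on the type of process.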

Comparatives and additional information.

Summary (final opinion).

From my point of view, both tools are complementary. Each has its own focus, but both allow the same data transformation and integration tasks. Talend as a product has more of a future, since the company is putting many resources into its development and it is being supplemented with other tools to create a true data integration suite. It is also used in the Jaspersoft project, and the fact that it is more open and can be complemented with Java gives it certain advantages over Pentaho.

On the other hand, Pentaho Data Integration is very intuitive and easy to use. You can see from the moment you start using it that, as I mentioned, it has been developed through the prism of the problems of ETL processes and data transformation. In some respects it is faster and more agile than Talend, since it does not have to keep generating Java code all the time. What we miss is the management of a truly integrated project repository, as in Talend, and independent metadata for source/target systems.
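The difference between a transformation engine and a code generator can be pictured with a toy example. The sketch below is Python, not PDI, and the XML is a hypothetical, heavily simplified definition of steps and hops (it is not the real file format that PDI stores). The "engine" reads the definition at run time and executes it, so changing the definition changes the behavior with no code generation or compilation in between. It only handles a single linear chain of steps.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified definition of three steps linked by two hops.
DEFINITION = """
<transformation>
  <step name="read" type="constant_rows"/>
  <step name="upper" type="uppercase"/>
  <step name="keep" type="filter_prefix" prefix="A"/>
  <hop from="read" to="upper"/>
  <hop from="upper" to="keep"/>
</transformation>
"""


def constant_rows(rows, attrs):
    """Source step: ignores its input and emits fixed sample rows."""
    return ["alpha", "beta", "avocado"]


def uppercase(rows, attrs):
    return [r.upper() for r in rows]


def filter_prefix(rows, attrs):
    return [r for r in rows if r.startswith(attrs["prefix"])]


# The engine's fixed catalog of step types (illustrative names).
STEP_TYPES = {
    "constant_rows": constant_rows,
    "uppercase": uppercase,
    "filter_prefix": filter_prefix,
}


def run(definition):
    """Interpret the definition: follow the hops from the first step onward."""
    root = ET.fromstring(definition)
    steps = {s.get("name"): s for s in root.findall("step")}
    next_step = {h.get("from"): h.get("to") for h in root.findall("hop")}
    targets = set(next_step.values())
    # The starting step is the one that no hop points to.
    current = next(name for name in steps if name not in targets)
    rows = []
    while current is not None:
        step = steps[current]
        rows = STEP_TYPES[step.get("type")](rows, step.attrib)
        current = next_step.get(current)
    return rows


print(run(DEFINITION))
```

A code generator would instead turn the same definition into source code in the project language, which then has to be compiled before it runs.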

In terms of performance, having reviewed the various comparisons and benchmarks, I do not see a clear winner. Each tool is faster at some things: Talend at calculating aggregations or Lookups, while Pentaho is faster, for example, at handling SCD or at parallelizing processes. In my own ETL processes there have been no large differences in performance, although I found Pentaho slightly more agile when running bulk processes.

Taking all of this into account (and everything detailed above), I lean slightly towards Talend. However, before choosing a tool for a project, I would carry out a thorough study of the type of work and the cases we will face in the design of our processes. There may be specific factors that recommend one tool or the other (such as the need to connect to a particular application, or the platform on which the process will run). What is clear is that either of them would serve for building the processes of a DW in a real environment (as I have shown on this blog with the whole series of published examples).

If you have worked with either of the tools, or both, you may have something to add to this comparison. I look forward to your opinions.

Updated 18/06/10

I leave the link to the latest Gartner study on data integration tools, published in November 2009: Magic Quadrant for Data Integration Tools.

In this latest study Talend was included as an emerging provider of data integration tools. If you are considering working with Talend, the report says interesting things (both Strengths and Cautions) about the evolution of the product and its future:

Strong Points:

Two levels: a free, entry-level Open Source tool (Talend Open Studio) and a higher-level paid tool with more features and support (Talend Integration Suite).

Talend is obtaining almost unanimously positive results among businesses. Although the initial factor may be its price, its features and functionality are the second factor in its success.

Good connectivity in general. It is complemented by Data Profiling and Data Quality tools. Moving from the Open versions to the paid versions requires no extra learning curve.

Cautions:

There is a shortage of experts in the tool, although the company is developing a network of alliances with other companies and building its commercial network (although it is not present in all regions).

There are some problems with the central repository (which is not in the Open version) when coordinating development work. It looks like they are trying to solve them in the new versions.

Some customers have reported that the documentation contains errors, as well as some problems in metadata management. It is also necessary to have an expert in Java or Perl to take full advantage of the tool (as indicated in the comparison with Pentaho).

I recommend reading the report: it contains a lot of information about data integration tools, particularly if you are looking for information on a specific product (such as Talend, the subject of this comparison).
