边学边记(五) lucene索引结构二(segments_N)

2010-06-02 14:36

375 查看

lucene中索引文件的存储用段segments来描述的,通过segments.gen来维护gen信息

和segments.gen一样头信息是一个表示此索引中段的格式化版本 即format值 根据format值判断此段信息中的信息存储格式和存储内容

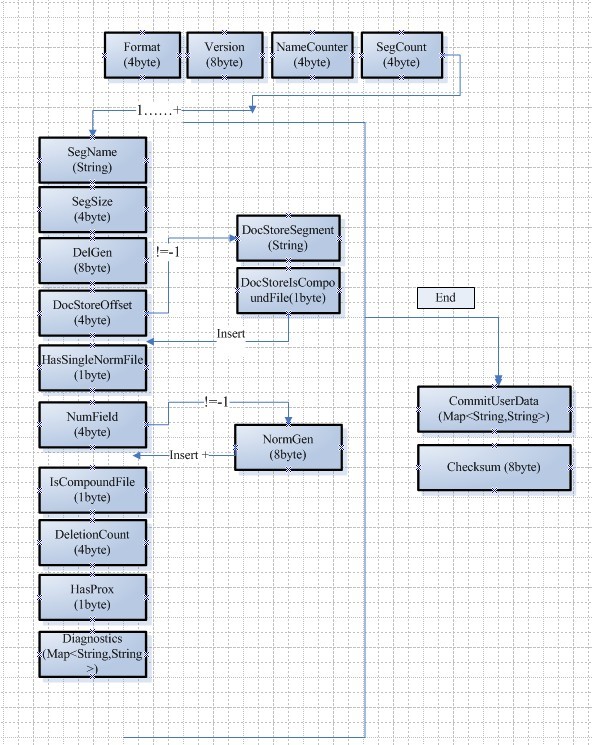

一个segment_N文件中保存的信息和信息的类型 如下图所示

信息存储的格式是这样的

Format, Version, NameCounter, SegCount, <SegName, SegSize, DelGen,

DocStoreOffset, [DocStoreSegment, DocStoreIsCompoundFile],

HasSingleNormFile, NumField,

NormGenNumField

,

IsCompoundFile, DeletionCount, HasProx,

Diagnostics>SegCount

, CommitUserData, Checksum

Format 是一个int32位的整数一般为-9 SegmentInfos.FORMAT_DIAGNOSTICS

Version 值保存操作此索引的时间的long型时间 System.currentTimeMillis()

NameCounter 用来生成新的segment的文件名

SegCount 记录索引中有多少个段 有多少个段后面红色标记的属性就会重复多少次

每个段的信息描述:

SegName,

SegSize, DelGen,

DocStoreOffset, [DocStoreSegment, DocStoreIsCompoundFile],

HasSingleNormFile, NumField,

NormGenNumField

,

IsCompoundFile, DeletionCount, HasProx,

Diagnostics

SegName -- 和字段有关的索引文件的前缀 上篇博文中生成的索引中 存储的应该是"_0"

SegSize -- 记录的此段中 document的个数

DelGen -- 记录了删除操作过后产生的*.del文件的数量 如果为-1 则没有此文件 如果为0 就在索引中查找_X.del文件 如果 >0就查找_X_N.del文件

DocStoreOffset -- 记录了当前段中的document的存储的位置偏移量 如果为-1 说明 此段使用独立的文件来存储doc,只有在偏移量部位-1的时候

[DocStoreSegment,

DocStoreIsCompoundFile] 信息才会存储

DocStoreSegment -- 存储和此段共享store的segment的name

DocStoreIsCompoundFile --用来标识存储是否是混合文件存储 如果值为1则说明使用了混合模式来存储索引 那么操作的时候就回去找.cfx文件

HasSingleNormFile -- 如果为1 表明 域(field)的信息存储来后缀为.fnm文件里 如果不是则存在.fN文件中

NumField -- field的个数 即

NormGen数组的大小

这个数组中具体存了域的gen信息 long类型 前提是每个field都分开存储的

DeletionCount,

HasProx,

Diagnostics

DeletionCount -- 记录在此段中被删除的doc的数量

HasProx -- 此段中是否已一些域(field) 省略了term的频率和位置 如果值为1 则不存在这样的field

Diagnostics -- 诊断表 key - value 比如当前索引的version,系统的版本,java的版本等

CommitUserData 记录用户自己的一些属性信息 在使用commit preparecommit 等操作的时候产生

Checksum 记录了当前Segments_N文件中到此信息之前的byte的数量 这个信息用来在打开索引的时候做一个核对 如果用户非法自己修改过此文件 那么这个文件记录的checksum就和文件的大小产生了冲突

这些信息都存储在SegmentInfos bean中 单个segmeng的信息存储在bean SegmentInfo 中 用上篇博客中写好的索引读取类

检测segments_n中存储的信息 这里仅检测前几个数据 其他的数据都由SegmentInfos 自己构造 读取函数都是从IndexInput类中继承

编写CheckSegmentsInfo 类

运行结果如下:

seg format:-9

seg Version:1275404730705

info Version:1275404730705

info Counter:1

info Seg Count:1

****************** segment [0]

segment name:_0

the doc count in segment:2

del doc count in segment:0

segment doc store offset:0

segment's DocStoreSegment:_0

segment's DocStoreIsCompoundFile:false

segment IsCompoundFile :false

segment's delcount:0

segment's is hasprox:true

Diagnostic key:os.version Diagnostic value:5.1

Diagnostic key:os Diagnostic value:Windows XP

Diagnostic key:lucene.version Diagnostic value:3.0.0 883080 - 2009-11-22 15:43:58

Diagnostic key:source Diagnostic value:flush

Diagnostic key:os.arch Diagnostic value:x86

Diagnostic key:java.version Diagnostic value:1.6.0

Diagnostic key:java.vendor Diagnostic value:Sun Microsystems Inc.

根据提到的个个byte的值表示的信息可以检测文件中都存的什么值,下面着重分信息lucene对string类型的存储和读取

luncene 写字符串的代码如下:

lucene写入字符串的时候使用的是UTF-8 编码格式,java本身的字符串是用unicode表示的 也就是UTF-16 1-2个字节表示 这里说下UTF-16是unicode的一种实现。

UTF-8编码的优点这里就不说了 和UTF-16不一样 UTF-8是用1-3个字节来存储的

转换为UTF-8后将所得的byte数组的长度作为前缀存为一个VInt类型整数后面跟字符串,例如:字符串:’“我” 在lucene中的存储为:

3 -26 -120 -111

3表示字符串"我"的utf8 的byte的长度 后面接的三位就是字符串的字节码

这样也是为了方便读取。具体代码可以看IndexInput类 。很简单

至于map<String,String>也就是一个循环

mapsize,<String,String>N

lucene读取segment_n文件的代码:

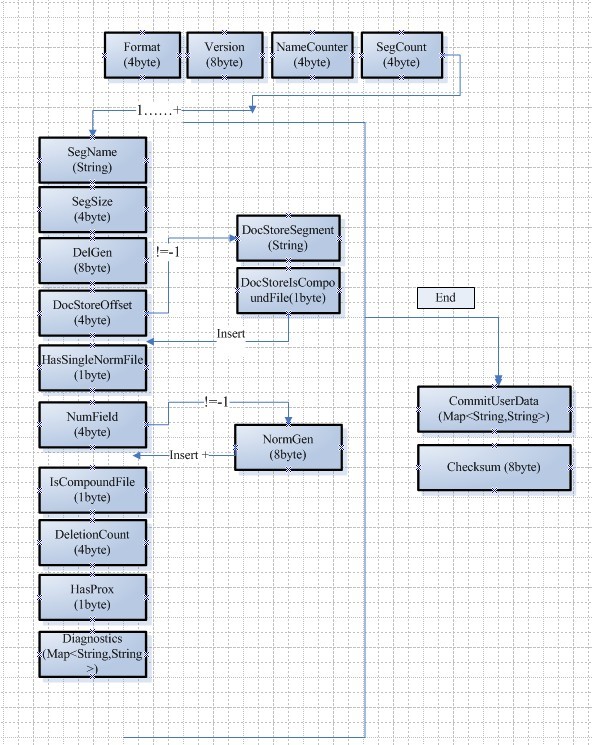

和segments.gen一样头信息是一个表示此索引中段的格式化版本 即format值 根据format值判断此段信息中的信息存储格式和存储内容

一个segment_N文件中保存的信息和信息的类型 如下图所示

信息存储的格式是这样的

Format, Version, NameCounter, SegCount, <SegName, SegSize, DelGen,

DocStoreOffset, [DocStoreSegment, DocStoreIsCompoundFile],

HasSingleNormFile, NumField,

NormGenNumField

,

IsCompoundFile, DeletionCount, HasProx,

Diagnostics>SegCount

, CommitUserData, Checksum

Format 是一个int32位的整数一般为-9 SegmentInfos.FORMAT_DIAGNOSTICS

Version 值保存操作此索引的时间的long型时间 System.currentTimeMillis()

NameCounter 用来生成新的segment的文件名

SegCount 记录索引中有多少个段 有多少个段后面红色标记的属性就会重复多少次

每个段的信息描述:

SegName,

SegSize, DelGen,

DocStoreOffset, [DocStoreSegment, DocStoreIsCompoundFile],

HasSingleNormFile, NumField,

NormGenNumField

,

IsCompoundFile, DeletionCount, HasProx,

Diagnostics

SegName -- 和字段有关的索引文件的前缀 上篇博文中生成的索引中 存储的应该是"_0"

SegSize -- 记录的此段中 document的个数

DelGen -- 记录了删除操作过后产生的*.del文件的数量 如果为-1 则没有此文件 如果为0 就在索引中查找_X.del文件 如果 >0就查找_X_N.del文件

DocStoreOffset -- 记录了当前段中的document的存储的位置偏移量 如果为-1 说明 此段使用独立的文件来存储doc,只有在偏移量部位-1的时候

[DocStoreSegment,

DocStoreIsCompoundFile] 信息才会存储

DocStoreSegment -- 存储和此段共享store的segment的name

DocStoreIsCompoundFile --用来标识存储是否是混合文件存储 如果值为1则说明使用了混合模式来存储索引 那么操作的时候就回去找.cfx文件

HasSingleNormFile -- 如果为1 表明 域(field)的信息存储来后缀为.fnm文件里 如果不是则存在.fN文件中

NumField -- field的个数 即

NormGen数组的大小

这个数组中具体存了域的gen信息 long类型 前提是每个field都分开存储的

DeletionCount,

HasProx,

Diagnostics

DeletionCount -- 记录在此段中被删除的doc的数量

HasProx -- 此段中是否已一些域(field) 省略了term的频率和位置 如果值为1 则不存在这样的field

Diagnostics -- 诊断表 key - value 比如当前索引的version,系统的版本,java的版本等

CommitUserData 记录用户自己的一些属性信息 在使用commit preparecommit 等操作的时候产生

Checksum 记录了当前Segments_N文件中到此信息之前的byte的数量 这个信息用来在打开索引的时候做一个核对 如果用户非法自己修改过此文件 那么这个文件记录的checksum就和文件的大小产生了冲突

这些信息都存储在SegmentInfos bean中 单个segmeng的信息存储在bean SegmentInfo 中 用上篇博客中写好的索引读取类

检测segments_n中存储的信息 这里仅检测前几个数据 其他的数据都由SegmentInfos 自己构造 读取函数都是从IndexInput类中继承

编写CheckSegmentsInfo 类

/****************

*

*Create Class:CheckSegmentsInfo.java

*Author:a276202460

*Create at:2010-6-2

*/

package com.rich.lucene.io;

import java.io.File;

import java.util.Iterator;

import java.util.Map;

import org.apache.lucene.index.SegmentInfo;

import org.apache.lucene.index.SegmentInfos;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class CheckSegmentsInfo {

/**

* @param args

* @throws Exception

*/

public static void main(String[] args) throws Exception {

String indexdir = "D:/lucenetest/indexs/txtindex/index4";

IndexFileInput input = null;

try {

input = new IndexFileInput(indexdir + "/segments_2");

System.out.println("seg format:" + input.readInt());

System.currentTimeMillis();

System.out.println("seg Version:" + input.readLong());

} finally {

if (input != null) {

input.close();

}

}

Directory directory = FSDirectory.open(new File(indexdir));

SegmentInfos infos = new SegmentInfos();

infos.read(directory, "segments_2");

System.out.println("info Version:" + infos.getVersion());

System.out.println("info Counter:" + infos.counter);

System.out.println("info Seg Count:" + infos.size());

for (int i = 0; i < infos.size(); i++) {

SegmentInfo info = infos.get(i);

System.out.println("****************** segment [" + i + "]");

System.out.println("segment name:" + info.name);

System.out.println("the doc count in segment:" + info.docCount);

System.out

.println("del doc count in segment:" + info.getDelCount());

System.out.println("segment doc store offset:"

+ info.getDocStoreOffset());

if (info.getDocStoreOffset() != -1) {

System.out.println("segment's DocStoreSegment:"

+ info.getDocStoreSegment());

System.out.println("segment's DocStoreIsCompoundFile:"

+ info.getDocStoreIsCompoundFile());

}

System.out.println("segment IsCompoundFile :"

+ info.getDocStoreIsCompoundFile());

System.out.println("segment's delcount:" + info.getDelCount());

System.out.println("segment's is hasprox:" + info.getHasProx());

Map infodiag = info.getDiagnostics();

Iterator keyit = infodiag.keySet().iterator();

while (keyit.hasNext()) {

String key = keyit.next().toString();

System.out.println("Diagnostic key:" + key

+ " Diagnostic value:" + infodiag.get(key));

}

Map userdatas = infos.getUserData();

Iterator datait = userdatas.keySet().iterator();

while(datait.hasNext()){

String key = datait.next().toString();

System.out.println("user data key:"+key+" value:"+userdatas.get(key));

}

}

}

}运行结果如下:

seg format:-9

seg Version:1275404730705

info Version:1275404730705

info Counter:1

info Seg Count:1

****************** segment [0]

segment name:_0

the doc count in segment:2

del doc count in segment:0

segment doc store offset:0

segment's DocStoreSegment:_0

segment's DocStoreIsCompoundFile:false

segment IsCompoundFile :false

segment's delcount:0

segment's is hasprox:true

Diagnostic key:os.version Diagnostic value:5.1

Diagnostic key:os Diagnostic value:Windows XP

Diagnostic key:lucene.version Diagnostic value:3.0.0 883080 - 2009-11-22 15:43:58

Diagnostic key:source Diagnostic value:flush

Diagnostic key:os.arch Diagnostic value:x86

Diagnostic key:java.version Diagnostic value:1.6.0

Diagnostic key:java.vendor Diagnostic value:Sun Microsystems Inc.

根据提到的个个byte的值表示的信息可以检测文件中都存的什么值,下面着重分信息lucene对string类型的存储和读取

luncene 写字符串的代码如下:

/** Writes a string.

* @see IndexInput#readString()

*/

public void writeString(String s) throws IOException {

UnicodeUtil.UTF16toUTF8(s, 0, s.length(), utf8Result);

writeVInt(utf8Result.length);

writeBytes(utf8Result.result, 0, utf8Result.length);

}/** Encode characters from this String, starting at offset

* for length characters. Returns the number of bytes

* written to bytesOut. */

public static void UTF16toUTF8(final String s, final int offset, final int length, UTF8Result result) {

final int end = offset + length;

byte[] out = result.result;

int upto = 0;

for(int i=offset;i<end;i++) {

final int code = (int) s.charAt(i);

if (upto+4 > out.length) {

byte[] newOut = new byte[2*out.length];

assert newOut.length >= upto+4;

System.arraycopy(out, 0, newOut, 0, upto);

result.result = out = newOut;

}

if (code < 0x80)

out[upto++] = (byte) code;

else if (code < 0x800) {

out[upto++] = (byte) (0xC0 | (code >> 6));

out[upto++] = (byte)(0x80 | (code & 0x3F));

} else if (code < 0xD800 || code > 0xDFFF) {

out[upto++] = (byte)(0xE0 | (code >> 12));

out[upto++] = (byte)(0x80 | ((code >> 6) & 0x3F));

out[upto++] = (byte)(0x80 | (code & 0x3F));

} else {

// surrogate pair

// confirm valid high surrogate

if (code < 0xDC00 && (i < end-1)) {

int utf32 = (int) s.charAt(i+1);

// confirm valid low surrogate and write pair

if (utf32 >= 0xDC00 && utf32 <= 0xDFFF) {

utf32 = ((code - 0xD7C0) << 10) + (utf32 & 0x3FF);

i++;

out[upto++] = (byte)(0xF0 | (utf32 >> 18));

out[upto++] = (byte)(0x80 | ((utf32 >> 12) & 0x3F));

out[upto++] = (byte)(0x80 | ((utf32 >> 6) & 0x3F));

out[upto++] = (byte)(0x80 | (utf32 & 0x3F));

continue;

}

}

// replace unpaired surrogate or out-of-order low surrogate

// with substitution character

out[upto++] = (byte) 0xEF;

out[upto++] = (byte) 0xBF;

out[upto++] = (byte) 0xBD;

}

}

//assert matches(s, offset, length, out, upto);

result.length = upto;

}lucene写入字符串的时候使用的是UTF-8 编码格式,java本身的字符串是用unicode表示的 也就是UTF-16 1-2个字节表示 这里说下UTF-16是unicode的一种实现。

UTF-8编码的优点这里就不说了 和UTF-16不一样 UTF-8是用1-3个字节来存储的

转换为UTF-8后将所得的byte数组的长度作为前缀存为一个VInt类型整数后面跟字符串,例如:字符串:’“我” 在lucene中的存储为:

3 -26 -120 -111

3表示字符串"我"的utf8 的byte的长度 后面接的三位就是字符串的字节码

这样也是为了方便读取。具体代码可以看IndexInput类 。很简单

至于map<String,String>也就是一个循环

mapsize,<String,String>N

lucene读取segment_n文件的代码:

/**

* Read a particular segmentFileName. Note that this may

* throw an IOException if a commit is in process.

*

* @param directory -- directory containing the segments file

* @param segmentFileName -- segment file to load

* @throws CorruptIndexException if the index is corrupt

* @throws IOException if there is a low-level IO error

*/

public final void read(Directory directory, String segmentFileName) throws CorruptIndexException, IOException {

boolean success = false;

// Clear any previous segments:

clear();

ChecksumIndexInput input = new ChecksumIndexInput(directory.openInput(segmentFileName));

generation = generationFromSegmentsFileName(segmentFileName);

lastGeneration = generation;

try {

int format = input.readInt();

if(format < 0){ // file contains explicit format info

// check that it is a format we can understand

if (format < CURRENT_FORMAT)

throw new CorruptIndexException("Unknown format version: " + format);

version = input.readLong(); // read version

counter = input.readInt(); // read counter

}

else{ // file is in old format without explicit format info

counter = format;

}

for (int i = input.readInt(); i > 0; i--) { // read segmentInfos

add(new SegmentInfo(directory, format, input));

}

if(format >= 0){ // in old format the version number may be at the end of the file

if (input.getFilePointer() >= input.length())

version = System.currentTimeMillis(); // old file format without version number

else

version = input.readLong(); // read version

}

if (format <= FORMAT_USER_DATA) {

if (format <= FORMAT_DIAGNOSTICS) {

userData = input.readStringStringMap();

} else if (0 != input.readByte()) {

userData = Collections.singletonMap("userData", input.readString());

} else {

userData = Collections.<String,String>emptyMap();

}

} else {

userData = Collections.<String,String>emptyMap();

}

if (format <= FORMAT_CHECKSUM) {

final long checksumNow = input.getChecksum();

final long checksumThen = input.readLong();

if (checksumNow != checksumThen)

throw new CorruptIndexException("checksum mismatch in segments file");

}

success = true;

}

finally {

input.close();

if (!success) {

// Clear any segment infos we had loaded so we

// have a clean slate on retry:

clear();

}

}

}/**

* Construct a new SegmentInfo instance by reading a

* previously saved SegmentInfo from input.

*

* @param dir directory to load from

* @param format format of the segments info file

* @param input input handle to read segment info from

*/

SegmentInfo(Directory dir, int format, IndexInput input) throws IOException {

this.dir = dir;

name = input.readString();

docCount = input.readInt();

if (format <= SegmentInfos.FORMAT_LOCKLESS) {

delGen = input.readLong();

if (format <= SegmentInfos.FORMAT_SHARED_DOC_STORE) {

docStoreOffset = input.readInt();

if (docStoreOffset != -1) {

docStoreSegment = input.readString();

docStoreIsCompoundFile = (1 == input.readByte());

} else {

docStoreSegment = name;

docStoreIsCompoundFile = false;

}

} else {

docStoreOffset = -1;

docStoreSegment = name;

docStoreIsCompoundFile = false;

}

if (format <= SegmentInfos.FORMAT_SINGLE_NORM_FILE) {

hasSingleNormFile = (1 == input.readByte());

} else {

hasSingleNormFile = false;

}

int numNormGen = input.readInt();

if (numNormGen == NO) {

normGen = null;

} else {

normGen = new long[numNormGen];

for(int j=0;j<numNormGen;j++) {

normGen[j] = input.readLong();

}

}

isCompoundFile = input.readByte();

preLockless = (isCompoundFile == CHECK_DIR);

if (format <= SegmentInfos.FORMAT_DEL_COUNT) {

delCount = input.readInt();

assert delCount <= docCount;

} else

delCount = -1;

if (format <= SegmentInfos.FORMAT_HAS_PROX)

hasProx = input.readByte() == 1;

else

hasProx = true;

if (format <= SegmentInfos.FORMAT_DIAGNOSTICS) {

diagnostics = input.readStringStringMap();

} else {

diagnostics = Collections.<String,String>emptyMap();

}

} else {

delGen = CHECK_DIR;

normGen = null;

isCompoundFile = CHECK_DIR;

preLockless = true;

hasSingleNormFile = false;

docStoreOffset = -1;

docStoreIsCompoundFile = false;

docStoreSegment = null;

delCount = -1;

hasProx = true;

diagnostics = Collections.<String,String>emptyMap();

}

}

相关文章推荐

- 边学边记(六) lucene索引结构三(_N.fnm)

- 边学边记(八) lucene索引结构五(_N.tis,_N.tii)

- Lucene索引文件结构图之一(segments&fnm&fdx&fdt)

- 边学边记(九) lucene索引结构五(_N.frq,_N.prx)

- 边学边记(七) lucene索引结构四(_N.fdx,_N.fdt)

- lucene建索引时的一个"Can't rename segments.new to segments"异常的原因

- Lucene 源代码剖析-4 索引文件结构(1)

- lucene的索引结构图

- lucene索引结构(三)-词项向量(TermVector)索引文件结构分析

- lucene 索引出错 no segments* file found in org.apache.lucene.store.MMapDirectory

- lucene索引结构(五)--词频倒排索引(frq)文件结构分析

- lucene索引结构(三)-词项向量(TermVector)索引文件结构分析

- lucene索引结构(一)--segment元数据信息

- Lucene的索引链结构_IndexChain

- (转) lucene索引结构改进-支持单机十亿级别的索引的检索

- Lucene索引的详细结构

- lucene索引结构(三)-词项向量(TermVector)索引文件结构分析

- lucene索引结构(二)--域(Field)信息索引

- lucene索引结构(六)--词位置(.prx)倒排索引文件结构分析

- Lucene索引文件结构图之一(prx&nrm&tvx&tvd&del&tvf)